If you were around when the IBM PC rolled out, two things probably caught you by surprise. One was that the company that made the Selectric put that ridiculous keyboard on it. The other was that it had an 8-bit CPU onboard. It was actually even stranger than that: the PC sported an 8088, which was a 16-bit 8086 stripped down to an 8-bit external bus. You have to wonder what caused that, and [Steven Leibson] has a great post that explains what went down all those years ago.

Before the IBM PC, nearly all personal computers were 8-bit and had 16-bit address buses. Although 64K may have seemed enough for anyone, many realized that was going to be a brick wall fairly soon. So the answer was larger address buses and addressing modes.
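For a concrete sense of that wall: a 16-bit address reaches 2^16 = 65,536 bytes (64 KB). The 8086 family's answer was real-mode segmentation. A 16-bit segment value is shifted left four bits and added to a 16-bit offset, giving a 20-bit (1 MB) physical address. The Python function here is a hypothetical model of that translation, not anything from the post.

```python
def physical_address(segment: int, offset: int) -> int:
    """8086 real-mode translation: (segment * 16) + offset, truncated to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must both be 16-bit values")
    # Shifting the segment left by 4 bits multiplies it by 16.
    # With only 20 address lines, anything past 1 MB wraps around to 0.
    return ((segment << 4) + offset) & 0xFFFFF

print(2 ** 16, 2 ** 20)                        # 65536 1048576
print(hex(physical_address(0xB800, 0x0000)))  # 0xb8000
print(hex(physical_address(0xFFFF, 0x0010)))  # 0x0 (wrapped past 1 MB)
```

Many different segment:offset pairs map to the same physical byte, which is the price of stretching 16-bit registers over a 20-bit bus.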

Intel knew this and was working on the flagship iAPX 432. It was going to be a radical departure from the 8080-series CPUs: a design built from the start for high-level languages like Ada. However, the radical design took longer than expected. The project started in 1976 but wouldn’t see the light of day until 1981. Intel clearly needed something sooner, so the 8086, a 16-bit processor derived from the 8080, was born.

There were other choices, too. The Motorola 68000 was a great design, but it was expensive and not widely available when IBM was selecting a processor. TI had the TMS9900 in production, but they had bet on CPU throughput being the key to success and stuck with the same old 16-bit address bus. That processor also had a novel way of storing its registers in main memory, which was great if your CPU was slow, but as CPU speeds outpaced memory speeds, it became a losing design decision.
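To illustrate the register-in-memory idea, here is a toy Python model. It assumes, as on the TMS9900, sixteen big-endian 16-bit registers R0 to R15 stored in RAM starting at a workspace pointer (WP), so register n lives at WP + 2n. The class name and the addresses used are hypothetical. A context switch only has to change WP, which is cheap, but every register access becomes a memory access.

```python
class Workspace9900:
    """Toy model: registers R0..R15 live in RAM at WP + 2*n, not on the chip."""

    def __init__(self, memory_size: int = 0x10000) -> None:
        self.memory = bytearray(memory_size)
        self.wp = 0  # workspace pointer: the on-chip state that locates the registers

    def read_reg(self, n: int) -> int:
        addr = self.wp + 2 * n
        # Big-endian: the high byte sits at the lower (even) address.
        return (self.memory[addr] << 8) | self.memory[addr + 1]

    def write_reg(self, n: int, value: int) -> None:
        addr = self.wp + 2 * n
        self.memory[addr] = (value >> 8) & 0xFF
        self.memory[addr + 1] = value & 0xFF

cpu = Workspace9900()
cpu.wp = 0x8300          # hypothetical workspace address
cpu.write_reg(1, 0x1234)
cpu.wp = 0x8320          # "context switch": just move the pointer
cpu.write_reg(1, 0xBEEF)
cpu.wp = 0x8300          # switch back; the old R1 is still in RAM
print(hex(cpu.read_reg(1)))  # 0x1234
```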

That still leaves the question: why the 8088 instead of the 8086? Price. IBM had a goal to pay under $5 for a CPU, and Intel couldn’t meet that price with the 8086. Apparently, it wasn’t a technical problem but a contractual one. Narrowing the chip to an 8-bit external bus produced a new part, free of the contractual obligations that plagued the 8086. That was the important point: the manufacturing cost wasn’t that different, but setting the price was all about paperwork.

There is a lot more to read. At the bottom of the post, you’ll find links to the source oral history transcripts from the Computer History Museum. Fascinating stuff. If you lived through it, you probably didn’t know all these details; if you didn’t, it gives a good flavor of how many choices there were in those days and how many design trades you had to make to get a product into the marketplace.

We borrowed the title graphic from [Steven’s] post and he, in turn, borrowed it from the well-known [Ken Shirriff].

The 8088 is 16-bit, same as the 8086. Having an 8-bit data bus doesn’t change that. The 68008 was the same.

The story I always heard, and I remember when it came along, was that memory was an issue. 8 bits required 8 RAM chips, plus one for error checking. At the time of the original IBM PC, there wasn’t 4-bit-wide dynamic RAM, so 16 bits required 16 RAM chips. Maybe OK from the factory, but for expansion you needed all those empty IC sockets, and the user had to add chips in 16-IC increments.

8 bits made it easier.
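The arithmetic behind this argument is simple enough to sketch, assuming 1-bit-wide DRAM parts and one parity bit per byte as on the IBM PC. The function name is illustrative only; the counts match the 9-chip and 16/18-chip figures given in this thread.

```python
def chips_per_bank(data_bus_bits: int, parity: bool = True) -> int:
    """Count 1-bit-wide DRAM chips (x1 parts, assumed) needed for one memory bank."""
    data_chips = data_bus_bits                          # one x1 chip per data bit
    parity_chips = data_bus_bits // 8 if parity else 0  # one parity bit per byte
    return data_chips + parity_chips

for width in (8, 16):
    print(f"{width}-bit bus: {chips_per_bank(width, parity=False)} chips, "
          f"{chips_per_bank(width)} with parity")
# 8-bit bus: 8 chips, 9 with parity
# 16-bit bus: 16 chips, 18 with parity
```

Since a bank is the smallest unit you can populate, the 16-bit design doubles the minimum number of chips in every upgrade step.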

In that case, the 68000 is 32-bit, so you’re contradicting yourself.

Similar to the 8088, the 68000 has its data accessed in half-words, so it only needs a 16-bit-wide data bus. They also sold an 8-bit bus version (the 68008), but IDK if that was available at the time. That was used in the Sinclair QL IIRC, and doing 4 bus accesses to get one 32-bit word was apparently not great.

Never mind, I see you were responding to a different part of the comment, but sadly there isn’t an edit or delete button on this website.

Both the 68000 and 68008 are 32-bit, because the architecture is 32-bit even though they had 16-bit and 8-bit data busses respectively. The data bus width doesn’t define the bit-ness of a processor.

I think they’re specifically talking about data bus widths and how they don’t change the architecture. It seems like this whole thread is in violent agreement.

Same game with the 386SX: 16-bit links ARE cheaper.

Yeah it’s a 32-bit *architecture*.

Implemented internally as a 16-bit ALU.

With an external 24-bit address bus.

An external 16-bit data bus (for the 68000).

An external 8-bit data bus (for the 68008).

Different people see the processor differently. The 8088 is an 8-bit processor to a hardware engineer, and a 16-bit processor to a chip designer or programmer. The 68000 is a 16-bit chip from the hardware designer perspective, 32-bit to the programmer, and a hybrid to the chip designer: 32-bit registers but only 16-bit ALUs (three of them).

“In that case, the 68000 is 32-bit”

Correct

I don’t care. The 8088 was the same as the 8086 except for the external bus. The 68008 was the same as the 68000 except for the external bus.

Reading comprehension is important.

The nitpickers aren’t even addressing the post’s premise that the 8088 was somehow different from the 8086.

The 68008 also had a narrower physical address bus; 20 bits (same as the 8086/8) on the DIP48 version and 22 bits on the PLCC52 version, vs 24 bits on the 68000 (I’m not sure what the 4 extra pins were on the PLCC68 and SMT versions, but it’s not extra address lines.)
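Those address-line counts translate directly into address space, since each extra line doubles it. A quick sketch using the widths mentioned in this thread (the 16-line entry is the typical 8-bit micro from the post; labels are just for printing):

```python
def address_space_bytes(address_lines: int) -> int:
    """Distinct byte addresses reachable with the given number of address lines."""
    return 1 << address_lines  # each extra line doubles the space

for label, lines in [("typical 8-bit micro", 16), ("8086/8088, 68008 DIP48", 20),
                     ("68008 PLCC52", 22), ("68000", 24)]:
    print(f"{label}: {lines} lines -> {address_space_bytes(lines) // 1024} KB")
```

That works out to 64 KB, 1 MB, 4 MB and 16 MB respectively.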

The 8088 also made it a little easier to interface to the mostly-8085-generation interface chips they were using but given that a fair number of clones managed to use the 8086 it can’t have been that hard.

I think that having an 8-bit bus made it easier to use peripheral ICs designed for an 8-bit bus, for instance the CRTC or the floppy controller. The Olivetti M24, which had an 8086, had to use bus converter circuitry for the expansion bus and some peripherals, and I think they patented it.

When you’re making peripherals for these things you think of it as an 8-bit. You buy components out of the 8-bit catalogs and not pairs of them for 16-bit or more expensive 16-bit parts. The terrible DMA controller used on the PC/XT and later weirdly adapted to the AT is a great example of leveraging 8-bit components to make a sort of Frankenstein’s monster of a computer architecture.

I thought peripheral ICs factored into the decision for the 8088, but can’t remember details. The 68000 was designed to use 68XX 8-bit peripherals, so that can’t be it.

A lot was written about the IBM PC at the time and it’s all explained, but forty years later, memory fades.

One of the things Adam Osborne emphasises in his coverage of microprocessors (https://archive.org/details/Introduction_to_Microcomputers_Volume_II_1977_Osborne_and_Associates/page/n5/mode/2up) is that while it’s relatively trivial for a 6800-class CPU to use 8080 support chips, it’s somewhat problematic to do things the other way, because the 6800 bus depends on the E signal to synchronise everything. (The 8080 wasn’t the worst; he called the CP1600 “singularly incompatible” with 6800 support chips.)

Is that why the AY-3-8910’s interface seemed so odd? It was made for the CP1600, even though I suspect it got used more with other CPUs?

I had to waste a day figuring that out for myself, derp. Had a few and a Z80, went all round the houses with what ifs, and arrived at the inevitable.

I was there (Intel) during that period. This was exactly the reason IBM went with the 8088: the entry-level machine was cheaper because it only needed 9 memory chips (including the infamous and useless parity bit). Competing designs based on 16-bit data buses needed 16 chips (or 18 with parity). At that time, dynamic RAM chips were a significant cost factor, and IBM was not concerned about the performance hit due to 2 memory bus cycles per data fetch.

The 68008 was kind of a dog because of 4 memory bus cycles for each data item. I’m not sure there were any major design wins for it.
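A rough way to see the cost of a narrow bus is to count bus transfers per operand. This sketch assumes naturally aligned operands, no wait states, and a minimum bus cycle of four clocks (the 8086/8088 figure, applied to all four chips here as a simplifying assumption). The function and constant names are hypothetical.

```python
import math

CLOCKS_PER_BUS_CYCLE = 4  # assumed minimum bus cycle, no wait states

def bus_cycles(operand_bits: int, bus_bits: int) -> int:
    """Bus transfers needed to move one naturally aligned operand."""
    return math.ceil(operand_bits / bus_bits)

def fetch_time_us(operand_bits: int, bus_bits: int, clock_mhz: float) -> float:
    """Time to fetch one operand in microseconds, ignoring queues and wait states."""
    clocks = bus_cycles(operand_bits, bus_bits) * CLOCKS_PER_BUS_CYCLE
    return clocks / clock_mhz

for cpu, operand, bus in [("8086", 16, 16), ("8088", 16, 8), ("68000", 32, 16), ("68008", 32, 8)]:
    print(f"{cpu}: {operand}-bit operand over a {bus}-bit bus = {bus_cycles(operand, bus)} cycle(s)")
print(f"8088 word fetch at 4.77 MHz: about {fetch_time_us(16, 8, 4.77):.2f} us")
```

The 68008 pays four transfers for every 32-bit operand, which is the "dog" behaviour described above; the 8088's prefetch queue hides some, but not all, of its two-transfer penalty.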

I do remember the introduction of the IBM PC as it was reviewed in the UK magazine Personal Computer World, November 1981. The introduction of the 8086 and 8088 is fascinating, but at least one assertion in this article can’t be correct.

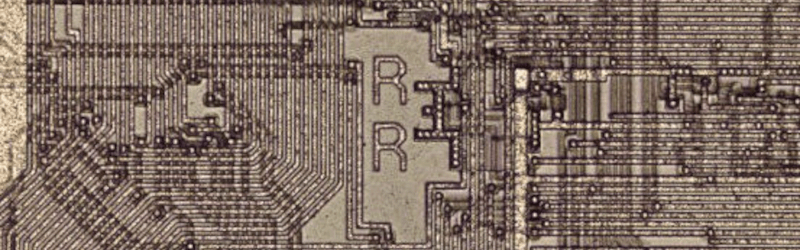

The 8088 and the 8086 have basically the same die. Compare the 8086 on Ken Shirriff’s blog:

http://www.righto.com/2020/06/a-look-at-die-of-8086-processor.html

And the 8088 at IEEE Spectrum:

https://spectrum.ieee.org/chip-hall-of-fame-intel-8088-microprocessor

You can see, once you rotate them, that they must be virtually identical. This makes sense because, in my naive mind, all you need to do to turn an 8086 into an 8088 is to start latching instruction fetches into the 3rd byte of the instruction queue instead of the first (giving a 4-byte queue instead of 6), and to treat all word data fetches as unaligned. (An 8086 had 16-bit datapaths internally, so an unaligned 16-bit access, e.g. a read, must read from the first address and latch that byte into the low byte of its MBR, then increment the address and place the next byte in the high byte of its MBR.)

I’m pretty sure there are almost no other differences. So, the chip isn’t simpler to make and wouldn’t have a smaller die (except for process shrinks).
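The byte-at-a-time behaviour described above can be sketched in Python. This is a simplified model under stated assumptions (flat byte-addressed memory, no prefetch queue, little-endian words as on x86), not a description of the real bus interface unit; the function names are hypothetical.

```python
from typing import Tuple

def read_word_8088(memory: bytes, address: int) -> Tuple[int, int]:
    """Return (word, bus_cycles): always two byte-wide cycles on an 8-bit bus."""
    low = memory[address]         # cycle 1: byte latched into the low half
    high = memory[address + 1]    # cycle 2: incremented address, high half
    return (high << 8) | low, 2   # x86 words are little-endian

def read_word_8086(memory: bytes, address: int) -> Tuple[int, int]:
    """Return (word, bus_cycles): one 16-bit cycle if even-aligned, else two."""
    word = memory[address] | (memory[address + 1] << 8)
    return word, (1 if address % 2 == 0 else 2)

mem = bytes([0x34, 0x12, 0x78, 0x56])
for reader, addr in [(read_word_8088, 0), (read_word_8086, 0), (read_word_8086, 1)]:
    word, cycles = reader(mem, addr)
    print(reader.__name__, addr, hex(word), cycles)
```

In this model the 8088 always behaves like an 8086 doing an odd-address access, which is the commenter's point: the same logic, just used on every word.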

Exactly. The linked article says pretty much the same thing. The 8086 and the 8088 are very nearly the same chip, with similar die size and complexity. They were different, though, with different performance and different part numbers. That difference let Intel sell the 8088 at a price lower than the 8086.

It had nothing to do with the hardware.

Intel had contracts to deliver the 8086 to bunches of customers. If they had sold the 8086 to IBM at a lower price they’d have had angry customers demanding lower prices.

Since Intel sold the 8088 to IBM (and there were no other contracts for it), Intel could set the price for the 8088 wherever they liked without pissing off other customers.

Purely business details.

Here in Europe, the 8086 was used by popular PCs, at least:

The Amstrad/Schneider PC1512 and PC1640.

The 8086 was running at 8 MHz, though some users like my father replaced it with a NEC V30.

For a while, they had a similar level of popularity as the Tandy 1000, I think.

https://en.wikipedia.org/wiki/PC1512

The 8088 die is no smaller than the 8086’s. It uses a smaller package, which cut costs there, and could use 8-bit peripherals, which were in plentiful supply, but there were no cost savings on the silicon itself.

I take part of the above back. Both used multiplexed address and data lines, so even the package was the same size at 40 pins.

Ok, so I read the linked article and the reasons are actually as follows:

Intel could not sell discounted 8086 CPUs to IBM because of contracts they had with other companies over the price of the chip (they would have to give the same discount to these other customers also).

The 8088 was a byproduct of a die shrink project for the 8086 (there was a smaller 8086 AND the 8088 which were about the same size). The 8088 was however a new part not covered by the existing contracts so could be discounted for IBM only.

As a plus point it played better with cheap 8 bit IO hardware that was available off the shelf.

You are talking about the “most favored nations” clause (MFN) that many large semiconductor customers required. A common dodge to this was to produce a variant that had a different part number. Internally at Intel, the 88 was viewed as the same as the 86. All the peripheral chips, development tools, memories were the same. The semiconductor business is extremely volume sensitive so if one customer doubles your volume you bend way over for them.

The 8088 wasn’t a “byproduct”; it was very much a targeted market segment and part of the chip family from a very early point. During the pre-IBM PC era, Intel was starting to see Motorola 68K design wins. The 88 was a big part of their attack (AKA Orange Crush) by undercutting system chip cost. Orange Crush’s goal was to cut off Motorola’s design wins. The entire company was mobilized behind it. The 68K never really got any big design wins because of Intel’s single-minded attack. Even though it was near gospel among programmers of that era that the 68K instruction set was better, most companies chose cost/performance over architectural elegance. In the semi business, design wins are a key measure of future revenues. The IBM design win was very much part of Orange Crush.

What do you mean by “that ridiculous keyboard”?

Are you thinking of the PC Jr.’s original keyboard?

After years of using Selectrics and IBM terminals, we all assumed it would have a nicer keyboard than the one it had. The fact that that keyboard has propagated itself doesn’t mean it was all that great. The Jr keyboard was horrifying, true.

I found the IBM PC keyboard much better than using a reed stylus on a wet slab of clay!

Sheer luxury, we had to walk 20 miles to find a fresh cave.

Isn’t there a macro for this? Just invoke instead of the same old one-upmanship.

Yeah, it’s a sketch called the “Four Yorkshiremen”, performed variously on “At Last the 1948 Show”, followed by Monty Python, and by various permutations of former Pythons and other comic actors.

When I was young, we had things rough.

Nobody knew the tired old jokes

We laughed at them and we liked it!

Nobody criticized old jokes, like some sort of Spanish inquisition.

And quickly thereafter, users replaced their 8088/8086s with proper NEC V20/V30s.

And.. and they lived happily ever after. 😁

Or at least until the AT

The AT and 286 were way too expensive when they were new, until the 386 came out and everyone started selling 286 AT clones built in their garages for $800.

In my recollection, the appearance of the AT did more to enable the use of the V20 et al. (which had only appeared at basically the same time as the AT anyway). There was a lot of bare-metal programming around that ignored DOS and the BIOS calls, or that relied on exact programmed timing loops instead of system timers, resulting in programs that crashed on slightly faster machines or when instructions didn’t take as long as expected. So early V20 adopters locked themselves out of a lot of software, where the attitude of the author or publisher would be “Put the 8088 back in and it works fine.” When their stuff was acting up on the XT and failing to run at all on the AT, they maybe saw the light and fixed their halfassery.

Also the V20 etc had some compatibility with 286 instructions, so enhancements to some programs for 286 also benefitted V20… so the upgrade had additional attractions.

You’re not wrong, I think.

In the beginning, though, “MS-DOS compatibles” existed as well.

Sirius 1/Victor 9000, DEC Rainbow 100, Alphatronic PC with 808x card, BBC Master 512 with 8018x/DOS Plus 1.x, Sanyo MBC555, Zenith Z-100, PC-9801, Tiki 100, …

So in one way or another, the IBM PC/4.77 MHz/8088 configuration wasn’t set in stone originally. There was competition, like in the CP/M days. That’s why MS insisted on being able to sell MS-DOS to other interested parties, I think.

The IBM PC as a de facto standard became a thing in the DOS 3.x days, I guess, when the PC and compatibles became more dominant. That’s when non-IBM-PC OEM versions of DOS slowly declined.

That being said, I’m just a random person in cyberspace. I don’t mean to rewrite history, but this is what I know from reading articles and old magazines. So please double check what I wrote. It’s just a very compact summary, after all.

Best regards,

Compaq was first to reverse engineer the IBM PC BIOS, which was key to the PC taking off.

It took some time, IIRC the first Compaq PC compatible was about an early AT equivalent, but made of old tank armor and ship anchors.

IIRC, there were also some shenanigans related to one of Compaq’s BIOS team later founding one of the clone BIOS companies.

The V20 had some compatibility with the 80186/80188.

And it could run 8080 code.

Right, they first appeared in the 186, but that was barely relevant to PCs and compatibles, think there were one or two machines maybe, but got carried on to the much more common 286, so would be regarded as “new” to the PC realm with the 286 introduction.

I second this. There are two or three CP/M emulators that use that 8080 emulation feature.

Unfortunately, a Z80 is required for popular Turbo Pascal software.

So software emulation is still needed for some programs.

Anyway, there’s nothing preventing a user from using both kinds of emulators on an XT, depending on the CP/M application.

I just wish that 8080 emulation feature had been used more often. Say, to make MP/M 80 run on the IBM PC. That would have turned the PC into a real, powerful workstation for CP/M-80 development.

Yes, the NECs could run most DOS real-mode applications from the 1990s, too, including those that the compilers of the time had built with 80286 instructions without the programmers intending it.

The NECs also made the VGA driver of Windows 3.0 work. There’s an 808x patch on vcfed.org that removes this requirement. But it’s ~20 years too late.

However, one thing the 80286 could do, but not the 808x/8018x or NECs, was exception handling for illegal instructions.

An 80386 emulator, EMU386, uses that feature to handle newer instructions on a 286 PC. Real-Mode instructions only, of course. In practice, the software emulator was essentially serving the same purpose as the NEC V20/V30, to upgrade the system a bit and make it more compatible.
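Trap-and-emulate, the technique this comment attributes to EMU386, can be sketched with a toy interpreter. Everything here is hypothetical: the "instructions" are made-up mnemonics rather than real x86 encodings, and InvalidOpcode merely stands in for the 286's invalid-opcode exception. The point is the control flow: unknown instruction, trap, software handler emulates it, execution continues.

```python
class InvalidOpcode(Exception):
    """Stands in for the 80286 invalid-opcode exception."""

class ToyCPU:
    NATIVE = {"MOV", "ADD"}  # hypothetical: the only mnemonics this "hardware" decodes

    def __init__(self, trap_handler=None):
        self.regs = {"ax": 0, "bx": 0}
        self.trap_handler = trap_handler

    def step(self, instr):
        op, dst, src = instr
        if op not in self.NATIVE:
            if self.trap_handler is None:
                raise InvalidOpcode(op)       # nobody installed an emulator
            self.trap_handler(self, instr)    # software emulates, then we carry on
            return
        value = self.regs[src] if isinstance(src, str) else src
        if op == "MOV":
            self.regs[dst] = value
        else:  # ADD, wrapping at 16 bits
            self.regs[dst] = (self.regs[dst] + value) & 0xFFFF

def emulator(cpu, instr):
    """Hypothetical trap handler that emulates one 'newer' instruction."""
    op, dst, src = instr
    if op == "SHLI":  # made-up mnemonic: shift left by an immediate count
        cpu.regs[dst] = (cpu.regs[dst] << src) & 0xFFFF
    else:
        raise InvalidOpcode(op)

cpu = ToyCPU(trap_handler=emulator)
for instr in [("MOV", "ax", 3), ("SHLI", "ax", 4), ("ADD", "ax", 1)]:
    cpu.step(instr)
print(cpu.regs["ax"])  # 49
```

Without a trap, the CPU has no hook to hand control to software, which is why this approach needs the 286 rather than the older parts.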

We used the 5150 as our first PC and replaced the 8088 directly with the cheap ($8) NEC V20, as it ran at about 170% of the original performance. Because we used bare metal (hex machine code), we didn’t have trouble with timing. (That came later with the 80486, and was only really resolved when the Time Stamp Counter arrived on later processors.)

From 1992 till 2016 we used Borland Pascal 7 in protected mode (32-bit) together with DR-DOS 6 and/or MS-DOS 6.22. After a fix in TP521.lib (changing just 1 byte from 0Ah to 40h), DOS and BP7 programs run nicely even on the latest i9 systems, with no more runtime error 200 (divide by zero). Executables could also grow to over 3.2 MB, with no need for TSR (Terminate and Stay Resident) programs.
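The comment doesn’t say what the one-byte patch changes. The commonly cited cause of runtime error 200 on fast machines (background, not taken from the comment) is the CRT unit’s Delay calibration: it counts busy-loop iterations over one roughly 55 ms timer tick, then divides by 55 with a 16-bit DIV. Once the quotient no longer fits in 16 bits, the CPU raises the divide-error exception, which the runtime reports as error 200. A sketch of that overflow, with hypothetical loop counts:

```python
class DivideError(Exception):
    """Stands in for the x86 divide-error exception (INT 0)."""

def x86_div16(dividend: int, divisor: int) -> int:
    """Model x86 DIV r16: 32-bit DX:AX divided by a 16-bit operand, quotient in AX."""
    if divisor == 0 or dividend // divisor > 0xFFFF:
        # Division by zero and quotient overflow raise the same exception,
        # which Turbo/Borland Pascal reports as runtime error 200.
        raise DivideError("divide error")
    return dividend // divisor

TICK_MS = 55  # assumed: one BIOS timer tick is roughly 55 ms

# Hypothetical busy-loop counts per tick on progressively faster CPUs.
for loops_per_tick in (500_000, 3_000_000, 10_000_000):
    try:
        print(f"{loops_per_tick:>10} loops/tick -> {x86_div16(loops_per_tick, TICK_MS)} loops/ms")
    except DivideError:
        print(f"{loops_per_tick:>10} loops/tick -> runtime error 200")
```

Under these assumptions the calibration fails once a CPU manages more than 65,535 × 55 = 3,604,425 loop iterations per tick.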

When I retired, that was also the fate of the DOS machines; now they are struggling with Win7, 10 and 11 (where making, and running, a driver is a complete crime). Also, PNP (Plug And Pray) when switching boards on a Windows OS makes it impossible for a non-specialist to replace a defective board in the field (with DOS, PNP was really Play And Play).

Oops, typo in the last sentence: Play And Play should be Plug And Play.

“Play and play” = keep playing with the various setting options until it finally decides to work.

>a lot of software

Name one other than Lode Runner. Someone on Vogons managed to find two more obscure text based games.

Don’t forget the Tandy 2000, which was an 80186 MS-DOS compatible that kicked a$$. Real 16-bit bus, better graphics, higher-density floppies, bigger HD, more memory than the PC, but not BIOS compatible, which was its downfall.

Ran Xenix quite nicely too. In 1984.

Since it used the 80186, it would never be compatible. I’d bet the BIOS was fine; the whole point was to isolate the hardware from the software. The separate BIOS meant only it had to be rewritten for different hardware, rather than the whole OS.

The 80186/88 of course added peripherals to the CPU, useful for better integration. But that hard-wired things like DMA to certain addresses, which didn’t match the IBM PC.

So for a brief time, there was a variety of hardware, some actually better than the IBM PC.

The BIOS meant that other neat hardware still worked.

But once applications started bypassing the BIOS to address the hardware directly, for speed, compatibility became an issue, because the BIOS was no longer there to do the translation.

Thus you had to be compatible, and that was the end of any variation.
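To make the bypassing point concrete: a program that skipped the BIOS video call (INT 10h) and wrote straight to the IBM colour text buffer had to hard-code that buffer’s layout. A minimal sketch, assuming 80x25 colour text mode at segment B800h with two bytes per cell (character, then attribute); the function name is hypothetical. On a machine whose video memory lived elsewhere, these addresses would simply be wrong, whereas a BIOS call could be handled by that machine’s own BIOS.

```python
COLS = 80               # assumed: 80x25 colour text mode
VIDEO_SEGMENT = 0xB800  # IBM colour text buffer (the monochrome adapter used 0xB000)

def text_cell_address(row: int, col: int, segment: int = VIDEO_SEGMENT) -> int:
    """Physical address of the character byte for a given screen cell."""
    offset = (row * COLS + col) * 2  # each cell: character byte, then attribute byte
    return (segment << 4) + offset

print(hex(text_cell_address(0, 0)))    # 0xb8000 (top-left)
print(hex(text_cell_address(24, 79)))  # 0xb8f9e (bottom-right)
```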

I have an Intel data book that not only called the 8088 an 8-bit processor, it also compared it against the Z80 and 6809!

I still put strong weight to the “too many DRAM chips for a low-cost product” theory.

Also, I can’t recall when Motorola was ever excited about producing the 68008, particularly when around that time they were trying to push the 68000 for low-volume high-cost Unix style systems. (read DTACK Grounded for some background on that)

As is often the case, I must rely on the comment section to correct and clarify a hastily written article filled with errors, opinion, and speculation.

To be fair, it seems to relay what was said elsewhere, rather than introducing errors and speculation.

$5 for the CPU, which is the most costly component. In the end, the markup seems huge.