There have been many robots and AIs in science fiction over the years, from Astro Boy to Cortana, or even Virgil for fans of the long-forgotten Crash Zone. However, all these pale into insignificance in front of the cold, uncaring persona of the HAL 9000. Thus, [Jürgen Pabel] thought the imposing AI would make the perfect home assistant.

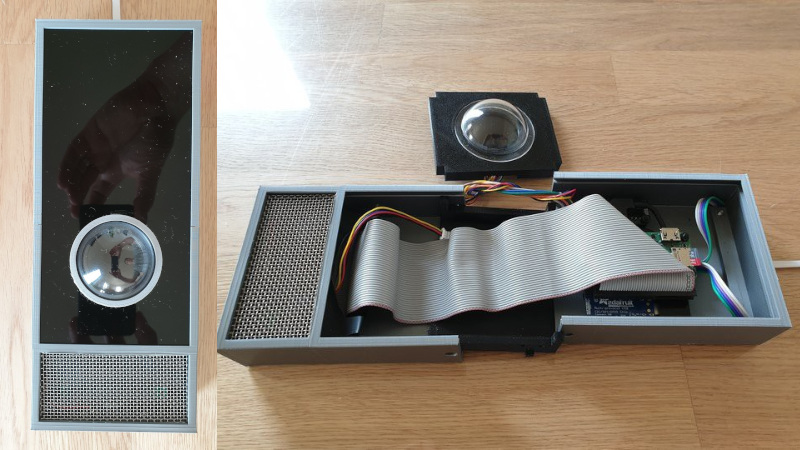

The build is based on a Raspberry Pi Zero 2, which boasts more grunt than the original Pi Zero while still retaining good battery life and a compact form factor. It’s hooked up with a 1.28″ round TFT display which acts as the creepy glowing eye through which HAL is supposed to perceive the world. There’s naturally a speaker on board to deliver HAL’s haunting monotone, and it’s all wrapped up in a tidy case that really looks the part. It runs on the open-source voice assistant Kalliope to help out with tasks around the home.

[Jürgen]’s page shares all the details you need to make your own, from the enclosure construction to the code that laces everything together. It’s not the first HAL 9000 we’ve seen around these parts, either. Video after the break.

Nice!! I like the visuals on the eyeball!

I am also building such a device – nonetheless, instead of a display, I use a High Power LED and as a lens I use a “Smartphone Wideangle” Extension Lens.

Nice project :)

Thanks! My main point for the LED “eye” was to display some other UI also. The 3D print design includes (optionally) an RFID reader, two rotary encoders and small buttons (all exposed via the top panel); one rotary encoder is used to control a small UI that shows up when used and one is a volume control that will also visualize on the LED.

Also, my initial attempts (some years ago) with LEDs and fisheye lenses all didn’t yield the look-n-feel that I was aiming for. The availability of a round waveshare display was a game-changer for this project.

If I ever have a voice activated assistant in my home, it’ll be activated like “Big Computer” for Louise Baltimore in John Varley’s novel “Millennium”.

“Listen up, mother******.”

https://en.wikipedia.org/wiki/Millennium_(novel)

I always wanted to build my own voice-activated speaker that would work with my Home Assistant build, something I could shout “Computer!” at like I was in Dexter’s Laboratory. Unfortunately, the only systems I could think of are Alexa or open-source assistants that require training voice models.

I set up something like this before voice assistants like Alexa were around. My MacBook had basic voice recognition, and you could tell it to run scripts based on various commands. The wake word was also programmable. It was pretty fun pretending to be Captain Picard yelling, “Computer! Increase volume by 15%!”

He says Hey Mycroft?

I also built a Mycroft AI from a Raspberry Pi, and my intention was to name it HAL and have it display the red eye on the 7″ display when not displaying anything else useful, but I gave up on the project when my brand new Pi 4 burned up due to heat issues.

I did get to play with Mycroft a bit, but I didn’t really care for it. It lacked support for Spotify and other useful things, so I got bored with it.

Hi, this is my project: That video is actually a bit older and at that point I was still aiming for mycroft as the software (thus the “hey mycroft” wakeword in the video); however, after discovering kalliope in combination with vosk for STT, I switched to kalliope. Why? Because the presumably biggest drawback of kalliope – the lack of an intent engine – can be used to reduce the vocabulary for STT down to a few hundred words; the effect is a near zero word error rate.

I am currently working on finalizing the software for all that hardware (display & UI but also optional RFID reader, rotary encoders and buttons); it all works at a PoC stage and should be “completed” (whatever that means) sometime this summer. I’ll update videos and documentation as I progress….

PS: spotify support is also on my todo-list

I’ve still got the greatest enthusiasm and confidence for this build!

Thanks – I think I’m really going to finish this project (it all started 4-5 years ago after all, so it’s about time)

The main problem I’m finding with things like Mycroft is that they are still cloud-based; going fully local for an offline solution is a bit more involved. :(

This project is a fully offline solution, at least if you’re not counting online services like Spotify or such. But the voice assistant itself is fully offline:

– wake-word: offline (that’s presumably an expected answer); porcupine or precise or …

– speech-to-text (STT): offline with VOSK and reduced vocabulary (generated from kalliope orders)

– text processing: N/A – kalliope has no intent engine or such

– skill execution: skills are executed offline…but of course may rely on online services – but that’s entirely the user’s choice

– text-to-speech (TTS): offline with pico2wave (I’m still evaluating other options, like the upcoming mycroft mimic3)

All that and the other stuff (optional peripherals like an RFID reader, but also the UI and display driver) hardly keep a Pi Zero 2 busy at all; I’ll probably publish runtime data later on.
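For readers wondering what “reduced vocabulary (generated from kalliope orders)” might look like in practice, here is a minimal Python sketch. It is not taken from the project: the `ORDERS` list, the helper names `build_vocabulary` and `vosk_grammar`, and the handling of `{{ … }}` placeholders are all assumptions made for illustration. The idea is simply to collect every word that appears in any order and hand only that word list to the recognizer.

```python
import json
import re

# Hypothetical example orders; in a real setup these would be read from
# the Kalliope brain files rather than hard-coded.
ORDERS = [
    "turn on the light",
    "turn off the light",
    "play {{ query }} on the radio",
    "what time is it",
]

# Assumption: variable placeholders such as {{ query }} are not literal
# words, so they are dropped before the vocabulary is collected.
PLACEHOLDER = re.compile(r"\{\{.*?\}\}")


def build_vocabulary(orders):
    """Return the sorted set of lowercase words used across all orders."""
    words = set()
    for order in orders:
        text = PLACEHOLDER.sub(" ", order.lower())
        words.update(re.findall(r"[a-z']+", text))
    return sorted(words)


def vosk_grammar(vocabulary):
    """Serialize the vocabulary as a JSON list with "[unk]" as a catch-all."""
    return json.dumps([" ".join(vocabulary), "[unk]"])


vocabulary = build_vocabulary(ORDERS)
grammar = vosk_grammar(vocabulary)
print(len(vocabulary), "words:", grammar)
```

The resulting JSON string is in the form that the Vosk Python bindings accept as the optional third argument to `KaldiRecognizer(model, sample_rate, grammar)`, which restricts recognition to the listed words. Whether the project wires it up exactly this way is not stated in the comment above, and as far as I know the restriction only takes effect with Vosk models that support a runtime vocabulary.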

Hey, you can’t say science fiction AIs without mentioning Zen and his sidekick Orac. Zen would fight your spacebattles for you!