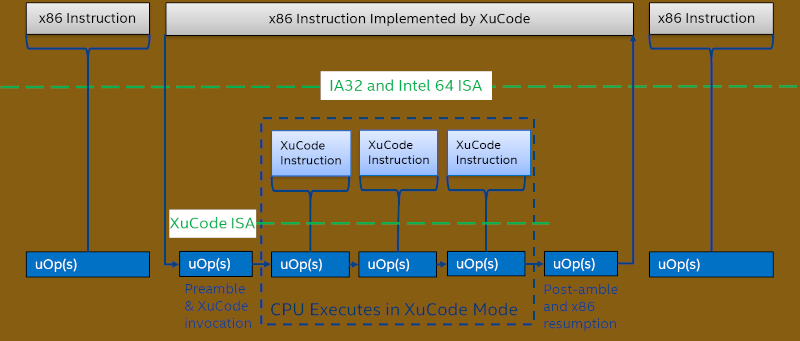

The aptly-named [chip-red-pill] team is offering you a chance to go down the Intel rabbit hole. If you learned how to build CPUs back in the 1970s, you would have learned that your instruction decoder would, for example, note a register-to-register move and then light up one register to write to a common bus and another register to read from the common bus. These days, it isn’t that simple. Not only do compilers target an underlying instruction set, but processors rarely implement those instructions directly in hardware anymore. Instead, each instruction has microcode that causes the right things to happen at the right time. But Intel encrypts their microcode. Of course, what can be encrypted can also be decrypted.
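To make the contrast concrete, here is a minimal toy sketch in Python. Everything in it is invented for illustration: the four registers, the 4-bit `ddss` opcode layout, and the `MICROCODE_ROM` table are assumptions, not a model of any real CPU and certainly not of Intel's microcode format. The first half decodes a move directly into "enable output" and "enable load" actions on a shared bus; the second half gets the same effect by looking up control words in a table.

```python
# Toy model only: register names, opcode layout, and ROM contents are hypothetical.

REGS = {"A": 0x12, "B": 0x00, "C": 0x7F, "D": 0x00}
NAMES = ["A", "B", "C", "D"]


def decode_mov(opcode):
    """Split a 4-bit toy opcode laid out as 'ddss' into (dest, src) names."""
    dest = NAMES[(opcode >> 2) & 0b11]
    src = NAMES[opcode & 0b11]
    return dest, src


def execute_mov(regs, opcode):
    """Hardwired style: the decoder directly selects which registers act."""
    dest, src = decode_mov(opcode)
    bus = regs[src]    # source register drives the common bus
    regs[dest] = bus   # destination register latches whatever is on the bus
    return regs


# Microcoded style: each opcode maps to a list of control words.
# Each control word names the signals asserted during one step.
MICROCODE_ROM = {
    0b0100: [{"out": "A", "load": "B"}],  # MOV B, A as a single control word
}


def run_microcode(regs, opcode):
    """Step through the control words stored for this opcode."""
    for word in MICROCODE_ROM[opcode]:
        bus = regs[word["out"]] if "out" in word else None
        if "load" in word:
            regs[word["load"]] = bus
    return regs
```

Calling `execute_mov(dict(REGS), 0b0100)` and `run_microcode(dict(REGS), 0b0100)` both copy register A into register B. The difference is that the second version's behavior lives in a table that could, in principle, be patched, which is why the contents of that table are interesting.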

Using vulnerabilities, you can activate an undocumented debugging mode called red unlock. This allows a microcode dump, and the decryption keys are inside. The team did a paper for OffensiveCon22 on this technique, and you can see a video about it below.

So far, the keys for Gemini Lake and Apollo Lake processors are available. That covers quite a number of processors. Of course, there are many more processors out there if you want to try your hand at a similar exploit.

This same team has done other exploits, such as executing arbitrary microcode inside an Atom CPU. If you want to play along, you might find this useful. You do know that your CPU has instructions it is keeping from you, don’t you?

This was done before on a Pentium II, yielding some fun results: https://twitter.com/peterbjornx/status/1319133436041482240 https://github.com/peterbjornx/patchtools_pub https://twitter.com/peterbjornx/status/1321653489899081728

There was also a talk on it at https://hardwear.io/netherlands-2020/presentation/under-the-hood-of-a-CPU-hardwear-io-nl-2020.pdf

>processors rarely encode instructions in hardware anymore

RISC processors do, and there is a good reason why RISC is overtaking x86.

Apart from modern RISC being so bloated that it practically qualifies every processor before the mid-90s as RISC in comparison, post mid-90s they’ve all got RISC cores anyway, so it’s probably a very redundant appellation.

OTOH I kinda wanna see some x86 chip get given a microcode interpreter for RISCV or something.

Whenever people make that type of argument I have to pop up and protest. Modern x86 processors absolutely do have CISC cores, with many complications needed to effectively execute CISC code, and modern RISC cores still follow design features that ease pipelining and reduce complications.

Not to mention, “CISC” style processors have always broken instructions down into simpler internal operations. The only difference with modern x86 was the realization that not only could instructions be executed out of order, but so could the micro-operations that make up each instruction.

Also, “RISC” these days is more of a marketing term. Modern high-performance ARM processors often break instructions into micro-ops, just like x86, and high-performance RISC-V implementations will combine instructions into macro-operations, meaning the core has a more “CISC”-like implementation than the instruction set exposes.
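For anyone who hasn't seen macro-op fusion, here is a rough Python sketch of the idea. It is a toy under stated assumptions: the tuple encoding and the `load_imm32` internal op are made up, and the `lui` + `addi` pair is used only because it is a commonly discussed fusion candidate for RISC-V; whether a given core actually fuses it is not something this sketch claims. Sign extension of the 12-bit immediate is ignored to keep it short.

```python
# Toy front end: fuse 'lui rd, hi20' followed by 'addi rd, rd, lo12'
# into one hypothetical internal operation. Not a model of any real core.

def fuse(instrs):
    """Return a new list where eligible adjacent pairs become one internal op."""
    out = []
    i = 0
    while i < len(instrs):
        cur = instrs[i]
        nxt = instrs[i + 1] if i + 1 < len(instrs) else None
        fusable = (
            nxt is not None
            and cur[0] == "lui"
            and nxt[0] == "addi"
            and nxt[1] == cur[1]   # addi writes the same register
            and nxt[2] == cur[1]   # addi reads the same register
        )
        if fusable:
            value = ((cur[2] << 12) + nxt[3]) & 0xFFFFFFFF
            out.append(("load_imm32", cur[1], value))
            i += 2                 # consume both architectural instructions
        else:
            out.append(cur)
            i += 1
    return out


program = [
    ("lui", "a0", 0x12345),
    ("addi", "a0", "a0", 0x678),
    ("add", "a1", "a1", "a0"),
]
fused = fuse(program)
```

Three architectural instructions go in and two internal operations come out, so the core ends up executing something wider than the instruction set exposes.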

Stuff like this makes me ponder how long intelligence agencies (e.g. NSA) have had this kind of access and what they concluded.

Exactly one day. It’s well known that they get most of their security tips from Hackaday articles.

Working for an NSA subcontractor on computer remote eavesdropping equipment, I had an IBM PC apart on the bench behind me 6 months before it was released to the public. 256k ram, cassette tape and ROM BASIC.

My group was trying to keep it from radiating data, but that goes hand in hand with the guys in the secure “tank” figuring out how to listen into the PC before anyone could even buy one.

(That is a subject in itself, since the PC was so noisy that the FCC had to change the rules on radiated noise just to allow it to exist.)

I take it you’re talking about TEMPEST?

I was working at IBM in the 80s when TEMPESTed PCs were still big sellers (to approved customers of course). It always fascinated me because even internally it was hard to get a real definition of what it was, never mind how it worked.

It wasn’t until years later digging through the Snowden dumps that I really realized the extent of those programs operationally and technically.

Now we’re seeing Joe black hat turning SATA cables into data exfil antennas, meaning air-gapped isn’t good enough. You need EMI/RF gapped too.

Crazy, but fascinating stuff.

Complexity breeds bugs. Unless we start moving in the opposite direction, bugs will multiply and problems will get even worse over time.

They have to multiply, because Intel bugs can’t divide.

The modern microprocessor is the most complex and intricate system on the planet, and has been so for over two decades. I’ve done many processor designs and the mantra has always been (with a nod to A. Einstein), “Things should be as simple as possible, but not simpler”. The untold story of how such extreme complexity has developed and the thin expertise that supports it is fascinating. By any measure “RISC” and “CISC” are the same implementation, and some implementations (nVidia with their Denver microarchitecture) don’t care any more what the actual instruction set is. The x86 advantage is and always has been extreme compatibility, which is why it’s the most used general purpose processor ever. Even ARM has to maintain legacy, which over time complicates every implementation as well. “RISC” has always been on the verge of overtaking “CISC” ever since Dave Patterson popularized the term, but the reality is far from simple and there will continue to be architecture wars, each side of which proclaims their design is inherently better. But when you have to deal with 16 execution units, 6 instruction decoders, multiported caches, massive reordering, and disaggregation driven by process technology lags, the only difference ends up being who can run the right code the fastest with the lowest power. So far Intel and AMD have won this battle and it has little to do with what instruction set is running. Microcode or the equivalent is used in every implementation that cares about any degree of performance. Embedded processors are simpler not because of the instruction set but because their performance/power requirements don’t require the additional complexity.

By the way, the advent of multiple processors on one die has always been seen by designers as the end game, since it means that single core microarchitecture has reached its limit. It signals the need for fundamental architectural research for the next steps. Thus the increased interest in quantum computing, optical systems, etc.

Regarding RISC

I think a lot of the discussion/debate in this thread is based on false assumptions.

Originally RISC was by definition a system in which all instructions took the same number of clock cycles to execute. The holy grail being one clock cycle. Period. That’s RISC. This meant REDUCING the number of instructions by reducing all desired operations to their core, nuclear building blocks. The goals were many and mostly obvious, but all centred on hardware optimization and efficiency, e.g. increased pipeline efficiency and easier parallelism.

The idea being that instead of having COMPLEX instructions with multi-cycle, or worse variable, execution times you would replace them all with their simpler building blocks and let the compiler break down the complex operations into the simpler RISC instructions, allowing the CPU to optimally execute those simpler instructions in the most efficient way possible.
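As a rough illustration of what "let the compiler break it down" means, here is a toy Python sketch. The `addm` memory-to-memory instruction, the `t0`/`t1` scratch registers, and the tuple encoding are all hypothetical; this is not how any particular compiler or ISA does it, just the shape of the idea.

```python
# Toy lowering pass: one hypothetical complex instruction becomes
# a sequence of simple load / add / store steps.

def lower(instr):
    """Lower 'addm dst_addr, src_addr' (mem[dst] += mem[src]) to simple ops."""
    if instr[0] == "addm":
        _, dst, src = instr
        return [
            ("load", "t0", dst),        # t0 = mem[dst]
            ("load", "t1", src),        # t1 = mem[src]
            ("add", "t0", "t0", "t1"),  # t0 = t0 + t1
            ("store", "t0", dst),       # mem[dst] = t0
        ]
    return [instr]  # already simple, pass through unchanged


def run(program, mem):
    """Interpret the simple ops against a dict used as memory."""
    regs = {}
    for ins in program:
        if ins[0] == "load":
            regs[ins[1]] = mem[ins[2]]
        elif ins[0] == "store":
            mem[ins[2]] = regs[ins[1]]
        elif ins[0] == "add":
            regs[ins[1]] = regs[ins[2]] + regs[ins[3]]
        else:
            raise ValueError(f"not a simple op: {ins[0]}")
    return mem


simple = lower(("addm", 0x10, 0x14))
result = run(simple, {0x10: 5, 0x14: 7})
```

Each of the four resulting steps does one small thing, which is what makes uniform timing and pipelining plausible; the cost is that the program gets longer.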

Of course like most things, the original idea got corrupted, RISC is no longer even RISC by the original definition, and many of the advantages of the original definition have been lost.

Intel was stuck in the jail of legacy x86 code compatibility and tried to cheat their way around it by moving the CISC->RISC translation from the compiler into hardware at the microcode level. A pretty good idea IMO, even if the result’s success is questionable. Part of this jail was imposed on them by the dominance of Windows. Unlike the early RISC world, which was totally UNIX based and where it was common to distribute source code and then do an optimized compile on the end user platform, x86 didn’t have that option at the time.

I was there to see RISC in its infancy as the IBM 801 and its marketable child the ROMP (in the IBM RT PC). I saw the potential of RISC when applied without the limitations of legacy compatibility. I even owned a few IBM RTs that I lucked into. Compared to x86 PCs of the time they were impressive. It’s a shame that the concept got off track due to market forces and the deviation from the original “all instructions have the same execution time” design philosophy.

I’d love to see an architecture return to that design concept and push the complex instructions back out into the source code where they belong. I think it would really help fix our inability to take advantage of the parallelism available to CPU core designs today. I had originally hoped that RISC-V would be that return to the original RISC idea, but it isn’t. Not that I’m not a big fan of RISC-V, it’s just not the return of true RISC that I’d hoped would happen one day.