It wasn’t long ago I was nostalgic about an old HP computer I saw back in the 1980s. It was sort of an early attempt at a PC, although price-wise it was only in reach for professionals. HP wasn’t the only one to try such a thing, and one of the more famous attempts came from the company that arguably did get the PC world rolling: IBM. Sure, there were other companies that made PCs before the IBM PC, but that was the computer that cemented the idea of a computer on an office desk or at your home more than any computer before it. Even now, our giant supercomputer desktop machines boot as though they were a vintage 1981 PC for a few minutes on each startup. But the PC wasn’t the first personal machine from IBM and, in fact, the IBM 5100 was not only personal, but it was also portable. Well, portable by the standards of an era of very heavy video cameras and, a few years later, luggable computers like the Osborne 1.

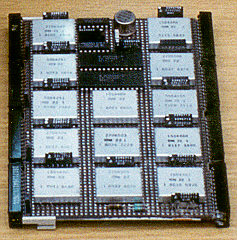

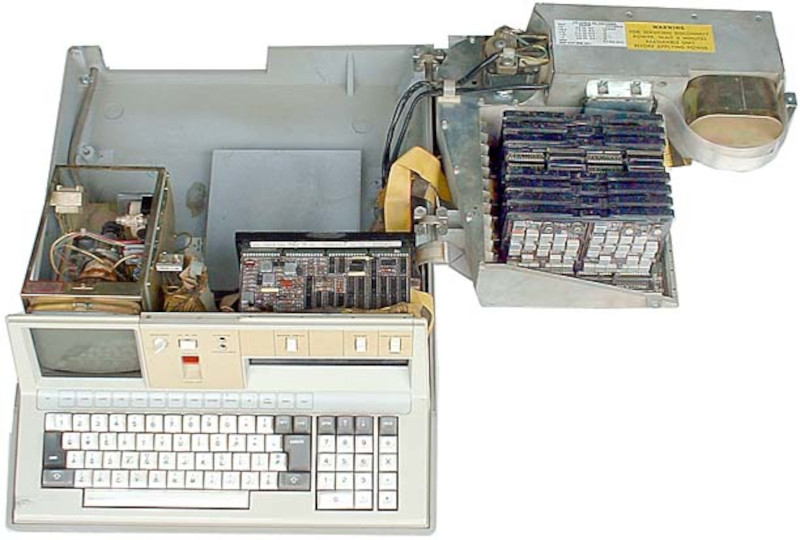

The IBM 5100 had a brief three-year life, from 1975 to 1978. A blistering 1.9 MHz 16-bit CPU drove a 5-inch CRT monitor, and you could have between 16K and 64K of RAM along with a fair amount of ROM. In fact, the ROMs were the key feature: a giant switch on the front let you pick between an APL ROM and a BASIC ROM (assuming you had bought both).

Computer hobbyists in the 1970s loved BASIC, so this was the object of desire for many. The entry price of around $9,000 squashed those dreams, though; that was even more money then than it would be today. The idea was influential, however, and there was even a dedicated book published about the machine. As with the HP computer, the main mass storage was a tape drive. You could even get an add-on to make it work as an IBM remote terminal or use the serial port for a modem. If the screen was too small, a BNC connector on the back could drive an external monitor.

Oddities

It may seem funny to think of a 55-pound computer with a CRT as portable. But this was a time when computers sat on raised floors in special rooms with exotic power systems. Prior to this, the military had the most portable computer, also from IBM, which was an IBM 1401 on a special truck.

APL might seem like an odd choice, but in its day it was a prestigious language. Of course, that also meant the machine had to handle the oddball character set and strikeovers necessary for APL in those days. However, APL was very powerful for manipulating large sets of data, and if you dropped $10K on a computer, that’s probably what you had in mind. Other workstations, like the one from HP, had you using BASIC, which did not have a lot of facilities for dealing with high-level math and matrices, especially in those days.

However, a beta tester for the machine warned IBM that normal people weren’t going to learn APL just to use the machine. This spurred the addition of the BASIC option. Still, the way the machine implemented APL and BASIC was perhaps the oddest thing of all.

IBM already had APL interpreters for its System/360 mainframes. They also had a BASIC system for the IBM System/3. To save development costs, the 5100’s processor emulated most of the features of a System/360 and a System/3. This way, they could make minor changes to the existing APL and BASIC interpreters. What that means, though, is that the 5100 wasn’t just a portable computer. It was a portable and slow mainframe computer.
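
To get a feel for what "emulating a System/360" means, here is a toy fetch-decode-execute loop for three real S/360 RR-format integer instructions: LR, AR, and SR. Each is two bytes long, an opcode followed by two 4-bit register numbers. This is only a sketch under simplifying assumptions. Condition codes and overflow interrupts are ignored. Anything else, including SVC (an OS call) and the 0x20-0x3F block where S/360 keeps its floating-point register-to-register ops, raises an error. That loosely mirrors the integer-only subset a commenter describes further down. The names `run` and `UnsupportedInstruction` are made up, and the 5100 did the real work in PALM microcode and ROS, not like this.

```python
# A toy fetch-decode-execute loop for three real System/360 RR-format integer
# instructions. Illustrative only: condition codes and overflow are ignored.
MASK32 = 0xFFFFFFFF
LR, AR, SR = 0x18, 0x1A, 0x1B  # load, add, subtract (register to register)
SVC = 0x0A                     # supervisor call, i.e. an OS service request


class UnsupportedInstruction(Exception):
    """Raised for anything outside the emulated integer-only subset."""


def run(code: bytes, regs: list[int]) -> list[int]:
    """Execute two-byte RR instructions from `code` on 16 general registers."""
    pc = 0
    while pc < len(code):
        op = code[pc]
        r1, r2 = code[pc + 1] >> 4, code[pc + 1] & 0x0F  # 4-bit register fields
        if op == LR:
            regs[r1] = regs[r2]
        elif op == AR:
            regs[r1] = (regs[r1] + regs[r2]) & MASK32  # wrap to 32 bits
        elif op == SR:
            regs[r1] = (regs[r1] - regs[r2]) & MASK32
        elif op == SVC or 0x20 <= op <= 0x3F:
            # OS calls and floating-point RR opcodes are deliberately absent.
            raise UnsupportedInstruction(f"opcode {op:#04x} at offset {pc}")
        else:
            raise UnsupportedInstruction(f"unknown opcode {op:#04x}")
        pc += 2  # every RR instruction is two bytes long
    return regs


regs = [0] * 16
regs[1], regs[2] = 5, 7
run(bytes([0x1A, 0x12, 0x18, 0x31]), regs)  # AR 1,2 then LR 3,1
print(regs[1], regs[3])  # 12 12
```

Trapping the unsupported opcodes, rather than silently skipping them, is the point of the sketch. An interpreter patched the way the 5100's was should never reach them.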

Time Travel

Maybe the oddest thing, though, isn’t a technical thing. I don’t remember it being widely known that the IBM 5100 was really a tiny mainframe. It probably wouldn’t be attractive for IBM to make that well known, anyway. You don’t want your bread-and-butter mainframe customers either planning to buy a (relatively) cheap replacement or complaining that you are charging them way more than this cheap device that “does the same thing.”

However, in the year 2000, this became a key plot point in what was almost certainly a time travel hoax. John Titor claimed to be from the year 2036 and that the military had sent him back to 1975 to collect one of these computers. Why? Because after World War III (which supposedly would happen in 2015), they needed the computer to run old IBM software and they couldn’t transport a several-ton mainframe to the future.

Plausible? Maybe. Even though the predictions didn’t come to pass, true believers will simply say that was because his time travel changed events. We aren’t buying it, but we have to admire that someone knew enough about the IBM 5100 to craft this story.

Try It

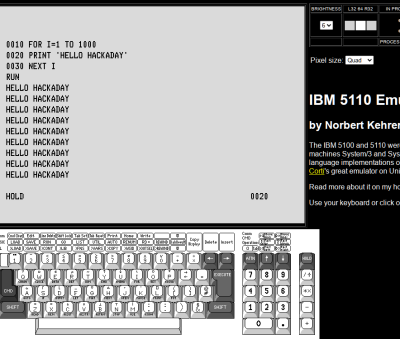

I have never seen one of these in person, but I imagine if one came up for sale now, the price would be astronomical. But you can, of course, try the obligatory emulator by [Norbert Kehrer]. Or watch [Abort, Retry, Fail] run a real one in the video, below. There is a lot of detail over on the Computer Museum site if you want to dig into the technology. There were actually a few different models with slightly different options. You’ll find a lot of interesting info over at oldcomputers.net, too, which is where some of the photos in this post are taken from.

If you do dig into the diagrams, it is helpful to know some of the old IBM terminology. For example, ROS is “read-only storage,” or what we call ROM. RAM is RWS, or read/write storage. Don’t forget, IBM wasn’t keen on ASCII, either; its mainframes used EBCDIC.
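
The ASCII point is easy to see from Python, which ships an EBCDIC codec called cp037 (US/Canada). Whether any 5100 model used exactly that code page is an assumption here; it simply stands in for EBCDIC in general. Even sort order changes. EBCDIC places lowercase letters before uppercase and digits after letters, which is the reverse of ASCII.

```python
# Compare ASCII and EBCDIC encodings of the same text. cp037 is one common
# EBCDIC code page; it stands in for whatever table a given IBM machine used.
text = "HELLO 42"
ascii_bytes = text.encode("ascii")
ebcdic_bytes = text.encode("cp037")
print(ascii_bytes.hex(" "))   # 48 45 4c 4c 4f 20 34 32
print(ebcdic_bytes.hex(" "))  # c8 c5 d3 d3 d6 40 f4 f2

# Sorting by raw byte value gives a different order in each encoding.
words = ["A1", "1A", "b"]
ascii_order = sorted(words, key=lambda w: w.encode("ascii"))
ebcdic_order = sorted(words, key=lambda w: w.encode("cp037"))
print(ascii_order)   # ['1A', 'A1', 'b']
print(ebcdic_order)  # ['b', 'A1', '1A']
```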

Maybe I’m biased, but if I had my choice, I’d rather have the old HP machine on my desk. But I will admit that this machine captured my imagination and was a precursor of things to come. Of course, I liked APL, so your take on it might be different. The original IBM PC, by the way, was the model 5150, so the 5100 is sort of its older geeky cousin.

It looks like a hobbyist-made HP85 :) but that one came later.

We had an HP85 in our ECM shop in the late ’80s. It was surplus; I’m not sure how we got ahold of one, but a coworker wrote some useful programs in BASIC.

Our office computer was a Zenith Data Systems 286, and you could order one through the base exchange, but they were still pricey. I ended up with an XT clone that cost $1,200 in 1989, but I did get a fancy CGA monitor.

And there are several of these strange machines eking out a decent living in NJ at InfoAge. I’ve seen one show up at the Repair Weekends to have its guts cleaned, perhaps twice.

Regarding boot times..

That’s why I love working with real electronics: aerospace, measurement systems, or military equipment. No bloatware! No fancy effects, just the right data at the right time!

The problem with having more power and more memory, IMHO, is that developers tend to get sloppy: “Bah, I’ll just allocate 2GB RAM, just in case”…

We saw this many times. People called themselves developers but didn’t understand the system. We even had a guy write 2,000 lines of code for something that could be done in 8!

I call this “Henrik’s Inverse Moore’s Law”:

“Developers add bloat/do shitty work to counterbalance Moore’s Law”…

Have you done any cost analysis to show that military coding standards would help the bottom line? Let’s review the cost of military hardware compared to standard consumer goods.

It’s true that sloppy coding styles and languages such as C# let people produce a lot of code in a short time. However, it comes with a cost: lower performance and a higher probability of errors and security holes.

Often companies skimp on testing to get product to market. “Who cares about customers? They can just upgrade” seems to be the mantra.

There are no magic bullets against leaks. C#, Java, etc. generally produce less severe errors than pointer-based languages (which still have their place).

Coding in Ada has been said to be ‘coding in triplicate’. Still doesn’t prevent all leaks.

I’m an advocate for Klingon coding:

copy con: program.exe.

Enter op codes and data with Alt-keypad. That will prevent creation of most bugs.

But we’re all living in a world where server side Javascript isn’t just a bad joke. There are low hanging fruit to be stomped into mush first.

Some of the most severe bugs I’ve fixed were due to unchecked return codes, missing input validation, and just sloppy coding.

People have come to rely too much on garbage collection, or “Bah, an exception handler will handle this”…

My “magic bullet” is simple: if you allocate memory, you need to clean it up. If you get input, assume it’s crappy.

Maybe we should stop making the bottom line our only metric, then.

Could be. Or maybe, we should start thinking long term instead of short term :-)

And maybe we should realize that the purpose of testing is not only to check functionality but also to break the software, thus finding bugs and limitations.

I had a good laugh at “developers allocate.” My lead AI dev hasn’t used the word allocate in years but managed to trigger the OOM killer with his last neural network.

Developers call frameworks.

And the frameworks magically appear? ;-)

You look to be stronger than everyone.

Nah, just been schooled in the right industry at the right time making me a pedantic, grumpy asshole..

It’s Gates’s law. Been Gates’s law for _decades_.

‘Software gets 40% slower every year.’

I’m sure he’d love it if you fell on that grenade. But no, he earned it.

Cool!!! Didn’t know that one. Thanks :-)

Gates was an optimist.

It was neither aimed at nor intended for hobbyists. I see it came out in the fall of 1975; Byte existed by then, so it probably got some coverage. But not really as an option.

I think I saw one of these at a university in 1976 or ’77. A step up from the PDP-11, it was on somebody’s desk. Putting BASIC in there meant it could be used as a calculator.

Hobbyists took up BASIC in part because there was a movement before home computers. And you weren’t going to be allowed to run machine code on a timeshare. The People’s Computer Company was there when the Altair came along, and they started Tiny BASIC. Besides, if you were an electronics hobbyist, the foundation of early home computers, BASIC gave you something to do with it, if you had the memory.

BASIC is also fairly simple to port from one system to another, with the exception of specialty stuff like graphics and sound that are often wildly different from one machine to another, or may not exist.

That’s how games like Spacewar, Star Trek, Lunar Lander, and Trade Wars spread around. They used the lowest-common-denominator functions of a plain ASCII display. I had a Xerox 820-II, got some printouts of those games from some other computer, adapted them to whatever BASIC was on the CP/M disks that came with the secondhand Xerox, then spent many hours playing them.

IBM followed on in the early 80’s with the PC XT/370, a desktop system that ran a slimmed-down version of the mainframe OS VM/370. I lusted after that machine, but alas, the 5-figure price was a wall I could not climb.

That always seemed more interesting. It used some modified 68000s.

The story that I heard at the time, perhaps apocryphal, was that IBM used two 68Ks to build one small S/370. One 68K was modified to run 370 ops, and the other to serve as an S/370 memory management unit. The 68K was a microcoded device, so changing its instruction set architecture by altering the microcode portion of the masks could have been feasible.

This work was done by William Beausoleil, an IBM fellow and one of the most influential pioneers in the computer industry.

I was a contract software engineer at Intermetrics in the early 80s. They built specialized compilers targeting the processors used in the space shuttle, various military systems, and highly parallel supercomputers. Most of the compilers were hosted on DECsystem-10, 20, and/or VM/370. We had a prototype IBM System/370 scientific workstation in our lab that used, as others have described, a pair of 68xxx processors running IBM-proprietary microcode and emulation software. That produced some quirks that needed to be avoided in compiled code. This desktop system included an 8086 (?) to manage peripherals and emulate a terminal for the system. As far as I know, this was not released as a product until it emerged as the XT/370 a few years later.

Intentionally discarded a 5150 in 2001. It sat on the curb for 3 days and got peed on by a dog. Before that, it was used as a counterweight for opening a 3,500-pound stone door that led to my loot vault. I already had a calculator so never used the 5150. RIP, shitty computer.

Call Okabe. The path to Steins;Gate has been opened!

El Psy Kongroo

Finally, I was getting worried that I was the only one who got the reference.

El! Psy! Congroo!

Don’t let SERN get their hands on this thing

I had a 5100 and a 5110 (well, IBM owned it). I sold the 5100 on eBay for ~$8K (someone in Korea has it now). Any APL folks still about? Does the name Adin Falkoff ring a bell?

Falkoff?

Growing up must’ve been tough.

What languages did Ted Nelson recommend in Computer Lib/Dream Machines? One was TRAC, which never went anywhere. But I think one of the other two was APL.

In the early days of Byte, there were some letters about custom type balls for the IBM Selectric, specifically for APL.

That would be one of these. https://www.computerhistory.org/collections/catalog/102696478

Computer lib here: https://archive.org/details/computer-lib-dream-machines

Also, when it came to APL typeballs for the selectric terminals (2741 / 1050) there were two variants, Correspondence and BCD.

Did APL programming for 40+ years, including on a 5120 in 1979/1980 or so. I never met Falkoff but knew all of the other APL implementors, including Ken Iverson himself. From my understanding (or disunderstanding), Falkoff kept Iverson’s dreams real, as Iverson frequently needed adult supervision (look at J).

The 5100’s emulation of S/360 and S/3 was of an integer-only, nonprivileged-only subset. It was unable to run operating systems or normal application programs. For both the APL interpreter and the BASIC interpreter, the interpreter’s assembly language source code was patched to remove every OS call and every floating-point instruction. Those were replaced by calls into the 5100’s small native OS and FP library, written in the SCAMP CPU’s quirky machine code. This was before IBM had CMOS, and so it was hard to fit a CPU onto a few small cards. So the machine’s native program counter lived in memory, not in a hardware register.
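
Keeping the program counter in memory means every instruction starts with a memory read just to find out where the instruction is, followed by a memory write to advance it. The sketch below is a hypothetical toy CPU, not PALM. The register layout (sixteen 16-bit big-endian registers starting at address 0, with R0 as the PC), the two opcodes, and the `ram_accesses` counter are all invented for illustration.

```python
# Toy CPU whose registers, the program counter included, live in RAM rather
# than in hardware. Layout and opcodes are hypothetical, not the real PALM.
RAM_SIZE = 256
REG_BASE = 0x00          # R0..R15 occupy bytes 0x00-0x1F; R0 is the PC
HALT, INC = 0x00, 0x01   # two-byte instructions: opcode, operand


class MemoryRegisterCPU:
    def __init__(self, program: bytes, origin: int = 0x20) -> None:
        self.ram = bytearray(RAM_SIZE)
        self.ram[origin:origin + len(program)] = program
        self.ram_accesses = 0          # counts every byte read or written
        self.write_reg(0, origin)      # point the PC at the first instruction

    def read_reg(self, n: int) -> int:
        addr = REG_BASE + 2 * n
        self.ram_accesses += 2
        return (self.ram[addr] << 8) | self.ram[addr + 1]  # big-endian word

    def write_reg(self, n: int, value: int) -> None:
        addr = REG_BASE + 2 * n
        self.ram_accesses += 2
        self.ram[addr] = (value >> 8) & 0xFF
        self.ram[addr + 1] = value & 0xFF

    def step(self) -> bool:
        """Run one instruction; return False once HALT is reached."""
        pc = self.read_reg(0)                    # the PC itself comes from RAM
        opcode, operand = self.ram[pc], self.ram[pc + 1]
        self.ram_accesses += 2
        if opcode == HALT:
            return False
        self.write_reg(0, pc + 2)                # the new PC goes back to RAM
        if opcode == INC:
            r = operand & 0x0F
            self.write_reg(r, (self.read_reg(r) + 1) & 0xFFFF)
        else:
            raise ValueError(f"unknown opcode {opcode:#04x}")
        return True

    def run(self) -> None:
        while self.step():
            pass


demo = MemoryRegisterCPU(bytes([INC, 1, INC, 1, HALT, 0]))
demo.run()
print("RAM accesses for two increments:", demo.ram_accesses)  # 26
```

The counter shows the extra memory traffic. Each increment costs ten byte accesses here, and four of those exist only to read and update the PC.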

My father wrote a 4th-generation language for this system, and we sold accounting systems, including a word processor. After learning BASIC on a Wang, I worked at my father’s company developing software. I still make my living programming in BASIC.

Do you still do it on your Wang?

“John Titor claimed to be from the year 2036 and that the military had sent him back to 1975 to collect one of these computers.”

Nobody believes that; he just wanted to sell it on eBay.

But there wouldn’t be an eBay for another 20 years, so he’d still be a time traveler. And they probably would have sent him to get it when it was just cheap junk, not brand new at full price.

Besides, if it was military sponsored, I doubt it would be to pick up an old computer.

Yes, you need that sweet spot when the stuff has become obsolete because of newer stuff, but before it turns collectible.

And that’s why he came back in time: build a time machine, buy collectible stuff when it’s cheap, profit!

I remember Adin, and Larry Breed, and Ken Iverson and the rest of the gang, although I met most of them only once. I was an early user of the first APL system based in Yorktown Heights, but accessed it from Chicago. Developed APL applications for the 5100 for a couple of years, until the first IBM PC came out. I edited one of Sandy Pakin’s APL\360 manuals. Good memories!

Working for IBM at Santa Teresa, I borrowed a 5100 and poked around in it for some weeks. My goal was to make a form of SpaceWar game: a star, two ships (or a ship against an orbiting platform), and torpedoes. The problem was that I had only the built-in BASIC (which would not support a real-time display) and an engineering manual that detailed the instruction set. There was no assembler, and I got dead silence when I went looking for one. I had to hand-assemble (hex code) the software, manually resolving memory addresses, and revisions were painful in the extreme. The star’s gravitational field (table driven) was too coarse, and there were some issues with the keyboard when two players were competing. Also, I burned through a bit of the screen. Loading from the magnetic tape cartridge was pretty tedious. After that experience, hunting down System/360-370 multiplexer channel overruns at work was downright pleasant by comparison.
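
Hand-assembling meant doing by hand what an assembler does in two passes. The first pass walks the program and assigns an address to every label. The second pass emits the bytes with those addresses filled in. Here is a minimal sketch, assuming a made-up instruction set (LOAD, ADD, JNZ, and HALT, each one opcode byte plus one operand byte) that has nothing to do with the real PALM encoding.

```python
# Hypothetical instruction set: every instruction is one opcode byte followed
# by one operand byte (a number or a label). Not the real PALM encoding.
OPCODES = {"HALT": 0x00, "LOAD": 0x10, "ADD": 0x20, "JNZ": 0x30}
INSTR_SIZE = 2


def assemble(source: str, origin: int = 0) -> bytes:
    """Two-pass assembly: resolve label addresses first, then emit bytes."""
    # Pass 1: strip comments, record each label's address, and keep the
    # (mnemonic, operand) pairs for the second pass.
    symbols: dict[str, int] = {}
    instructions: list[tuple[str, str]] = []
    address = origin
    for raw in source.splitlines():
        line = raw.split(";", 1)[0].strip()
        if ":" in line:
            label, line = line.split(":", 1)
            symbols[label.strip()] = address
            line = line.strip()
        if not line:
            continue
        mnemonic, *operands = line.split()
        instructions.append((mnemonic.upper(), operands[0] if operands else "0"))
        address += INSTR_SIZE

    # Pass 2: every label now has an address, so forward references work too.
    out = bytearray()
    for mnemonic, operand in instructions:
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic {mnemonic!r}")
        value = symbols[operand] if operand in symbols else int(operand, 0)
        if not 0 <= value <= 0xFF:
            raise ValueError(f"operand {operand!r} does not fit in one byte")
        out += bytes([OPCODES[mnemonic], value])
    return bytes(out)


PROGRAM = """
        LOAD 3        ; hypothetical: set the accumulator to 3
loop:   ADD 0xFF      ; add 255, i.e. subtract 1 modulo 256
        JNZ loop      ; backward reference to a label
        HALT
"""
print(assemble(PROGRAM).hex(" "))  # 10 03 20 ff 30 02 00 00
```

The painful part the commenter describes is pass 1. Insert one instruction early in the program and every later label address shifts, so every reference to those labels has to be patched again by hand.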

We have a PALM assembler available now for the IBM 5100! I’ve been verifying it, and it works quite well:

https://www.youtube.com/watch?v=2pKpCWdYujA&t=6m23s

Now I’m working on an easier way to transfer the resulting binary over to a 5100 (tentative solution so far is to stream it in through serial interface to the keyboard port, but plan is to define an IO device through the external pins at the back). I’ll be working on preparing a better/updated orientation about the PALM instruction set. It is quirky, but I think it is because IBM was limited to how much they could fit in the microcode on the processor card.

I’ve found an old IBM manual “GENASM” (general assembler) that is the earliest mention of PALM that I’ve been able to find (about c. 1978), that being the name of the instruction set associated with the IBM 5100 (and same set carried to 5110/5120).

Yes, it is agonizing that the BASIC on the system has no PEEK/POKE capability (along with an EXEC like command), that would have helped to make more “interactive” software and experiment with applying machine code directly to memory yourself. But I understand why they don’t – the S/3 BASIC they are emulating didn’t have those capabilities either and the system just wraps what the mini could do. But luckily the system does have the built in DCP to apply machine code. Although, I don’t think there is a way to detect multiple key presses at the same time – the last key pressed always “wins.”

The Fish That Got Away

I took a break from my studies at University of Florida to go on internship at IBM Boca Raton the summer of 1980(?). My professor had lined up “something special” for me with his friends at IBM, but when I got to Boca a last minute reassignment meant I spent the summer doing a little GPIB hardware and mostly writing APL software on an IBM 5110, for “Quality Assurance” testing on other IBM products (ATM machines, terminals, etc.).

APL was the first computer language I learned at university, and I really loved the 5110. I discovered more than a few bugs in the interpreter. I think the code was maintained out of Rochester, MN, and a few phone calls confirmed the bug, but there was no fix. The 5110 used IBM’s PALM processor to emulate the 360 to run the APL interpreter. As explained, some optimization in the code caused the bug. A key APL function gave incorrect results if the argument was a power of two (e.g., 8, 16, 32, 64, etc.). Very weird, and Rochester explained that repairing the bug would require updating the ROS (and that wasn’t going to happen). The workaround was to emulate this function using other functions; a suboptimal pain in the butt.

I do recall a big meeting that we summer hires were invited to attend, and a guy (Don Estridge?) talked a bit about the Apple computer and IBM’s plans. Weird. Later we were told to forget what we had heard; we’d been mistakenly invited.

Fast forward a year, and I had graduated and was working at Hewlett-Packard in California doing work related to my master’s thesis (alphanumeric CRT display terminals, graphics frame buffers, color maps, etc.). The IBM PC had just been announced, and out of the blue I get a call from my old professor (the one that had secured me the IBM internship the summer before). He explained, “THAT is what you were supposed to work on!”

As it turned out, at the IBM Boulder facility, some summer hire had pilfered the IBM copier plans that he had worked on, and tried to sell them to a competitor. So one of the outcomes of unraveling this mess was that IBM Armonk dictated “that *no* temporary employees would be allowed on projects of a certain classified level”, and the PC being developed at Boca Raton was one of them. Hence my last minute reassignment.

A lot has been written about IBM’s choice of Intel’s processor versus Motorola’s. Who knows what the outcome would have been had IBM chosen differently. IBM chose to go the lean route with memory on the PC. This was a bad choice; never bet against more memory! But what about the displays?

While the P39 green alphanumeric display was respectable, it cried out for a bit-mapped graphics overlay. (And this was addressed by other companies.) On the other hand, the Color Graphics Adapter, with its 320×200 resolution in just four colors (16 colors only in text mode) and its TV monitor, was an annoyance to program and an eyesore to look at. IBM should have been embarrassed to have such a pathetic offering on a computer costing thousands of dollars. Some sort of internal politics must have played a role (with the PC cutting into sales on premium-price IBM products).

Anyway, I bragged to my friends for a while: “that’s not what I would have designed.” Sheesh, for only $100 or so of extra DRAM and logic, that could have been a fine offering. It took a while before IBM somewhat corrected this with the EGA, and then finally with the VGA. And by then IBM was easing out of the PC industry it had created.
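
The memory argument is easy to check with a little arithmetic. A bitmap needs width × height × bits per pixel ÷ 8 bytes, and the CGA card carried 16 KB of video RAM. Its 320×200 graphics mode used 2 bits per pixel (four colors), which just fits. Sixteen colors at the same resolution would need twice that. The EGA's 640×350 16-color mode needs 112,000 bytes, which is why it required the 128 KB memory option. The function name below is made up for the sketch, and the commenter's $100 figure is not checked here.

```python
# Bytes of video RAM a bitmap needs: width * height * bits_per_pixel / 8.
def framebuffer_bytes(width: int, height: int, colors: int) -> int:
    bits_per_pixel = (colors - 1).bit_length()  # 2 -> 1, 4 -> 2, 16 -> 4 bits
    return width * height * bits_per_pixel // 8


CGA_VRAM = 16 * 1024  # the CGA card carried 16 KB of video RAM

MODES = [
    ("CGA 640x200, 2 colors", 640, 200, 2),
    ("CGA 320x200, 4 colors", 320, 200, 4),
    ("320x200, 16 colors", 320, 200, 16),
    ("EGA 640x350, 16 colors", 640, 350, 16),
]
for name, w, h, c in MODES:
    need = framebuffer_bytes(w, h, c)
    print(f"{name}: {need} bytes, fits in CGA's 16 KB: {need <= CGA_VRAM}")
```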

Epilog

IBM has long since cleared out of Boca Raton. When I looked for our old building (designed by the famous Marcel Breuer), I found it is now something called “Boca Raton Innovation Campus.” As best I can figure, our building is occupied by, among other things, a dentist’s office. Sigh.

Epilog to the Epilog

The site of my old office at Hewlett-Packard in Cupertino was sold off and bull-dozed.

It’s where Apple Computer’s flying saucer headquarters was built.

Do you have any recollection or estimate of IBM 5110 sales numbers? The best estimate we have is that “not more than 10k units were built and sold” (that’s of each of the 5100, 5110, and 5120, so collectively about 30k units). One estimate puts under 10% of those as “APL versions” (having the APL keyboard and ROS support), but there’s no way to verify that. These aren’t horrible numbers. In the early 1970s, the estimates for the Datapoint 2200, Wang, and HP systems of those years are on the order of 3,000-5,000 units sold. By the mid-1970s, the Altair/SOL-20 and similar systems are in the range of ~10k units sold. Even “the trinity” of 1977 is estimated at roughly 50k units sold (worldwide, too). I think the first TRS-80 was possibly the first to reach 100,000 units sold (by virtue of the Radio Shack relationship). Even the original Apple II wasn’t all that popular, but it “exploded” in 1979 with the Apple II Plus and the introduction of VisiCalc (and for other reasons, like more peripherals such as the graphics tablet). Still, it was the IBM PC that first reached 1,000,000 units sold.

Haven’t a clue about sales numbers, as we were in engineering.

This machine also had a life as a mainframe debug tool for CEs and developers to use, when equipped with adapters that allowed bus and tag cables to be plugged in. I recall it was called the EDT-2. We used them in Hursley when developing mainframe terminal systems and host graphics.

Was that more the 5100 or 5110? Asking because the 5110 had quite a different display font, where the first 64 characters seem to be a sequence of binary symbols that would be useful for debugging (and quick display of binary values).

That was a trick I used on my thesis (a Z80-based alphanumeric display terminal). An 80-column × 25-row display needs only 2,000 bytes, so a 2,048-byte RAM leaves 48 bytes available to the processor, which were my scratch memory for various software pointers, values, buffers, etc. A hardware DIP switch allowed the display screen to start in the scratch RAM area (rather than the normal display RAM area). So I could actually see the values of the scratch RAM on the top row of the screen as the terminal was being used. For example, if you moved the cursor, you could see a byte location increment and decrement. This was a really helpful debugging trick, as the Z80 environment I was using was very limited.
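
The numbers work out as 80 × 25 = 2,000 bytes of screen in a 2,048-byte RAM, leaving 48 spare. Here is a sketch of the trick, assuming (the comment doesn't say) that the 48 scratch bytes sit at addresses 0-47 and the normal display starts at 48. Flipping the switch then just changes where the video logic begins scanning. The scratch bytes appear at the start of the top row, and the last 48 screen bytes drop off the bottom. The names `visible_rows` and `DISPLAY_START` are made up for the sketch.

```python
# Hypothetical layout: 48 scratch bytes at 0-47, the 80x25 screen at 48-2047.
COLS, ROWS, RAM_SIZE = 80, 25, 2048
SCREEN_BYTES = COLS * ROWS               # 2000 bytes of characters
SCRATCH_BYTES = RAM_SIZE - SCREEN_BYTES  # 48 bytes left for the CPU
DISPLAY_START = SCRATCH_BYTES            # normal scan start: just past scratch


def visible_rows(ram: bytes, start: int) -> list[bytes]:
    """Return the ROWS x COLS bytes the video logic scans out from `start`."""
    window = ram[start:start + SCREEN_BYTES]
    return [bytes(window[r * COLS:(r + 1) * COLS]) for r in range(ROWS)]


ram = bytearray(b" " * RAM_SIZE)
ram[DISPLAY_START:DISPLAY_START + 5] = b"READY"  # text on the normal screen
ram[0] = 7  # hypothetical scratch variable, e.g. the cursor column

normal = visible_rows(ram, DISPLAY_START)  # switch off: scratch stays hidden
debug = visible_rows(ram, 0)               # switch on: scratch shows on row 0
print(SCREEN_BYTES, SCRATCH_BYTES)         # 2000 48
print(normal[0][:5], debug[0][0], debug[0][48:53])  # b'READY' 7 b'READY'
```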