Fascinated by art generated by deep learning systems such as DALL-E and Stable Diffusion? Then perhaps a wall installation like this phenomenal e-paper triptych created by [Zach Archer] is in your future.

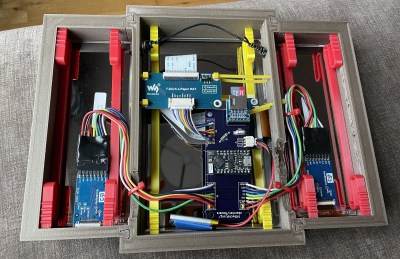

The three interlocking frames were printed in “Walnut Wood” HTPLA from ProtoPasta, and hold a pair of 5.79-inch red/black/white displays along with a single 7.3-inch red/yellow/black/white panel from Waveshare. There are e-paper panels out there with more colors if you wanted to go that route, but judging by the striking images [Zach] has posted, the relatively limited color palettes of these displays don’t seem to be a hindrance.

To create the images themselves, [Zach] wrote a script that generates an endless stream of customized portraits using Stable Diffusion v1.4, then hand-picked the best ones to copy onto a 32 GB microSD card. The images for the side panels were generated on the dreamstudio.ai website and copied to the same card.

Every 12 hours, a TinyPico ESP32 development board in the frame picks some images from the card, applies the dithering and color adjustments needed to make them look good on the e-paper, and then updates the displays.
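The write-up doesn’t say which dithering algorithm the frame uses, so the snippet below is only a minimal sketch of one common choice, Floyd-Steinberg error diffusion, reducing an RGB image to the four colors of the 7.3-inch panel. The RGB values in `PALETTE_RYBW` are assumptions (real panels render noticeably different shades), and the function names are hypothetical; it is written in plain Python for readability rather than as ESP32 firmware.

```python
# Assumed RGB approximations of a red/yellow/black/white e-paper panel.
PALETTE_RYBW = [
    (0, 0, 0),        # black
    (255, 255, 255),  # white
    (255, 0, 0),      # red
    (255, 255, 0),    # yellow
]


def nearest(color, palette):
    """Return the palette entry closest to `color` by squared RGB distance."""
    return min(palette, key=lambda p: sum((c - q) ** 2 for c, q in zip(color, p)))


def floyd_steinberg(pixels, width, height, palette):
    """Dither row-major (r, g, b) pixels to `palette` with Floyd-Steinberg error diffusion."""
    # Float working buffer so diffused error can accumulate between pixels.
    buf = [[[float(c) for c in pixels[y * width + x]] for x in range(width)]
           for y in range(height)]
    out = []
    for y in range(height):
        for x in range(width):
            # Clamp the accumulated value back into the valid 0..255 range.
            old = [max(0.0, min(255.0, c)) for c in buf[y][x]]
            new = nearest(old, palette)
            out.append(new)
            err = [o - n for o, n in zip(old, new)]
            # Push the quantization error onto not-yet-visited neighbours
            # using the classic 7/16, 3/16, 5/16, 1/16 weights.
            for dx, dy, w in ((1, 0, 7 / 16), (-1, 1, 3 / 16), (0, 1, 5 / 16), (1, 1, 1 / 16)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    for i in range(3):
                        buf[ny][nx][i] += err[i] * w
    return out


if __name__ == "__main__":
    w, h = 8, 4
    # Toy input: a horizontal grey ramp from black to white.
    ramp = [(round(255 * x / (w - 1)),) * 3 for y in range(h) for x in range(w)]
    dithered = floyd_steinberg(ramp, w, h, PALETTE_RYBW)
    # Print one letter per pixel: K=black, W=white, R=red, Y=yellow.
    for y in range(h):
        print("".join("KWRY"[PALETTE_RYBW.index(p)] for p in dithered[y * w:(y + 1) * w]))
```

Because nothing in this routine depends on the display, the same step could just as well run on a desktop before the images are copied to the card, leaving the microcontroller to simply stream pre-dithered pixels.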

With the onboard 350 mAh battery, [Zach] says the frame will run for about 16 days. That’s pretty impressive given the relatively low capacity, so we imagine you could really get some serious runtime from a pair of 18650s. In the past we’ve even seen e-paper wall displays use solar panels to help stretch out their battery life, though we can see how tacking on some photovoltaic cells might impact the carefully considered aesthetics of this piece.
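To put a rough number on “serious runtime”: 350 mAh over about 16 days implies an average draw (sleep plus the twice-daily refresh) of 350 / (16 × 24) ≈ 0.91 mA. The sketch below simply scales that figure linearly. The 3000 mAh per 18650 cell and the parallel wiring are assumptions, and the estimate ignores self-discharge, regulator efficiency, temperature, and cell aging, so treat it as an optimistic upper bound.

```python
HOURS_PER_DAY = 24


def average_current_ma(capacity_mah, runtime_days):
    """Average current draw implied by draining `capacity_mah` in `runtime_days`."""
    return capacity_mah / (runtime_days * HOURS_PER_DAY)


def runtime_days(capacity_mah, avg_current_ma):
    """Idealized runtime: capacity divided by average draw, converted to days."""
    return capacity_mah / avg_current_ma / HOURS_PER_DAY


if __name__ == "__main__":
    # Figures from the article: 350 mAh battery, roughly 16 days of runtime.
    draw = average_current_ma(350, 16)
    print(f"implied average draw: {draw:.2f} mA")  # prints 0.91 mA
    # Hypothetical: two 3000 mAh 18650 cells wired in parallel.
    print(f"2 x 18650 estimate: {runtime_days(2 * 3000, draw):.0f} days")  # prints 274 days
```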

It’s a beautiful project, but according to [Zach], he’s starting to have some second thoughts about the images he’s showing on it. Like many of us, he’s wondering about the implications of AI-generated content, specifically how it can devalue the work of human artists. Thankfully, loading the display up with new images is just a matter of popping in a new SD card, so we imagine the hardware will still be put to use even if the content is swapped out.

We live in a world where there is more and more information, and less and less meaning.

–Jean Baudrillard (1994)

What is AI-generated “art” anyways?

The present age is dominated by simulations, things that have no original or prototype. Death of the real: no more counterfeits or prototypes, just simulations of reality, in other words hyperreality.

I can’t wait for fourth-order simulacra to become the dominant type of art, but that is what GPT-3 is for. AI will ultimately control other AI: one AI trained on slurs will detect when a second AI is out of line and censor it. The sign bears no relation to any reality whatsoever; it is its own pure simulacrum.

Ah, what is “art” anyways? Can art without a context be art? If you have a Rembrandt painting and a very good counterfeit and you show them side by side, you probably couldn’t tell the original from the copy. People could have a counterfeit on the wall their whole lives and enjoy its detail, craftsmanship, and complexity every day. Art is often confused with value, and all art is inspired by and/or derived from things the artist has experienced, so AI is in some ways not so different.

BTW, you might want to read “Light Verse” by Isaac Asimov.

G.K. Chesterton wrote something to the effect that “the old art” lifted humanity, Modern Art drags it down.

It’s fairly cheap to have a counterfeit, er, I mean reproduction, painted. In fact, it costs less to have a full-size Rothko painted than it would to have a full-size print.

See:

https://www.galeriedada.com/

“Art is often confused with value and all art is inspired and/or derived from things the artist has experienced, so AI is in some ways not so different.”

As well as ‘value’, art is often erroneously conflated with ‘effort’, which is why every new technology applied to art that results in a reduction in effort (or even an apparent reduction in effort) is initially rejected as ‘not art’. Most recently, you can trace everything to do with digital art (from imagery to CGI for movies to electronic music) and see the same arc we are now seeing with AI art. The question is not whether images generated by ANNs will be considered art, but merely the timescale over which it happens.

And for those stuck in the “but you couldn’t actually paint it yourself, how could you ever call it art?” rut: consider that a movie editor has almost certainly not shot a single one of the scenes they are editing together, and many likely could not. Yet without that editor, there would be no finished movie, just a pile of random, incoherent snippets of video and audio. One would be hard-pressed not to call an editor an artist in their own right, even if they have not ‘created’ any of the material they work with. On top of that, an editor today need never concern themselves with the physical act of compositing FX shots using a rostrum camera, colour timing using chemical developers, or handling and splicing physical strips of film, yet we do not devalue their work merely because they can work with less effort than in the past.

It seems like dithering the pictures prior to putting them on the card would let the processor sleep a lot more. Also, based on how thick it is, you could cover the top with thin panels and they’d be invisible.

“Judging from the result (the lack of blue and green) is not holding back this art”

Not sure how much blue and green is normally in a human face, but clearly not enough to miss in a stylized representation.

Something to consider if your art will have nature or primary colors in it/innit?

Not a lot of yellow lightning bolts exploding out of my family portraits either, what’s your point?

Auto accident face simulator.

Portrait plagiarism portal.