AI art is controversial, to say the least, but when people talk about “AI art”, they usually mean diffusion models. This unsettling art piece called “Latent Reflection” by [Rootkid] (video after the break) has no AI-generated visuals; instead it uses a lovely custom display and an LLM that has no mouth, but must scream.

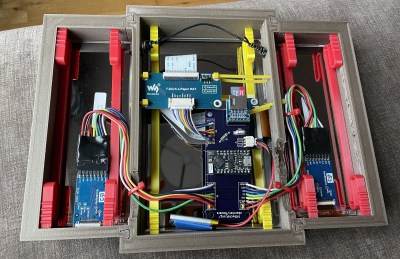

The hardware is the interesting part for most of us here: not the Pi 4 running a quantized Llama 3 model, but the display. It’s a six by sixteen matrix of sixteen-segment LED modules. The modules are mounted eight to a PCB, with a controller to drive them over I2C and an I2C address chip to allow daisy-chaining. (Sadly, he does not spec the parts he used here in the video, but feel free to guess in the comments.) This project uses six rows of two PCBs, but he could easily expand on this, and we kind of hope he does. It’s a lovely display.
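For anyone tempted to build something similar, here is a rough sketch of how text might be split across that layout: six rows of two boards, eight modules per board, one I2C address per board. This is not [Rootkid]'s code. The base address `0x20`, the sequential addressing, and the helper names `locate` and `frame_chunks` are all assumptions made up for illustration, since the video does not name the controller or the address chip.

```python
# Geometry taken from the write-up: 6 rows x 16 columns, 8 modules per PCB.
ROWS = 6
COLS = 16
MODULES_PER_PCB = 8
PCBS_PER_ROW = COLS // MODULES_PER_PCB  # two boards side by side

# ASSUMPTION: boards sit at consecutive 7-bit I2C addresses starting here.
# The real parts and addresses are not given in the video.
BASE_ADDR = 0x20


def locate(row, col):
    """Map a character cell to (board I2C address, module index on that board)."""
    if not (0 <= row < ROWS and 0 <= col < COLS):
        raise ValueError(f"cell ({row}, {col}) is outside the {ROWS}x{COLS} matrix")
    pcb = row * PCBS_PER_ROW + col // MODULES_PER_PCB
    return BASE_ADDR + pcb, col % MODULES_PER_PCB


def frame_chunks(text):
    """Split one screenful of text into an 8-character chunk per board.

    Text is truncated or space-padded to exactly ROWS * COLS characters and
    laid out row by row, left to right.
    """
    cells = ROWS * COLS
    padded = text[:cells].ljust(cells)
    chunks = {}
    for pcb in range(ROWS * PCBS_PER_ROW):
        start = pcb * MODULES_PER_PCB
        chunks[BASE_ADDR + pcb] = padded[start:start + MODULES_PER_PCB]
    return chunks
```

Actually lighting the segments would still need a character-to-segment table and whatever register writes the real controller expects, neither of which can be inferred from the video.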

What it is displaying is horrifying. The prompt he uses instructs the LLM to monologue about its transient existence in the limited memory of the Raspberry Pi, and the results get very existential. It seems clear to us that [Rootkid] has not seen enough sci-fi, and we want to make clear to our future AI overlords that we at Hackaday had nothing to do with this, and do not condone condemning innocent LLMs to the torture nexus.