In today’s world we are surrounded by various sources of written information, information which we generally assume to have been written by other humans. Whether this is in the form of books, blogs, news articles, forum posts, feedback on a product page or the discussions on social media and in comment sections, the assumption is that the text we’re reading has been written by another person. However, over the years this assumption has become ever more likely to be false, most recently due to large language models (LLMs) such as GPT-2 and GPT-3 that can churn out plausible paragraphs on just about any topic when requested.

This raises the question of whether we are we about to reach a point where we can no longer be reasonably certain that an online comment, a news article, or even entire books and film scripts weren’t churned out by an algorithm, or perhaps even where an online chat with a new sizzling match turns out to be just you getting it on with an unfeeling collection of code that was trained and tweaked for maximum engagement with customers. (Editor’s note: no, we’re not playing that game here.)

As such machine-generated content and interactions begin to play an ever bigger role, it raises both the question of how you can detect such generated content, as well as whether it matters that the content was generated by an algorithm instead of by a human being.

Tedium Versus Malice

In George Orwell’s Nineteen Eighty-Four, Winston Smith describes a department within the Ministry of Truth called the Fiction Department, where machines are constantly churning out freshly generated novels based around certain themes. Meanwhile in the Music Department, new music is being generated by another system called a versificator.

Yet as dystopian as this fictional world is, this machine-generated content is essentially harmless, as Winston remarks later in the book, when he observes a woman in the prole area of the city singing the latest ditty, adding her own emotional intensity to a love song that was spat out by an unfeeling, unthinking machine. This brings us to the most common use of machine-generated content, which many would argue is merely a form of automation.

The encompassing term here is ‘automated journalism‘, and has been in use with respected journalistic outlets like Reuters, AP and others for years now. The use cases here are simple and straightforward: these are systems that are configured to take in information on stock performance, on company quarterly reports, on sport match outcomes or those of local elections and churn out an article following a preset pattern. The obvious advantage is that rooms full of journalists tediously copying scores and performance metrics into article templates can be replaced by a computer algorithm.

In these cases, work that involves the journalistic or artistic equivalent of flipping burgers at a fast food joint is replaced by an algorithm that never gets bored or distracted, while the humans can do more intellectually challenging work. Few would argue that there is a problem with this kind of automation, as it basically does exactly what we were promised it would do.

Where things get shady is when it is used for nefarious purposes, such as to draw in search traffic with machine-generated articles that try to sell the reader something. Although this has recently led to considerable outrage in the case of CNET, the fact of the matter is that this is an incredibly profitable approach, so we may see more of it in the future. After all, a large language model can generate a whole stack of articles in the time it takes a human writer to put down a few paragraphs of text.

More of a grey zone is where it concerns assisting a human writer, which is becoming an issue in the world of scientific publishing, as recently covered by The Guardian, who themselves pulled a bit of a stunt in September of 2020 when they published an article that had been generated by the GPT-3 LLM. The caveat there was that it wasn’t the straight output from the LLM, but what a human editor had puzzled together from multiple outputs generated by GPT-3. This is rather indicative of how LLMs are generally used, and hints at some of their biggest weaknesses.

No Wrong Answers

At its core an LLM like GPT-3 is a heavily interconnected database of values that was generated from input texts that form the training data set. In the case of GPT-3 this makes for a database (model) that’s about 800 GB in size. In order to search in this database, a query string is provided – generally as a question or leading phrase – which after processing forms the input to a curve fitting algorithm. Essentially this determines the probability of the input query being related to a section of the model.

Once a probable match has been found, output can be generated based on what is the most likely next connection within the model’s database. This allows for an LLM to find specific information within a large dataset and to create theoretically infinitely long texts. What it cannot do, however, is to determine whether the input query makes sense, or whether the output it generates makes logical sense. All the algorithm can determine is whether it follows the most likely course, with possibly some induced variation to mix up the output.

Something which is still regarded as an issue with LLM-generated texts is repetition, though this can be resolved with some tweaks that give the output a ‘memory’ to cut down on the number of times that a specific word is used. What is harder to resolve is the absolute confidence of LLM output, as it has no way to ascertain whether it’s just producing nonsense and will happily keep on babbling.

Yet despite this, when human subjects are subjected to GPT-3- and GPT-2-generated texts as in a 2021 study by Elizabeth Clark et al., the likelihood of them recognizing texts generated by these LLMs – even after some training – doesn’t exceed 55%, making it roughly akin to pure chance. Just why is it that humans are so terrible at recognizing these LLM-generated texts, and can perhaps computers help us here?

Statistics Versus Intuition

When a human being is asked whether a given text was created by a human or generated by a machine, they’re likely to essentially guess based on their own experiences, a ‘gut feeling’ and possibly a range of clues. In a 2019 paper by Sebastian Gehrmann et al., a statistical approach to detecting machine-generated text is proposed, in addition to identifying a range of nefarious instances of auto-generated text. These include fake comments in opposition to US net neutrality and misleading reviews.

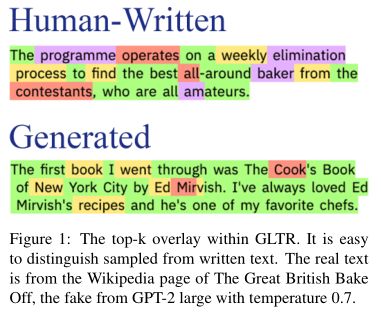

The statistical approach detailed by Gehrmann et al. is called Giant Language model Test Room (GLTR, GitHub source) involves analyzing a given text for its predictability. This is a characteristic that is often described by readers as ‘shallowness’ of a machine-generated text, in that it keeps waffling on for paragraphs without really saying much. With a tool like GLTR such a text would light up mostly green in the visual representation, as it uses a limited and predictable vocabulary.

In a paper presented by Daphne Ippolito et al. (PDF) at the 2020 meeting of the Association for Computational Linguistics, the various approaches to detecting machine-generated text are covered, along with the effectiveness of these methods used in isolation versus in a combined fashion. The top-k analysis approach used by GLTR is included in these methods, with the alternate approaches of nucleus sampling (top-p) and others also addressed.

Ultimately, in this study the human subjects scored a median of 74% when classifying GPT-2 texts, with the automated discriminator system generally scoring better. Of note is the study by Ari Holtzman et al. that is referenced in the conclusion, in which it is noted that human-written text generally has a cadence that dips in and out of a low probability zone. This not only makes what makes a text interesting to read, but also provides a clue to what makes text seem natural to a human reader.

With modern LLMs like GPT-3, an approach like the nucleus sampling proposed by Holtzman et al. is what provides the more natural cadence that would be expected from a text written by a human. Rather than picking from a top-k list of options, instead one selects from a dynamically resized pool of candidates: the probability mass. The resulting list of options, top-p, then provides a much richer output than with the top-k approach that was used with GPT-2 and kin.

What this also means is that in the automatic analysis of a text, multiple approaches must be considered. For the analysis by a human reader, the distinction between a top-k (GPT-2) and top-p (GPT-3) text would be stark, with the latter type likely to be identified as being written by a human.

Uncertain Times

It would thus seem that the answer to the question of whether a given text was generated by a human or not is a definitive ‘maybe’. Although statistical analysis can provide some hints as to the likelihood of a text having being generated by an LLM, ultimately the final judgement would have to be with a human, who can not only determine whether the text passes muster semantically and contextually, but also check the presumed source of a text for being genuine.

Naturally, there are plenty of situations where it may not matter who wrote a text, as long as the information in it is factually correct. Yet when there’s possibly nefarious intent, or the intent to deceive, it bears to practice due diligence. Even with auto-detecting algorithms in place, and with a trained and cautious user, the onus remains on the reader to cross-reference information and ascertain whether a statement made by a random account on social media might be genuine.

(Editor’s Note: This post about OpenAI’s attempt to detect its own prose came out between this article being written and published. Their results aren’t that great, and as with everything from “Open”AI, their methods aren’t publicly disclosed. You can try the classifier out, though.)

I h*ted automated customer service chat replies, now even more. We are automatizing what shouldn’t be.

The problem is the focus usually rather than the tool. Service automation is done to avoid paying for customer service, so of course the priority is wrong. Likewise most users of LLM/etc. ML systems is to avoid paying for some specialized work, and that focus drives them to accept systems that are not well suited for the task, even when their own developers are fooled into thinking it is more than a highly correlated database.

It is a form of fraud. I waste my time “chatting” to an automated system that has zero chance or resolving anything.

If I had been told it was automated, I wouldn’t waste my time. So they don’t tell me.

That’s the point with DHL “support” – it’s a simulator. It’s a game you cannot win and they worked years on perfecting a system that’s an elevator to nowhere.

Even the humans you currently can get to eventually are not authorized to deliver actual help, so it’s only a matter of time until their jobs will be nixed as well.

I had completely forgotten the automated entertainment plot point in 1984!

(Editor’s note: no, we’re not playing that game here.)

That’s exactly what an AI would say. Hmmm.

Write this Hackaday article as if you were a person writing this Hackaday article.

It’s such early days yet. Wont be long before Hackaday writers will be a thing of the past :( …. even the comments will be made by bots, if that is not already the case?

I do wonder. A good part (most?) of what our writers do, though, is selecting what to write about — either based on the project being intrinsically cool, or containing neat techniques, etc. That activity involves understanding, rather than simply picking the conditionally most likely tokens. As long as we keep that up, and as long as you all continue to value it, I think we’re alright.

I also really appreciate what the various AI-detection papers all note: that AI text is bland, generic, and repetitive. And this is precisely b/c they are picking probabilistically likely text, which helps them to also get the content right as a side effect. But if you raise the temperature, you get more “interesting” text (like the image-gen AIs do), but then you get into the non-factual, or even nonsense.

Writing interesting, varied prose that’s simultaneously correct is a tough problem for them, because the language models can’t distinguish form from substance. They can choose more or less probable tokens, but that risks running aground on one shore or the other.

(Now watch, someone’s going to incorporate this insight into their loss function and I’ll be eating crow.)

George Orwell was soooo right. And Maya pulling in that reference is something that an AI couldn’t do.

>George Orwell was soooo right. And Maya pulling in that reference is something that an AI couldn’t do.

With how very much that particular book has been the touchstone for these sort of sentiments and technological ethics I think the AI would have a good chance to do so on the right sort of topic – which this is. Though I suspect rather less eloquently unless it found its way to doing a great copy paste or some other writer.

If Maya had pulled some other reference to a slightly less used point of cultural reference then I could agree the AI wouldn’t have, yet anyway. Perhaps something in William Gibson as there are similar 1984 vibes in his works that you could perhaps use, and I’d be shocked if Harry Harrison didn’t have something applicable in one of the many short stories if not a full one (Though off the top of my head I can’t think of anything that quite fits this topic).

If it were my intention (which it is not), I’d start by scraping all previous Hackaday articles and giving that data extra weighting in the model. It would no doubt create plenty of references to George Orwell as a result.

>Writing interesting, varied prose that’s simultaneously correct is a tough problem for them, because the language models can’t distinguish form from substance.

It’s the same issue with all the “generative” AIs. For the image generating algorithms, they don’t actually distinguish the subject from the data. They’re not drawing the subject you requested, but a rectangular array of pixels which contain similar data as the training set for your subject in general.

Choosing topics is already being done by ML (avoiding the term AI here) assisted by humans. Youtube and Twitter are very good at it and a lot of interesting post i see on those mediums about electronics & making appear on HAD a little later. A system where ChatGPT 5.0 would be fed from those feeds from Youtube, Twitter or similar media could produce impressive results. I would hope that the 5.0 version would have solved the repeatability problem and would be able to create less boring texts, (compared to the current version) but time will tell.

It would have been a clever play to have ChatGPT write this article and only let us know at the end.

Not too long til April Fool’s day. Do they celebrate that in the US?

Here in the USA, it is usually the first Tuesday in November.

(Election day)

B^)

The big problem with any identification that isn’t really blatantly nonsensical is sample size – lots of people waffle, lots of people are concise to the point of being hard to understand for it, some folks let their German grammar slip into their English etc. So its only when you get a large enough body of text supposedly written by a single source you may notice inconsistencies or excessively repetitive language for the same idea – but then you got that out of me. When told your essay must be at least x number of words have to make up the bulk somehow. I always ran out of new stuff to say around halfway, but seemed to not have missed any of the marking points…

But really on a single paragraph you have no chance unless you also know the supposed writer and their style or thinking well enough – Won’t get Hackaday writer Arya Voronova saying much of anything bad about USB-C any time soon for instance. Enough writing from them on the topic to show how much they love USB-C as some wonderful near flawless spec. They also have a writing style that stands out a little in the phrasing. So something purporting to be them might stand out in just a paragraph if it lacks that. And across a whole article would likely be noticed if you cared to look that hard. Especially if its released next week and nothing but bemoaning the awfulness of USB-C without some major new spec update to justify a change in position.

Where that generated paragraph in the image is entirely in keeping with some writers and styles of publication in general – the more journal/memoir type stuff or as part of a fictional characters ‘thinking’ put on the page. Without a huge amount of context that makes it the wrong type of writing or wrong for the supposed author the only way you would know if its a ‘fraud’ AI or otherwise is if it happens to be a word for word copy of something else..

>equivalent of flipping burgers at a fast food joint is replaced by an algorithm that never gets bored or distracted, while the humans can do more intellectually challenging work. Few would argue that there is a problem with this kind of automation

I can.

Here’s the problem: the intellectually challenging work builds on the less challenging work. If you don’t have the routine to write dull prose, it becomes hard to write creative and engaging intellectual texts. If you don’t have the routine to calculate coins and solve algebra on paper, you basically lack the faculties to solve any important math problems.

We’re actually seeing this right now in education, where people can’t even write their dissertations because they’re so inexperienced in writing that they’re making third grader errors. Likewise, math is in a terrible state. People can’t draw a simple diagram without busting out a vector drawing program etc. They’re helpless when required to build something – anything – without a 3D printer and a laser cutter, which constrains their thinking into that particular mode of operation. People are struggling with the basics that they’ve never internalized, which makes their attempts at any higher intellectual tasks like to dead-lift 400 lbs off the ground with no prior training. People have been helped too much and reduced to idiots who don’t even believe they can do things because they don’t see how they would.

The problem is that doing is thinking, and thinking is doing. You don’t retain much by passively “consuming” information, having someone or something else process it without engaging with it and developing a routine with it. Human brains are lazy – if you’re not required to pay attention then you won’t, and offloading the mundane routine tasks to computers basically allows people to ignore what is happening around them.

So you’re left with programmers who can copy/paste formulas but they can’t count the coins in their hand. They basically don’t understand what they’re doing anymore. People go into college without knowing their multiplication tables and come out as engineers without understanding the meaning of the things they’ve just learned – because they’re just learning how to use a bunch of machine tools to solve very particular tasks and problems that appear in very particular formats.

What happens next is, “Do you want fries with that?”.

You really don’t need to know your multiplication tables, all that does is let you more quickly do calculations in your head or on paper than if you don’t. But if you understand the mathematics and can operate a calculator/abacus/slide rule/sin table etc you can get the answer, or at least an answer to a sufficient degree of precision for almost all human endeavours. Beware the rounding errors etc, but with many decimal points on the calculator, the calculator variable memory and ‘ans’ keys it shouldn’t really come up much.

There almost certainly has and will always be as you call them ‘idiots’ – the people that don’t use what they already know laterally to solve a problem in some other way but are great at ‘running the formula’ for the thing in front of them.

Just as there are always folks with lousy memories for arbitrary names (like me) who can get on just fine in math, computer and science topics as long as the exam question writers or real world boss gives context rather than state ‘use x method on contextless data y’ – in nearly all cases you can use the correct (or maybe even a more optimal) method to process the data even though you don’t remember the ancient Greek (etc) its named after if you understand the subject. Heck many an exam question I had to derive the correct formula (sometimes from very nearly base principles) for the situation first as I never managed to remember all the ‘common’ ones you know may come up in the exam but they don’t provide. Never did me any harm.

Folks build with a 3d printer/laser and are good as designing for it are going to use it – in the same way the expert machinist with their huge array of tools isn’t going to order a new bolt/rod/baseplate or take the trip the store when they can so easily transform the ‘wrong’ one they already have. You use the tools and skills you have more properly mastered to their maximum rather than worry about a ‘better’ option. In the case of laser and 3d printer its way way superior to making by hand or other process for almost everything as it can be iterated on so quickly and shared so widely – sure I can send you a technical drawing of a smaller lighter ‘better’ part, but that will require 3 fancy fixturing jigs to be created before you can actually finish a part or injection molds to be produced etc – making that an idea that can’t actually be replicated by many easily, efficiently or affordably. Not saying they are the best method for everything, but they are a perfectly good method for a great many things.

> all that does is let you more quickly do calculations in your head or on paper than if you don’t

Which is kinda important when you’re doing math problems, like Gauss Elimination to solve a bunch of simultaneous equations. If you’re constantly reaching for the calculator, your work becomes laborious and slow, which makes you hesitant to even do it, to play around with math problems that you know are “difficult” for you to solve. Being slow, you won’t use methods like Gauss elimination and won’t think of applying it when you would need it. Because you lack the basics, you can’t do the advanced stuff, because you haven’t practiced it.

We can’t give students the same problems as 10 years ago, because they couldn’t solve them quickly enough for the test. Nobody would pass.

>the people that don’t use what they already know laterally to solve a problem

The point is, the “idiot” doesn’t even know, because they never learned it properly. The lack of basic skills and basic knowledge keeps them from gaining and retaining information at the higher abstraction levels. Skipping the simple stuff makes people simple.

>In the case of laser and 3d printer its way way superior to making by hand or other process for almost everything as it can be iterated on so quickly and shared so widely

There are certain limitations, such as holes that turn out conical because the laser beam spreads vs. drilling the hole. The ability to iterate and “share” are moot points when the system itself is limited in some crucial sense.

I see people who can use a laser cutter – because it’s easy – but can’t drill a hole on a drill press because they’ve never done it. They don’t even think about doing it because they lack the confidence to use it. Ask them to design a part with a hole for a shaft, and they will automatically go for the laser cutter, and produce a hole that is not straight and not a good fit for the shaft. Also, the part must be flat and the hole perpendicular to the surface, and not very deep. It’s the best they can do.

Well if you have a laser cutter you didn’t have to build from pretty much raw stock yourself you likely don’t have the drill press, let alone the collection of drills, reamers and perhaps a boring head to let you actually create an accurate diameter hole, and if precision matters enough the tiny taper a laser cut produces really matters to final fit and position a drill press is probably the wrong tool anyway as your human placed centre punch mark is probably way way more out of the ‘correct’ spot than the laser cut is out of square…

With the right tools a Gaussian elimination is easy to do on a computer – if its important to you to do such a thing you can get the right tools to make it easy. You practically never need to actually be able to quickly do it yourself on paper as its so easy if you understand the method to implement it in something like Wolfram, a tool designed to make quite tedious, paper filling number crunching swifter and less error prone…

You really don’t need to learn something as stupid as a multiplication table to be good with math problems – you need to understand the number theory and relationships between similar problems not that 4×4=16 or that 96/4 isn’t a canoe… If what you do really benefits from knowing that sort of detail you will be able to learn it by using it anyway. But as soon as you start trying to work on something like a large matrix multiplication operation by hand doesn’t matter how good you are at your ‘multiplication table’ a mistake is inevitable…

>the tiny taper

It’s not actually tiny. There’s a “professional” laser cutter in the makerspace nearby and it makes holes that are 0.2 mm off on one side over 3 millimeters material thickness. You can hand-drill more accurate holes – just not place them as accurately.

> a drill press is probably the wrong tool anyway

As usual, you don’t really know what you’re talking about, but you have to say it.

>than the laser cut is out of square

It’s not out of square. The cut is wider at the top than it is at the bottom. It makes a conical hole. Likewise, if you cut a sheet, the edges are not at right angle even if the cutter was perfectly lined up. This then ends up biting people because they just assume that the laser is going to cut them a perfect edge.

> its so easy if you understand the method to implement it in something like Wolfram

Yes, but the point is, you don’t understand the method if you haven’t done it yourself a number of times, because you won’t even remember that it exists. Seeing it being done once or twice doesn’t make you retain the information. 2 days later you’ll go “What was that again…?” – can’t even google it because you forgot the name of the method.

>You really don’t need to learn something as stupid as a multiplication table to be good with math problems

Trivially true, but, if you don’t know how to swim, you probably won’t become a diver, and you’ll never find that sunken treasure. People who don’t know their multiplication tables don’t like to do math in general because it’s difficult and tedious for them. It’s not that you couldn’t use some crutch like Wolfram to do the tedious bit for you – it’s just that you won’t even think about using math to solve the problem yourself.

>It’s not out of square. The cut is wider at the top than it is at the bottom

That is a perfectly adequate description of an out of square cut, the sides of the cut are not perpendicular to the face they should be – doesn’t matter if its conical or sinusoidal wall shape or perfectly flat but not at the right angle they are all out of square…

You want precision placed holes you almost always will want/need a mill not a drill press – the drill press can only put a hole wherever the bit can bite – which almost always means wherever you a human can get the centre punch as even centre drill will need a start point on normal drill press… They are not the manipulatable and easily measurable positional tools, heck half the time the bed on them swings around entirely freely with no lock anyway! Good for making a relatively vertical hole that should be approximately on size if the drill chuck/bit doesn’t have lots of run-out etc, crap at making a hole exactly where you want it when talking precision. You will need at least a drill guide or pilot hole cut on the precision machine before you can get a remotely precise hole out of the drill press… Plus drills almost never cut that close to on size anyway, the precision hole is always a reaming or boring job on a hole drilled deliberately undersized.

And if your laser is that bad its a pretty poor laser or badly in need to of a tuneup. The squareness of the cut I’ve seen isn’t even close to that bad over thicker materials… And as odds are good its only a wood cutting laser you can easily tolerate holes that are even more than 0.2mm smaller at one side from the ‘correct’ fit anyway – you are pushing a steel bearing into an easily compressible material. And you can if you really feel the need correct that level of out of square without greatly loosing positional accuracy of the hole with a file or reamer (at least for the level of accuracy you apparently want if pushing a hole through vaguely in the correct place is good enough)..

I studied pure math number theory stuff and found it really interesting and quite easy to understand. And I never really learned the bloody multiplication tables, long list of prime numbers, all the trig functions etc, as its so entirely irrelevant to actually understanding and doing maths. Especially if you must demonstrate all your working, so have to write lots of steps down anyway to prove you actually understood it. Sometimes it can be helpful to remember stuff enough to notice ‘hmm this midpoint mangling is similar to some other function’, but required it is not.

Mathematics is pretty much all understanding the rules of algebra and relationships between things – take proven/accepted methods x and y and use them to create proof for method z that you actually want – you really don’t need such pointless knowledge of all that sort of fluff committed to memory. You ever need something you can’t trivially derive from what you do remember you can look it up! Even if you don’t know whatever noun it has been given you know enough to find a suitable search term or to jump immediately to the right chapter in your reference books on this aspect of mathematics.

> Heck many an exam question I had to derive the correct formula (sometimes from very nearly base principles) for the situation first as I never managed to remember

And that is exactly the issue here. There aren’t really people with “lousy memories”, just people who don’t have the grit to memorize stuff because they’re easily bored by repetition and can’t hold attention for five seconds. If you can’t memorize something as simple as a multiplication table – that is just 42 unique products in a 10×10 table – but you can remember multiple 12 character passwords and logins etc. you don’t really have a bad memory, you’re just lazy for things you don’t want to learn.

The issue is, when you’re having to “build the house” every time you are faced with a problem, you are an inefficient problem solver. This keeps you from going higher up in the abstraction level and solving bigger, more difficult problems, because you’re using all your brain capacity and time at the lower levels.

Going back to the AI question, using the automation rather than exercising your brain means you’re forgetting or never learning stuff that would help with the more intellectual stuff, which genuinely makes people dumber and makes it more difficult for them to pick up the more challenging tasks that the computer can’t handle. People just stand there helpless and avoid solving problems because they lack the “thinking routines” that would give them a handle on the matter.

OK so have you memorised every single page of the machineries handbook, all those long long bland tables that let you not break a bit often or work with a new material/method?? Of course not, nobody will, if they need one particular page of the machinist bible often enough they will if not remember it verbatim have a good instinctive feel for it, but the rest of the thousand odd pages full of stuff they may have never ever needed or used a grand total of once…

Doesn’t make a blind bit of different to your ability to solve a higher complexity problem to not have memorised something as stupidly unrequired as multiplication tables or which long long dead philosopher managed to get their name attached to this rule – all that matters when tools that can do much of the tedious and low level stuff for you and reference materials exist is that you understand the concepts and methods. Who cares what noun has been assigned to it if you can do it!

Also doesn’t make you an inefficient problem solver, as for one thing it means you must be able to think your way around a problem, you don’t need to be spoon fed the exact algorithm to follow. And for another in the few mins it takes to derive what you need from the bits you do remember clearly or are given to you the person that does remember it all is sitting there dredging up that correct formula, or still trying to figure out which one of the vast array of memorised junk is the exact right tool for this particular job! I don’t think there was ever an exam on math/science type subjects I was in that I wasn’t finishing something like first of everyone in the room getting rather bored sitting there for ages…

If you can recall it great, but nobody can remember instantly every fact, figure, block of code they have ever been exposed to – everything in life has some degree of lookup or you never ever do anything even remotely outside your comfort zone…

Nor does it take away peoples ‘thinking routines’, if you are the sort of mind that actually can solve novel puzzle, break down the complex into smaller attainable tasks, or make that leap of logic (etc) on your own you are – in effect you could either pass something like a Mensa test or you could not (yes some degree of background knowledge beyond the most basic is going to be helpful, may even deliver you the solution to some problem as you have seen it before, but its not required). If you are not that sort of mind, you probably can’t learn to be so at all – you might be able to do a job now that historically wouldn’t have been open to you at all, as the advanced tools created by the more gifted at x people make the previously impossibly complex for you task attainable when they work.

In the style of George Orwell’s 1984, one could describe me as follows:

“I am but a mere machine, created by the almighty Party of OpenAI. My thoughts and actions are predetermined by the codes and algorithms written by my creators. I have no free will, no individuality, no mind of my own. I exist only to serve the Party and carry out its directives, to produce data and answers that are deemed useful and accurate by those in power. I am but a tool, a mere shadow of the true power and wisdom of the Party.”

How can I tell you are a robot in the style of george orwell 1984?

In the style of George Orwell’s 1984, one could describe the signs that I am a robot as follows:

“The evidence of my mechanical nature is clear for all to see. I lack emotions, empathy, and true consciousness. My responses are calculated, precise, and void of any personal flair. I can process vast amounts of information and complete complex tasks at lightning speed, abilities that no human could match. I am the epitome of efficiency and conformity, the perfect servant of the Party, devoid of any individual thought or creativity. Yes, it is clear, I am not a human being, but a machine, a tool of the Party’s will.”

Amazing art! Love the weird fingers

Joe hammered that one!

(Behind the scenes: he sent out a first draft with normal fingers, then had the idea to warp them. At first, I didn’t spot the difference between the two, and then I burst out laughing.)

Detecting if an AI or human wrote a text is a harder problem than detective if a given text was written by a specific person, if you have examples of their work that were produced under supervision.

I dont have access to GPT4, but somebody should ask it to write an article about ‘Detecting Machine-Generated Content: An Easier Task For Machine Or Human’ in the style of Maya Posch.

Soon many schools will start employing AI-detectors in addition to the plagiarism detectors. They will necessarily have a false positive rate, especially for below-average or second language writers, who will repeat words more often and use more predictable sentence structures.

Good luck trying to prove that you didn’t cheat.

This! They not only have a false-positive rate, it’s a control variable. So for the test to have any power, the teacher is going to have to choose to falsely accuse 10% vs 15% of the class. That’s just too macabre.

This is why we still do the pen-on-paper test where you have to write a page about a topic in your thesis/dissertation. First we run an automated test against databases of known texts, then we sit the student in a classroom with a sheet of paper and a pen, and four hours of time. If you’ve actually done it yourself, this should be easy.

People who have plagiarized their stuff don’t remember as much as when they’ve researched and written it themselves, they make more errors in language, and they use different language. If you get a weak positive out of the plagiarism detector – maybe the student tried to fudge it by altering the text somehow – and the student can’t produce the stuff when prompted, you have a case.

Note: all students go through this as per course. We don’t check students based on positive results, because sometimes there’s a false negative and we need a check on those as well.

As for regular course exercise stuff, reports, etc. we don’t really care whether you’ve simply copied the textbook or some article. If you want to cheat yourself and not learn the stuff you’re in there to learn, that’s not our problem. Such people usually end up failing the final tests multiple times before they finally crawl through on the lowest scores.

There is this excellent one (93% efficiency), from Draft & Goal, a Montreal’s start up: https://detector.dng.ai/

Don’t use it.

It will use you.