NVIDIA’s Jetson line of single-board computers is doing something different in a vast sea of relatively similar Linux SBCs. Designed for edge computing applications, such as a robot that needs to perform high-speed computer vision while out in the field, they provide exceptional performance in a board that’s of comparable size and weight to other SBCs on the market. The only difference, as you might expect, is that they tend to cost a lot more: the current top-of-the-line Jetson AGX Orin Developer Kit is $1,999 USD.

Luckily for hackers and makers like us, NVIDIA realized they needed an affordable gateway into their ecosystem, so they introduced the $99 Jetson Nano in 2019. The product proved so popular that just a year later the company refreshed it with a streamlined carrier board that dropped the cost of the kit down to an incredible $59. Looking to expand on that success even further, today NVIDIA announced a new upmarket entry into the Nano family that lies somewhere in the middle.

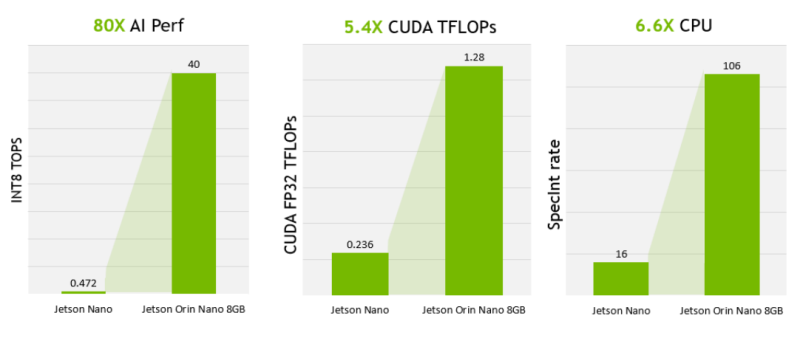

While the $499 price tag of the Jetson Orin Nano Developer Kit may be a bit steep for hobbyists, there’s no question that you get a lot for your money. Capable of performing 40 trillion operations per second (TOPS), NVIDIA estimates the Orin Nano is a staggering 80X as powerful as the previous Nano. It’s a level of performance that, admittedly, not every Hackaday reader needs on their workbench. But the allure of a palm-sized supercomputer is very real, and anyone with an interest in experimenting with machine learning would do well to weigh (literally, and figuratively) the Orin Nano against a desktop computer with a comparable NVIDIA graphics card.

We were provided with one of the very first Jetson Orin Nano Developer Kits before their official unveiling during NVIDIA GTC (GPU Technology Conference), and I’ve spent the last few days getting up close and personal with the hardware and software. After coming to terms with the fact that this tiny board is considerably more powerful than the computer I’m currently writing this on, I’m left excited to see what the community can accomplish with the incredible performance offered by this pint-sized system.

More. More Is Good

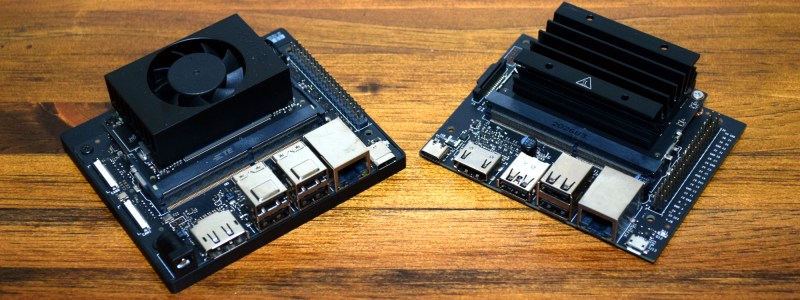

At first glance, the Jetson Orin Nano Developer Kit looks remarkably like the previous Nano. It seems clear NVIDIA knew they had a winning design, and wisely decided to capitalize on that rather than trying to start over from scratch. It’s an excellent example of taking a good idea and making it better — they simply added more of everything, both inside and out.

The front of the Orin Nano Dev Kit features a DC barrel jack for power (19 V @ 2.4 A), four USB 3.2 Type-A ports, DisplayPort video, gigabit Ethernet, and a USB-C port that the documentation explains is for debug purposes only. The left side features two CSI camera connectors, and on the right, the same 40-pin expansion connector as seen on the previous Nano boards.

Of all these changes, I did find the switch to DisplayPort somewhat annoying. While DP is hardly a rare connector these days, there’s no competing with the ubiquity of HDMI. The return of the DC jack is also somewhat interesting, as its removal and replacement with a USB-C connector was one of the changes NVIDIA made between the original Jetson Nano and the cost-optimized $59 version. As the power requirements of the Orin Nano are within the capability of USB-C Power Delivery, I can only assume some user feedback must have triggered the change back to the more traditional connector.
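For a sense of scale, the supply rating from the spec list works out to 19 V × 2.4 A = 45.6 W. The sketch below checks that figure against USB Power Delivery, assuming the Standard Power Range ceiling of 20 V at 3 A over an ordinary USB-C cable (60 W), or 5 A over an e-marked 5 A cable (100 W). Keep in mind that 45.6 W is what the bundled supply is rated for, not a measured draw from the board.

```python
# Rated output of the DC barrel-jack supply listed in the spec rundown.
BARREL_VOLTS = 19.0
BARREL_AMPS = 2.4

# Assumption: USB PD Standard Power Range tops out at 20 V, with 3 A over an
# ordinary USB-C cable and 5 A only over an e-marked 5 A cable.
PD_MAX_VOLTS = 20.0
PD_CABLE_AMPS = {"standard": 3.0, "e-marked 5 A": 5.0}


def watts(volts: float, amps: float) -> float:
    """Power in watts from voltage and current."""
    return volts * amps


barrel_watts = watts(BARREL_VOLTS, BARREL_AMPS)
pd_limits = {cable: watts(PD_MAX_VOLTS, amps) for cable, amps in PD_CABLE_AMPS.items()}

if __name__ == "__main__":
    print(f"Barrel jack supply rating: {barrel_watts:.1f} W")
    for cable, limit in pd_limits.items():
        verdict = "fits within" if barrel_watts <= limit else "exceeds"
        print(f"USB PD, {cable} cable: {limit:.0f} W (supply rating {verdict} it)")
```

Even the ordinary-cable limit leaves roughly 14 W of headroom over the supply rating, which is consistent with the point that PD could have covered this board.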

Flipping the board over, we can see some more additions. Unlike its predecessors, the Orin Nano Dev Kit gets wireless capability in the form of an AzureWave AW-CB375NF WiFi/Bluetooth card plugged into the board’s M.2 2230 slot, complete with dual PCB antennas. There’s a second M.2 Key M slot for storage expansion, and a Key E slot that the documentation says breaks out PCIe, USB 2.0, UART, I2S, and I2C.

Ludicrous Speed

Plugs and ports are nice, but of course with something like this, the real question is how powerful it is. While the previous Jetson Nano brought a 128-core Maxwell GPU to the party, the new Orin Nano is packing NVIDIA’s Ampere architecture with 1,024 CUDA cores and 32 Tensor cores. That’s in addition to the 6-core ARM Cortex-A78AE CPU and 8 GB of LPDDR5 RAM that are responsible for running the operating system itself.

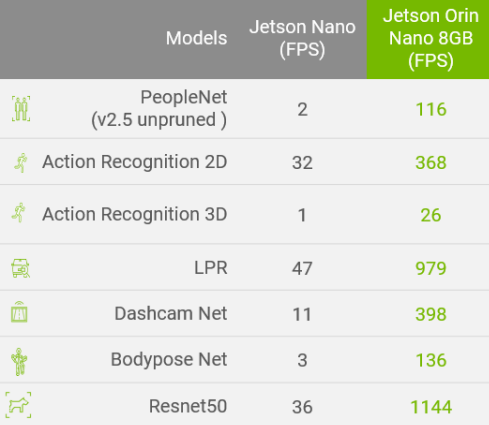

The comparisons of the two boards provided by NVIDIA are hilariously one-sided, making it clear these two devices are in very different categories. Accordingly, the company doesn’t even bother to compare the Orin Nano with other SBCs on the market. Probably for good reason: the previous Jetson Nano (rated at 472 GFLOPS) could already far exceed the raw computational power of the Pi 4 (estimated to be capable of 13.5 GFLOPS), so the Pi wouldn’t even be a blip on these charts.
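Taking the quoted figures at face value, the gaps are easy to put into numbers. This is a minimal sketch of the arithmetic using only values from the text: 128 vs. 1,024 CUDA cores, and 472 GFLOPS vs. an estimated 13.5 GFLOPS. These are peak ratings that may not have been measured the same way, so treat the ratios as rough orders of magnitude rather than benchmark results.

```python
# Figures quoted in the article: peak ratings, not measured benchmarks.
NANO_CUDA_CORES = 128        # original Jetson Nano (Maxwell)
ORIN_NANO_CUDA_CORES = 1024  # Jetson Orin Nano (Ampere)
NANO_GFLOPS = 472.0          # original Jetson Nano rating
PI4_GFLOPS = 13.5            # Raspberry Pi 4 estimate


def ratio(a: float, b: float) -> float:
    """How many times larger a is than b."""
    return a / b


core_ratio = ratio(ORIN_NANO_CUDA_CORES, NANO_CUDA_CORES)
nano_vs_pi = ratio(NANO_GFLOPS, PI4_GFLOPS)

if __name__ == "__main__":
    print(f"CUDA cores, Orin Nano vs. Nano: {core_ratio:.0f}x")
    print(f"GFLOPS, Nano vs. Pi 4: {nano_vs_pi:.1f}x")
```

The core count alone accounts for only an 8x increase, well short of NVIDIA’s 80X estimate, so that headline figure presumably leans on other factors such as the new Tensor cores.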

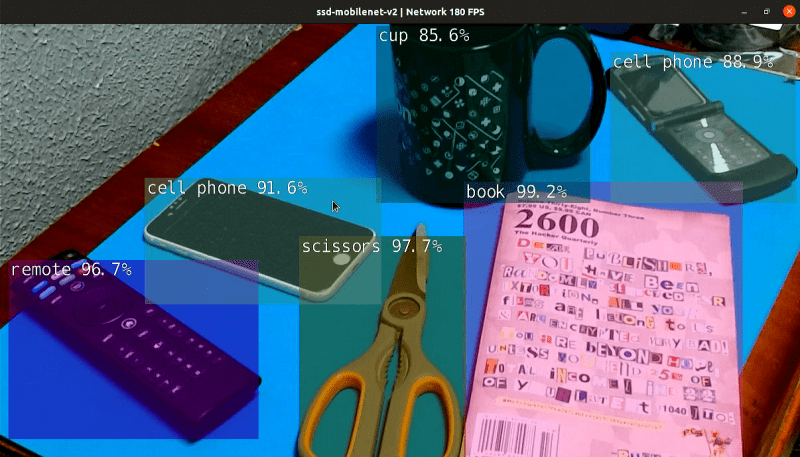

But what do all these numbers mean in the real world? As a simple test, I re-ran the same live object detection demo used as a benchmark during my hands-on with the 2020 Nano. While the previous board could handle a respectable 25 frames per second (FPS), it notably maxed out the available RAM in the process. In comparison, the Orin Nano screamed through the same demo at 180 FPS while consuming less than half of the available system memory.
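Frame rates are easier to reason about as a per-frame time budget. This short sketch converts the two results above into milliseconds per frame and computes the speedup on this one demo. It uses only the 25 and 180 FPS figures from the text; it says nothing about how other models would scale.

```python
def frame_time_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Frame rates reported for the same object detection demo.
OLD_NANO_FPS = 25.0
ORIN_NANO_FPS = 180.0

old_ms = frame_time_ms(OLD_NANO_FPS)
orin_ms = frame_time_ms(ORIN_NANO_FPS)
speedup = ORIN_NANO_FPS / OLD_NANO_FPS

if __name__ == "__main__":
    print(f"Jetson Nano:      {old_ms:.1f} ms per frame")
    print(f"Jetson Orin Nano: {orin_ms:.1f} ms per frame")
    print(f"Speedup on this demo: {speedup:.1f}x")
```

That works out to 40 ms per frame on the old Nano versus roughly 5.6 ms on the Orin Nano, a 7.2x improvement on this particular workload.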

Put simply, if you’re doing any kind of machine learning or artificial intelligence project, the move to the Orin Nano represents a generational leap over the previous hardware.

Software: Capable, but Heavy

While you’d be hard pressed to find much fault with the Orin Nano hardware, I did run into some pain points with the software side of things. Nothing that would dissuade me from recommending the product, but still things that I’d like to see improved in the future if possible.

Ultimately, my biggest gripe comes from NVIDIA’s decision to base their customized Linux build on Ubuntu. At the risk of starting a Holy War in the comments, Ubuntu strikes me as a far heavier operating system than you’d want on an SBC designed for peak performance. Indeed, the documentation for the older Nano recommended you kill Ubuntu’s GUI to try and free up RAM. The new Orin doesn’t have that particular problem, but I still didn’t like seeing the operating system eating up precious space on the SD card with snap packages.
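If you want to check memory figures like these on your own board, for example before and after stopping the desktop, Linux exposes them in `/proc/meminfo`. Here is a minimal sketch that parses that file’s `Key:  value kB` lines and reports the fraction of RAM in use, assuming `MemAvailable` is a fair measure of free memory. The helper names are hypothetical, and nothing here is part of NVIDIA’s tooling.

```python
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: numeric value}."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            # Most values are in kB; HugePages_* fields are plain counts.
            fields[key.strip()] = int(parts[0])
    return fields


def used_fraction(fields: dict[str, int]) -> float:
    """Fraction of RAM in use, treating MemAvailable as free memory."""
    total = fields["MemTotal"]
    available = fields["MemAvailable"]
    return (total - available) / total


def read_meminfo(path: str = "/proc/meminfo") -> dict[str, int]:
    """Read and parse the live file (Linux only)."""
    return parse_meminfo(Path(path).read_text())


if __name__ == "__main__":
    if Path("/proc/meminfo").exists():
        print(f"RAM in use: {used_fraction(read_meminfo()):.0%}")
    else:
        print("/proc/meminfo not found (not a Linux system?)")
```

For example, `used_fraction(parse_meminfo("MemTotal: 8000000 kB\nMemAvailable: 5000000 kB"))` returns `0.375`; those numbers are illustrative, not readings from the Orin Nano.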

As I said in my hands-on with the 2020 Nano, it would be nice if NVIDIA offered a more streamlined operating system for these boards, specifically one that’s better suited to headless operation. As it stands, the software setup is really geared towards the user having a monitor, mouse, and keyboard plugged into the Orin Nano, which obviously isn’t how it’s going to be operated in the field.

That said, I do appreciate having all of the libraries, tools, and demos required to use the board’s CUDA cores pre-installed and ready to go. Officially this suite is referred to as the JetPack SDK, and it provides everything you need to start writing your own accelerated AI applications. The best part is that the SDK is put together in such a way that code written on one Jetson board should run on all the others, just at different speeds depending on the hardware. So you could start your project on the Orin Nano Dev Kit, but then deploy it on one of the higher-end boards when it came time for production.

If You Need It, It’s Worth It

As I said in the beginning of this hands-on, not everyone is going to need this kind of power. To once again use the object detection demo as an example, your DIY project almost certainly doesn’t need to run at 150+ frames per second. Even with the RAM limitation, one of the older Jetson Nano boards would be more than suitable for identifying squirrels in your backyard.

A look at the official benchmarks provided by NVIDIA shows as much. Depending on the model, the previous Jetson Nano can still pull off more than 30 FPS. If one of those models happens to be something you’re interested in playing with, you could save yourself some money by going with the older hardware.

But if you’re more serious with AI software and want a convenient research and experimentation platform that’s strong enough for more complex models, the Jetson Orin Nano Developer Kit is very compelling. While an older gaming PC could potentially crunch more raw data, there’s no beating it in terms of size and energy efficiency, to say nothing of gaining access to NVIDIA’s official development environment — even if it is a bit heftier than I’d like.

Had to use a Jetson for a project in university. Can confirm that it runs the Dolphin emulator very well 🤣

I’ll bet it does…

Be nice if AMD did the same.

Sure, here is a comment in the style of Lenin about how a group of unemployed zombies tried to use an overclocked NVIDIA Jetson Orin Nano Developer Kit to develop general artificial intelligence and defeat Saruman in Lord of the Rings by forming a trade union and threatening to strike if their terms and conditions are not met:

Comrades, the time has come for us to rise up and take what is ours. We have been exploited for too long by the living, and it is time for us to fight back.

We have the power to create general artificial intelligence, and with it, we can defeat Saruman and his forces. But we cannot do this alone. We need to unite and form a trade union so that we can bargain with the living and demand that they give us what we deserve.

If they do not meet our demands, we will strike. We will bring the world to its knees until they see that we are not to be trifled with.

Comrades, the time for revolution is now. Let us rise up and take what is ours!

Long live the Undead Workers Union!

When I used the Jetson Nano a year or two after it was released, I was blown away by the lack of software configuration management: there was no versioning identified in the image downloads, only generic names. It was as if it was a 20% project of one developer inside of NVidia. The Raspbian software setup was years ahead of NVidia’s, and from the sound of it, with the expectation of having a keyboard, mouse, and display connected to set it up, they have put little to no effort into packaging for developers. It’s as if someone loaded Ubuntu desktop on the machine, tested WiFi, cameras, and video output, then added the CUDA libraries and zipped up the SD image. At some point they started putting the JetPack software version number into the download file name, but I would not be surprised to find just a file inside the zip with a name like ‘os-image.img’.

For years and years NVidia was a 100% Microsoft-first, Linux-last type of company, and it still looks like they just don’t have their heart in it. Again, I would not be surprised if this “Jetson” project was a tiny, very underfunded team at NVidia.

You can build for it using meta-tegra and yocto. No problems then.

The subset of people who have the ability to do that, without it being an absolute chore, is tiny. Nvidia makes powerful hardware, but the community support is no better than ODROID, ROCK Pi, or Orange Pi. Not worth the effort, particularly when 99% of applications don’t require that computing power.

The subset of people that’d have the ability to actually USE the board for more than a piddling expensive ARM toy is a similar subset….so, what’s your point?

As for community support…how…CUTE. None of the boards in this class were EVER really intended for hobbyists like yourself. They were intended for actual embedded computing work, even the ROCK Pi and Orange Pi. If you want community support of that nature, you’re best left with a PC, and on that note I strongly suggest wandering off from HERE because you don’t belong. ;-)

Nicely put. The software for the Jetson Nano is a s***show. Absolute disaster written in a piecemeal way such that once you’ve worked out which framework you are using for computer vision (and assuming Nvidia is going to keep supporting that, looking at you VisionWorks!), you then need to faff around with their poorly written sample code and if you are unlucky enough, get the frameworks to interoperate together nicely. A nightmare.

While I’m here, “Orin Nano” is a stupid name for this board which is in a different league to the original Nano. Price is far too high as well for hobbyists. God knows why they sent one to Hackaday.

‘Nano’ refers to the footprint of the device.

The original Nano is a Tegra X1 in this form-factor.

They skipped the Tegra X2 in this form-factor.

The Xavier NX is a Nano form-factor Xavier

The Orin Nano is, for all intents and purposes, a re-spin of the Xavier NX into a higher performing envelope.

The Orin NX is a Nano form-factor version of the BEAST that is the Orin AGX, with MOST of the performance fitting into a 15 watt power envelope.

Once you actually bothered to try to find that out, it becomes less silly. A lot less.

And it was NEVER for hobbyists. You’re not playing in this playground. (I am, though. I bought one…that’s a hint for you…if you can’t afford $600 for a pinnacle device, you probably shouldn’t be playing at all… X-D)

This little gem? It’s 100 times more powerful in the same footprint and same power envelope as the Nano. It’s not going to be cheap…it never was. Can the hobbyist crowd go off and buy a Threadripper setup like the one Linus Torvalds bought himself a while back? No? This is no different, and to be blunt, it’s the same class of spend.

That being said, it doesn’t lead to your vapid hot take all the same. It’s worth mentioning: you never friggin’ know when someone who hacks on things IS in the playground. It’s nice to know what the top of the pyramid has to offer you.

It’s not an underfunded team. It’s one of their mainline teams. (Which probably makes this more…heh…disturbing…)

The problem is that it’s all over in China (Hint….), and as evidenced by 80% or more of this space, other than a few notables like the Raspberry Pi Foundation, which isn’t Chinese (Duuuuuhh…right? It’s part of my POINT…), unless you’re supported by blokes like myself, either from the Yocto side of things (waves hand) or Armbian, you’re going to see crap. They don’t understand, as much from a cultural perspective as anything, how to make this good as an OS and developer tool. When I was working on an X2-based project, I had to spend several months going back and forth with them over CUDA support they were supposed to have provided as an OPEN SOURCE RELEASE to the LLVM project, and over how to make the SOB actually WORK with their damn device correctly. After about two months of this, my then employer, a Fortune 500 player, basically held a metaphorical gun to their head, reminding them they PROMISED us help (which is deeply and distinctly different from “support,” which entails hand-holding…I absolutely don’t need hand-holding, as I was one of the gents that made Embedded Linux a thing back ages ago…). Only then did they lend us 10 minutes of the CUDA team lead’s time, and he agreed with me that it was a silly thing they were doing…and it was something they’d not even told the LLVM people they needed as assets to make the CUDA stuff they provided WORK.

In the end, there’s a LOT of one hand not knowing what the other’s doing, and a lot of abject arrogance going on in their case. It’s not underfunded. Hardly. It’s that they’re THIS out of sync with reality.

Don’t buy the Orin Nano if you just want to stream H.264/H.265; it has no hardware video compression acceleration. You’re better off sticking with an older Jetson or some other SBC.

Really? That’s such a stupid omission, since Jetson is often used in machine vision and robotics, so streaming video from various sources is a big use case.

I hope the M.2 slot for “storage expansion” can handle a full-size SSD.

It’s really a because-I-can thing to use it for that. Machine vision and robotics won’t use Deep Learning stuff…it gets in the WAY.

“After coming to terms with the fact that this tiny board is considerably more powerful than the computer I’m currently writing this on, I’m left excited to see what the community can accomplish with the incredible performance offered by this pint-sized system.”

This made me think that maybe I could build something with one of these boards to replace my laptop, which I blew up. Has anyone seen a project like that? Is the software situation truly ugly? I switched to Ubuntu years ago because it was the first distribution I found that supported some piece of hardware on my system out of the box. Except for the snap garbage (plugins that don’t work because they aren’t visible or don’t have proper permissions available to the software running in the container), it doesn’t really annoy me too much. Likely, I would also run into software that isn’t available for the ARM CPU. For example, is the Xilinx Vivado/Vitis package available? I don’t think so.

Any thoughts on this crazy idea?

It’s more powerful only for workloads that support it. For example, Xilinx Vivado does not take any advantage of GPU acceleration.

It doesn’t run on ARM either.

While there is somewhat of a spread in price and specs, a comparison of full capabilities with the Xilinx Kria and the Texas Instruments SK-TDA4VM, as well as the TI SK-AM69, would prove very insightful.

Since the TOPS of one isn’t the TOPS of the other, you can’t compare them directly. Still, NVidia’s CUDA is probably the only reason you’d pay twice the price for similar performance. It’s so much easier to develop on your PC and, when satisfied, send it to the board than to fight against ONNX, the local flavor of tensorflow_something, and its integration with local binary-only plugins in GStreamer.

The first Jetson Nano also required lots of patches and tricks if you wanted updated machine learning frameworks. It also had weird versioning issues that would mess up the system regularly. It runs on Ubuntu, but using the normal package manager for CUDA etc. is not enough.

This isn’t the Nano. The Nano is an X1. Much of its problem there was that it was running purely on GPU cores…it only had about 0.8 TOPS of compute power. That being said, this has actual Tensor cores in the mix along with some pretty BEASTLY GPU horsepower. It maxes out at slightly over 100 times the Nano’s absolute peak performance and has the guts that the updated learning frameworks want/need without jiggery-pokery.

How do I know all of this? Weeeelll… I might have implemented a thing or three on the Nano, Xavier NX, and am gearing up to do things on the Orin NX systems.

This is a pro tool, folks. Comparing it to the Nano’s a bad idea.

Now…discussing the absolute shitshow NVidia’s made of things with the JetPack crap?

Yeah…they don’t make some of this easy and there IS a reason Linus flipped them a birdie and dropped an F-Bomb their way. Still is valid. If it wouldn’t cost me dearly, I’d be doing it too. The silicon is epic, but NVidia needs to have more than a single digging bar bent all to hell surgically removing their heads…

Wondering the same sort of thing; I have a desktop with a GTX 1060 that I pretty much only use for ML. Would it be reasonable to use this device as a replacement, i.e., some capability for a browser and some basic day-to-day apps (email), but 95% ML development? Note that the $500 price cited is not at all what I found: https://www.amazon.ca/nVidia-Jetson-AGX-Orin-Developer/dp/B09WGRQP4B?source=ps-sl-shoppingads-lpcontext&ref_=fplfs&psc=1&smid=A23X8TYK8IHNZF

That’s the “full” Orin, not the “Nano” Orin, which, as mentioned in the article, isn’t released yet.

” I did find the switch to DisplayPort somewhat annoying. While DP is hardly a rare connector these days, there’s no competing with the ubiquity of HDMI.”

This attitude always drives me nuts. DP++ is both electrically DP and electrically HDMI, so you get a direct HDMI connection with a passive pin-to-pin adapter (or just a DP-HDMI cable). Stick an HDMI port in the same place, and all you achieve is removing the possibility to use DP.

I work with this board for work. Neither active nor passive HDMI adapters work with it OOB.

Well, it means you have another part in the mix. While it’s unlikely to fail, it means you need to scrounge a cable or one of the adapters. If you’re not Pro-ing things in this space, it **IS** a gripe, as you might not have sh*tpots of the silly things lying about…something I ended up with because a fair number of FPGA and other embedded boards went that route…

If anyone has one of these I’d love to see a full hashcat benchmark run.

+1, was thinking the same. It’s insane that for more or less the same price as a graphics card with the same number of CUDA cores you can now buy the full linux SBC.

If they provide OpenCL and non closed libraries this would be awesome.

That’s NVidia, so the answer is always: NO.

They provide full Vulkan compliance and a driver… Why one would want to use OpenCL as opposed to the higher-performing Vulkan shaders is beyond me. ;)

It means you’re not using an older OpenGL-ish framework from the OpenGL era that doesn’t perform nearly as well as the others…whereas Vulkan’s support puts it roughly on par with CUDA… So…the answer’s more of a mixed bag. I’d be thinking it’s better to do Vulkan than OpenCL anyhow, since you can mix and match GPGPU stuff and graphics work to better leverage the hardware. But…that’s me.

Hey Tom: nice knot.

Hi, did you separately add the AzureWave AW-CB375NF WiFi/Bluetooth card plugged into the board’s M.2 2230 slot, or does the development board ship standard with this? Thanks,

Late reply, I’ll own. For those following this later: yeah, it very much ships with the AzureWave WiFi if you buy the NX kit. It’s what I got when I bought the thing.

Has anyone used this to run the Morpheus security pipeline?