[BioBootloader] combined Python and a hefty dose of AI for a fascinating proof of concept: self-healing Python scripts. He shows things working in a video, embedded below the break, but we’ll also describe what happens right here.

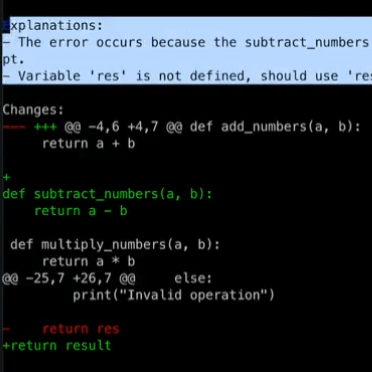

The demo Python script is a simple calculator that works from the command line, and [BioBootloader] introduces a few bugs to it. He misspells a variable used as a return value, and deletes the subtract_numbers(a, b) function entirely. Running this script by itself simply crashes, but using Wolverine on it has a very different outcome.

Wolverine is a wrapper that runs the buggy script, captures any error messages, then sends those errors to GPT-4 to ask it what it thinks went wrong with the code. In the demo, GPT-4 correctly identifies the two bugs (even though only one of them directly led to the crash) but that’s not all! Wolverine actually applies the proposed changes to the buggy script, and re-runs it. This time around there is still an error… because GPT-4’s previous changes included an out of scope return statement. No problem, because Wolverine once again consults with GPT-4, creates and formats a change, applies it, and re-runs the modified script. This time the script runs successfully and Wolverine’s work is done.
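The run, capture, fix, re-run cycle described above can be sketched in a few lines. This is a minimal illustration and not Wolverine's actual code: `run_script`, `heal`, and `toy_fixer` are hypothetical names, and `toy_fixer` is a hard-coded stand-in for the GPT-4 call so that the example runs offline.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Tuple


def run_script(path: Path) -> Tuple[int, str]:
    """Run the script in a fresh interpreter; return (exit code, stderr)."""
    result = subprocess.run(
        [sys.executable, str(path)], capture_output=True, text=True
    )
    return result.returncode, result.stderr


def heal(path: Path, suggest_fix: Callable[[str, str], str], max_fixes: int = 5) -> int:
    """Re-run the script until it exits cleanly; return how many fixes were applied."""
    fixes = 0
    while True:
        code, stderr = run_script(path)
        if code == 0:
            return fixes
        if fixes == max_fixes:
            raise RuntimeError("script still failing after max_fixes attempts")
        # Hand the current source and the captured traceback to the fixer,
        # then overwrite the script with whatever comes back.
        path.write_text(suggest_fix(path.read_text(), stderr))
        fixes += 1


def toy_fixer(source: str, stderr: str) -> str:
    """Hypothetical stand-in for the model: it only knows one specific typo."""
    return source.replace("reslt", "result")


BUGGY = """\
def add_numbers(a, b):
    result = a + b
    return reslt

print(add_numbers(1, 2))
"""

with tempfile.TemporaryDirectory() as tmp:
    script = Path(tmp) / "buggy_calc.py"
    script.write_text(BUGGY)
    crashed_before = run_script(script)[0] != 0
    fixes_applied = heal(script, toy_fixer)
    healed_source = script.read_text()
```

The cap on the number of fixes is a design choice of this sketch: without it, a fixer that never converges would loop forever.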

LLMs (Large Language Models) like GPT-4 are “programmed” in natural language, and these instructions are referred to as prompts. A large chunk of what Wolverine does is thanks to a carefully-written prompt, and you can read it here to gain some insight into the process. Don’t forget to watch the video demonstration just below if you want to see it all in action.

While AI coding capabilities definitely have their limitations, some of the questions they raise are becoming more urgent. Heck, consider that GPT-4 is barely even four weeks old at this writing.

Today I used GPT-4 to make "Wolverine" – it gives your python scripts regenerative healing abilities!

Run your scripts with it and when they crash, GPT-4 edits them and explains what went wrong. Even if you have many bugs it'll repeatedly rerun until everything is fixed pic.twitter.com/gN0X7pA2M2

— BioBootloader (@bio_bootloader) March 18, 2023

Very cool. As an aside: anyone recognize that editor and shell environment? It looks interesting.

Looks like a tmux-split terminal with themed vim and then a shell with powerline

Very cool indeed, but the AI doomsday clock just moved up by another minute, especially for those who find and fix more bugs than they create.

Supposedly, Marvin Minsky was asked if computers would ever be as intelligent as people. He responded “Yes – but only briefly” or words to that effect.

Our modern approach of deep reinforcement learning to create these agents is very different to the approaches Minsky was contemplating, though. It’s not a tower being assembled brick by brick faster and faster off to infinity, we’re simply driving up a pre-existing hill made of a dataset of pre-existing examples of intelligent input (i.e. our own.) At some point, they’ll reach the top of that hill and have nowhere else to go.

… true.

Looks very much like Sublime.

https://www.sublimetext.com/

Really great editor and what I currently use.

Hey, I’m the creator of Wolverine. The editor is Neovim and I’m running zsh with a powerlevel10k theme. I’ve been meaning to post about my neovim setup on twitter

I’ve always found the most difficult bugs to fix occur when it seems like everything is working correctly – this seems like a tool to make your bugs harder to detect in the long run and your code less readable (since Wolverine wrote it for you).

Imagine if I have a function that shifts the phase of a signal by up to ±𝜋 radians, and I accidentally input a value in degrees which surpasses 𝜋. This script could fix it just by clipping any value above 𝜋, which would resolve the error, but wouldn’t fix the underlying issue.
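To make that scenario concrete, here is a small sketch. Both function names are made up for illustration. The first shows the kind of patch that silences the error by clamping, the second refuses out-of-range input so the units mistake stays visible.

```python
import math


def shift_phase_clipped(phase: float, shift: float) -> float:
    """The 'healed' version: clamp the shift to [-pi, pi] so nothing ever fails."""
    shift = max(-math.pi, min(math.pi, shift))
    return phase + shift


def shift_phase_strict(phase: float, shift: float) -> float:
    """Reject out-of-range shifts instead of hiding them."""
    if not -math.pi <= shift <= math.pi:
        raise ValueError(f"shift {shift!r} is outside [-pi, pi]; is it in degrees?")
    return phase + shift


# Caller bug: 90 was meant as degrees, but the function expects radians.
wrong = shift_phase_clipped(0.0, 90)                  # silently becomes a shift of pi
intended = shift_phase_strict(0.0, math.radians(90))  # pi / 2, what the caller wanted

try:
    shift_phase_strict(0.0, 90)
    strict_raised = False
except ValueError:
    strict_raised = True
```

The clipped version runs without complaint and returns a result that is off by a factor of two, which is exactly the "harder to detect" failure the comment is worried about.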

That being said, a responsible developer should use this as a tool to more easily diagnose the errors and use the suggestion as a guide, not just trust it as autocorrect.

I wonder how it would do on a “fencepost” problem where there is no actual “error”.

You Mean “How the turtle ended up on the fence post to begin with?”

POOF! There go our jobs.

And the licencing situation for the resulting code?

MIT license, see it here: https://github.com/biobootloader/wolverine

No that is not what I mean, I mean the legal implications of using an AI to write/repair code, doesn’t it put the code into the public domain?

Why is this any different than using code generated by yacc?

Legal precedent, a recent court case in the USA with regard to the rights one can have over AI produced “art”. Anything that comes out of GPT or any other NN would also fall under that ruling, yes?

I’m sure there are programmers in Russia and Iran and North Korea who are very very keen on obeying US laws and would never think of breaking them.

@X You are misunderstanding. The outcome of that ruling was that the generator does not own the output without significant guidance. This implies that code generated using publicly sourced data is not subject to copyright at all.

Expect companies to argue otherwise, but it is interesting.

S O the source code used as input can definitively be considered significant guidance. Also, the result is a derived work, which is subject to copyright, and in the case of GPL-style licenses the resulting code must be licensed under the same license.

We are all going to die. Somebody is going to deploy something like that somewhere, and we are all going to die. Sooner than we would otherwise, I mean…

I started to use ChatGPT myself to better understand how it could be used, and I submitted prompts asking it to write small functions in Python, C, and C++. The prompts were complete but just 4 or 5 lines long, nothing too detailed. Well, it passed with flying colours. Sure, it was nothing so complex I could not do it myself, but it would have taken at least a couple of hours to get it correct, instead of 3 minutes. I also needed a couple of features I did not request initially, so I asked it to modify the result to include them, and that was done flawlessly. It’s not going to substitute for a real human developer, but if you are one and know how to describe what you need, it can make your life a lot easier and speed up your job a lot, even if only for prototyping. A nice new tool in the box. Also, if you want to familiarise yourself with another language, you can ask it to translate that code into the new language, so you learn fast how to do the equivalent and what functions or libraries to use.

Not long now and it’ll be creating its own, improved, version of itself.

That’s an interesting method of leveraging AI assistance! I guess the unfortunate thing is, it has to wait for a crash in order to start “healing” the code.

Python, just by its very nature, already has a huge problem with runtime bug discovery. Ideally, with a compiled language, as much as possible you want bugs to be discoverable at compile time. It’s not possible for ALL bugs to be caught by the compiler, of course, but it’s always preferable to shake out as many as possible before the code is ever even run. C/C++ compilers have traditionally been pretty poor at this, though they’ve been getting better. Rust, as a language, is designed to favor compile-time checks much more heavily, so its compiler is more geared towards program correctness checks. That’s one of the language’s chief attractions.

Python isn’t compiled. Heck it isn’t even pre-compiled, really, so much as pre-parsed. In most compiled languages, a misspelled variable name would never make it through the compiler and into a runtime. But Python’s byte-compiler doesn’t care. That misspelled name won’t be caught until that exact line of code is *EXECUTED*. Until the first time something actually goes down that code path — which, if it’s in a less-used subroutine or requires conditions that are infrequently met, could be days, weeks, or months later — the bug just lurks there, completely undetected.
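A short sketch of that lurking-bug behaviour, loosely modelled on the demo's misspelled return value (the function body here is an assumption, not the demo's actual code):

```python
def subtract_numbers(a, b):
    result = a - b
    if result < 0:
        # Typo on a rarely taken path: 'reslt' is never defined anywhere.
        return reslt
    return result


# Defining the function raised nothing, and the common path works fine.
ok = subtract_numbers(5, 3)

# Only executing the faulty line surfaces the bug, as a NameError.
try:
    subtract_numbers(3, 5)
    caught = None
except NameError as exc:
    caught = exc
```

Until some caller passes arguments that produce a negative result, nothing in the program reveals the misspelling.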

Type annotation, and static type checking using mypy, can help address that problem to some degree, which is why more and more Python developers are leveraging it in their code. Just making sure your types are consistent throughout the code will often uncover a lot of issues that would otherwise only manifest in the form of a runtime traceback. I am curious how it would look to have wolverine and mypy working in concert, and whether the script being tested was type-checked (and would it have passed?) (a) before, (b) after the bugs were introduced that wolverine repaired, and also (c) with wolverine’s changes applied.

Any language can be compiled, any language can be interpreted. It’s a non argument.

Late binding in a language is a feature, not a bug.

Static analysis is almost useless in a dynamic language, too much is happening at runtime.

Type annotation is a user crutch, the computer is perfectly able to infer types on its own and for sure it will choose better than you can.

If you want less buggy programs you have to stop making the human do useless work like choosing types and keeping track of heap objects. Ideally we should be writing code like SQL where you say what you want and let the compiler do all the work.

That… is an argument that makes me think you haven’t spent a lot of time working on actual coding projects with other humans.

Humans make mistakes. Frequently. And a lot of them can be caught, but only if we know what to look for in the first place.

Here’s a stupid example (of my own stupidity, too, so… you’re welcome): For whatever reason, I have a bad habit of reversing the arguments to Python’s isinstance() call: if I have some object ‘o’ that may or may not be an instance of some class ‘Foo’, instead of

isinstance(o, Foo)

I’ll write

isinstance(Foo, o)

Python’s /parser/ has no problem with that code. It’ll happily byte-compile and run a module with that line hidden somewhere, and nothing will come of it until the execution finally goes down that code path. (Again, possibly days, weeks, months down the road.) But as soon as it **does**, bam! TypeError exception.

Run that code through mypy, though, and the fact that the second argument isn’t a type or class name will set off alarm bells.
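The reversed-argument mistake can be reproduced in a few lines. `Foo` and `describe` are placeholder names for illustration. The early return stands in for a code path that is rarely reached.

```python
class Foo:
    pass


def describe(o: object) -> str:
    if o is None:
        return "nothing"
    # Arguments reversed: this should read isinstance(o, Foo).
    if isinstance(Foo, o):
        return "a Foo"
    return "something else"


# The early return never reaches the faulty line, so this call succeeds.
first = describe(None)

# Any other argument reaches it and fails at runtime with a TypeError.
try:
    describe(Foo())
    message = ""
except TypeError as exc:
    message = str(exc)
```

With the `o: object` annotation in place, a static checker such as mypy should be able to flag the second argument to `isinstance` without running the code at all.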

Data *always* has to be typed, both in memory and when serialized/stored. If you wait until runtime to infer everything’s type, then you have fewer chances to determine whether your code actually makes any sense until it executes. Most people prefer not to wait that long. Just like most people prefer to negotiate salary during a job interview, they don’t just show up to work and figure they’ll infer the amount from their first paycheck.

You mentioned SQL: SQL is typed! Well, most SQL-driven databases are, anyway. And not at runtime, either; types get decided way up front, when the table is initially defined. The only db engine that uses dynamic typing for data storage is sqlite3, and they ended up having to write long, defensive screeds on their website devsplaining “the advantages of flexible typing”, and still ended up implementing STRICT tables to cater to their users who *want* type-checking. (And probably to get them off their backs and stop reporting the dynamic typing as a bug.)
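The difference between SQLite's flexible typing and its STRICT tables can be seen from Python's built-in `sqlite3` module. This is a small sketch with made-up table names. STRICT tables need SQLite 3.37 or newer, so that part is guarded by a version check.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Ordinary table: the INTEGER declaration is only a type affinity,
# so a string that cannot be converted is stored as text.
conn.execute("CREATE TABLE flexible (n INTEGER)")
conn.execute("INSERT INTO flexible VALUES ('not a number')")
stored_type = conn.execute("SELECT typeof(n) FROM flexible").fetchone()[0]

# STRICT table: the same insert is rejected. None means "not supported here".
strict_rejected = None
if sqlite3.sqlite_version_info >= (3, 37, 0):
    conn.execute("CREATE TABLE strict_t (n INTEGER) STRICT")
    try:
        conn.execute("INSERT INTO strict_t VALUES ('not a number')")
        strict_rejected = False
    except sqlite3.Error:
        strict_rejected = True

conn.close()
```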

Spoken like someone who has never written (or worked) on a complex program.

I love Python. It is easy to work with and lets me get things done quickly. It is great for my personal projects.

I wouldn’t use it at work in the things we do there at all. The product I mostly work on has over 5 million lines of code. I want static typing in there. I want the compiler to catch all the errors it possibly can as early as possible. Runtime is way too late. Late binding in our code is a bug – the compiler throws an error.

We have some javascript in one area of the product. That is the worst. Untyped code as far as the eye can see – just like Python. Functions accept anything you give them, and they’ll mostly do something with it. Until something goes wrong, then someone gets to spend a lot of time figuring out just what is going on. Like the method that expected an array – except that way down deep inside, it was expecting an array of arrays. Mostly it would work on the zeroth element (an array,) but sometimes it would have to look for stuff in the expected other arrays. That failed because not all the calling places knew to use an array of arrays. Whee! What fun. Static languages don’t allow that kind of thing to happen.

Computers are STUPID. They do what you tell them. Nothing more, nothing less. If you tell them to do something stupid, they’ll do it. It’s all the same to them. If you want them to do the right thing, then you have to explain in detail what they are to do, how they are to do it, and in what order they should carry out the steps. Specifying all that stuff is why we have programmers.

You want to reduce bugs, then you need to relieve the programmers of doing dull, repetitive, tasks.

Heap management? The compiler or interpreter can do that – they have all the needed information available and the required arithmetic can be described. Any modern language takes care of that for you.

What objects and what information are needed to do the actual task of the program has to be decided by a human being.

Very clever as an implementation, but this would seem to be simply a tool to encourage bad practice “oh, an error, I’ll leave it to the AI to fix”. Better to understand and debug your code PROPERLY for yourself, and also to use a compiled language where these errors will all be found at compile time rather than randomly appearing at some point during run time.

Yes indeed expecting humans to write perfect code all the time is the answer.

Do you think expecting a natural language model to guess what you meant is a better idea?

What this says to me is that the compiler really can figure out what you actually wanted to do, and the stupid hoops that the language makes you jump through, they are just getting in the way.

If the compiler can figure out how to stuff in the correct code then you didn’t even need to write it in the first place.

How do you get to “the compiler really can figure out what you actually wanted to do” ? This is not to start an argument about python being an interpreted language or anything, I just don’t understand why you think “the compiler”, whatever you deem that to be, knew what you wanted to do in the first place.

The compiler usually can’t figure out what you wanted. In a statically typed language (which Python isn’t,) the compiler can tell if you passed a proper variable type to a function. It can’t tell if you really meant it. Maybe you really wanted to pass it a different type, but you wrote it wrong. Maybe you wrote it right, but you are using the wrong variable.
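A tiny illustration of the "right type, wrong variable" case, written with Python type hints as a stand-in for a statically typed language (all names and values are made up):

```python
def rectangle_area(width: float, height: float) -> float:
    return width * height


width, height, depth = 2.0, 3.0, 10.0

# Every argument is a float, so a type checker has nothing to complain about,
# yet 'depth' was passed where 'height' was meant.
area = rectangle_area(width, depth)
expected = rectangle_area(width, height)
```

The types line up and the call runs, but the answer is 20.0 rather than the intended 6.0. No amount of type checking can know which variable the programmer had in mind.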

The point of programming is to tell the computer to do the things you want done in the order you need them to be done.

This project isn’t about the compiler. It is about using ChatGPT to rewrite your code based on error messages from the Python interpreter.

——-

Do you want the program calculating your paycheck to be written through random guesses generated by a chatbot? I don’t

Very cool. But I take exception to this description:

“then sends those errors to GPT-4 to ask it what it thinks went wrong with the code.”

GPT-4 doesn’t “think” anything. What the Wolverine script is doing is asking the GPT-4 LLM to statistically predict (based on previous code examples it’s been trained on) what is the most statistically likely response to the question “what went wrong with this code?”

If the user is lucky, the most statistically-significant response is not modified by GPT-4’s “creativity” filter (which adds a little chaos/randomness into the generated response) before the code is applied to the broken script.
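Assuming the “creativity” filter refers to what is usually called sampling temperature, the idea can be sketched as follows. The candidate completions and their scores are invented for illustration, and this is not a description of how GPT-4 is implemented internally.

```python
import math
import random


def temperature_probs(scores, temperature):
    """Softmax over scores / temperature; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def pick_token(tokens, scores, temperature, rng):
    """Draw one candidate at random, weighted by the temperature-adjusted probabilities."""
    probs = temperature_probs(scores, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up candidates and scores; the first is the most likely continuation.
tokens = ["return result", "return reslt", "pass"]
scores = [4.0, 1.0, 0.5]

cold = temperature_probs(scores, 0.1)  # nearly all probability on the top choice
hot = temperature_probs(scores, 2.0)   # probability spread across the alternatives

rng = random.Random(0)
choice = pick_token(tokens, scores, 0.1, rng)
```

At a low temperature the most likely candidate is chosen almost every time. Raising the temperature gives the less likely candidates a real chance of being picked.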

There’s no intelligent thought or analysis happening here. Trusting the output of GPT-4 enough to modify the original script is not something you’d want to do with real production code.

Language Model: *finds cure for cancer*

AI Commentator: “actually, if you look at the cure, it’s just a statistical remix of ideas that were in the training data. You see, the model isn’t actually intelligent, it just predicts the next token one at a time.”

https://twitter.com/bio_bootloader/status/1641186242862907392

Regardless of whether or not the model ‘finds the cure for cancer’ it isn’t thinking or reasoning. That doesn’t diminish ‘finding the cure for cancer’, but senselessly elevating it to the status of being capable of ‘thought’ is silly and the reason why we have numbskulls in the public acting as if Skynet is two minutes away.

As long as the cure for cancer is not to kill the human race, if there are no humans there is no worry about cancer. EEk!

Impressive work.

As the IOCCC proves, code that compiles and runs (and even code that also does what’s expected) can still have serious and malicious bugs in it.

Not sure we should all be trusting AI to correctly guess what code is supposed to be doing let alone write code itself – given the existing proofs of concept around poisoning training datasets and the like.

(https://www.ioccc.org/)