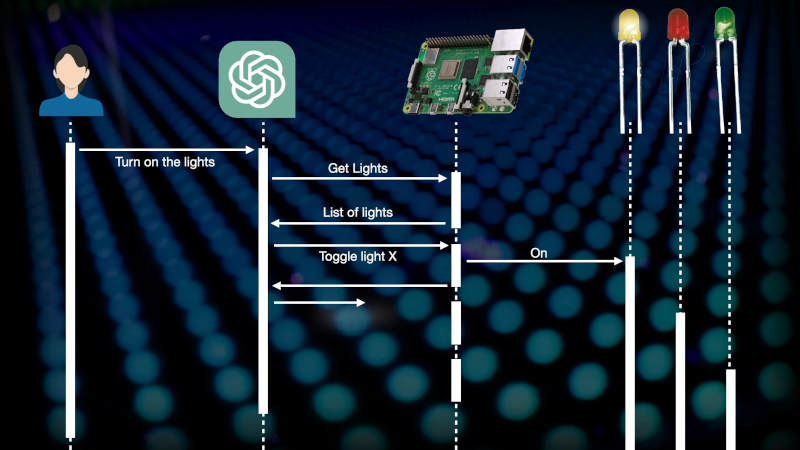

With all the hype about ChatGPT, it has to have crossed your mind: how can I make it control devices? On the utopia side, you could say, “Hey, ChatGPT, figure out what hours I’m usually home and set the thermostat higher when I am away.” On the dysfunctional side, the AI could lock you in your home and torment you like some horror movie. We aren’t at either extreme yet, but [Chris] couldn’t resist writing a ChatGPT plugin to control a Raspberry Pi. You can see a video of how it turned out below.

According to [Chris], writing a ChatGPT plugin is actually much simpler than you might think. You can see in the video that the AI can intuit which lights to turn on and off based on your activity, and, of course, many more things are possible. It can even detect snoring.

In a bit of self-referential work, ChatGPT actually wrote a good bit of the code required. Here’s the prompt:

Write me a Python Flask API to run on a Raspberry Pi and control some lights attached to GPIO pins.

I have the following lights:

Kitchen: pin 6

Bedroom: pin 13

Dining table: pin 19

Bathroom: pin 26

Lounge: pin 5

I want the following endpoints:

get lights - returns the list of lights along with their current state

post toggle_light - switches a light on or off
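For a sense of what a prompt like this might yield, here is a minimal sketch. It is not [Chris]’s code or ChatGPT’s actual output. The pin numbers and endpoint names come from the prompt; BCM pin numbering, the `{"name": ...}` request body, and the helper names (`list_lights`, `toggle_light`, `create_app`) are assumptions made for illustration. Off a Pi, where `RPi.GPIO` is not installed, it falls back to tracking state in memory.

```python
# Sketch only: an illustration of the prompt, not the code from the video.
try:
    import RPi.GPIO as GPIO  # present on a Raspberry Pi
except ImportError:
    GPIO = None  # assumption: off-Pi, just track state in memory

# Pin numbers from the prompt; BCM numbering is an assumption.
LIGHT_PINS = {
    "kitchen": 6,
    "bedroom": 13,
    "dining table": 19,
    "bathroom": 26,
    "lounge": 5,
}
light_state = {name: False for name in LIGHT_PINS}


def setup_pins():
    """Configure every light pin as an output, initially off."""
    if GPIO is None:
        return
    GPIO.setmode(GPIO.BCM)
    for pin in LIGHT_PINS.values():
        GPIO.setup(pin, GPIO.OUT, initial=GPIO.LOW)


def list_lights():
    """Return each light with its pin and current on/off state."""
    return [
        {"name": name, "pin": pin, "on": light_state[name]}
        for name, pin in LIGHT_PINS.items()
    ]


def toggle_light(name):
    """Flip one light and return its new state; KeyError if unknown."""
    key = str(name).strip().lower()
    if key not in LIGHT_PINS:
        raise KeyError(name)
    light_state[key] = not light_state[key]
    if GPIO is not None:
        GPIO.output(LIGHT_PINS[key], GPIO.HIGH if light_state[key] else GPIO.LOW)
    return light_state[key]


def create_app():
    """Build the Flask app; Flask is imported here so the helpers work without it."""
    from flask import Flask, jsonify, request

    setup_pins()
    app = Flask(__name__)

    @app.route("/lights", methods=["GET"])
    def get_lights():
        return jsonify(list_lights())

    @app.route("/toggle_light", methods=["POST"])
    def post_toggle_light():
        body = request.get_json(silent=True)
        name = body.get("name", "") if isinstance(body, dict) else ""
        try:
            state = toggle_light(name)
        except KeyError:
            return jsonify(error="unknown light"), 404
        return jsonify(name=name, on=state)

    return app
```

To try it, something like `create_app().run(host="0.0.0.0", port=5000)` would start Flask’s development server. Keeping the light logic in plain functions means it can be checked without a Pi or a running server.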

It also took the code and generated an OpenAPI file for it automatically. Pretty slick!
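To give an idea of what such an OpenAPI file contains, the sketch below builds a small OpenAPI 3.0 description for the two endpoints named in the prompt and prints it as JSON. This is not the file ChatGPT generated: the server URL, the `operationId` values, and the `{"name": ...}` request body are all assumptions for illustration.

```python
import json


def build_openapi(server_url="http://raspberrypi.local:5000"):
    """Return an OpenAPI 3.0 document (as a dict) for the lights API.

    server_url is a hypothetical address; substitute the Pi's real one.
    """
    return {
        "openapi": "3.0.1",
        "info": {
            "title": "Lights API",
            "version": "0.1.0",
            "description": "List and toggle lights attached to GPIO pins.",
        },
        "servers": [{"url": server_url}],
        "paths": {
            "/lights": {
                "get": {
                    "operationId": "getLights",  # assumed name
                    "summary": "List the lights and their current state",
                    "responses": {"200": {"description": "Array of lights"}},
                }
            },
            "/toggle_light": {
                "post": {
                    "operationId": "toggleLight",  # assumed name
                    "summary": "Switch a light on or off",
                    "requestBody": {
                        "required": True,
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "object",
                                    "properties": {"name": {"type": "string"}},
                                    "required": ["name"],
                                }
                            }
                        },
                    },
                    "responses": {
                        "200": {"description": "New state of the light"},
                        "404": {"description": "Unknown light"},
                    },
                }
            },
        },
    }


spec = build_openapi()
print(json.dumps(spec, indent=2))
```

The summaries and descriptions matter more than usual here, since that text is what a language model would read to decide which endpoint to call.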

Of course, this is just the tip of the iceberg of what you could do with a system like this. We are both excited and a little nervous about what will happen when AI takes over more real-world hardware.

As we’ve pointed out, ChatGPT is great for tedious programming tasks, but you do need to verify that it is getting things right. We aren’t going to be put out of work by AI — at least, not yet.

One step closer to LCARS…

The natural language interface, that is. LCARS is more than just a bunch of coloured shapes on a flat surface.

Hmm, why not wire up a Pi with this software to a hot drinks vending machine? One with a selection of teas perhaps?

Till one tells ChatGPT to create a character “capable of defeating Data”, and then all hell breaks loose.

Be careful what you do with ChatGPT & home automation or you might end up with this guy :)

https://www.youtube.com/watch?v=nwPtcqcqz00

🤣

Maybe it’s just me, but I don’t get the ChatGPT hype. It’s basically a search engine.

I also don’t like bug-fixing the crap code it puts out. After writing a super-detailed prompt and then fixing the bugs, you might as well have written it yourself.

For me it’s that ChatGPT retains context. I ask it to write a bit of code, it delivers, and then I can ask for changes, or I can even tell it the code doesn’t work and it comes right back with new code.

So, basically an interactive tutor.

You’re right, though, that it’s not magic. If I ask for A and then tell it A doesn’t work, it delivers B. If I tell it B doesn’t work, it delivers C. If I tell it C doesn’t work, it delivers A. (Although, in its defense, the problem was insolvable.)

I am looking forward to when it’s implemented in one of the smart home widgets. In theory that could move it from helping me with bits of code to helping me with problems in the real world. For instance, I was trying to resolve a problem with my truck this weekend, and it would have been nice to have it making suggestions in real time based on the information I give it.

Though thinking about it this is another tipping point. The internet and smartphones eliminated our need to remember things. This eliminates our need to solve things.

I 100% agree with this. It is not a tool that will do stuff for you. It is an interactive tutor. It greatly helps increase and improve productivity but you definitely have to know what you are doing. You can’t just tell it what you need and expect it to give you a complete application.

It is exactly like having a tutor. It is weird, because it is like an expert tutor but kinda dumb at the same time. It helps, though. I’ve been using it extensively, multiple times a day, every day for the last two months or so, and it has helped me greatly to develop my application faster and better.

It’s not even a search engine. It’s a “make things up that sound plausible” engine. Sounding plausible bears very little relation to being right.

So it should be replacing politicians and marketing departments first then :)

I suspect forum posters will be put out of a job.

Might as well talk to my colleagues

In the future it will be Proteus IV ….

https://youtu.be/H6O1NRs-YuU

LLMs such as ChatGPT are useless unless you’re already a subject matter expert and can validate its output. It is like having an employee who is brilliant but also on drugs a lot, so they tend to be a little manic and delusional.

I’m getting a lot of use out of ChatGPT by letting it generate Python code, and I haven’t caught it making errors yet.

In the examples I saw of it writing Python, it did not ask what system the user had or what version of Python was required. Nor did it mention dependencies or ask what hardware was available.

And of course if it creates code for the wrong version you might suddenly have things go awry and you will have to figure out why.

So yeah, great for YouTube demonstrations, but IRL I know there would be issues for me, since I have a rather peculiar system.

And that’s my general issue with ChatGPT. For it to do all the things people suggest it can do for me, I would first have to give it a very long summary of myself and my circumstances so it could do things in a manner fitting the situation. That is just too damn much trouble, and invasive of privacy if you use an online instance of it.

Plus with code writing I like to have more control than having an AI doing it would give me.

When writing code that interacts with the general public, for instance, I know I’d have to try to think of every attempt by clever people to make it fail or do unwanted things, while simultaneously making it ‘idiot-proof’. And I’m not sure I can trust an AI to do that as I would want it.

Perhaps for me it would work better to have the AI check the code for errors, because that should work and be helpful.

“It is like having an employee who is brilliant but also on drugs a lot, so they tend to be a little manic and delusional.”

xD

I thought when he said that ChatGPT released his API, he was going to say that the world was now turning his lights on and off.