There’s an apocryphal quote floating around the internet that “640K ought to be enough memory for anybody”, but it seems unlikely that this was ever actually said by any famous computer mogul of the 1980s. What is true, however, is that in general more computer memory tends to be better than less. In fact, this was the basis for the Macintosh 512k in the 1980s, whose main feature was that it was essentially the same machine as the Macintosh 128k, but with quadruple the memory of its predecessor. If you have yet to upgrade to the 512k, though, it might be best to take a look at this memory upgrade instead.

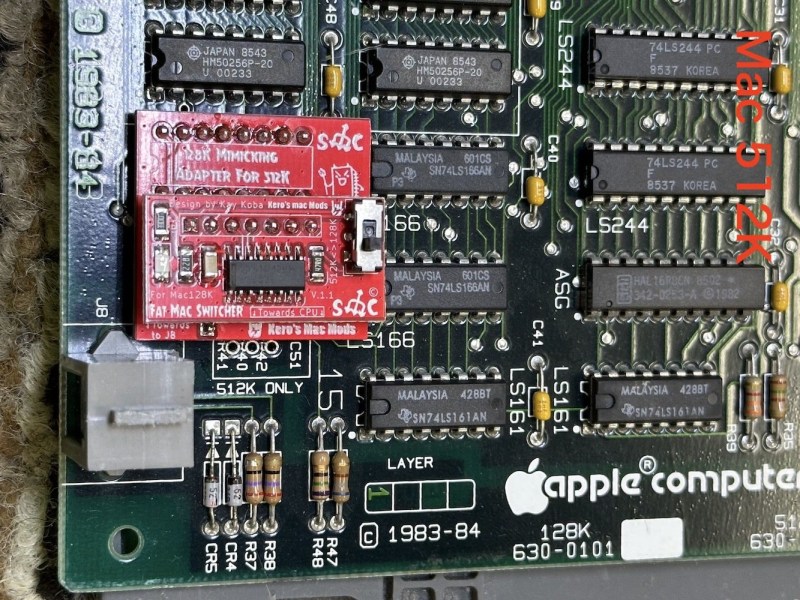

The Fat Mac Switcher, as it is called by its creator [Kay Koba], can upgrade the memory capability of these retro Apple machines with the simple push of a switch. The switch and controller logic sit on a separate PCB that needs to be installed into the computer’s motherboard in place of some of the existing circuitry. For this to work properly, the computer’s 16 memory modules also need to be replaced with 41256 DRAM modules, but once it’s installed it can switch seamlessly between 512k and 128k modes.
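The arithmetic behind the chip swap can be sketched in a few lines. This is only an illustration: it assumes the 41256 is organized as 256K x 1 bit, and that the stock 128k board carries sixteen 64K x 1 parts (4164-class chips), which the article itself does not state. The function name is made up for this example.

```python
def bank_capacity_kb(chips: int, kilobits_per_chip: int) -> int:
    """Capacity in KB of a bank of 1-bit-wide DRAM chips."""
    total_kilobits = chips * kilobits_per_chip
    return total_kilobits // 8  # 8 bits per byte

# Assumption: the stock 128k board uses sixteen 64K x 1 chips.
stock = bank_capacity_kb(16, 64)
# Sixteen 41256 chips, assumed to be 256K x 1 each.
upgraded = bank_capacity_kb(16, 256)

print(f"stock: {stock}K, upgraded: {upgraded}K")
```

Under those assumptions, the same sixteen sockets go from 128K to 512K, which is why the chip count does not change.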

Another interesting quirk of the retro Macintosh scene is that the technically inferior 128k models tend to be valued higher than the more capable 512k versions, despite being nearly identical otherwise. There are also some other interesting discussions in one of the forum posts about this build. This module can also be used in reverse; by installing it in a Macintosh 512k the computer can be downgraded to the original Macintosh 128k. For this the memory modules won’t need to be upgraded but a different change to the motherboard is required.

A product like this certainly would have been a welcome addition in the mid-80s when these machines were first introduced, since the 512k was released only months after the 128k, but retrocomputing enthusiasts should still get some use out of this device and be better able to explore the differences between the two computers. If you never were able to experience one of these “original” Macintosh computers in their heyday, check out this fully-functional one-third scale replica.

I would like Ethernet and Wi-Fi for Mac networking, and a normal USB mouse and keyboard.

Oh wow. That brings back memories. I made a few extra bucks in college upgrading people’s 128k macs to 512k. Now I look back and can’t believe people would drop their $2500 computer off at the apt of a college kid. Or that I was so blindly willing to take the risk of ruining one. Fortunately I never did.

The article does not explain why someone would want to switch to a lower-memory version.

The only reason the 128k versions are so sought after is that they were only briefly made and almost all of them got the 512k upgrade, which makes them rare to the Pokémon collectors. I am unaware of any advantage to them in use, though.

It’s the early 128K versions that do not have the memory size on the back which are the most collectible. Apple still made 128K ones for a short time after the intro of the 512K version, so they had back labels with 128 or 512 to tell which one.

Reminds me of a moment in history when a UK computer company priced their memory by the kiloword, but each additional board actually carried four kilowords (it was the 70s), so there was this jumper block that disabled the top 1, 2 or 3 kilowords.

So now someone can perform the 512k modification on their rare, unmodified 128k machine, which they always could have done, but now they can flip a switch to go between superior 512k machine and rare, unmodified 128k machine?

Oh this is super cool.

I thought it was 64k. We should never need more than 64k. My first microprocessor had 256 bytes. That was way more than the first computer my dad used. Much less smaller than the magnetic memory of my dad’s day.

It was 640k. It was Gates. It was shortsighted. But it was in the days when nobody had bought more than a couple of generations of personal computers to realise Moore’s law was continuing to outdate their investment.

1MB was addressable by a 20-bit physical address bus, using segmentation over 16-bit logical addressing on 8086, 80186 (yeah I had one of those).
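For anyone who hasn't seen it, the segment:offset scheme can be sketched like this. It's a minimal illustration of 8086 real-mode addressing (segment shifted left by four bits, plus the offset, wrapped to 20 bits); the function name is just made up for the example.

```python
def physical_address(segment: int, offset: int) -> int:
    """Real-mode 8086 address: 16-bit segment and offset -> 20-bit physical."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    # The 8086 has only 20 address lines, so the sum wraps around at 1MB.
    return ((segment << 4) + offset) & 0xFFFFF

print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0xA000, 0x0000)))  # 0xa0000, i.e. the 640KB mark
print(2 ** 20 // 1024, "KB addressable in total")
```

Note that many different segment:offset pairs land on the same physical byte, which is part of what made this scheme both flexible and confusing.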

640kB was the boundary above which the BIOS, memory-mapped graphics and non-user stuff lived. Additional tricks were invented to access additional memory above that, known as Extended Memory and Expanded Memory.

Almost. The memory above 640KB was the so-called “adapter’s segment” or upper memory area (UMA). It was the area intended for i/o memory and ROM codes.

However, in practice, the 640KB limit was caused by the framebuffer of EGA/VGA graphics cards. They occupied the A segment.

Users of MDA, Hercules Monochrome Graphics or CGA cards didn’t have the limit.

They could have up to 736KB of conventional memory just fine.

With MDA (4KB textmode) or Hercules (4KB textmode or 64KB framebuffer), it was still 704KB.

Dual-monitor setups with CGA/Hercules could have 704KB, too.
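Those numbers fall straight out of where each adapter's video memory starts. Here is a rough sketch, assuming the usual start segments (A000 for EGA/VGA graphics, B000 for MDA/Hercules, B800 for CGA) and assuming RAM can actually be populated contiguously up to that point; the table and function names are made up for the illustration.

```python
# Start segment of each adapter's video memory (real-mode segment values).
VIDEO_SEGMENT = {
    "EGA/VGA": 0xA000,
    "MDA": 0xB000,
    "Hercules": 0xB000,
    "CGA": 0xB800,
}

def conventional_limit_kb(adapters):
    """Ceiling for contiguous RAM, in KB, given the installed video adapters."""
    # RAM can only grow up to the lowest video buffer in the system.
    lowest = min(VIDEO_SEGMENT[name] for name in adapters)
    return (lowest << 4) // 1024

for setup in (["EGA/VGA"], ["MDA"], ["CGA"], ["CGA", "Hercules"]):
    print(" + ".join(setup), "->", conventional_limit_kb(setup), "KB")
```

This ignores whether a particular BIOS or DOS version would actually report and use the extra memory, so treat it as the upper bound only.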

With a serial terminal as the i/o device (CTTY command), up to 900KB was possible.

But many of these things are lost to history by now.

Back in the mid-80s, IBM PCs with 704 or 736KB weren’t unheard of.

Like installing the V20 processor, having more than 640KB was a common upgrade. That was before VGA, which occupied the A segment, became ubiquitous after 1987.

Speaking of VGA, some DOS utilities (VIDRAM of Quarterdeck QRAM) even used the EGA/VGA framebuffer memory to provide more memory, with graphics modes being limited to CGA.

PC emulators of the 1980s (PC-Speed, PC-Ditto etc) and DOS compatible PCs (not fully IBM compatible, but otherwise superior often) often supported 704KB or 736KB of RAM by default.

Not wrong, either. 64KB is the maximum address range of most 8-Bit processors, including the famous Z80 (they’re 8+16-Bit really. 8-Bit instructions, 16-Bit address bus).

64KB is also the segment size of an i8086 and its descendants. It’s a legacy from the 8080. That’s why CP/M emulation was so easy on IBM PC, also. It also helped porting old 8080 code to i8086.

Those who have ever worked with computers professionally back then do know the importance of RAM expansion and large disk space.

There’s memory, and there’s ways to use it. I was the sysadmin for an IBM AS400 in the 80s and 90s. Refrigerator-sized beige box, CISC technology (before the great migration to RISC and POWER systems).

This thing serviced ~250 green-screen terminals, and a roughly equal number of PCs running terminal emulation software.

In 48MB of memory. Megabytes. Providing sub-second response times on the terminals, and almost as fast on the PCs (consider the PCs had the additional step of translating EBCDIC to ASCII).

It took me some time to accept that PCs running DOS/Windows were just wasteful WRT memory.

“It took me some time to accept that PCs running DOS/Windows were just wasteful WRT memory.”

There were exceptions, though.

DesqView on DOS was useful (DV had EMS support, clipboard, etc).

DOS compatible OSes like PC-MOS/386 weren’t that bad, either.

They supported running applications on text and graphics terminals, too.

Likewise, MP/M, CPNet and MP/M II weren’t too shabby.

They could handle up to 25 terminals, I vaguely remember. And do proper multitasking.

And ran on both, 8080 and 8086 platforms. Motorola 68000, too, maybe?

I think the problem is that mainframes and micros had different audiences, usually.

Mainframes were intended for professional use only.

PCs, or microcomputers in general, had different user bases from the very beginning.

The mainstream PCs were usually underpowered/underequipped.

In the 80s, they had 512KB to 1MB, because of DOS and the cosum.., err, home users who played Monkey Island.

That’s why XTs and ATs didn’t have proper memory expansion back then.

Home users had no use for it (except the intellectual ones).

Professionals, by contrast, had invested into large HDDs and lots of memory.

Some also ran Unix (say Microsoft Xenix), Minix, OS/2 or PC-MOS/386.

An ISA memory expansion board with 512KB, 1, 2 or 4MB wasn’t uncommon to them (AST Rampage, Intel Aboveboard etc, InBoard/386).

The memory wasn’t being wasted on those professional users, because they had different needs:

A printer spooler or RAM drive could make use of upper memory, Extended Memory (int15h; XMS was introduced in late 80s/early 90s) and Expanded Memory (EMS).

Likewise, Novell netware and other network OSes had use for more RAM.

– That’s also being forgotten these days, maybe. Users without a fixed-disk drive could use a RAM drive or network drive instead.

The money saved by not buying a fixed-disk drive could be invested in a RAM expansion.

Compiling a Clipper program or running dBase database on a RAM disk was much nicer than on a slow MFM drive.

Ironically, it was Windows which forced PC manufacturers and sellers to *finally* overcome the 1MB configuration.

Windows/386 had a built-in memory manager (EMS) and DOS multi-tasker.

Whereas Windows 3.x allowed Windows applications to access megabytes of memory for the first time (previously, they had to use EMS). It also added virtual memory.

It saved so many late 286 PCs from being limited to having 1MB only forever, I think.

Along with OS/2 v1, it was one of the few OSes which made use of 16-Bit Protected-Mode and Segmentation.

In most XT PCs it was adding a small wire to increase to 640.

In most PCs it was just piggybacking memory chips on top of the others, with one pin bent upwards and connected to a small wire for the address selection.

Solid, stable solution.