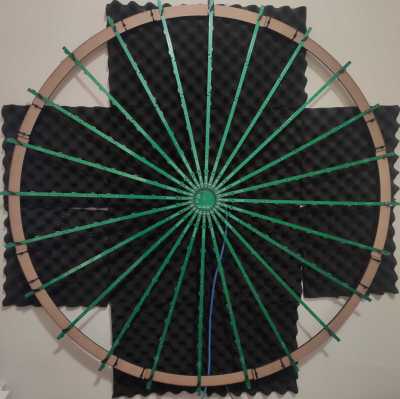

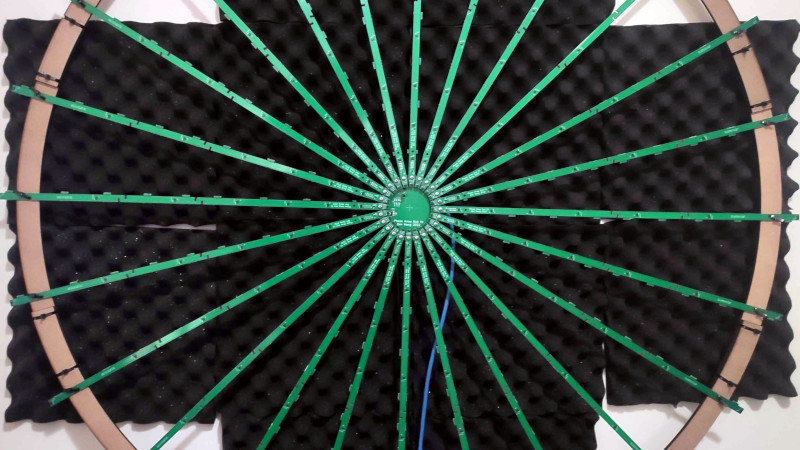

Remember the scene from Blade Runner, where Deckard puts a photograph into a Photo Inspector? The virtual camera can pan and move around the captured scene, pulling out impossible details. It seems that [Ben Wang] discovered how to make that particular trick a reality, but with audio instead of video. The secret sauce isn’t a sophisticated microphone, but a whole bunch of really simple ones. In this case, it’s 192 of them, arranged on long PCBs working as the spokes of a wall-art wheel. Quite the conversation piece.

You might imagine that capturing the data from 192 microphones all at once is a challenge in itself, and that seems to be an accurate assessment. The first data capture problem came from the odd PCBs pushing the manufacturing process to its limits. About half of the spokes were dead on arrival, with individual mics tending to short the shared clock line to either ground or the power supply line. Then, to pull all that data in, a Colorlight is used as a general-purpose FPGA with a convenient form factor. This former pixel controller can be used for a wide variety of projects thanks to an open source reverse engineering effort, and it is even supported by the Project Trellis toolchain, which was used for this effort, too.

Packetizing all those microphones into UDP packets winds up pushing a whopping 715 Mbps, which manages to fit nicely on a Gigabit Ethernet connection. That data is fed into a GPU kernel written with Triton, an open source alternative to CUDA. This performs one of two beamforming operations. Near-field beamforming divides the space directly in front of the microphone array into a 64×64×64 grid of 5 cm voxels, and can locate a sound source in that 3D space. Alternatively, the system can run a far-field beamform, and locate a sound source in a 2D direction, on a 512×512 grid.
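To get a feel for what a far-field beamform does, here is a minimal delay-and-sum sketch in plain Python. It is not the project's Triton kernel: it assumes a hypothetical 16-microphone straight-line array, a 48 kHz sample rate, a single 3 kHz test tone, and a 1D sweep over arrival angles instead of a 512×512 grid. All names and constants are illustrative.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s; assumed value for room-temperature air
SAMPLE_RATE = 48_000     # Hz; hypothetical, not the project's actual rate


def simulate_tone(mic_x, angle_deg, freq_hz, n_samples):
    """Plane-wave tone arriving from angle_deg (0 = broadside) at each mic."""
    s = math.sin(math.radians(angle_deg))
    return [
        [math.sin(2 * math.pi * freq_hz
                  * (t / SAMPLE_RATE + x * s / SPEED_OF_SOUND))
         for t in range(n_samples)]
        for x in mic_x
    ]


def steered_power(mic_x, signals, angle_deg):
    """Delay each mic by a whole number of samples for the candidate angle,
    sum the aligned samples, and return the mean power of that sum."""
    s = math.sin(math.radians(angle_deg))
    shifts = [round(x * s / SPEED_OF_SOUND * SAMPLE_RATE) for x in mic_x]
    n = len(signals[0])
    lo = max(0, max(shifts))        # first index valid for every mic
    hi = n + min(0, min(shifts))    # one past the last valid index
    power = 0.0
    for t in range(lo, hi):
        total = sum(sig[t - k] for sig, k in zip(signals, shifts))
        power += total * total
    return power / (hi - lo)


def scan(mic_x, signals, angles):
    """Return the candidate angle with the highest steered power."""
    return max(angles, key=lambda a: steered_power(mic_x, signals, a))


# Hypothetical array: 16 mics, 4 cm apart, centred on the origin.
mic_x = [(i - 7.5) * 0.04 for i in range(16)]
signals = simulate_tone(mic_x, angle_deg=30.0, freq_hz=3000.0, n_samples=480)
estimate = scan(mic_x, signals, range(-90, 91, 2))
print("estimated arrival angle (degrees):", estimate)
```

The near-field case follows the same idea, except that the per-microphone delay comes from the distance between each microphone and each candidate voxel rather than from a single arrival angle.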

As part of the calibration, the speed of sound is also a parameter which is optimized to obtain the best model of the system, which allows this whole procedure to act as a ridiculously overengineered thermometer.
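The thermometer trick works because the speed of sound in air depends mostly on temperature. The sketch below uses the textbook ideal-gas approximation for dry air; that formula and its constants are assumptions on our part, not something taken from the project, and humidity is ignored.

```python
import math

C0 = 331.3          # m/s; speed of sound in dry air at 0 °C (textbook value)
T0_KELVIN = 273.15  # 0 °C expressed in kelvin


def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air at temp_c degrees Celsius."""
    return C0 * math.sqrt(1.0 + temp_c / T0_KELVIN)


def temperature_from_speed(c):
    """Invert the relation above: fitted speed of sound -> air temperature."""
    return T0_KELVIN * ((c / C0) ** 2 - 1.0)


# Example: a calibration that settles on 343.2 m/s implies roughly 20 °C.
print(round(temperature_from_speed(343.2), 1))
```

Near room temperature the slope is about 0.6 m/s per degree, so the calibration needs to pin down the speed of sound fairly tightly before the temperature reading means much.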

The most impressive trick is to run the process the other way, and isolate the audio arriving from a specific direction. The demo here was to play static from one source, and music from a second, nearby source. When listening from only one microphone, the result is a garbled mess. But applying the beamforming algorithm does an impressive job of isolating the directional audio. Click through to hear the results.
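The isolation effect can be reproduced in a toy simulation. This is a sketch under heavy assumptions: eight hypothetical microphones, whole-sample delays picked by hand for each source, a 440 Hz sine standing in for the music, and Gaussian noise standing in for the static. None of these values come from the project.

```python
import math
import random


def mix_at_mics(target, static, target_delays, static_delays):
    """Each mic hears both sources, each delayed by a whole number of samples."""
    n = len(target)
    return [
        [(target[t - dt] if t >= dt else 0.0)
         + (static[t - ds] if t >= ds else 0.0)
         for t in range(n)]
        for dt, ds in zip(target_delays, static_delays)
    ]


def steer(mics, delays):
    """Delay-and-sum: undo each mic's delay for the wanted source, then average."""
    n = len(mics[0]) - max(delays)
    return [sum(m[t + d] for m, d in zip(mics, delays)) / len(mics)
            for t in range(n)]


def residual_power(output, reference):
    """Mean squared difference between an output and the clean reference."""
    n = min(len(output), len(reference))
    return sum((output[t] - reference[t]) ** 2 for t in range(n)) / n


rng = random.Random(0)                      # fixed seed for repeatability
n = 2000
music = [math.sin(2 * math.pi * 440 * t / 48_000) for t in range(n)]
static = [rng.gauss(0.0, 1.0) for _ in range(n)]
music_delays = [i for i in range(8)]        # hypothetical per-mic delays
static_delays = [4 * i for i in range(8)]   # different direction, different delays

mics = mix_at_mics(music, static, music_delays, static_delays)
single_err = residual_power(mics[0], music)
beam_err = residual_power(steer(mics, music_delays), music)
print("noise reduction factor:", single_err / beam_err)
```

Steering toward the music lines its copies up so they add coherently, while the static arrives with mismatched delays and partly averages out. With eight microphones the residual noise power should drop by roughly a factor of eight.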

And if that’s not enough, check out the details of another similar microphone array project.

So taking, say, 50 samples from 4 microphones, or 100 from 2, provided the sound you’re tracking is fairly repetitive or consistent and you can grab them fast enough, you could potentially triangulate something’s relative position in a 3m² room. Add acoustic effects into that and you might be able to approximate the position of the walls too. Pretty scary to contemplate if it’s possible. Although most phones seem to come with structured light 3D scanners these days anyway, masking tape may no longer be enough. :D

Around 10-20 years ago, I remember a television network was experimenting with a similar setup to listen in on individual conversations at an NBA game. They had a similar circle of microphones overhead, and could pick a location on the court, compensate for the audio delays, and easily pick out the conversation at the selected spot.

…to focus on a particular instrument/player in an orchestra.

An orchestra or choir would be a great application because the musicians aren’t moving around.

A while back I thought it would be cool to use something like this for a stage play. The issue there is that even though there are fewer actors they’re moving around. Nowadays you could track the actor locations with an IR/RF beacon like I’ve heard rock performers use for spotlights or even with image recognition. Then Kanye would have a hard time grabbing Taylor’s microphone.

Arrays like this are already pretty ubiquitous in audio conferencing. Check out Shure, Sennheiser or Audio Technica.

I can understand why they abandoned that. It probably freaked out enough customers that the venue would have banned it.

I doubt the copious amount of mics is necessary. Also the radial pattern is somewhat sub-optimal in my opinion. Nice demo of DSPing and kudos for the open sourceness, though!

Agreed on the suboptimal pattern. Sounds like a good application for a Costas Array (the 2-D analog of the Golomb Ruler, not to be confused with the Golay Array).

Thanks for pointing out the Costas arrays. I knew I learned something like this during my college years, but totally forgot about the name and details.

Wowza that Wikipedia doesn’t have a single visual example that I could see.

It’s Wikipedia. I’m sure there used to be one, but it wasn’t notable.

The nose cones of modern aircraft have phased array antennae for their radars. Antennae are here replaced by sound sensors.

Sound sensors. Behind the rubber nose of a serious sub. Good up to ???Hz way beyond audio.

Holy moly… that’s seriously impressive. Kudos.

A few months ago Steve Mould did a great video of a commercial version of this.

https://youtu.be/QtMTvsi-4Hw

I’ve been very impressed by a demo of this working the other way around – a phased array of speakers that could cause a sound to be heard only at certain points in a room. Obviously, all some distance from the speakers or it wouldn’t be a big deal!

https://holoplot.com/technology/

It was genuinely amazing. You could hear narration in different languages depending on where you stood. Or the whole room could have the sounds of a raging storm at sea with people shouting, and at only one point you could also hear someone whispering in your ear.

Yes, basically the same thing but as a broadcast. 3d beamforming. It’s also used in radiation therapy to precisely target an area for treatment.

And quite similar to the Ultrahaptics technology (who bought out Leap, I think) where they use little piezo speakers and precisely control the interference of waves in the 3D space to create sufficient force to feel like a solid object when you touch that spot. They demo this visually by making a light polystyrene ball levitate.

This technique will be used by the new MSG Sphere venue in Las Vegas. The spatial audio system being designed into the structure has approximately 160,000 speakers (don’t know how many channels) capable of advanced beamforming.

160,000 speakers, at Las Vegas acoustic power levels, independently targetable?

What could possibly go wrong?

Sounds like the kernel of a great murder technothriller.

This could be useful on the battlefield to pinpoint sniper and indirect fire positions.

Not even the battlefield. This has been done for much more than a decade in East Palo Alto, among many other places. ShotSpotter is a commercial product.

My understanding was that it went into police use because it wasn’t actually effective, so the military didn’t take it, but police don’t care.

The question for musical use would be how many artifacts the phase cancellation and reinforcement produce… I’ve had problems in the past where processing for voice interacted very poorly with instruments.

Sounds like the capabilities of the SoundCam at soundcam.com, a commercially available acoustic imaging system.

30 years ago or so, I read that the US Navy was using a passive listening system that listened to underwater reflections of the natural noises in the sea in order to “see” things such as reefs, seamounts, etc., for faster detection and faster running of submarines and ships with vastly reduced chances of collisions with same.

Oh… and then, since no pinging was needed, which would give away the ship/submarine position, a sub (especially) would sit in a conveniently hidden location and just listen for the passage of another submarine and be able to track its position, velocity and trajectory, and anything else it was doing! Although not specifically addressed, I suspect that even “stealthy” craft were detectable because the ocean sounds would be modified by its physical presence irrespective of its radar/sonar cloaking.

I wish someone would address why so many channels were needed. Why would Polycom use 3, my phone 2, and this one almost 200? If you sample the sound at 5× 20 kHz, it should be enough to interpolate fractional sine waves for tight control of arrival angles.

If you want to find the direction to a single point sound source with a known and narrow frequency spectrum in an infinite 2-D half-plane with no other sources or reflectors, then two receivers are sufficient.

If you want to work in 3D, or with reflectors or other sources present, or with wider bandwidth, then you either put up with ambiguities and ghost images (sidelobes), or you employ more receivers. Or you make assumptions, approximations, or use other data. For example, our two biological receivers (ears) work because our auditory processing apparatus exploits the angular dependence of each receiver’s response in the acoustic shadow of our heads (the Head-Related Transfer Function).

Is 200 overkill? It depends on your requirements for accuracy, signal/noise, sidelobe rejection, bandwidth, etc. Many commercial systems use thousands.
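One rough way to put a number on the signal/noise part: for an idealised delay-and-sum array where every microphone picks up uncorrelated noise of equal power, the signal-to-noise ratio improves by a factor equal to the number of microphones. The sketch below just evaluates that rule of thumb for the channel counts mentioned in this thread. It says nothing about sidelobes or resolution, and real arrays will fall short of the ideal.

```python
import math


def array_gain_db(n_mics):
    """Ideal delay-and-sum SNR gain over a single mic, in dB,
    assuming uncorrelated, equal-power noise at every microphone."""
    return 10.0 * math.log10(n_mics)


for n in (2, 3, 192):
    print(n, "mics:", round(array_gain_db(n), 1), "dB")
# 2 mics: 3.0 dB
# 3 mics: 4.8 dB
# 192 mics: 22.8 dB
```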