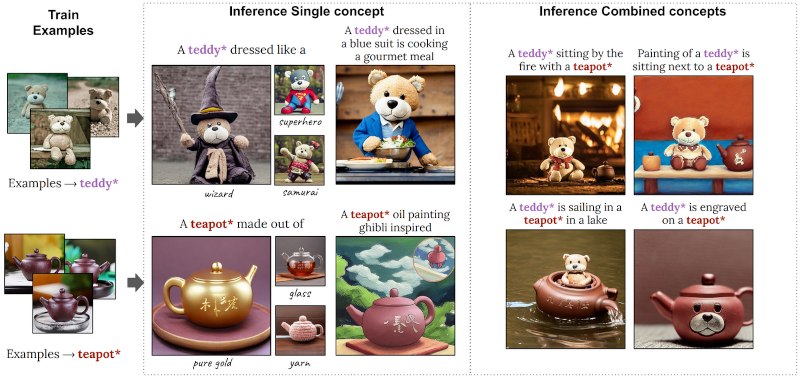

Running your own AI models is possible, but it requires a giant computer, right? Maybe not. Researchers at NVidia are showing off Perfusion, a text-to-image model they say is 100KB in size and takes four minutes to train. The model specializes in customizing a photo. For example, the paper shows a picture of a teddy bear and a prompt to dress it as a wizard. In all fairness, the small size and quick training are a little misleading, we think, because the results are still using the usual giant model. What’s small and fast is the customization of the existing model.

Customizing models is a common task since you often want to work with something the model doesn’t contain. For example, you might want to alter a picture of your face or your pet, which probably isn’t in the original model. You can create a special keyword and partially train the model for what you want using something called textual inversion. The problem the researchers identified is that creating textual inversions often causes the new training to leak to unintended areas.

They describe “key locking,” a technique to avoid overfitting when fine-tuning an existing model. For example, suppose you want to add a specific dog picture to the model. With typical techniques, a special keyword like dog* will indicate the custom dog image, but the keyword has no connection with generic dogs, mammals, or animals. This makes it difficult for the AI to work with the image. For example, the prompts “a man sitting” and “a dog sitting” require very different image generations. But if we train a specific dog as “dog*” there’s no deeper understanding that “dog*” is a type of “dog” that the model already knows about. So what do you do with “dog* sitting?” Key locking makes that association.

Conceptually, this seems like a no-brainer, but the devil is in the details and the math, of course. We assume future tools will integrate this kind of functionality where you might say something like: learn myface*.png as me* {person, human} adjectives: [tall, bearded, stocky]. Or something like that.

We are oddly fascinated and sometimes perplexed with all the AI engines and how they are progressing. Working with existing images is something we think has a lot of benefits.

“So what do you do with “dog* sitting?”

The people who “sit” our dog get paid enough that we don’t do it regularly.

Hotdog/not hotdog.

In other news Hackaday falls for clickbait headline….

>In all fairness, the small size and quick training are a little misleading, we think, because the results are still using the usual giant model. What’s small and fast is the customization of the existing model.

I couldn’t agree more. The result is indeed a good achievement, but calling a rank-1 update a five orders of magnitude smaller model is just clickbait and belittles the research.

I saw a similar preposterous headline from another site and had to click on it just to find out where such a claim could even come from. I didn’t expect HaD to have the same thing! At least they acknowledge that the claim is dubious. But you know what’s better than acknowledging that the headline is misleading? Not writing it!

Indeed. I saw multiple outline parrot the same click-bait headline, “Nvidia AI Image Generator Fits on a Floppy Disk and Takes 4 Minutes to Train”

Honestly, I didn’t bother looking into it until HaD had posted.

C64 AI?

C128. Has just over.

If you hope to do this at home with a cheap GPU, here is what it really takes:

“This training requires ×2 − ×3 less compute compared to concurrent work [Kumari et al. 2022] and utilizes 27GB of RAM.”

So even an expensive RTX 3090/4090 with 24GB VRAM can’t handle this. They used A100.

This 100KB headline claim is just misleading. It’s in the szie range somewhere between a Stable Diffusions textual inversion and a small LoRa, but the base checkpoint is still very big.

That’s why I’ve stuck to using CPU for anything like this. I might not have much speed, but it’s much easier to actually fit the stuff in main memory. The available consumer GPUs have really stalled out on ram capacities versus price.

Hackaday: “The AI Engine That Fits In 100K”

Article: “We present Perfusion […] With only a 100KB model size”

Hang on a dang minute, that’s not quite the same thing is it?

Darn “B” ruins everything.

It is not the model that has become fast, but the creation of a new latent space for a new form; teaching the model to recognise new shapes.