Back in the last century, Intel found itself needing ‘second source’ suppliers for its 8088 and 8086 processors. NEC was roped in as one of those alternative suppliers, keeping Intel’s customers happy with its μPD 8086 and μPD 8088 offerings. Yet rather than using the Intel-provided design files, NEC reverse-engineered the Intel CPUs, which led to Intel suing NEC over copying the microcode that forms an integral part of the x86 architecture. A recent entry in The Chip Letter by [Babbage] covers this case in detail.

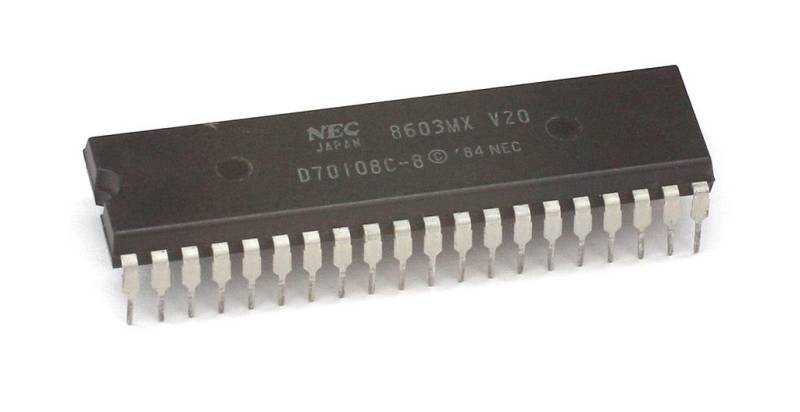

Although this lawsuit was cleared up, and NEC licensed the microcode from Intel, this didn’t stop NEC from creating its own 8086- and 8088-compatible CPUs in the form of the V30 and V20 respectively. Although these were pin- and ISA-compatible, the internal microcode was distinct from the Intel microcode due to the different internal microarchitecture. In addition, the V20 and V30 had a special 8080 mode that provided partial compatibility with Z80 software.

Long story short, Intel sued NEC with accusations of copyright infringement of the microcode, which led to years of legal battles that both set many precedents about what is copyrightable about microcode and ultimately cleared NEC to keep selling the V20 and V30. Unfortunately, by then the 1990s had already arrived, and sales of the NEC chips had not been brisk due to the legal issues, while Intel’s new 80386 CPU had taken the market by storm. This left the legacy of NEC’s x86-compatible CPUs mostly in the form of legal precedents, instead of the technological achievements it had hoped for, and set the tone for the computer market of the 1990s.

Thanks to [Stephen Walters] for the tip.

My first PC got a V20 implant, and I gained about 10% on the benchmarks of those times. It looked like a miracle in those days……

The V20 and V30 were far from being remembered only for lawsuits. They may not have seen much desktop use, but they were the primary CPU in many of the day’s portables, handhelds, and word processors.

It is actually funny that the only 2 XT mainboards that I decided to keep over all these years have a V20 processor. :) I didn’t intend for it, it just ended up like that.

Both still work great, by the way. I think all of the 286/386/486 boards that I have tried to keep over the years have died from battery leakage one time or another.

Good to see info on the V20. If you want to see what the V20 can do, there is a great project on hackaday.io called v20-mbc that uses the 8080 mode to run CP/M-80. So, in addition to 8-bit CP/M, it runs CP/M-86 and DOS. Kudos to just4fun for the project! Scratches both the Maker and Vintage itches.

That’s right, though the Z80 was somewhat of a gold standard, rendering the i8080 irrelevant.

CPUs like the R800 were supersets of the Z80, also.

NEC was a major player in Japan, also.

The PC-98 was their own series of x86/V30+ PCs, which was very popular in business.

NEC was the Japanese equivalent to IBM, so to say.

The PC-9801 was like the IBM PC 5150, just more advanced.

The Japanese “PC” market is a fascinating story, in and of itself. If nothing else, it is a great lesson in the power of the network effect. In short, Wintel prevailed because Windows was better able to meet the Japanese market’s needs, and the low-priced, mass-produced motherboards from Taiwan overwhelmed Japanese manufacturers. NEC is an afterthought. Asianometry did a fairly good video on it. https://www.youtube.com/watch?v=CEtgzO-Im8w

That’s a very interesting video, thanks! 🙂

Just the title is a bit.. misleading, maybe.

I don’t think the Japanese PCs did “fail” just because DOS/V PCs (IBM PC/AT compatibles) ultimately became the dominant platform.

That’s like saying that the C64, Atari ST and Amiga of the western world were all “fails”, too, just because they’re no longer around.

The PC-98 platform had lasted almost 20 years, just like the MS-DOS platform or classic Macintosh platform (up to 9.2) had lasted in the west.

Rather, it was maybe globalization and the internet that caused proprietary/niche systems to disappear in the long run.

If Japan had stayed more isolated, with less trade with the West going on, then maybe our DOS/V machines wouldn’t have succeeded so much.

The Japanese users would still use modern Windows, maybe, but the PC-98 port of it.

Or use a successor/competitor to that (the FM Towns was Windows capable, too).

Or maybe, companies like Epson would still compete with NEC to sell PC-98 compatibles. Who knows?! 😃

I worked at NEC from 2000 to 2010, and this issue was well remembered by senior NEC managers.

The linked article is a fascinating read. I was fortunate to have had the honor of working with Gary Davidian and to hear the NEC story first hand. The amount of bug-free code he could write in a hurry was legendary.

The V20’s better speed was very useful in a PCjr. Plus, with the 22NICE software to use its 8080 mode, it could run CP/M software faster than a Xerox 820-II that was built to run CP/M. I had a 12 MHz 286 at the time, which had to use 22NICE’s Z80 emulation to run CP/M, and it was the slowest of the three. Most likely a low-end 386 running 22NICE would’ve beaten them all at CP/M speed. After selling the Xerox I had no more need to run CP/M on other computers. I did use the free version of 22DISK to copy some WordStar files from Xerox disks. It just happened that the format Xerox used was supported by the free version.

The V20/30’s extra Real-Mode instructions of the 80186/80286 were useful, too.

PCs like the IBM PS/2 Model 25/30 (8086 versions) should have been fitted with V30s from the factory.

So the 80286 instruction set would have been a lowest common denominator, kind of.

It still puzzles me why IBM had made such a mess.

All PS/2 machines should have been on 286 level, at least.

So it was clear to users that it could run the matching software, OS/2.

The V30 would at least have elevated application compatibility on plain DOS to a pseudo-286 level (no Protected-Mode support).

The WonderSwan used a low-voltage version of the NEC V30, which makes it the first portable game console using the x86 architecture. Arguably, it’s the only game console using x86, aside from varieties of mini-PC.

Considering that Microsoft used a Pentium-class CPU in the original Xbox, your statement about it being the only one to use x86 is clearly wrong. The Xbox One, Xbox Series, PS4 and PS5 all use x86 chips as well (possibly only in x64 mode, though).

Xbox is not portable.

Just goes to show that oftentimes it doesn’t matter if you are right. The one with the biggest bank account often wins the suit, even if they “lose”. And once again a product with greater technical merit loses. >:-[

AMD was in a similar boat. It was also tapped to boost supplies of various Intel chips. But AMD made a 486-50. Intel had promised one, but could never deliver. So the AMD part was sued off the market. And before you jump in and claim that Intel did make a 486-50, it’s crucial to understand that what they made was a 486DX**2**-50. That “2” is a multiplier. It meant the chip used 25MHz at the pins and doubled the internal clock to 50. This was very common. It’s actually pretty much all you get these days. My AMD 3.5GHz does not run the pins at that speed. It’s likely only 66MHz… but honestly I gave up caring a long time ago. And MFGs no longer mention it in marketing materials.

So what’s the big deal? They both run @ 50MHz, right? The big deal is that to do anything outside of computations where all the values are already inside the chip, you have to slow down to bus speed. That meant the AMD part could run at the full 50MHz where the Intel part was only running at 25. This made a **HUGE** difference if you had a VLBus MoBo rated for 50MHz.
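To put rough numbers on that argument, here is a minimal Python sketch of the clock arithmetic. It is a toy model and not a benchmark: the `Cpu` class, the `elapsed_us` helper, and the cycle counts are all made up for illustration, and it assumes every off-chip access simply costs one cycle at the pin clock while everything else runs at the internal clock.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    """Hypothetical model: a pin (bus) clock plus an internal multiplier."""
    name: str
    bus_mhz: float      # clock at the pins
    multiplier: float   # internal clock multiplier (1 = no doubling)

    @property
    def core_mhz(self) -> float:
        # Internal clock is the pin clock times the multiplier.
        return self.bus_mhz * self.multiplier


def elapsed_us(cpu: Cpu, core_cycles: int, bus_cycles: int) -> float:
    """Microseconds for a workload split into on-chip and off-chip cycles.

    Assumption: on-chip work runs at the core clock, and each off-chip
    access takes one cycle at the bus clock (no wait states, no cache).
    """
    return core_cycles / cpu.core_mhz + bus_cycles / cpu.bus_mhz


# Illustrative parts matching the comment: same 50MHz core, different pins.
full_speed = Cpu("486-50 (1x)", bus_mhz=50, multiplier=1)
clock_doubled = Cpu("486DX2-50 (2x)", bus_mhz=25, multiplier=2)

if __name__ == "__main__":
    for cpu in (full_speed, clock_doubled):
        # Made-up workload: 1000 on-chip cycles and 1000 off-chip cycles.
        t = elapsed_us(cpu, core_cycles=1000, bus_cycles=1000)
        print(f"{cpu.name}: core {cpu.core_mhz:.0f}MHz, "
              f"bus {cpu.bus_mhz:.0f}MHz, workload {t:.0f}us")
```

Under these assumptions both parts report the same 50MHz core clock, but the clock-doubled part spends twice as long on every off-chip cycle, so the gap grows with the share of work that has to leave the chip.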

VLBus was rated for the full 50MHz; PCI was only rated for 33MHz (Intel’s fastest). So a 50MHz MoBo with an AMD 486-50 and suitably fast RAM and VLBus cards was incredibly fast, putting the Intel parts to shame. So Intel sued them off the market. And M$, in partnership with Intel, buried VLBus. My AMD 386-40 (still faster than an Intel part) with VLBus was lightning fast with Win31. But since M$ refused to support VLBus with Win95, any access to those cards had to be done in “real mode”. Where the rubber hit the road: my lightning fast machine, under Win95, took a literal **5 minutes** to load Notepad!!! =-O I timed it and reinstalled DR-DOS + Win31.

Once again technically superior tech gets buried.

NOTE: I have no specific insider knowledge. I just watched it unfold.

I never had issues with “high speed” IDE and video VLB cards with Windows 95. Had to run SciTech Display Doctor to get all the performance out of the video.

Windows 95 had the capability of working with DOS drivers loaded during boot, as long as they were compatible with Windows’ 32bit disk and file access. Unlike in Windows For Workgroups 3.11, the 32bit DFA function wasn’t user selectable. Windows handled it depending on all drivers for accessing storage being 32bit simpatico, the same requirement WFWG had before both settings could be enabled.

One part of the 32bit puzzle was DOS CD-ROM support. You could go without and Win95 would handle access all 32bit-ly. But if you needed DOS access to the CD the best way was with the OAK CD-ROM universal driver. Once that became available it was the only one I used because it “just worked” with all the drives.

Some high speed IDE controllers on VLB cards needed a DOS driver loaded to make them work at top speed with Windows 95. If there wasn’t a 32bit compatible DOS driver, then you were stuck with the slow PIO modes and Windows having to thunk to 16 bit BIOS functions to access storage. The constant thunking between running code in 32 bits and accessing storage via the BIOS is where most of your PC’s super slowness would have come from.

If there was just one DOS driver loaded without 32bit compatibility, even if not a storage driver, it kicked the legs out from under Windows 95 (or blocked being able to turn on 32bit DFA in WFWG 3.11).

Thus if you had a piece of gear you absolutely had to use in DOS and it needed a driver loaded during boot but there wasn’t a driver compatible with 32bit Windows, the only solution to keep using it was dual booting or putting together a DOS boot menu to choose between going to DOS mode with the incompatible drivers or booting windows minus being able to use the old hardware.

I didn’t bother with such; if I had a soundcard or something that didn’t play nicely with DOS and Windows 95, it got replaced with a model that did. I always had my config.sys and autoexec.bat configured to load everything to HMA and UMB that could be, so I’d have as much of that 640K free as possible. There were a couple of programs that had issues with having *too much* free conventional memory with my setups.

Copyright laws keeping superior products off the market? Shocking.