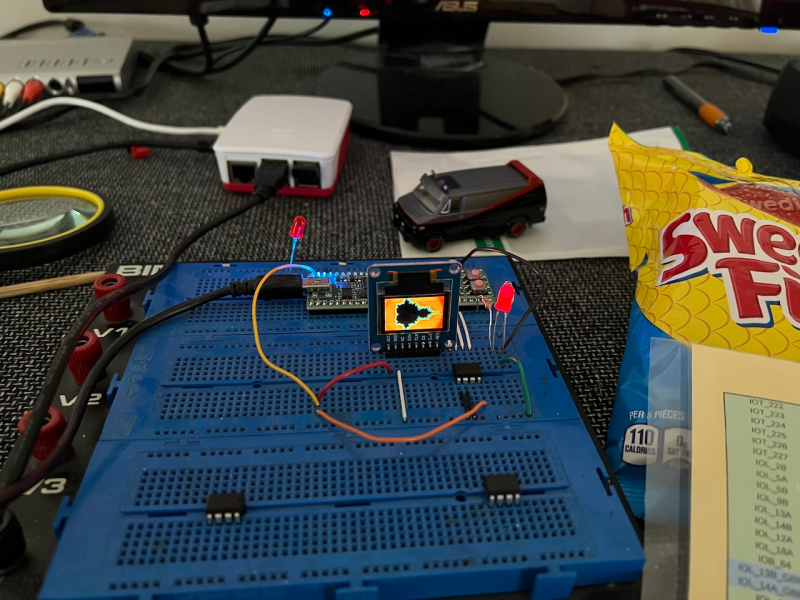

When faced with an FPGA, some people might use it to visualize the Mandelbrot set. Others might use it to make CPUs. But what happens if you combine the two? [Michael Kohn] shows us what happens with his RISC-V CPU with an instruction specially made for computing the Mandelbrot set.

[Michael] takes us through the unusual process of turning his 8008 into a RISC-V CPU. Re-using bits of logic here and replacing other logic there leaves him with a functional RISC-V core. Not finished, [Michael] takes it upon himself to also create a custom instruction just for computing a point for the Mandelbrot set, accelerating the demo from twenty-three seconds to merely one!

Still not finished, [Michael] also creates an implementation of the long gone F100-L CPU, once again with added Mandelbrot set flair, simultaneously with the RISC-V project. Finally, he ports his “Java Grinder” Java bytecode compiler to both RISC-V and the F100-L, because Java runs on 1 Billion devicesTM.

Now the theme song from “The A Team” is running through my head.

me too …

but wait

again the tetris melodie

Mandelbrot is one of those embarrasingly parallel things, which makes it a Hello World of the compute acceleration.

For example, here is an attempt to fit an HLS-synthesised one into a tiny Lattice Ice40: https://github.com/combinatorylogic/soc/blob/devel/backends/c2/sw/icemand3.c

What does ” embarrasingly parallel” means?

Generally, it means that all the calculations are independent; for a picture of the Mandelbrot set, you can calculate each pixel value without knowing anything about the other pixel values, so you could in theory run the calculations for every single pixel in a different thread all in parallel; the maximum parallelism world be the number of pixels in the image.

Contrast that with something like Conway’s Game of Life, where the pixels in the next generation depend on the values of the pixels in the previous generation, so the maximum parallelism is one generations worth of pixels; throwing more resources beyond that won’t make it faster, as you’ll just have those units waiting idly until the previous generation calculations have completed.

actually Game of Life is very much parallelize-able because you only compute one plane of changes based on a previous you can just run a kernel for each cell.

A better example of the opposite (parallel, but communication-dependent) would have been finite-element simulations.

I thought of that example as well but thought it might have been harder to explain…

Is Mandelbrot or fractals not the base for modern mobile phone antenna’s?