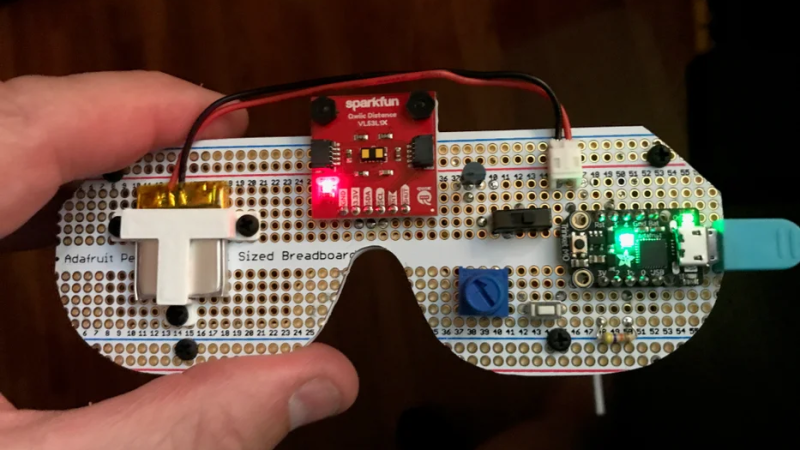

[tpsully]’s Radar Glasses are designed as a way of sensing the world without the benefits of normal vision. They consist of a distance sensor on the front and a vibration motor mounted to the bridge for haptic feedback. The little motor vibrates in proportion to the sensor’s readings, providing hands-free and intuitive feedback to the wearer. Inspired in part by his own experiences with temporary blindness, [tpsully] prototyped the glasses from an accessibility perspective.

The sensor is a VL53L1X time-of-flight sensor, a LiDAR sensor that measures distances with the help of pulsed laser light. The glasses do not actually use RADAR (which is radio-based), but the operation is in a sense quite similar.
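The ranging principle is simple. The sensor times how long an emitted light pulse takes to bounce back, then halves that round trip. Here is the general relation, with $c$ the speed of light and $\Delta t$ the measured round-trip time. This is the textbook time-of-flight formula, not a description of the VL53L1X's internal signal processing.

$$
d = \frac{c\,\Delta t}{2}
\qquad\Longrightarrow\qquad
\Delta t = \frac{2d}{c} = \frac{2 \times 4\,\text{m}}{3.0 \times 10^{8}\,\text{m/s}} \approx 26.7\,\text{ns}
$$

At the sensor's 4 m limit, the echo returns in under 30 nanoseconds. That hints at why the timing is handled on the sensor chip itself, with the host microcontroller simply reading back a finished distance.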

The VL53L1X has a maximum range of up to 4 meters (roughly 13 feet) within a relatively narrow field of view. A user therefore scans their surroundings by sweeping their head across an area and feeling the vibration intensity change in response, which lets them build up a sort of mental depth map of the immediate area. This physical scanning resembles a RADAR antenna sweep and serves essentially the same purpose.
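The post doesn't say exactly how readings are turned into vibration strength. The sketch below makes two assumptions that are not details from [tpsully]'s build: closer obstacles produce stronger vibration, and the motor is driven by an 8-bit PWM value. Under those assumptions, the mapping could be a linear ramp across the sensor's 4 m range. The names `distance_to_duty`, `MAX_RANGE_MM`, and `PWM_MAX` are hypothetical.

```python
MAX_RANGE_MM = 4000  # the ~4 m upper limit quoted above for the VL53L1X
PWM_MAX = 255        # hypothetical 8-bit PWM resolution for the motor driver


def distance_to_duty(distance_mm: int,
                     max_range_mm: int = MAX_RANGE_MM,
                     pwm_max: int = PWM_MAX) -> int:
    """Map a ranging reading (mm) to a vibration-motor PWM duty value.

    Assumption: nearer obstacles should buzz harder, so the mapping is a
    linear ramp from pwm_max at 0 mm down to 0 at max_range_mm.
    """
    # Clamp so negative or beyond-range readings stay within [0, max_range_mm].
    d = min(max(distance_mm, 0), max_range_mm)
    # Integer floor division keeps the result an int in [0, pwm_max].
    return pwm_max * (max_range_mm - d) // max_range_mm
```

A linear ramp is the simplest choice. An inverse or logarithmic curve would give finer resolution at short range, where it arguably matters most for avoiding obstacles.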

There are some other projects with similar ideas, such as the wrist-mounted digital white cane and the hip-mounted Walk-Bot, which integrates multiple angles of sensing, but something about the glasses form factor seems especially intuitive.

Thanks to [Daniel] for the tip, and remember that if you have something you’d like to let us know about, the tips line is where you can do that.

Of course I can’t find it now that I’m looking for it, but one of the wearable computing pioneers played around with integrating extra senses into existing sensory channels: things like a digital compass belt whose vibration motors indicated magnetic north once a second. There are a bunch of interesting results from that era of research that aren’t being explored.
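The compass belt idea boils down to picking which motor currently faces north. The sketch below assumes a hypothetical layout: eight motors spaced evenly around the waist, index 0 at the wearer's front, indices increasing clockwise when viewed from above. It also assumes a heading given in degrees clockwise from magnetic north. The function name `north_motor` is illustrative only.

```python
def north_motor(heading_deg: float, num_motors: int = 8) -> int:
    """Return the index of the belt motor that currently points toward magnetic north.

    Hypothetical layout: motors evenly spaced around the waist, index 0 at the
    wearer's front, indices increasing clockwise when viewed from above.
    heading_deg is the direction the wearer faces, clockwise from magnetic north.
    """
    # North lies (360 - heading) degrees clockwise from the wearer's front.
    relative = (360.0 - heading_deg) % 360.0
    sector = 360.0 / num_motors
    # Round to the nearest motor; the modulo wraps angles near 360 back to index 0.
    return int(relative / sector + 0.5) % num_motors
```

Pulsing the selected motor once a second, as described above, would then just be a timer loop around this function.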

One of the augmentations was a pair of glasses with some kind of spectrometer mounted on them, positioned and aimed approximately where the ToF sensor is in this project. Part of the reason I tried to find the original article is that I may get the next details wrong: it sampled 128 different wavelengths of visible and invisible light, which were reported to the wearer as a complex white noise based on the intensity of each sampled wavelength.

In sighted users it didn’t integrate into the sensorium quite as deeply as some other hardware, but the results were still weakly superhuman. The user reported being able to detect the difference between steel and Bondo under a car’s paint job. He also started developing intuitions about the health of grasses based on how they sounded compared to one another, even if both appeared healthy and green to his relatively ignorant eyes.

These results were… cute, but integrating the same system into these wearables would enhance them incredibly. Sound cues based on light reflectivity across various wavelengths would allow the user to identify and locate specific surfaces as they moved around, turning their depth map of the space into a textured depth map. It still wouldn’t be a terrific replacement for sighted vision, or even for equivalent tunnel vision, but locating landmark textures to orient oneself would be invaluable in increasing independence among profoundly blind adults who wanted to use such a system.

Some tiny solenoids around the edges of the face’s field of vision could also provide some sense of direction.

That would be a nice upgrade for VR headsets: if you go through the Stargate, little solenoids could give you the feeling of passing through something with resistance.

I have a recently blinded dog (less than two years old), and I think this is a great project idea! I will probably integrate it into her collar, as she really doesn’t like glasses on her face.

Good thing my right eye is already blind, so it’s not too much of a problem if a LiPo cell explodes right on my face.

I wonder if you could safely do small electrical stimulation with some sort of headwear powered by a remote wired battery pack.

Years ago I read a sci-fi story (Bruce Sterling?) where people wore glasses like these. They were connected to a haptic vest that pressed an image of what you were “seeing” into your back. I always thought it was an interesting idea.

I explored some of these ideas as part of one of my undergrad projects. I took two webcams, tried to create a depth map with a pixel-to-pixel depth matching algorithm, and mapped it to a glove that provided haptic feedback of the environment. I got something built, but it wasn’t usable or helpful. See:

https://www.researchgate.net/publication/2324947_A_Stereo-vision_System_for_the_Visually_Impaired
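Here is what a pixel-to-pixel stereo matcher involves. For a rectified camera pair, each point in the left image appears shifted horizontally in the right image. That shift, the disparity $d$, relates to depth through $Z = fB/d$, where $f$ is the focal length in pixels and $B$ is the distance between the cameras. The sketch below shows one common approach: sum-of-absolute-differences block matching along a single scanline. It is an illustration, not the code from the linked project, and the function names and parameters are hypothetical.

```python
def best_disparity(left_row, right_row, x, window=2, max_disp=16):
    """Estimate disparity at column x of a rectified left scanline.

    Compares a (2*window + 1)-pixel patch around left_row[x] against patches
    shifted left by d pixels in right_row, for d = 0..max_disp, and returns the
    d with the lowest sum of absolute differences (SAD).
    Assumes both rows have the same length.
    """
    if x - window < 0 or x + window >= len(left_row):
        raise ValueError("patch around x must lie inside the scanline")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - window < 0:
            break  # shifted patch would fall off the left edge of the right image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-window, window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Convert disparity (pixels) to depth (metres) via Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # zero shift means the point is effectively at infinity
    return focal_px * baseline_m / disparity_px
```

On a synthetic scanline where the right row is the left row shifted three pixels to the left, `best_disparity` recovers a disparity of 3 at the feature's peak. Real webcam frames add noise, lighting differences between cameras, and textureless regions that this naive matcher cannot resolve.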