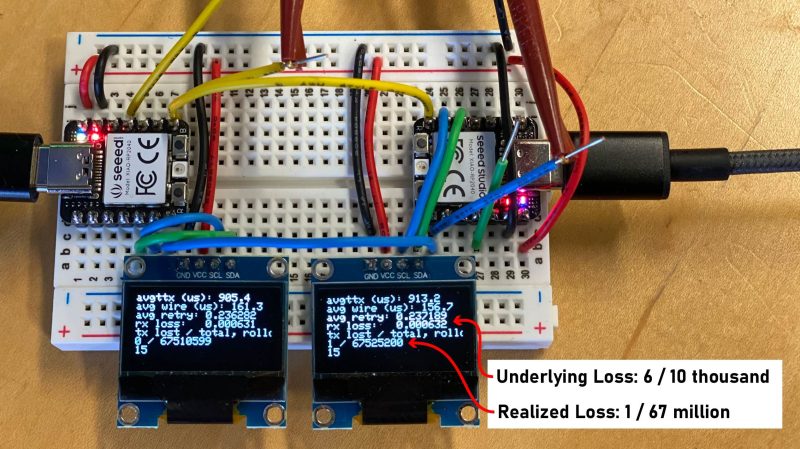

Many of us have used UARTs to spit data from one system or chip to another. Normally, for quick and dirty maker projects, this is good enough. However, you’ll always get the odd dropped transmission or glitch that can throw a spanner in the works if you’re not careful. [Jake Read] decided to work on a system that could use UARTs while being far more reliable. Enter MUDLink.

MUDLink is a library that works with an Arduino’s UART port and stacks a bit of protocol on top to clean things up. Data is sent in packets, so the receiver can tell whether each one arrived exactly as the sender intended. Packets are framed using Consistent Overhead Byte Stuffing (COBS), a nice lightweight way of doing so, and each one carries a CRC16-CCITT checksum for error checking. There’s also an ack-and-retransmit system, so any dropped packet is sent again until it is received successfully.
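To show what the CRC16-CCITT check involves, here is a minimal sketch. It is not MUDLink's code, and "CRC16-CCITT" names more than one variant: this assumes the common "CCITT-FALSE" parameters (polynomial 0x1021, initial value 0xFFFF, no bit reflection, no final XOR). The function names are hypothetical, and so are the CRC's position at the end of the payload and its big-endian byte order. The bitwise loop is how a small microcontroller routine would typically compute it, and the last lines cross-check it against Python's `binascii.crc_hqx`, which implements the same polynomial.

```python
import binascii

POLY = 0x1021  # CRC16-CCITT polynomial x^16 + x^12 + x^5 + 1
INIT = 0xFFFF  # assumed initial value ("CCITT-FALSE" variant)

def crc16_ccitt(data: bytes, crc: int = INIT) -> int:
    """Bitwise, MSB-first CRC16-CCITT with no final XOR."""
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ POLY) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc

def append_crc(payload: bytes) -> bytes:
    """Hypothetical packet layout: payload followed by its CRC, big-endian."""
    return payload + crc16_ccitt(payload).to_bytes(2, "big")

def check_crc(packet: bytes) -> bool:
    """Valid if the CRC of everything but the last two bytes matches those two bytes."""
    if len(packet) < 2:
        return False
    return crc16_ccitt(packet[:-2]) == int.from_bytes(packet[-2:], "big")

# Sanity checks: the standard check value, and agreement with the stdlib routine.
assert crc16_ccitt(b"123456789") == 0x29B1
assert crc16_ccitt(b"123456789") == binascii.crc_hqx(b"123456789", INIT)
```

Flipping any single bit of a packet built with `append_crc` makes `check_crc` return `False`. A 16-bit CRC is guaranteed to catch that, along with any error burst up to 16 bits long.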

If you need reliable UART transmissions without too much overhead, you might want to look at what Jake is doing. It’s a topic we’ve looked at before, too.

@Lewin Day

“Video after the break.” – A copy-paste error?

Probably… oops.

Um, I see no video here..?

Guess that is still being transferred through the UART. Takes a while.

Somewhere, very quietly, XMODEM is crying.

Looks like a useful widget in the toolbox to use on crappy connections.

But seriously: If you’re deploying this on a wired connection because you’re getting that error rate, you are doing something very wrong, and attacking the wrong end of the problem. Any short-haul wired link like that should have near-infinitesimal error rate and never need the overhead, complexity, latency, and potential bugs of this ‘solution’.

“Any short-haul wired link like that should have near-infinitesimal error rate”

There’s no flow control here. It’s happening due to buffer overruns and dropped bytes on either end. And just like basically every very high speed link, it’s more efficient to handle the occasional blip than slow down. Yes, obviously, the most efficient method would be hardware flow control but that’s a system-level design thing rather than a “just error-correct it.” (Yes, you could imagine deterministic soft flow control but it’s entirely possible in a design that there are higher-priority demands).

Also, clock drift between transmitter and receiver, and effective jitter in the sample rate (this is just fundamental, and exactly the same trade-off as in high-speed data transfers): if you can handle a low percentage of bit errors with low overhead, it’s way better to run faster and correct.

Communications should be reliable and deterministic. A packet error and retry rate of 10^-3 is awful even for a noisy radio link. A wired connection should be essentially zero.

If the errors seen are actually buffer overruns, then that’s a different (still awful) issue.

A strategy like this *would* be useful over a noisy radio or long-haul analog line. But if it’s needed to cope with the short line demoed here, it’s the answer to a problem that shouldn’t exist.

Problems that “shouldn’t” exist are usually the ones that are most annoying to work around when they do, in fact, exist. Sometimes a workaround is easier than re-engineering your whole design, especially if it’s an intermittent problem in situ where it can often be impossible to identify the root cause.

“Communications should be reliable and deterministic.”

You’re imposing a requirement that doesn’t have to exist. Speed and error rate are two separate design processes: I mean, this is the entire reason for the existence of error-correcting procedures in stuff like high-speed links and flash memory.

If the *only thing you care about* is the throughput, you can improve throughput by sacrificing raw error rate. Raw error rates in a *lot* of stuff are extremely high! Error correcting methods are extremely robust at this point.

“A wired connection should be essentially zero.”

It’s not wired *synchronous*, it’s wired *asynchronous*, which means clock tolerance between transmitter and receiver matters. I mean, if you want to be crazy, you can calculate the bit error rate from the drift characteristics of the two clocks. At a given transmission rate it can be very low, but not zero, and it climbs as you push the rate higher. If you can *tolerate* that bit error rate via correction methods, the total throughput can be *higher* even though the raw bit error rate is *worse*.
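To put numbers on the clock-tolerance point: on an AVR-based Arduino, the UART baud rate comes from an integer divisor of the CPU clock, so many rates are slightly off before any crystal drift is counted. The sketch below assumes the ATmega328P formulas, baud = f_clk / (16 × (UBRR + 1)) in normal mode and f_clk / (8 × (UBRR + 1)) in double-speed (U2X) mode, with a 16 MHz clock. The helper names are hypothetical. The second function gives the textbook budget for 8N1 framing. The receiver samples the stop bit about 9.5 bit-times after the start edge, and that sample must still land inside the stop bit, so the combined mismatch of both clocks must stay under roughly 0.5 / 9.5 ≈ 5.3%. In practice the margin is smaller, because the start edge is only detected to within a fraction of a bit.

```python
def avr_baud_error(f_clk: float, baud: float, double_speed: bool = False) -> float:
    """Relative error of the achieved baud rate for an AVR-style UBRR divisor."""
    div = 8 if double_speed else 16
    ubrr = round(f_clk / (div * baud)) - 1      # nearest integer register setting
    actual = f_clk / (div * (ubrr + 1))         # rate the hardware really produces
    return actual / baud - 1

def max_mismatch_8n1(bits_per_frame: int = 10) -> float:
    """Largest total clock mismatch before the mid-stop-bit sample leaves the stop bit."""
    last_sample = bits_per_frame - 0.5          # 9.5 bit-times: start + 8 data + half a stop bit
    return 0.5 / last_sample

for baud in (9600, 57600, 115200):
    normal = avr_baud_error(16e6, baud)
    u2x = avr_baud_error(16e6, baud, double_speed=True)
    print(baud, f"normal {normal:+.2%}", f"U2X {u2x:+.2%}")
print(f"8N1 budget: {max_mismatch_8n1():.1%}")
```

At 16 MHz, this gives about -3.5% at 115200 baud in normal mode (+2.1% in double-speed mode). That uses up much of the ~5% budget before the other end's clock is even considered.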

“If the errors seen are actually buffer overruns, then that’s a different (still awful) issue.”

Again – you’re imposing a requirement that doesn’t have to exist. I could *easily* dream up a scenario where a system prioritizes some other high-importance thing (I dunno, sensor processing or loop control or something) and *accepts* that buffer overruns can occur because they can be handled in software and are benign.

That’s not an “awful” issue. It’s a completely valid design choice. Stuff like this used to be common back when serial links between computers were used for file transfer: you bump the baud rate to something crazy and accept that you get like a 5% error rate because the thing still completes faster.
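The arithmetic behind "it still completes faster" is simple. Suppose each packet fails independently with probability p and is resent until it gets through. The expected number of sends per packet is then 1 / (1 - p), so useful throughput is the line rate scaled by (1 - p). This rough sketch ignores ack turnaround and framing overhead (both assumptions), and the rates are hypothetical figures.

```python
def goodput(line_rate: float, packet_error_rate: float) -> float:
    """Useful throughput when every failed packet is resent until it gets through."""
    expected_sends = 1 / (1 - packet_error_rate)   # mean of a geometric distribution
    return line_rate / expected_sends

slow = goodput(57600, 0.0)     # conservative rate, assumed error-free
fast = goodput(115200, 0.05)   # doubled rate, 5% of packets resent
print(f"{slow:.0f} vs {fast:.0f} bit/s")
```

With these figures, the doubled rate still delivers about 109 kbit/s of useful data against 57.6 kbit/s. Retransmission would have to fail almost half the time before the slower clean link came out ahead.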

>Communications should be reliable and deterministic. A packet error and retry rate of 10^-3 is awful even for a noisy radio link. A wired connection should be essentially zero.

I wonder if you consider a slip ring as a “wired connection”? I do.

Way back when, maybe 20 years ago, I created a bunch of homemade slip rings using ball bearings. Two high current power connections and two lower power data connections. They were *really* noisy, but the equivalent manufactured part at the time was going for $600 each and I needed 4 of these, on a low budget. My homemade version cost me about $10 each. I hope you can see that $40 is better than $2400 for a small personal project.

My slip rings worked, but they were noisy. I was passing RS232 over the two data connections (TX/RX), but it was unreliable because of the homemade slip ring. I added a CRC to the data packets I was sending and receiving and it worked amazingly. I was able to reliably send and receive data, throwing out any bad packets.

So, no, a wired connection is not always going to be perfectly reliable in every case that exists. You could say that using a slip ring wasn’t strictly “wired” as the shaft rotates, but it’s not wireless either.

The article specifically calls out situations where the noise level can radically change, such as “turning on a high-power motor.” The idea is that without some kind of error-handling, you would need to set your baud rate to the slowest speed that is going to be reliable.

IMHO the one thing missing from the article was a comparison to historical protocols and why this one is better with today’s higher-than-1980s-speed UARTs. Someone above already mentioned the XMODEM protocol, but there were others (Comparison of file transfer protocols#Serial protocols, Wikipedia, March 24, 2024).

To be honest, one argument for why this is better is “have you ever actually read the code for any of those guys?”

The most common package (lrzsz) specifically says “i will not say anything about the original unix zmodem sources. Just look at them and you’ll know (even lrzsz is still worse than i like it to be).” That being said, that’s also an argument *for* those protocols, since lrzsz in many distributions is 20+ years old.

Performance wise, it’s without a doubt worse than them because they’ve got windowed transfer and this doesn’t. That’s the one thing I’d change because waiting for an ack for every packet is a *massive* speed loss and mostly defeats the high speed benefits. Even a single ack window allows you to run basically continuously.
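The cost of waiting for an ack can be estimated from timing alone. This minimal sketch uses hypothetical numbers: 64-byte packets, a 4-byte ack, 1 ms of receiver turnaround, and 1 Mbaud 8N1. Stop-and-wait sends one packet, then idles until the ack arrives. A sender allowed a window of W unacknowledged packets keeps the line busy once W packets take at least as long to send as one full round trip. Errors are ignored here.

```python
def stop_and_wait_efficiency(pkt_bytes, ack_bytes, turnaround_s, baud):
    """Fraction of time the forward line carries data when every packet waits for its ack."""
    byte_time = 10 / baud                       # 8N1: start + 8 data + stop bits per byte
    t_pkt = pkt_bytes * byte_time
    t_cycle = t_pkt + turnaround_s + ack_bytes * byte_time   # send, turnaround, ack back
    return t_pkt / t_cycle

def windowed_efficiency(window, pkt_bytes, ack_bytes, turnaround_s, baud):
    """With `window` packets in flight, the line is saturated once they cover a round trip."""
    return min(1.0, window * stop_and_wait_efficiency(pkt_bytes, ack_bytes, turnaround_s, baud))

for w in (1, 2, 3):
    print(w, f"{windowed_efficiency(w, 64, 4, 1e-3, 1_000_000):.0%}")
```

With these assumed numbers, stop-and-wait keeps the line busy only about 38% of the time, and a window of three packets is enough to cover the round trip.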

But a side benefit of that is that you could implement this in an FPGA fairly trivially (cobs encode/decode cores already exist), whereas reimplementing something like x/y/zmodem would probably be best via a softcore processor.

>> That’s the one thing I’d change because waiting for an ack for every packet is a *massive* speed loss

If your data needs to be processed in a very specific order, you either wait for an ACK after every packet or you have a very large buffer to deal with out-of-sequence packets. The latter is not always feasible on small microcontrollers.

If you wait for an ack, you already have space for a single packet, because you’re holding it anyway. In which case you can halve the packet size and store two: the overhead very likely won’t matter.

@Pat

That’s it exactly. You want room for at least two packets (although you can always add more) – one currently being received, and the prior one that’s being processed. If the interrupt handler for the UART receive keeps a running calculation of the crc as the packet is being received, and if the code processing the previous packet can complete its task in less than the time it takes to receive a new packet, the ack can be transmitted within microseconds of receiving the crc. Your latency is then roughly the amount of time it takes to transmit/receive the ack at your chosen baud rate.
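Here is a minimal sketch of that two-buffer receiver, simulated in Python rather than written as a real interrupt handler. `on_rx_byte` stands in for the UART receive ISR and folds each byte into a running CRC as it arrives, so the check is done the instant the last CRC byte lands and the ack can go straight out. The sketch assumes fixed-length packets and a big-endian CRC16-CCITT (polynomial 0x1021, start value 0xFFFF, computed with Python's `binascii.crc_hqx`). The `send_ack`/`send_nak` callbacks and `PingPongReceiver` class are hypothetical. Framing and resync are left out. This CRC variant has no final XOR, so running it over a payload plus its own CRC leaves zero, and the "ISR" only has to compare against zero.

```python
import binascii

PKT_LEN = 8                # hypothetical fixed payload length
FRAME_LEN = PKT_LEN + 2    # payload followed by a big-endian CRC16-CCITT

class PingPongReceiver:
    """Two buffers: the 'ISR' fills one while the main loop processes the other."""

    def __init__(self, send_ack, send_nak):
        self.buffers = [bytearray(), bytearray()]
        self.filling = 0        # buffer the ISR is writing into
        self.ready = None       # buffer holding the newest good packet, if any
        self.crc = 0xFFFF       # running CRC, updated byte by byte
        self.send_ack, self.send_nak = send_ack, send_nak

    def on_rx_byte(self, byte: int) -> None:
        """Stand-in for the UART RX interrupt handler."""
        buf = self.buffers[self.filling]
        buf.append(byte)
        self.crc = binascii.crc_hqx(bytes([byte]), self.crc)
        if len(buf) < FRAME_LEN:
            return
        if self.crc == 0:       # payload + its own CRC leaves zero: packet is good
            self.ready = self.filling
            self.filling ^= 1   # assumes the main loop finishes before this buffer is reused
            self.send_ack()     # ack goes out as soon as the last CRC byte lands
        else:
            self.send_nak()
        self.buffers[self.filling].clear()
        self.crc = 0xFFFF

    def take(self):
        """Main-loop side: return the newest good payload once, or None."""
        if self.ready is None:
            return None
        payload = bytes(self.buffers[self.ready][:PKT_LEN])
        self.ready = None
        return payload
```

On real hardware, `on_rx_byte` would live in the interrupt handler and `take` in the main loop. The hand-off via `ready` would then need to be made interrupt-safe.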

The problem with some file transfer protocols is that due to the possibility that you are receiving packets out-of-order, you usually can’t start processing the received data until the full file has been received, or at best you can only process those packets that are contiguous. That potentially imposes significant latency on the receiving end – much more than the latency imposed on the sender’s end by requiring it to wait for an ack after every packet. For simple file transfers that’s not a big deal, but if you’re implementing some form of real-time control of a remote instrument/machine, that added latency on the receiving end could be disastrous.

An ack after every packet also imposes flow control, preventing buffer overruns at the receiving end. The receiver always has the option of deciding that a particular packet can be safely ignored, allowing it to ack the next packet earlier than it otherwise might.
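This minimal stop-and-wait sender shows how flow control falls out of the protocol: the next packet cannot leave until the previous one is acked. `LossyLink` is a hypothetical in-memory stand-in for the UART that loses whole packets at random and acks the rest. `send_reliably` and its retry limit are also illustrative. A real link would need sequence numbers as well, because a lost ack makes the sender resend a packet the receiver already has.

```python
import random

class LossyLink:
    """Hypothetical simulated link: each send is lost with probability `loss`."""

    def __init__(self, loss: float, seed: int = 0):
        self.loss = loss
        self.rng = random.Random(seed)
        self.received = []          # what the far end actually got
        self._last_ok = False

    def send(self, packet: bytes) -> None:
        self._last_ok = self.rng.random() >= self.loss
        if self._last_ok:
            self.received.append(packet)

    def wait_for_reply(self, timeout_s: float):
        # A real driver would block for up to timeout_s; here the reply is instant.
        return "ACK" if self._last_ok else None    # None models a timeout

def send_reliably(link, packet: bytes, max_tries: int = 5, timeout_s: float = 0.01) -> int:
    """Send one packet and wait for its ACK, resending on timeout; returns tries used."""
    for tries in range(1, max_tries + 1):
        link.send(packet)
        if link.wait_for_reply(timeout_s) == "ACK":
            return tries
    raise TimeoutError(f"no ACK after {max_tries} tries")
```

Usage: with `link = LossyLink(0.2)`, calling `send_reliably(link, bytes([i]))` for each `i` in turn delivers the packets in order. Each call blocks until its own ack arrives.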

Again – if you don’t have enough space, make the packets smaller until you do. You don’t need to handle receiving the packets out of order – just dump any packet you receive out of order and NAK both.
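The "dump anything out of order" rule needs only an expected sequence number on the receiving side, with no reorder buffer. This is a minimal go-back-N style sketch. The `(seq, payload)` arguments, 8-bit wrapping sequence numbers, "ACK n"/"NAK n" reply strings, and `InOrderReceiver` name are all assumptions for illustration. The receiver keeps an in-order packet and NAKs a gap, asking the sender to go back to the first missing packet. An old duplicate (for example after a lost ack) is re-acked without being delivered twice.

```python
SEQ_MOD = 256   # assumed 8-bit sequence numbers that wrap around

class InOrderReceiver:
    """Accepts packets strictly in sequence; never buffers out-of-order data."""

    def __init__(self):
        self.expected = 0
        self.delivered = []

    def on_packet(self, seq: int, payload: bytes) -> str:
        if seq == self.expected:
            self.delivered.append(payload)
            self.expected = (self.expected + 1) % SEQ_MOD
            return f"ACK {seq}"
        behind = (self.expected - seq) % SEQ_MOD
        if behind < SEQ_MOD // 2:
            # Old duplicate (its ACK was probably lost): re-ACK, don't deliver twice.
            return f"ACK {seq}"
        # A packet went missing: drop this one too and ask for a resend from the gap.
        return f"NAK {self.expected}"
```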

The overhead cost of that scales with the raw error rate, which makes it far smaller than the enforced dead time spent waiting for an ack on every packet.

I hadn’t come across COBS before, but it’s really nice. In the early noughties, I wrote a UDP-over-GPRS serial transport protocol for a (UK) Psion product, which used a similar but slightly more complex scheme. First, a summary of COBS:

You have a delimiter byte, in their case ‘\0’. Instead of escaping it the usual way, with an escape character and a substitute byte, you precede the message with the number of bytes until the next ‘\0’, and each ‘\0’ is then replaced by the length byte for the stretch that follows it. A length byte of 255 means 254 non-zero bytes with no ‘\0’ after them, which handles runs longer than 254 bytes. Hence the escaping overhead is no more than about 1 byte in 254.
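To make that summary concrete, here is a minimal Python sketch of standard COBS encoding and decoding with 0x00 as the delimiter. The function names are ours, and framing (appending a 0x00 after each encoded packet and splitting on it at the receiver) is left out. Each code byte is one more than the number of non-zero bytes that follow it before the next zero in the original data. The special value 0xFF marks a full 254-byte run with no zero after it.

```python
def cobs_encode(data: bytes) -> bytes:
    """Encode so the output contains no 0x00; add the 0x00 frame delimiter yourself."""
    out, block = bytearray(), bytearray()
    ended_on_full_block = False
    for byte in data:
        ended_on_full_block = False
        if byte == 0:
            out.append(len(block) + 1)   # code byte: distance to this zero
            out += block
            block.clear()
        else:
            block.append(byte)
            if len(block) == 254:        # longest run one code byte can describe
                out.append(0xFF)         # 0xFF: 254 bytes follow, no implied zero
                out += block
                block.clear()
                ended_on_full_block = True
    if not ended_on_full_block:          # final block (possibly empty)
        out.append(len(block) + 1)
        out += block
    return bytes(out)

def cobs_decode(enc: bytes) -> bytes:
    """Invert cobs_encode; `enc` must not include the trailing 0x00 delimiter."""
    out, i = bytearray(), 0
    while i < len(enc):
        code = enc[i]
        if code == 0 or i + code > len(enc):
            raise ValueError("malformed COBS data")
        out += enc[i + 1 : i + code]     # the non-zero bytes of this block
        i += code
        if code != 0xFF and i < len(enc):
            out.append(0)                # block ended at a zero in the original
    return bytes(out)
```

For example, `11 22 00 33` encodes to `03 11 22 02 33`: the first code byte says "two data bytes, then a zero", and the second says "one data byte, then end of packet".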

In my scheme, I considered the idea of the ideal encoding, where you encode a base-256 message in base 254 (really 252, because of the extra ‘escape’ codes I needed, since I prefer packet protocols that mark both the start and the end). I approximated it by XORing each message byte that needed escaping with an escape bit (bit 2), and collecting the original bit-2 value of every byte that could be mistaken for an escaped byte into a separate byte. Once there were 7 of those, I appended that byte with bit 7 set. It has a worst case of 1 padding byte per 7 message bytes, but handles more than one reserved character. For example, STX=0x02, ETX=0x03, escape bit = 0x04:

Contrived original message: {0x02, 0x03, 0x06, 0x07, 0x08, 0x02, 0x03, 0x02…}. Every byte except 0x08 either needs escaping or could be mistaken for an escaped byte, giving: {0x06, 0x07, 0x06, 0x07, 0x08, 0x06, 0x07, 0x06, 0x8c}, where the 0x8c lets the 0x06 and 0x07 bytes be reconstructed correctly.
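Reading the description above literally, here is a minimal Python sketch of that escape scheme. The trailer stores each ambiguous byte's original bit 2, first byte in the lowest bit. That is the reading that reproduces the 0x8c in the example, but it is our interpretation. The comment doesn't say how a final group of fewer than 7 ambiguous bytes was handled, so flushing a short trailer at the end of the message is an assumption, as are the function names. The trailer always has bit 7 set, so it can never be mistaken for STX or ETX.

```python
STX, ETX = 0x02, 0x03
ESC_BIT = 0x04   # bit 2
GROUP = 7        # ambiguous bytes covered by one trailer byte

def is_ambiguous(b: int) -> bool:
    """STX/ETX themselves, or 0x06/0x07, which look like escaped STX/ETX."""
    return (b & ~ESC_BIT) in (STX, ETX)

def escape_encode(msg):
    out, bits, n = [], 0, 0
    for b in msg:
        if not is_ambiguous(b):
            out.append(b)
            continue
        bits |= ((b >> 2) & 1) << n   # remember this byte's original bit 2
        n += 1
        out.append(b | ESC_BIT)       # bit 2 forced on, so STX/ETX never appear
        if n == GROUP:
            out.append(0x80 | bits)   # trailer byte with bit 7 set
            bits, n = 0, 0
    if n:                             # assumption: flush a short final group
        out.append(0x80 | bits)
    return out

def _restore(out, pending, trailer):
    for i, idx in enumerate(pending):
        if not (trailer >> i) & 1:
            out[idx] &= ~ESC_BIT      # original bit 2 was clear: undo the escape

def escape_decode(enc):
    out, pending = [], []             # pending: positions of ambiguous bytes awaiting a trailer
    it = iter(enc)
    for b in it:
        if is_ambiguous(b):
            pending.append(len(out))
            out.append(b)
            if len(pending) == GROUP:
                _restore(out, pending, next(it))
                pending = []
        else:
            out.append(b)
    if pending:                       # the last byte was a short group's trailer
        _restore(out, pending, out.pop())
    return out
```

Running `escape_encode` on the first eight bytes of the contrived message reproduces the nine-byte output quoted above, and `escape_decode` turns it back into the original.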

So, I do like COBS quite a lot!

Guys…

MODBUS RTU.

Works over UART or RS485, uses binary data, works with PLCs natively. Lots to like there. You fill out a register map. Publish it and your product is done.
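For comparison, a Modbus RTU frame is the slave address, a function code, and data, followed by a CRC-16 sent low byte first. Frames are separated by a silent gap of at least 3.5 character times rather than by a delimiter byte. Its CRC is not the CCITT one: Modbus uses the bit-reflected polynomial 0xA001 (0x8005 reversed) with an initial value of 0xFFFF. This minimal sketch builds a function 0x03 (Read Holding Registers) request. The helper names are ours, and the slave address and register numbers in the example are hypothetical.

```python
import struct

def crc16_modbus(frame: bytes) -> int:
    """CRC-16/MODBUS: reflected poly 0xA001, init 0xFFFF, no final XOR."""
    crc = 0xFFFF
    for byte in frame:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc

def read_holding_registers(slave: int, start: int, count: int) -> bytes:
    """Request: address, function 0x03, start and count (big-endian), CRC (little-endian)."""
    pdu = struct.pack(">BBHH", slave, 0x03, start, count)
    return pdu + struct.pack("<H", crc16_modbus(pdu))

def crc_ok(frame: bytes) -> bool:
    """Check a received RTU frame's trailing CRC."""
    return len(frame) >= 4 and crc16_modbus(frame[:-2]) == struct.unpack("<H", frame[-2:])[0]

print(read_holding_registers(1, 0, 1).hex(" "))
```

Reading one register from slave 1, starting at address 0, gives the frame `01 03 00 00 00 01 84 0a`.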

I did a similar thing when I was passing RS232 data over a noisy homemade slip ring to drive a 250 watt motor. The slip ring had 2 high-current power connections and two low-power data connections for TX/RX. My setup didn’t need to retransmit data when the CRC didn’t match: it was a continuous control setup, so control values were being sent constantly. If one CRC was bad, it would wait for the next correct packet, and if too many were missed in a given period, the whole system was considered compromised and shut down. It worked beautifully.
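Here is a minimal sketch of that "latest good packet wins" pattern. Bad-CRC packets are simply dropped and the last good setpoint stays in force. "Too many missed in a period" is approximated here as a timeout since the last good packet, after which the link latches into a safe stop. The 0.1 s timeout, the packet layout (a 4-byte float setpoint plus a big-endian CRC16-CCITT computed with `binascii.crc_hqx`), and the `ControlLink`/`make_packet` names are all hypothetical. Time is passed in explicitly so the logic can be tested without a real clock.

```python
import binascii
import struct

TIMEOUT_S = 0.1   # hypothetical: trip if no good packet arrives for this long

def make_packet(setpoint: float) -> bytes:
    body = struct.pack(">f", setpoint)
    return body + binascii.crc_hqx(body, 0xFFFF).to_bytes(2, "big")

class ControlLink:
    def __init__(self, now: float):
        self.setpoint = 0.0
        self.last_good = now
        self.tripped = False

    def on_packet(self, packet: bytes, now: float) -> None:
        """Accept a packet only if its CRC matches; otherwise ignore it silently."""
        if self.tripped or len(packet) != 6:
            return
        body, crc = packet[:4], int.from_bytes(packet[4:], "big")
        if binascii.crc_hqx(body, 0xFFFF) != crc:
            return                    # bad packet: keep the last good setpoint
        self.setpoint = struct.unpack(">f", body)[0]
        self.last_good = now

    def tick(self, now: float) -> float:
        """Called every control cycle; returns the command to apply (0 once tripped)."""
        if now - self.last_good > TIMEOUT_S:
            self.tripped = True       # latched: needs a deliberate reset
        return 0.0 if self.tripped else self.setpoint
```

Latching the trip, rather than resuming when packets come back, matches "the whole system would shut down" and avoids a motor restarting unexpectedly on a flaky link.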