Sometimes startups fail due to technical problems, and sometimes they fail to gain traction because potential investors show no interest. The latter appears to be the issue befalling A3 Audio. So the developers have done the next best thing: they made the project open source and are actively looking for more people to pitch in. So what is it? The project is centered around the idea of spatial audio or 3D audio. The system allows ‘audio motion’ to be captured, mixed and replayed, all the while synchronized to the music. At least that’s as much as we can figure out from the documentation!

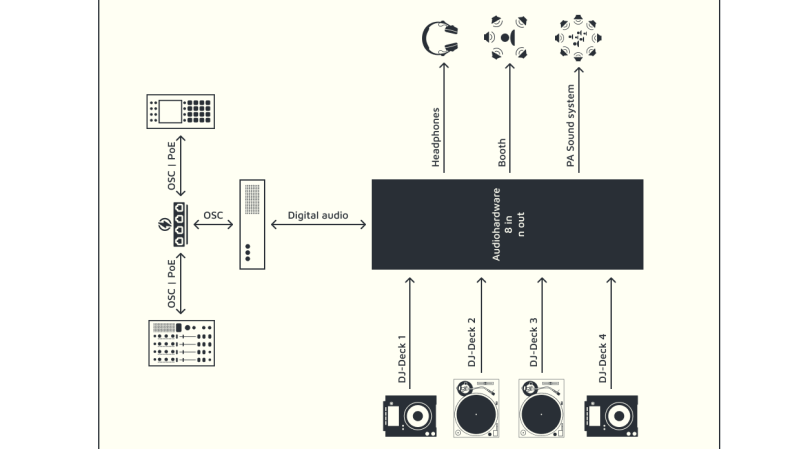

The system is made up of three main pieces of hardware. The first part is the core (or server), which is essentially a Linux PC running an OSC (Open Sound Control) server. The second part is a ‘motion sampler’, which inputs motion into the server. Lastly, there is a Mixer, which communicates using the OSC protocol (over Ethernet) to allow pre-mixing of spatial samples and deployment of samples onto the audio outputs. In addition to its core duties, the ‘core’ also manages effects and speaker handling.
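
For readers who haven't met OSC before, here is a minimal sketch of what one message looks like on the wire, following the OSC 1.0 layout (padded address string, padded type-tag string, big-endian arguments). It is our own illustration and not A3 Audio's code: the address `/track/1/azimuth` and the helper names are assumptions made up for the example.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of 4 bytes (OSC 1.0 rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_osc(packet: bytes, host: str, port: int) -> None:
    """Send one OSC packet over UDP, the usual transport on an Ethernet link."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Hypothetical address: the real A3 Audio address space may look quite different.
packet = encode_osc_message("/track/1/azimuth", 90.0)
```

Any controller that can emit packets like this could, in principle, stand in for the mixer or the motion sampler, which is presumably why the hardware pieces talk OSC rather than something proprietary.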

The motion module is based around a Raspberry Pi 4 and a Teensy microcontroller, with a 7-inch touchscreen display for user input and oodles of NeoPixels for blinky feedback on the button matrix. The mixer module seems simpler, using just a Teensy for interfacing the UI components.

We don’t see many 3D audio projects, but this neat implementation of a beam-forming microphone phased array sure looks interesting.

I honestly still don’t understand what this does. Specifically, how much 3D spatial audio is in it, and why the setup helps or is any different from others.

The website and GitHub are even less informative; maybe a little more work on explaining why their project is valuable or worth it would help them succeed.

You hit that there proverbial nail, square on! I had exactly this issue, but limited my reading time to produce the limited explanation you see above. Perhaps they just need some help on the website and documentation side, with some video demos explaining what this is all about and that will be enough to get some traction on the help they need. Sigh. Typical engineers – great at prototypes and making stuff work, but terrible at explaining why they bothered in the first place. I should know, this is me.

Over the past ten years, I got involved with several start-up ideas, some of them with great potential for success, but every time they fell apart when we (the engineers) asked each other, who would do the marketing work to get the funding and boost the interest in the project. None of us were interested in that part, we just wanted to engineer stuff.

I am now part of a startup where the founders have no technical knowledge but know what they want, plus are also good at marketing and creating documentation.

Indeed, from the name and all the DJ-type equipment mentioned I’d guess, rather wildly, that this is meant to allow you to create the 5.1 surround sound type experience at a big venue and then actively ‘move’ the perceived sound sources virtually. But it really isn’t clear what it is doing, or how the three elements are supposed to work together. It seems to me that the ‘core’ is really just a pretty normal Linux sound stack, with a more advanced configuration than the typical home user’s, that is actually doing all the functional stuff, and the other bits are nothing but the HID for it. But it is so unclear from the overview-level stuff that it really could be anything at all that happens to be audio related and use JACK.

> this is meant to allow you to create the 5.1 surround sound type experience at a big venue and then actively ‘move’ the perceived sound sources virtually.

AFAIK big venues / festivals have basically been using phased speaker arrays for years now – e.g. to manage noise levels behind the stage / outside the venue.

If that is the case I’d assume moving some sound object around is already possible.

https://www.soundonsound.com/techniques/line-arrays-explained

Seems like sound guys don’t use the term “phased array”, only “array”… At least I can’t find a good source for my “knowledge” above.

Hello and many thanks for that introduction and all your comments. I understand that we need to explain it better on our website.

Our goal is that artists without knowledge of 3D sound are able to create intuitive live movements of their mono or stereo audio in a 3D sound system. We therefore designed it as a “4-channel DJ mixer with 3D-panning FX controller”, where:

– The a3 motion controller records, manipulates, and sends motion from a touchscreen to any OSC-controllable 3D audio backend or plugins such as the IEM Plug-in Suite, SPARTA, Flux, L-ISA, SSR, and so on. It also acts like an audio sampler, but for OSC, with 4 sample pads per channel and play/pause/hold. Each of the 4 channels has an encoder to set the tempo or navigate menus, and two knobs that we’re using to adjust stereo width and Ambisonic order. We also built in a tap tempo, a set-to-first-bar button, and a record button.

– The a3-mixer controller was built because we need direct access to the incoming audio per track in order to spatialize track by track and switch between 3D and stereo. It’s just an OSC controller and could be used in environments other than ours.

– a3-core consists of three software pieces: 1. a REAPER project, which provides the DJ mixer functionality and hosts the spatial audio plugins (at the moment we’re using the IEM Plug-in Suite); 2. a Python script, which receives incoming OSC data and sends translated OSC to REAPER; 3. a SuperCollider script, which analyses audio and generates VU meters for a3-mixer (and for any other destination that wants to use that data, such as visual or light artists).
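
To illustrate the "sampler, but for OSC" idea, here is a rough sketch of how a recorded motion could be stored in beats rather than seconds, so that playback stays locked to the tempo. This is only a guess at the principle, not the actual a3-motion code: the `MotionLoop` class, its methods, and the linear interpolation between points are all assumptions made for the example.

```python
from bisect import bisect_right


class MotionLoop:
    """Hypothetical motion sample: (beat, x, y) points that loop every `length_beats`."""

    def __init__(self, length_beats: float):
        self.length_beats = length_beats
        self.points = []  # kept sorted by beat position

    def record(self, beat: float, x: float, y: float) -> None:
        """Store a touch position at a beat position inside the loop."""
        self.points.append((beat % self.length_beats, x, y))
        self.points.sort()

    def position_at(self, seconds: float, bpm: float):
        """Return the interpolated (x, y) for a playback time at the given tempo."""
        if not self.points:
            raise ValueError("nothing recorded yet")
        beat = (seconds * bpm / 60.0) % self.length_beats
        beats = [p[0] for p in self.points]
        i = bisect_right(beats, beat)
        prev = self.points[i - 1]                # i == 0 wraps to the last point
        nxt = self.points[i % len(self.points)]  # past the end wraps to the first
        span = (nxt[0] - prev[0]) % self.length_beats
        if span == 0:
            return prev[1], prev[2]
        t = ((beat - prev[0]) % self.length_beats) / span
        return prev[1] + t * (nxt[1] - prev[1]), prev[2] + t * (nxt[2] - prev[2])


# A one-bar loop that sweeps from x=0 to x=1 and back again.
loop = MotionLoop(length_beats=4.0)
loop.record(0.0, 0.0, 0.0)
loop.record(2.0, 1.0, 0.0)
```

Because positions are keyed to beats, changing the tempo stretches or compresses the movement instead of letting it drift against the music. Each value returned by `position_at` would then be sent out as an OSC message to whichever spatial audio backend is in use.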

Technical:

– The a3-motion GUI is written in C++. The main PCB was made for a Teensy 4.1, which connects to a Raspberry Pi 4B. The button matrix PCB holds the NeoPixels and buttons. A second version is in the works to lower costs and simplify assembly.

– a3-mixer is a Raspberry Pi 3B running a Python script to receive and send OSC data. The main PCB was designed for a Teensy 4.1.

– a3-core can run on most well-configured PC hardware. The oldest machine we tried was an Intel i5 on Debian with a realtime kernel, with good results at 5.9 ms latency.

@HaD

Please add some more tags!

– surround sound

– 3d sound

How is it possible an audio article doesn’t have “sound” in its tags?

Or even better: Alias “3D audio” to “surround sound” and “3d sound”