Most of us associate echolocation with bats. These amazing creatures are able to chirp at frequencies beyond the limit of our hearing, and they use the reflected sound to map the world around them. It’s the perfect technology for navigating pitch-dark cave systems, so it’s understandable why evolution drove down this innovative path.

Humans, on the other hand, have far more limited hearing, and we’re not great chirpers, either. And yet, it turns out we can learn this remarkable skill, too. In fact, research suggests it’s far more achievable than you might think, for sighted and visually impaired people alike!

Bounce That Sound

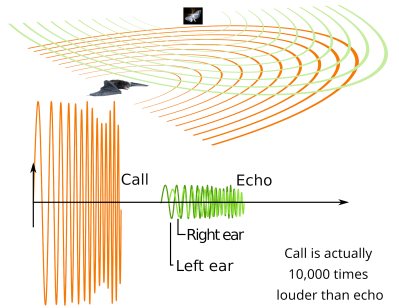

Before we talk about humans using echolocation, let’s examine how the pros do it. Bats are nature’s acoustic engineers, emitting rapid-fire pulses from their larynx at frequencies that can range from 11 kHz to over 200 kHz. Much of that range is far beyond human hearing, which tops out at under 20 kHz. As these sound waves bounce off objects in the environment, the bat’s specialized ultrasonic-capable ears capture the returning echoes. The bat’s brain then processes these echoes in real time, comparing the outgoing and incoming signals to construct a detailed 3D map of its surroundings. The delay between a call and its echo tells the bat how far away an object is, while variations in frequency and amplitude reveal information about size, texture, and even movement. Bats switch between constant-frequency chirps and frequency-modulated tones depending on where they’re flying and what they’re trying to achieve, such as navigating a dark cavern or chasing prey. This biological sonar is so precise that bats can use it to track tiny insects while flying at speed.
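The distance and movement cues described above come down to simple arithmetic. Here is a minimal Python sketch, assuming a speed of sound of 343 m/s (dry air at about 20 °C) and a bat flying straight at a stationary target; the function names are illustrative, and real bat calls are far more complex than a single tone.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C; varies with temperature


def echo_distance_m(delay_s, speed=SPEED_OF_SOUND_M_S):
    """Distance to a reflector from the round-trip delay between call and echo.

    The sound travels out and back, so the one-way distance is half the path.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return speed * delay_s / 2


def echo_delay_s(distance_m, speed=SPEED_OF_SOUND_M_S):
    """Round-trip delay for a reflector distance_m away."""
    return 2 * distance_m / speed


def echo_frequency_hz(call_hz, closing_speed_m_s, speed=SPEED_OF_SOUND_M_S):
    """Echo frequency heard by a bat flying straight at a stationary target.

    Two-way Doppler shift: the target receives a raised frequency because the
    bat approaches it, and the bat hears the reflection raised again because it
    is moving toward the reflector. Negative closing speeds (flying away) lower it.
    """
    if abs(closing_speed_m_s) >= speed:
        raise ValueError("closing speed must be below the speed of sound")
    return call_hz * (speed + closing_speed_m_s) / (speed - closing_speed_m_s)
```

For example, an echo arriving 10 ms after the call puts the reflector about 1.7 m away, and a 40 kHz call from a bat closing at 5 m/s comes back at roughly 41.2 kHz. Halving the round-trip path is the key step: the sound has to travel out to the object and back again.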

Humans can’t naturally produce sounds in the ultrasonic frequency range. Nor could we hear them if we did. That doesn’t mean we can’t echolocate, though. It just means we don’t have quite the same level of equipment as the average bat. Instead, humans can achieve relatively basic echolocation using simple tongue clicks. In fact, a 2021 research paper showed that these skills can be developed with as little as a 10-week training program. Over this period, researchers successfully taught echolocation to both sighted and blind participants using a combination of practical exercises and virtual training. A group of 14 sighted and 12 blind participants took part, with the sighted participants wearing blindfolds.

The aim of the research was to investigate click-based echolocation in humans. When a person makes a sharp click with their tongue, they’re essentially launching a sonic probe into their environment. As these sound waves radiate outward, they reflect off surfaces and return to the ears with subtle changes. A flat wall creates a different echo signature from a rounded pole, while soft materials absorb more sound than hard surfaces. The timing between click and echo precisely encodes distance, while differences between the echoes reaching each ear allow for direction finding.
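For the direction-finding part, one well-known cue is the interaural time difference (ITD): a sound off to one side reaches the nearer ear first. Here is a minimal Python sketch, assuming a distant source, a straight-line path between ears 0.18 m apart, no head shadowing (real heads lengthen the path), and a speed of sound of 343 m/s. The function names and the brute-force cross-correlation are illustrative choices, not the researchers’ method.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C
EAR_SPACING_M = 0.18        # assumed straight-line distance between the ears


def itd_from_azimuth(azimuth_deg, spacing=EAR_SPACING_M, speed=SPEED_OF_SOUND_M_S):
    """Seconds by which the left ear lags the right for a distant source.

    Azimuth is measured from straight ahead, positive to the listener's right.
    """
    return spacing * math.sin(math.radians(azimuth_deg)) / speed


def azimuth_from_itd(itd_s, spacing=EAR_SPACING_M, speed=SPEED_OF_SOUND_M_S):
    """Invert the model: estimate azimuth (degrees) from a measured ITD."""
    ratio = max(-1.0, min(1.0, itd_s * speed / spacing))  # clamp noisy measurements
    return math.degrees(math.asin(ratio))


def estimate_itd_s(left, right, sample_rate, max_lag):
    """ITD from two sampled ear signals via brute-force cross-correlation.

    A positive result means `left` is a delayed copy of `right`.
    """
    def score(lag):
        # Compare left[n] with right[n - lag] over the overlapping samples.
        start = max(0, lag)
        stop = min(len(left), len(right) + lag)
        return sum(left[n] * right[n - lag] for n in range(start, stop))

    best_lag = max(range(-max_lag, max_lag + 1), key=score)
    return best_lag / sample_rate
```

In this model, a click that reaches the left ear 5 samples (at 48 kHz) after the right ear implies a source roughly 11° to the right. Because `asin` only returns angles between -90° and +90°, the model cannot tell a source in front from one behind; in real listeners, cues from the shape of the outer ear help resolve that.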

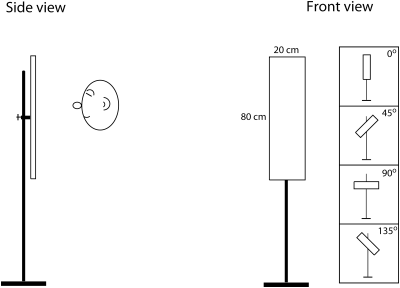

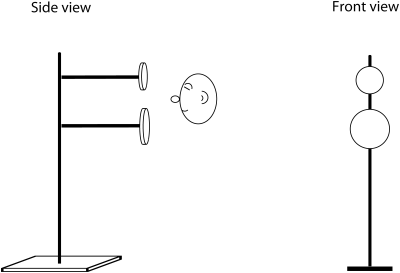

The training regime consisted of a variety of simple tasks. To train size discrimination, participants faced two foam board disks mounted on metal poles and had to determine which disk was larger using only their mouth clicks and their hearing. The program also included an orientation challenge, which used a single rectangular board that could be rotated to different angles. Again, participants had to use clicks and their hearing, this time to determine the orientation of the board. These basic tools allowed participants to develop increasingly refined echo-sensing abilities in a controlled environment.

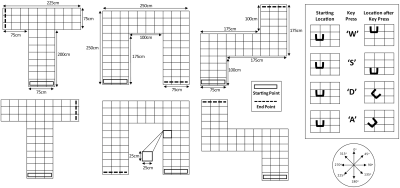

Perhaps the most intriguing part of the training involved a navigation task in a virtually simulated maze. Researchers first made binaural recordings of a manikin moving through a real-world maze, making clicks as it went. They then created virtual mazes that participants could navigate using keyboard controls. As participants moved through the virtual maze without vision, they heard the matching echo signature recorded in the real maze. The idea was to let participants build mental maps of virtual spaces using only acoustic information. This provided a safe, controlled environment for developing advanced navigation skills before applying them in the real world. Participants also tried echolocating in the real world, moving freely with experimenters on hand to guide them if needed.
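The researchers’ software isn’t described here, so the following is only a hypothetical sketch of how a keyboard-driven virtual maze could be tied to pre-recorded echoes: a grid of walls and open cells, a position and heading, and a lookup from each pose to a recorded clip. The maze layout, key names, and clip-naming scheme are all made up for illustration.

```python
# Hypothetical layout: '#' is wall, '.' is open floor. The outer ring is all
# wall, so a forward step from any open cell never leaves the grid.
MAZE = [
    "#####",
    "#...#",
    "#.#.#",
    "#...#",
    "#####",
]
HEADINGS = ["N", "E", "S", "W"]  # clockwise order, so +1 is a right turn
STEP = {"N": (-1, 0), "E": (0, 1), "S": (1, 0), "W": (0, -1)}


def recording_for(row, col, heading):
    """Hypothetical file name of the binaural click clip recorded at this pose."""
    return f"clip_r{row}_c{col}_{heading}.wav"


def handle_key(state, key):
    """Apply one keypress to a (row, col, heading) state and return the new state.

    'left' and 'right' turn in place; 'up' steps forward unless a wall blocks it.
    Any other key leaves the state unchanged.
    """
    row, col, heading = state
    i = HEADINGS.index(heading)
    if key == "left":
        return row, col, HEADINGS[(i - 1) % 4]
    if key == "right":
        return row, col, HEADINGS[(i + 1) % 4]
    if key == "up":
        dr, dc = STEP[heading]
        if MAZE[row + dr][col + dc] != "#":
            return row + dr, col + dc, heading
    return state
```

After each keypress, a player loop built this way would look up `recording_for(*state)` and play that clip, so the listener only ever gets acoustic feedback about where they are.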

The most surprising finding wasn’t that people could learn echolocation; it was how accessible the skill proved to be. Previous assumptions that age and visual status are major factors in learning echolocation turned out to be largely unfounded. While younger participants showed some advantages in the computer-based exercises, the core skill of practical echolocation was accessible to all participants. After 10 weeks of training, participants answered the size discrimination task correctly over 75% of the time, and at greater distances than when they began. Orientation discrimination also improved greatly over the training period, reaching a success rate of over 60% across the cohort. Virtual maze completion times dropped by over 50%.

The study also included a follow-up three months later with the blind members of the cohort. Participants credited the training with improving their spatial awareness, and some noted they had begun using the technique to find doors or exits, or to make their way through unfamiliar places.

What’s particularly fascinating is how this challenges our understanding of basic human sensory capabilities. Echolocation doesn’t involve adding new sensors or augmenting existing ones—it’s just about training the brain to extract more information from signals it already receives. It’s a reminder that human perception is far more plastic than we often assume.

The researchers suggest that echolocation training should be integrated into standard mobility training for visually impaired individuals. Given the relatively short training period needed to develop functional echo-sensing abilities, it’s hard to argue against its inclusion. We might be standing at the threshold of a broader acceptance of human echolocation, not as an exotic capability, but as a practical skill that anyone can learn.

Reminds me of Richard Feynman’s party trick of discerning which books someone has handled by smell. Everyone assumed he used some other method, and that the smelling was a blind to throw them off. He writes that people would frequently make more and more outlandish guesses about how he “really” did it.

Industrialized humans don’t use smell much … but we probably could.

A huge part of our loss of smell is a function of considering it rude to go around closely sniffing things and people. Often it’s socially unacceptable to even acknowledge that things have distinctive odors. That, and the elevation of our nose above the ground.

You can easily smell if somebody has masturbated or had sex recently, what kind of food people ate ten hours ago, if somebody is menstruating or menopausal, arguably one can smell when somebody is pregnant, and you can often smell when people are sick or even just anxious versus normal sweat from exercise. And it’s absolutely impossible to discuss any of it. But your dog doesn’t mind noticing.

Thank goodness machines can’t do that (yet)!

Wait…we aren’t supposed to discuss it?

I talk about it all the time.

I have very sensitive senses. We are a “no scent” household, because 2 of us are sensitive enough that we can tell whether or not you used hand lotion yesterday morning.

We can’t keep windows open, because someone a block away will inevitably be pumping some noxious fabric softener scent out of their dryer vent.

If I’m around someone regularly, I know when they eat something abnormal or are going to get sick, because they smell different. And I’m not sniffing them from 1 inch away. People smell. It’s just a thing. Most people don’t smell “bad”. They just smell like them. It’s like recognizing someone’s face or voice.

About the article topic?

Yes. Of course it’s possible. It’s so obvious I don’t know why they needed a “feasibility” study.

I live in an area where there is a school for the blind.

As a teen, my friends and I wondered how hard it would be to navigate without sight.

I already knew that I could tell where I was in familiar places by sound alone, but what about large outdoor areas?

This was in the 90s, and Snapple was very popular.

Being obnoxious by pressing the freshness-seal cap until it bent and was then able to make LOUD clicking sounds was also popular…

But being able to consistently make sharp clicks that carried 100 feet or more before returning was also SUPER useful for us learning to echolocate.

We had 4 of us in our middle school group, and we modelled our learning the same way we saw the blind people being taught.

3 of us at a time would walk home blindfolded, while the 4th was our “minder” that made sure we didn’t walk into traffic or fall into a ditch.

We got quite good at it.

We did get chewed out once by a guy who was teaching some people going blind how to get around. (If you are losing your sight, it is easier to learn how to use a cane and how to do tasks BEFORE you actually get so bad that you need them.)

He thought we were making fun of them.

We explained what we were doing, and why. (Ballsy for middle schoolers being chewed out in the street to explain themselves instead of being rude…)

A month later we ran into the same guy, and the students each had little hand-clickers.

Apparently someone makes (made?) devices to do exactly what we were using the Snapple caps for. Though, I still think our free Snapple cap worked better than the $40 clicker…

Human echolocation is EASILY good enough for basic navigation.

Our brains do tons of things below the surface that we either learn to ignore, or just don’t notice.

I joke around with my young nieces about teaching them how to use “superpowers”.

It doesn’t have to be some mystical garbage. Nor does it have to stray into pseudoscience.

Both of them can tell which side of their face I’m holding my hand on, from a foot or more away, with their eyes closed.

Subtle differences in radiated warmth, differences in sound, or other little things make it possible. They just had to learn to pay attention.

I’m now teaching them how to know where everyone in the house is, by the vibrations they can feel through the floor when people walk around. (Wood floors work. Even between different levels. The concrete basement doesn’t…yet.)

This was a long ramble…

…senses. We got ’em. We ignore them.

Well, some people do. I don’t.

Anecdotally, a school I went to had a fire alarm in one area so harsh that I could literally use it to navigate out blind. Good thing, too, since it was so physically painful to me that I could only stumble down the hall pressing my hands to my ears.

Funny how people put all kind of assumptions on this kind of thing.

These reasons you give for why it would be bad are incorrect and show a very strange bias that human ancestors would not have cared about. As social animals, humans still relate to each other through smell (including whether you have recently had sex); the real reason it has been degraded is the value of language and speaking over those other forms of communication. This is easy to demonstrate, too, as language centers are largely what take up the brain capacity we used to use for smell. This is also the same reason more complex cognitive functions developed: it turns out being social is hard but valuable.

Done this since I was a toddler, and I’m not blind! Very useful in the dark…

Done what, smell books to see who handled them or navigate by echolocation? I can see both being useful in the dark, depending on the circumstance.

I’m confused. You guys mean if people are stuck in an elevator or an underground garage during power outage or something? 🤔

Power outage, or when you don’t want to turn on the light, or other strange reasons!

Is that the only situation you can think of involving the dark? Have you never been outside at night?

Both… But a lot of people think I’m weird!

Especially when I recognize people or genders only by smell… 😅

A huge part of the trick was that at the time most people smoked heavily, and everything they touched would smell of cigarettes.

Feynman himself wasn’t known to be much of a smoker.

Perfumes, colognes, hand creams, deodorants, food scents, and work chemicals/products: combine any of them with a cross-reference of who people might co-mingle with and you can get a surprising amount of information about who’s been where and what they’ve handled.

Spent many years wearing “Machinists Cologne” (sulfur-based cutting oil) myself.

Could have walked the shop floor blindfolded, just by sound. You learn the noises of every machine you operate, so as to pick up on anything going awry.

Even cutting tool point wear causes very distinct sound changes in the chips themselves as they curl off the tooling and hit the floor.

As a kid, riding in a car, I noticed the change in reflected sounds by the curb cuts and walls of buildings or parked vehicles grassy center islands, etc.

Knew the whole house by ear as a kid. Footsteps echo differently off open or closed doors, and a laundry basket changes its sound according to how full it is.

A load of cotton towels sounds different (kind of an empty spot) from a load of synthetic dress clothes. Stacks of books (shiny sound) vs. a stack of papers (duller sound). A paper half slipped off the stack has a greater return/reflection of noise.

Got labeled as OCD & ADD for it all. Turned out to be pretty useful traits in the repair field though.

Pretty rough for getting to sleep though, when you can’t turn it off at will.

Age is taking away a lot of… all of it, including smell.

Seems like every new medicine the doctors hand you takes a toll on the senses, too.

In the spring and fall, I’ll sometimes skip all the pills for 2 to 3 weeks just to clear the ears and enjoy the stereo and the critter sounds for a while.

Heck, someone may find me passed away one day, with an album on the turntable and a slight smile on my face. ;)

COVID pretty seriously damaged my sense of taste (lately some of it is coming back, though not all), but at some point I lost a lot of my sense of smell too. Oddly, certain smells are much, much stronger for me as a result, while I miss others entirely.

“As a kid, riding in a car, I noticed the change in reflected sounds by the curb cuts and walls of buildings or parked vehicles grassy center islands, etc.”

I seem to recall at that age, the sound of the telephone poles going by.

Maybe it was the sound of our DeSoto reflecting off of them, or maybe the wake of the wind they interrupted.

Sounds like you have a bit of that thing where you see sounds (synesthesia). I think some famous composers had it. Your descriptive words are often very visual in ways that don’t make any sense to me as a normie. E.g., what is a shiny sound? Lol. That’s pretty cool though.

Machinists from yesteryear have some finely attuned senses: hearing the tool cut and knowing if it needs sharpening or if the cut is too deep, smelling the metal and the fluid to know if you are cutting too fast, and feeling the vibration and resistance in the machine so you know just how precise that pass was likely to be.

Lots of little things that people take for granted and can’t really be taught. It just becomes second nature.

I remember reading an article in the ’80s about a blind man who used echolocation. He said he hardly used his cane. A series of tests were performed, and he showed that he could navigate easily past obstacles. Even in a busy city trial he had no real problems. He even rode a bicycle, though he said he did have a problem with a single-wire fence he ran into. It was determined that he was primarily “seeing” an acoustic picture of his surroundings by ear, using ambient sounds and their reflections from the environment. However, he did use bat-like high-frequency “chirps” as well. Maybe someday there will be a wearable sensor net like Miranda’s in the STOS episode “Is There in Truth No Beauty?” or Geordi La Forge’s (STNG) visor, providing ultra-high-resolution information in a small wearable device.

Yes, nothing new about it. https://en.wikipedia.org/wiki/Human_echolocation

Yes, I watched a Ben Underwood documentary a long time ago; it was awesome seeing him play basketball. It had a buzzkill doctor or therapist pointing out that he could fall into a hole…

Reading the wiki, I found out he died of cancer. Oh man, not fair.

I thought I’d read several articles a dozen years ago about people using handheld mechanical clicker boxes and teaching themselves to echolocate and getting really quite good at it, easily able to negotiate public spaces.

It probably has the side effect of attracting dogs that have been trained using a clicker.

Hey, free seeing eye dog.

An acquaintance of mine is almost fully blind (<3% vision; basically he can see the difference between sunshine, overcast, and being indoors). He walks with a cane with a “ball” on the end. In areas he knows well, he says he navigates more by the sound the cane makes and the sound of his surroundings than by touch. He lives on a side street off a busy street, and can hear when he walks past a lamppost from the change in the traffic sound, so he counts the lampposts until the pedestrian crossing.

Also, there is tactile paving, with tiles not only with different textures, but also materials which make a different sound when tapping.

Not echolocation but it is navigation by sound.

If you walk blindfolded, slowly, through a large room like a gym or a big conference room, you can hear the sound of the HVAC and other ambient noises change a few centimeters away from the wall. I “discovered” this as a kid, which is why I walked into the gym wall a lot until I got the hang of it. I’m not certain, but I suspect the effect is related to the principle behind the Crown PZM microphone of years gone by.
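One plausible mechanism for the effect this commenter describes, and the reason PZM microphones mount their capsule almost flush against a boundary, is comb filtering: near a hard wall, sound reflected off the wall arrives slightly after the direct sound and cancels it at certain frequencies. Here is a minimal Python sketch, assuming a plane wave striking a rigid wall head-on and a speed of sound of 343 m/s; real ambient noise arrives from many angles, which smears the notches, and the function name is illustrative.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 °C


def comb_notches_hz(distance_m, max_hz=20_000.0, speed=SPEED_OF_SOUND_M_S):
    """Frequencies up to max_hz where a wall reflection cancels the direct sound.

    Assumes a plane wave striking a rigid wall head-on, with the listener
    distance_m in front of it: the reflection travels an extra 2 * distance_m,
    so it cancels the direct sound when that extra path is an odd number of
    half wavelengths, i.e. at f = (2k + 1) * speed / (4 * distance_m).
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) * speed / (4 * distance_m)
        if f > max_hz:
            return notches
        notches.append(f)
        k += 1
```

At 5 cm from the wall the first notch lands around 1.7 kHz, well inside the audible band, and it moves as you get closer or farther away. At 1 mm every notch is above 20 kHz, which is roughly the trick a boundary microphone relies on.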

I have normal sight and I have tried to learn a little bit of echolocation. I happened to read about it some 15 years ago, and I then also remembered that as a child I was able to “hear walls” when I was near them. That childhood memory was likely only due to ambient noise reflecting from the walls. But the memory was enough to convince me to occasionally try real echolocation using sharp tongue flicks. The sharpness of the sound is key to success. Obviously a true impulse would be best, but with the tongue you can only approximate one.

Background noise was really detrimental to the process; traffic noise, for example, made it really difficult.

I have never bothered to really hone the skill, just tried it for fun. I usually did not cover my eyes, but that was mostly because I wanted to correlate the sound to the visual reference. Big, tall objects like buildings were rather obvious. A tree was discernible, if I remember correctly. Small corridors were difficult, both because of my limited training and because I did not want to bother people…

But yes, it is a fun skill to try to learn, even by oneself.

Wonder if miners use it?

I’ve never turned on lights when wandering around after dark and have used echolocation for a long time. I snap my fingers instead of clicking my tongue, probably because it’s louder and you can get more separation, which gives better triangulation.

“I’ve never turned on lights when wandering around after dark”

A house without children, no LEGO or other toys?

B^)

Huh, now I want to make a little rig to try this. Something that can move around an object silently and, if I want to get real fancy, some buttons to keep track of what I think is going on with the object.

I knew it. Daredevil is real!

On a more serious note, as others have also commented, I have also used hearing to “sense” the presence of objects in complete darkness, provided that there is a sound source. The reflections of sound become very noticeable near to objects and help to avoid them.

I would think that it should be possible to design a headset that transmits chirps at a frequency (or frequencies) above that of human hearing, with the headphones translating the return pulses down to audible signals. As best I know, the heterodyne translation preserves phase as long as the mixers in both receivers are phase locked. The headset (or ear plugs) could be designed to also pass normal audio in addition to the translated pulses.
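The idea above is essentially a heterodyne receiver, the same principle used in heterodyne bat detectors. As a rough illustration of why a shared, phase-locked local oscillator preserves phase, here is a minimal Python sketch of one ear’s channel: a simulated 40 kHz echo is multiplied by a 38 kHz oscillator, which produces tones at the difference (2 kHz) and sum (78 kHz) frequencies, and a crude moving-average filter keeps mostly the audible difference tone. The sample rate, frequencies, filter, and function names are arbitrary assumptions, not a headset design.

```python
import cmath
import math

FS = 192_000       # sample rate in Hz (assumed; must exceed twice the highest tone)
CHIRP_HZ = 40_000  # hypothetical ultrasonic chirp frequency
LO_HZ = 38_000     # hypothetical local-oscillator frequency
TAPS = 8           # length of the moving-average low-pass filter
N = 1_920          # 10 ms analysis window: a whole number of cycles of every tone used


def tone(freq_hz, phase, count):
    """Sampled cosine at freq_hz with a starting phase in radians."""
    return [math.cos(2 * math.pi * freq_hz * n / FS + phase) for n in range(count)]


def moving_average(samples, taps):
    """Causal FIR low-pass: each output is the mean of the latest `taps` inputs."""
    return [sum(samples[max(0, n - taps + 1):n + 1]) / taps for n in range(len(samples))]


def downconvert(echo_phase):
    """Mix a simulated echo with the shared LO and keep the audible difference tone."""
    total = N + TAPS  # extra samples so the filter has settled before analysis
    echo = tone(CHIRP_HZ, echo_phase, total)
    lo = tone(LO_HZ, 0.0, total)
    mixed = [e * l for e, l in zip(echo, lo)]  # holds CHIRP-LO and CHIRP+LO terms
    return moving_average(mixed, TAPS)[TAPS:]  # drop the start-up transient


def dft_bin(samples, freq_hz):
    """Complex amplitude of `samples` at freq_hz (a single DFT bin)."""
    return sum(s * cmath.exp(-2j * math.pi * freq_hz * n / FS)
               for n, s in enumerate(samples))


left = dft_bin(downconvert(0.0), CHIRP_HZ - LO_HZ)
right = dft_bin(downconvert(0.5), CHIRP_HZ - LO_HZ)
print(cmath.phase(right / left))  # phase offset between the two channels
```

Running two echoes with a 0.5 rad phase offset (as if they reached the two ears at slightly different times) through the same oscillator prints a value very close to 0.5, so the offset survives the translation. Note that it is the phase that is preserved, not the time delay: the same phase at a 20x lower frequency corresponds to a 20x longer delay, so timing cues would still come out scaled.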

I’d be surprised if somebody isn’t already experimenting with this since by the time I think of something I think is novel it’s always already been implemented … or been proven fallacious.

The shape of your ear has a lot to do with how your brain interprets direction. Somebody once said to me that stereo hearing was a must to determine direction. No – if you cover one ear, you can still determine with reasonable accuracy the direction a sound is coming from.

Microphones on headphones don’t have the “ear” shape, and if they did, they would be a different distance apart from your genuine ears – throwing out any information your brain gets from your ears being a certain distance apart.

Hearing is pretty clever – your brain can determine lots about a sound: the nuances of it being modified by reflections & refractions to do with the shape of your ears; distance between your ears; shape of your head; your hairstyle…

MARCO

POLO

That’s more of an interrogator/transponder system.

Polo!

I was a ZZ Top concert a couple months with a good buddy. The crowd was getting pretty packed close to the ticket check-in gate. A woman had misplaced her husband and yelled out “Marco!”, and in the distance I heard “Polo!” and she said, “There he is.” My buddy and I were separated, so I said in the middle of the crowd, “Have y’all heard of Redneck Marco Polo?” Everyone just looked at me. I yelled out, “Hey… big fat dumass!” In the distance I heard, “Screw you… get me a beer!” The crowd lost it, and I said, “And that is Redneck Marco Polo.” The ZZ Top concert was awesome, and Billy and Dusty stopped in for a while and hung out at our bar down the road.

“I was a ZZ Top concert a couple months with a good buddy. ”

Man! That must’ve been a heckuva concert!

Or did you forget the word “ago”?

B^)

Oh wait…

You were a ZZ Top concert?

I was AT the ZZ Top Concert at Black Oak Amphitheatre in Lampe, Mo. on October 6th, 2024. It was an awesome concert with Ozark Mountain Daredevils opening for them. I only live 10 miles from the outdoor arena. I am also 5 miles from Thunder Ridge Nature Arena owned by Bass Pro owner Johnny Morris. Both are south and west of Branson, Mo.

Maybe this is why many diverse mammals seem to have echolocation: it’s not a unique development but just an evolution of something that all mammals have.

I’ve seen mention of folks being good at this, including one guy who was shown via MRI to have actually repurposed visual centers in the brain, so he was demonstrably “seeing” an image.

What I haven’t seen is any good set of exercises we could use to learn the skill. Swapping targets could be done with servos, but I’d want to get to that imaging level if possible.

One more thought: before taking a flash-illuminated photo, try clapping your hands together (one palm up, one palm down) and see if your perception of how sharp the echo is matches the reflectivity of the surfaces to the flash/light. Sadly, I forget to do this most of the time.

Ok, but how do bats navigate in a cave filled with other bats all chirping themselves? It seems like a channel congestion issue should arise. I wonder if we could learn something about optimizing Wi-Fi congestion by figuring out how bats do it.

They probably have a time schedule like planes on the runway and stagger their exit / entrance flights.

First search result:

So… frequency hopping together with CSMA/CA.
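To make “frequency hopping together with CSMA/CA” a bit more concrete, here is a toy Python simulation. It is not a model of real bat behavior: in each time slot every bat hops to a random channel, bats sharing a channel each draw a random backoff, the earliest one transmits while the others sense the channel busy and stay quiet, and a tie for earliest counts as a collision. The number of channels, the contention window size, and the slot structure are all hypothetical.

```python
import random
from collections import defaultdict


def simulate(num_bats, num_channels, slots, contention_window, seed=0):
    """Toy slotted model: returns (successful_pulses, collisions).

    Each slot, every bat hops to a random channel. On each occupied channel the
    contenders draw random backoff counts; the unique earliest one transmits
    and the rest sense the channel busy and defer. A tie for the earliest
    backoff is counted as one collision on that channel.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    successes = collisions = 0
    for _ in range(slots):
        # Frequency hopping: group bats by the channel they picked this slot.
        by_channel = defaultdict(list)
        for bat in range(num_bats):
            by_channel[rng.randrange(num_channels)].append(bat)
        for contenders in by_channel.values():
            # CSMA/CA: random backoff; the earliest unique backoff wins the channel.
            backoffs = [rng.randrange(contention_window) for _ in contenders]
            earliest = min(backoffs)
            if backoffs.count(earliest) == 1:
                successes += 1
            else:
                collisions += 1
    return successes, collisions


def collision_rate(num_bats, num_channels, slots=1000, contention_window=16):
    """Fraction of occupied channel-slots that ended in a collision."""
    successes, collisions = simulate(num_bats, num_channels, slots, contention_window)
    return collisions / (successes + collisions)
```

In this toy model, spreading 20 bats over 8 channels instead of 1 reduces the fraction of occupied channel-slots that end in a collision, which is the intuition behind combining the two techniques: hopping thins out the crowd on each channel, and backoff sorts out whoever is left.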