[Nicholas Carlini] found some extra time on his hands over the holiday, so he decided to do something with “entirely no purpose.” The result: 84,688 regular expressions that can play chess using a 2-ply minimax strategy. No kidding. We think we can do some heavy-duty regular expressions, but this is a whole other level.
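
For readers who have not met the term, a 2-ply minimax search looks at every move available to the engine, then at every reply available to the opponent, and picks the move whose worst-case reply is least bad. The Python sketch below is a generic illustration on a made-up game tree, not [Nicholas]’s implementation; `TREE`, `node`, `legal_moves`, `apply_move`, `evaluate`, and `minimax_2ply` are hypothetical names invented here.

```python
# Toy game tree (made up for illustration): our move, then the opponent's
# reply, leading to a score from our point of view.
TREE = {
    "a": {"x": 3, "y": -2},
    "b": {"x": 1, "y": 0},
}

def node(path):
    """Walk the tree along a tuple of moves and return what is there."""
    current = TREE
    for step in path:
        current = current[step]
    return current

def legal_moves(path):
    """Moves available at this position; leaves have none."""
    here = node(path)
    return list(here) if isinstance(here, dict) else []

def apply_move(path, move):
    """Return the position reached by playing `move`."""
    return path + (move,)

def evaluate(path):
    """Static score of a leaf position."""
    return node(path)

def minimax_2ply(position):
    """Pick the move whose worst opponent reply scores highest for us."""
    best_move, best_score = None, float("-inf")
    for move in legal_moves(position):
        after_mine = apply_move(position, move)
        replies = legal_moves(after_mine)
        if replies:
            # The opponent is assumed to choose the reply that hurts us most.
            score = min(evaluate(apply_move(after_mine, r)) for r in replies)
        else:
            score = evaluate(after_mine)
        if score > best_score:
            best_move, best_score = move, score
    return best_move, best_score

print(minimax_2ply(()))  # ('b', 0)
```

Move `a` looks tempting because it can reach a score of 3, but the opponent would answer with `y` for -2, so the search settles on `b`.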

As you might expect, the code to play is extremely simple, as it just runs the board through a series of regular expressions that implement the game logic. Of course, that doesn’t count the thousands of strings containing the regular expressions.

How does this work? Luckily, [Nicholas] explains it in some detail. The trick isn’t making a chess engine. Instead, he creates a “branch-free, conditional-execution, single-instruction multiple-data CPU.” Once you have a CPU, of course it is easy to play chess. Well, relatively easy, anyway.

The computer’s stack and registers are all in a long string, perfect for evaluation by a regular expression. From there, the rest is pretty easy. Sure, you can’t have loops and conditionals can’t branch. You can, however, fork a thread into two parts. Pretty amazing.
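
To get a feel for the idea, here is a minimal Python sketch of a machine whose entire state is one string and whose instructions are regex substitutions. The state layout (`#stack:` followed by one value per line, then a single variable `#x`) and the three instructions are invented for this illustration and are not taken from [Nicholas]’s code. Note how the last instruction is "conditional" without any branch: if its pattern does not match, the substitution simply does nothing.

```python
import re

# Hypothetical toy format: the whole machine state lives in one string.
state = "#stack:\n7\n3\n#x: 0\n"

# Each "instruction" is a (pattern, replacement) pair applied with re.sub.
PUSH_5 = (r"#stack:\n", "#stack:\n5\n")
POP_INTO_X = (
    r"#stack:\n(\d+)\n((?:\d+\n)*)#x: \d+\n",  # top value, rest of stack, old x
    r"#stack:\n\2#x: \1\n",                    # keep the rest, store top in x
)
IF_X_IS_5_SET_99 = (r"#x: 5\n", "#x: 99\n")    # no match means no change

PROGRAM = [PUSH_5, POP_INTO_X, IF_X_IS_5_SET_99]

def run(state, program):
    """Apply every instruction once, in order. No loops, no jumps."""
    for pattern, replacement in program:
        state = re.sub(pattern, replacement, state)
    return state

print(repr(run(state, PROGRAM)))  # '#stack:\n7\n3\n#x: 99\n'
```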

Programming the machine must be pretty hard, right? Well, no. There’s also a sort-of language that looks a lot like Python that can compile code for the CPU. For example:

    def fib():
        a = 1
        b = 2
        for _ in range(10):
            next = a + b
            a = b
            b = next
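
As a sanity check on what that snippet computes, here is the same loop as ordinary Python, with a return value added so the result can be inspected. The name `fib_plain`, the `steps` parameter, and the return statement are additions for illustration, and the temporary `next` is folded into a tuple assignment to avoid shadowing the Python built-in.

```python
def fib_plain(steps=10):
    """Run the same recurrence as the example and return the final (a, b)."""
    a, b = 1, 2
    for _ in range(steps):
        a, b = b, a + b
    return a, b

print(fib_plain())  # (144, 233)
```

Since the machine itself has no loops, a compiler for it would presumably have to unroll a fixed-count loop like this one into straight-line instructions; that is an assumption here, not something stated above.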

Then you “only” have to write the chess engine. It isn’t fast, but that really isn’t the point.

Of course, chess doesn’t have to be that hard. The “assembler” reminds us a bit of our universal cross assembler.

This is so creative, wow. Making a CPU using regex is not something I thought I would ever see.

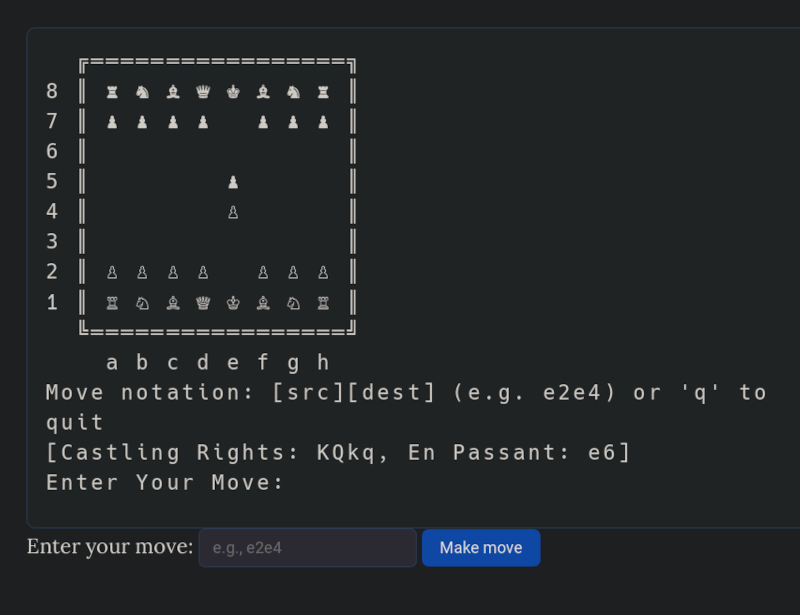

I was comparing execution times for a series of moves that I feed in as an array, both to the JavaScript front end and to the original version of the game. The Python version running under Termux may have been slightly faster than the version running in Chrome on Android. I couldn’t manage to put in a castling move, though. What’s the notation to castle, say, kingside when you have castling rights at that time?

Can AI do it today?

“AI” is just a fancy search engine.

“It” doesn’t ever do anything new.

So yes, if you “train” it on a bunch of chess engines, it can spit out something that probably looks like a chess engine.

It might even work.

But it will still just be stealing the work of others.

This is a common but untrue take.

LLMs clearly can do novel things. Specific behaviour we’ve seen includes applying generalizations they have never seen, or generalizing in ways we haven’t explicitly shown them.

We robustly train OUT those novel ideas because they make for bad toys and tools.

The classic example is that you can analyse the vector space language models use, and use this to find a transformation that moves an input in a conceptual direction.

You can then have it apply this transformation to any object.

You mostly get gibberish (take the transformation that, in general, takes the word for male things to the word for female things and then apply it to octagons: what is the outcome?), but gibberish is precisely what we mostly come up with too. We can spend hours, days or years filtering that gibberish to come up with good ideas.
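
A toy version of that experiment can be sketched in a few lines of Python. The two-dimensional vectors below are hand-made for illustration and do not come from any real model; the helper names are hypothetical too. The "male to female" direction is estimated from a single word pair, then applied to other words.

```python
# Hand-made 2-D "embeddings", invented purely for illustration.
VECTORS = {
    "man": (1.0, 0.0),
    "woman": (1.0, 1.0),
    "king": (3.0, 0.0),
    "queen": (3.0, 1.0),
    "octagon": (0.0, 5.0),
}

def add(u, v):
    return tuple(a + b for a, b in zip(u, v))

def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

# The "male to female" direction, estimated from one word pair.
DIRECTION = sub(VECTORS["woman"], VECTORS["man"])

def shift(word):
    """Move a word along DIRECTION and return the nearest other word."""
    target = add(VECTORS[word], DIRECTION)
    candidates = (w for w in VECTORS if w != word)
    return min(candidates, key=lambda w: sq_dist(VECTORS[w], target))

print(shift("king"))     # queen
print(shift("octagon"))  # woman
```

The first result is the sensible one; the second is the kind of output that needs filtering.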

Frankly, should an LLM produce a valuable novel idea, we wouldn’t give it credit. It’d just be a silly line in some kid’s essay.

That’s absolutely false.

AI is not a synonym for LLM.

A few years ago (2018 or ’19 iirc) Google had a spin-off project called AlphaZero (DeepMind team, led by 2024 chemistry Nobel laureate Demis Hassabis), which learned to play chess knowing only the rules and playing against itself for a few hours. No engines, no opening book, no endgame tables.

Anyway, after just a few hours of analysing the rules (playing against itself), it had a match against Stockfish (the most powerful chess engine). It won by a very high margin. Stockfish would need a 10-to-1 time advantage to match its performance.

So, no. AI doesn’t have to train on engines, and can beat the best engines easily on similar hardware. And yes, it very much does completely new things. There are a few famous “AlphaZero moves” that the best engines were completely oblivious about. After AlphaZero, we had more engines with AI tech (none as good as AlphaZero, and none better than Stockfish, although Leela is pretty good), and these days it is pretty common for all chess engines to use neural networks in order to perform better.

Just wanted to add an update for this comment, because chess engines and AlphaZero are all amazing.

Modern chess engines incorporated similar neural-network-based evaluation functions after AlphaZero was so dominant (and came up with remarkable, innovative play that engines at the time didn’t know how to compete with). Chances are, OP is here to just troll, because they seem to not understand the majority of what they’re talking about, particularly chess engines.

Oops, I should have read your comment more carefully, you already mentioned this!

I’m sorry for your cringe take. AI is anything but a fancy search engine, and it definitionally does “new” things in the way it produces output. It’s weird seeing such an uninformed understanding expressed on this website, but hey, the internet is the internet.

Exactly!

Wow, as someone who finds even regex patterns I’ve written can become gibberish after about a week, I’m both in awe of and slightly terrified by the kind of mind that could conceive this.

Human mind as a giant Regex.

makes me think of an article i once saw (possibly in scientific american) describing a machine built out of tinker toys that could play tic-tac-toe. it was essentially a mechanical rom lookup table.

A Tinkertoy Computer that Plays Tic-Tac-Toe

Published in Scientific American Vol. 261, No. 4, OCTOBER 1989

Available here : http://constructingmodernknowledge.com/wp-content/uploads/2013/03/TinkerToy-Computer-Dewdney-article.pdf

I like the academic adherence to computer science principles that Nicholas takes in writing this program. I am curious how the wall time for execution of the program using the JavaScript front end compares to running the Python-only version on an Android phone. I can post that here, unless someone else wants to see for themselves and post their results. More importantly, though, I’m not sure whether anyone can think of a way to get a more performant implementation of regex chess, given that the regex CPU seemingly (from my attempt at understanding) needs to do a regex match against the 6 MB JSON file of regexes.
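
One low-effort thing to try, sketched in Python: compile every pattern once at load time and reuse the compiled objects for each move, since Python’s `re` module keeps only a bounded cache of compiled patterns and tens of thousands of distinct regexes would not fit in it. The JSON layout assumed here (a list of `[pattern, replacement]` pairs) and the names `load_program` and `step` are guesses for illustration, not the project’s actual file format or API. Whether this helps in practice would need measuring.

```python
import json
import re

def load_program(json_text):
    """Parse the JSON once and precompile every pattern.

    Assumes a hypothetical layout: a list of [pattern, replacement] pairs.
    """
    pairs = json.loads(json_text)
    return [(re.compile(pattern), replacement) for pattern, replacement in pairs]

def step(state, program):
    """Run the state string through every precompiled substitution in order."""
    for regex, replacement in program:
        state = regex.sub(replacement, state)
    return state

# Tiny stand-in for the real multi-megabyte file.
demo_json = json.dumps([["a", "b"], ["b+", "c"]])
program = load_program(demo_json)   # pay the compile cost once
print(step("aab", program))         # c
```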

FYI, the `fib` Python function example has an indentation error: three spaces in front of the `for` statement when there should be four. A simple typo, I’m sure. 😊 But cool article!

I appreciate and yet despise regex. Things like this never cease to amaze me, though, that someone would take something like regex and make a regex CPU that can be programmed to play chess. Crazy crazy stuff. It takes all kinds to make society function, and these stories are always a reminder of that.