awk is a kind of Swiss Army knife for text files. However, some of its limitations are often a bit annoying. I’ve used a simple set of functions to make awk a bit better, although I will warn you: it does require GNU extensions to awk. That is, you must use gawk and not other versions. Your system probably maps /usr/bin/awk to something and that something might be gawk. But it could also be mawk or some other flavor. If you use a Debian-based distro, update-alternatives is your friend here. But for the purposes of this post, I’m going to assume you are using gawk.

By the end of the post, you’ll see how to use my awk add-on functions to split up a line into fields even when there is no single character to separate all fields. In addition, you’ll be able to refer to the fields using names you decide. You won’t have to remember that $2 is the time field. You’ll say Fields_fields["time"] instead.

The Problem

awk does a lot of common work for you when you use it to process text files. It reads files a record at a time. Normally, a record is a single line. Then it splits the line on fields using whitespace, or some other choice of field separators. You can write code that manipulates the line or individual fields. This default behavior is great, especially since you can change the end of record character and the field separator. A surprising number of files fit this sort of format.

Until, of course, they don’t. If you have data coming from a data logging instrument or some database, it could be formatted in a variety of ways. Some fields might have structured data with a variety of separators. This isn’t a deal-breaker. Since you can get at the whole line, you can do almost anything you want, but the logic is harder and the whole point to using awk is to make things easier.

For example, suppose you had a file from a data recorder that had an eight-digit serial number, followed by a six-character tag, and then two floating point numbers separated by colons. The pattern might look like

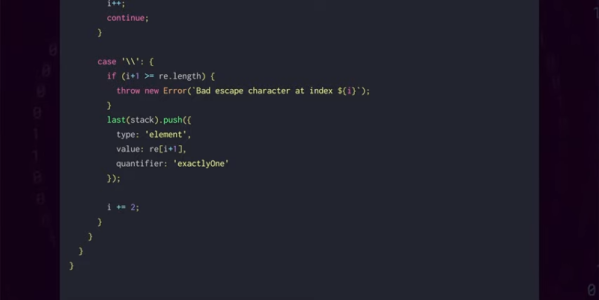

^([0-9]{8})([a-zA-Z0-9]{6})([-+.0-9]+),([-+.0-9]+)$

This would be hard to handle with the conventional field splitting and you’d normally just write code to split everything apart.

Continue reading “Linux-Fu: Making AWK A Bit Easier” →