Every few years, a development in computing results in a sea change and a need for specialized workers to take advantage of the new technology. Whether that’s COBOL in the 60s and 70s, HTML in the 90s, or SQL in the past decade or so, there’s always something new to learn in the computing world. The introduction of graphics processing units (GPUs) for general-purpose computing is perhaps the most important recent development in computing. If you want to develop some new Python skills to take advantage of the modern technology, take a look at this introduction to CUDA, which allows developers to use Nvidia GPUs for general-purpose computing.

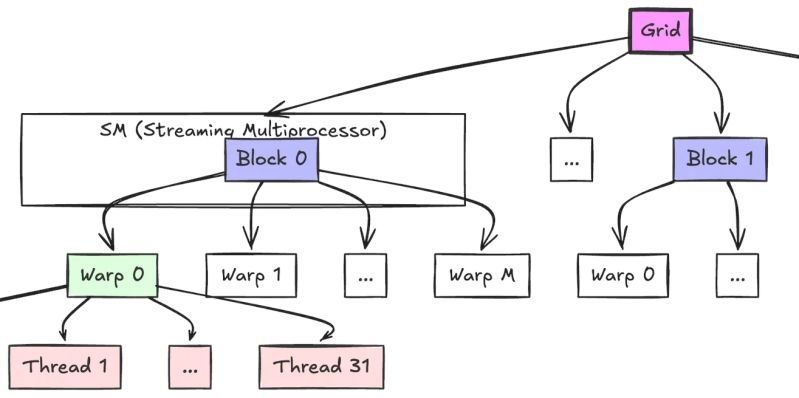

Of course, CUDA is a proprietary platform and requires one of Nvidia’s supported graphics cards to run, but assuming that barrier to entry is met, it’s not too much more effort to use it for non-graphics tasks. The guide takes a closer look at the open-source library PyTorch, which allows a Python developer to quickly get up to speed with the features of CUDA that make it so appealing to researchers and developers in artificial intelligence, machine learning, big data, and other frontiers in computer science. The guide describes how threads are created, how they move through the GPU and work together with other threads, how memory can be managed on both the CPU and the GPU, how CUDA kernels are created, and how everything else involved is managed, largely through the lens of Python.
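For readers new to the thread model, the indexing idea can be sketched without a GPU at all. The snippet below is a CPU-only illustration in plain Python, not code from the guide and not real CUDA or PyTorch. The function names `global_index` and `simulated_vector_add` are hypothetical, and the nested loops only stand in for blocks and threads that would run in parallel on actual hardware.

```python
# CPU-only simulation of CUDA-style thread indexing; no GPU or CUDA toolkit involved.
# All names here are illustrative assumptions, not part of any real library.

def global_index(block_idx, block_dim, thread_idx):
    """Mirror the common CUDA expression blockIdx.x * blockDim.x + threadIdx.x."""
    return block_idx * block_dim + thread_idx


def simulated_vector_add(a, b, block_dim=4):
    """Add two equal-length lists the way a 1-D grid of thread blocks would split the work."""
    n = len(a)
    out = [0] * n
    # Round up so that every element is covered by some thread.
    grid_dim = (n + block_dim - 1) // block_dim
    for block_idx in range(grid_dim):        # blocks would run in parallel on a real GPU
        for thread_idx in range(block_dim):  # as would the threads inside each block
            i = global_index(block_idx, block_dim, thread_idx)
            if i < n:                        # bounds guard for the last, partial block
                out[i] = a[i] + b[i]
    return out


result = simulated_vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50])
print(result)
```

On a real GPU the two loops disappear: each thread computes its own index and handles one element, which is why the bounds check matters whenever the data size is not a multiple of the block size.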

Getting started with something like this is almost a requirement to stay relevant in the fast-paced realm of computer science, as machine learning has taken center stage with almost everything related to computers these days. It’s worth noting that, strictly speaking, an Nvidia GPU is not required for GPU programming like this; AMD has a GPU computing platform called ROCm, but despite being open source, it is still behind Nvidia in adoption rates and arguably in performance as well. Some other learning tools for GPU programming we’ve seen in the past include this puzzle-based tool, which illustrates some of the specific problems GPUs excel at.

this is cool but just learn openCL. works on a lot more compute devices and is much more performant. i like python because it’s simple but openCL is faster.

plus you can do things like run code on fpgas 😊 via openCL or even make algorithm specific hardware accelerators.

Please, please do NOT interpret this as a flame. This is a genuine question from someone who doesn’t know a whole lot but who does work in an “HPC-adjacent” job.

How does the future look for openCL? It seems like the only thing AMD wants to talk about is ROCm. Apple dropped openCL support, likely for nefarious reasons – I suspect to break code portability to Linux. On the other hand, does ROCm rely on openCL underneath? Yes, I searched (grin) but I’m seeing inconsistent answers. New versions of openCL are definitely being released.

The national labs have bought a whole lot of AMD nodes and it seems all they talk about is ROCm.

rusticl is the future

You might be right here. Rusticl is the only implementation really working towards universal applicability. Given the drive to move ML to mobile silicon, this has the best opportunity to become the new OpenGL-like interface for developers. To give an answer to the OP, OpenCL is alive and well.

Thanks! Looks like I’ll need to take a look at it. It would be funny if openCL was the thing that drives me to rust. :-) :-)

The question is whether the next generation of AMD graphics built into their processors will support 64GB+ of VRAM allocation from the system RAM, and if that will help large datasets with medium compute requirements.

Vaguely remember there being bios hacks on older AMD platforms allowing you to go way over AMD established limits. Would have to rummage through my bookmarks pile to find the source for that.

rebar can do this but the data traffic over pcie to/from system dram and gpu vram will kill your app’s performance

“SQL in the past decade or so”

wut

These kids today.

the reason rocm sucks is AMD’s entire software development process is deeply dysfunctional. they screw around with hacked up container images and heavily patched software that is shipped directly to end users and is invariably full of bugs. their management can’t even get cards to their own support engineers to do testing and CI, who are having to beg for hosting and compute from facebook to get the job done. this makes it hard to support one generation of cards and impossible to support multiple generations, meaning AMD don’t support practically anything and the chances of your particular card being supported are near-zero. they hide this behind a lie of “unofficial support” and vague guidelines, when in reality anything not actively focused on breaks immediately and irrevocably.

as usual, the situation on AMD cards won’t improve until an outside force does all the work for them. see: ACO, now the same is playing out with rusticl and chipstar.

oh, in addition to ACO, see also the community’s RADV vs their AMDVLK

you speak like there is an alternative…

Nvidia is even worse. I develop for/on Jetson NX, and it is a complete clusterfsck.

(For example, after a fresh flash the SoM can’t start because the setup wizard fails to launch in the GUI, since it freezes on many HDMI monitors. There is no user to log in with; the wizard is supposed to create one.)

Just bug after bug. And you can find the same bug on the Nvidia forum, but not a single useful reply from the Nvidia developers. Usually the person gets ghosted and the bug report is auto-closed.

Pff, enough ranting.

import jax.numpy as np

better way https://github.com/SciRuby/rbcuda