Do you use a spell checker? We’ll guess you do. Would you use a button that just said “correct all spelling errors in document?” Hopefully not. Your word processor probably doesn’t even offer that as an option. Why? Because a spellchecker will reject things not in its dictionary (like Hackaday, maybe). It may guess the wrong word as the correct word. Of course, it also may miss things like “too” vs. “two.” So why would you just blindly accept AI code review? You wouldn’t, and that’s [Bill Mill’s] point with his recent tool made to help him do better code reviews.

He points out that he ignores most of the suggestions the tool outputs, but that it has saved him from some errors. As with a spell checker, sometimes you just hit ignore. But at least you don’t have to check every single word.

The basic use case is to evaluate PRs (pull requests) before sending them or when receiving them. He does mention that it would be rude to simply dump the tool’s comments into your comments on a PR. This really just flags places a human should look at with more discernment.

The program uses a command-line interface to your choice of LLM. You can use local models or select among remote models if you have a key. For example, you can get a free key for Google Gemini and set it up according to the instructions for the llm program. Many people will be more interested in running it locally so they don’t share their code with the AI’s corporate overlords. If you don’t mind sharing, though, there are plenty of tools like GitHub Copilot that will happily do the same thing for you.

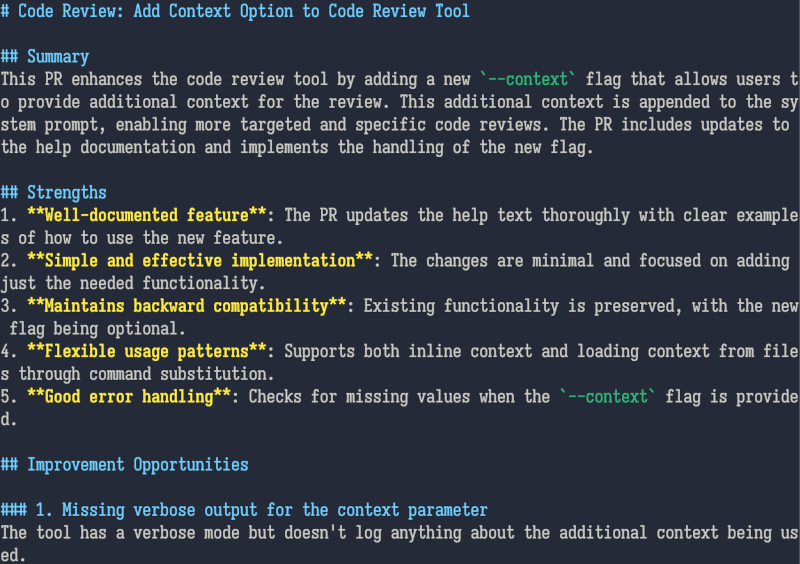

The review tool is just a bash script, so it is easy to change, including the system prompt, which you could tweak to your liking:

Please review this PR as if you were a senior engineer.

## Focus Areas

– Architecture and design decisions

– Potential bugs and edge cases

– Performance considerations

– Security implications

– Code maintainability and best practices

– Test coverage

## Review Format

– Start with a brief summary of the PR purpose and changes

– List strengths of the implementation

– Identify issues and improvement opportunities (ordered by priority)

– Provide specific code examples for suggested changes where applicable

Please be specific, constructive, and actionable in your feedback. Output the review in markdown format.
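To make the idea concrete, here is a minimal sketch of how a script like this could hand a diff and a system prompt to the llm command-line program. This is not the actual script from the tool: the function name `review_diff` and the shortened prompt are our own placeholders. It assumes the llm program is installed and configured, and relies only on its `-s` (system prompt) and `-m` (model) options plus its ability to read the prompt from standard input.

```shell
#!/usr/bin/env bash
# Sketch only: NOT the real review script, just the general shape of one.

# Shortened stand-in for the system prompt quoted above (hypothetical).
SYSTEM_PROMPT='Please review this PR as if you were a senior engineer.
Please be specific, constructive, and actionable in your feedback.
Output the review in markdown format.'

# review_diff (hypothetical name) reads a diff on stdin and prints the
# model's review on stdout. An optional first argument selects the model;
# without it, llm falls back to whatever default model is configured.
review_diff() {
  local model="${1:-}"
  if [ -n "$model" ]; then
    llm -m "$model" -s "$SYSTEM_PROMPT"
  else
    llm -s "$SYSTEM_PROMPT"
  fi
}
```

With the function loaded, something like `git diff main...HEAD | review_diff` would review your local changes before you open a PR (assuming your base branch is called `main`), and piping the output of `gh pr diff` into it would do the same for a PR you have received. Changing the prompt is just a matter of editing the `SYSTEM_PROMPT` string.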

Will you use a tool like this? Will you change the prompt? Let us know in the comments. If you want to play more with local LLMs (and you have a big graphics card), check out msty.

Regarding local models, does anyone have recent experience of whether the models are actually usable for tasks like code review now? I tried some llama variant a year ago, and the quality was useless, and with a low-tier GPU it was way too slow.

It very much depends on the size of the model you are using in addition to the model itself. Personally, I find that any model smaller than 24B simply produces so much garbage that it’s completely unusable, and even with 24B models you still have to pay a ton of attention to what, exactly, it’s doing or producing.

Running a 24B model or bigger, let alone at a decent speed? Yeah, you’re gonna need some beefy (and expensive) hardware for that.

Qwen coder is a good model for coding. I also had good results lately with devstral.

As @WereCatf already said: use it with at least 24B, and either have a beefy graphics card or a dedicated AI NUC in your local network like the evo-x2 from GMKtec (with ollama or vllm as a service).

We haven’t developed a ton of more and less sophisticated tools deterministically checking our code according to rules we devised (spell checkers, style checkers, static code analysers, CI test pipelines) just to replace them with some vibing parrot without any regard for the rules whatsoever.

Inability to follow rules of logic is what makes most of these AI/LLM tools useless in our industries, which were built from the ground up on logic. From a shoemaker’s shop to a rocket factory, we strive for repeatability by following rules/recipes. These tools don’t fit that paradigm.

I’ll just leave this here,

https://www.calendar.com/blog/claude-opus-4-achieves-record-performance-in-ai-coding-capabilities/

That’s a puff piece of the highest order, and reads as though it was AI generated itself. I’m with steel man on this one.

I also stand with steel man

Totally agree. It’s especially the case when we’re trying to hammer down a nail with a drill. Generative AI just mixes and matches a lot of something to give something out. Then you put an LLM between the in and the out, and it gives you an expanded version of your smartphone keyboard’s next-word suggestion tool.

And it gets worse when people think that since ChatGPT and similar tools can generate seemingly well-written text, they give you truthful info, like some sort of oracle. But in the end it’s just giving you what probably comes next after the word you said.

But since most of them are built on top of tech that helped us translate archaic texts and forgotten foreign languages, the thing they are fundamentally good at is text. Most of them are very good at translation, spell check (it kind of takes context into account), formatting text, and expanding your text based on a few lines that give the central idea. They are also a very good tool for making short and simple code snippets in simple and popular programming languages, since they are based on language and coding is basically writing instructions as text.

They just don’t do most of the things we’re induced to believe they do, at least not well. Ask them what papers can help you with welding high-alloy steel, and they make up citations that look perfectly fine but do not exist. Give them a paper and ask for a summary of it all, and there you go. Ask for a complete piece of software, and you get a weak excuse for why it can’t do it; ask for a piece of Python code that processes an image to recognize a kind of pattern, and it works perfectly.

TL;DR: it won’t take the place of the standard analytic tools of today, but it will accelerate some of the specific but simple tasks of yesterday. Now anyone can review a paper or make a piece of code to solve a small but complex task, but it can’t give accurate info or use deterministic logic.

+1

Yeah it’s fundamentally the wrong type of tool, and no amount of refinement or extra horsepower is going to fix that. AI is certainly going to change the world, and it will be successfully applied to a lot of things (after all, many jobs are already BS involving humans producing tons of meaningless text that doesn’t have to make sense or be accurate), but there are areas in which it will disappoint because of an inherent mismatch with the application.

Vibe coding will work well enough for enshitified apps which do things that nobody actually relies on, and even then it will create tons of security risks, but not for actually important things.

Using it as “spell-check but for code” might be moderately useful as long as it doesn’t become the only debugger people rely on.

I can see the shadows of a dystopian future where programming will be reduced to mere commands to an AI…. and the future is nigh

I see that too. AND in many ways it’s similar to the shift from assembly language to C, then from C to things like COBOL, then PHP, and now Python. Most newer / younger programmers today have no idea at all what the assembly might look like, what “the real code” is “actually doing”, a gray beard might say.

That has benefits and drawbacks. Most of today’s code is far, far less efficient in terms of memory and CPU usage. But much more efficient in terms of the programmer’s time put into it.

Using an AI to (help) write code is similar. You can more quickly get lower-quality software.

Very high level languages like Python have also enabled people to write software without really knowing very much about writing software, about programming concepts. That’s both good and bad. Random homemaker can whip up a script that helps automate the grocery list. That’s cool. Until they expose it on the Internet. Then they get 1,000 packages of cheese delivered, because not everyone on the Internet is nice.

What I’m seeing and expect to continue is that experts will use the new tools to make better software, faster. That requires expertise in how to use the tools, and which tools to use when. Less knowledgeable people will use inappropriate tools in foolish ways and produce junk. Same as always. Just a different tool.

Sorry gotta disagree.

Regardless of which programming language is used, a good developer has a mental model of how components will interact, how everything fits into a security strategy and coding for maintainability.

An AI simply slices and dices existing code samples it’s been trained on. There is no unified mental model, just code shards.

Sorry, I have to disagree.

Regardless of which computer language is used, a good programmer writes code based on a mental model of how users will use the application, how components will interact, the ramifications of design decisions on performance and accessibility, how security will be verified and maintained, and so on.

A generative AI simply slices and dices the samples of code it’s trained on. It does not have any mental model of what a user is or what a user’s needs are, except in so far as a thin residue of the various coders’ original and disparate visions remains embodied in the various chunks of code it was trained on.

Kind of like how if you are a mechanic now who can deduce and diagnose engine problems from core concepts using the scientific method, you are viewed as a living God by mechanics who can only look up a youtube video detailing their problem… Those who are still able to work from first principles will always have a special power in such a world. But the slop must flow… the slop must always flow, in ever-increasing quantity.

^^^ THIS!!! So much this! I’ve seen this exact analogy in action with folks relying on YouTube videos rather than understanding symptoms and correlating them with operational principles – in order to determine root causes and therefore be able to effect a proper fix. And I’ve been seeing this in more and more fields. It (kinda) works, in a manner of speaking – but what happens when YouTube isn’t available? Or when you run into a really rare problem that doesn’t have a YouTube video for it yet? (an old ‘graybeard’ here, shaking his head…)

I’ve always found it interesting the lengths that people go to avoid code reviews. I personally enjoy code reviews and this is why I use an LLM based system that allows me to review and edit all the LLM generated diffs before they are applied.

What’s fascinating is that some of the most prolific coders tend to give the worst code reviews. It seems like a personality trait similar to extroversion vs introversion.

Anyway I personally believe that LLMs should be writing most of the code and humans should be reviewing most of the code

Brilliance is very often correlated with laziness. Just seems to be the way it goes most of the time. The incredibly rare case of brilliance in absence of laziness is where interesting things really start to happen.

So far the only useful AI-assisted task at my work is ChatGPT describing advanced SQLs in plain/manageresian engrish (some SQLs have multiple layers of logic – ChatGPT usually falls right through the first WITH or UNION, btw). The hit rate is maybe 60%, but it gets the initial pre-fudging just fine, then I edit the verbal chew to make it sound less robotic, or pass final grinding to one of our English Major suddenly turned programmer co-workers (thankfully, most don’t have an issue lending a helping brain).

The other task automagically enabled, Visual Studio suggestions as I type stuffs, and its hit-and-miss is even lower, 50/50 at best. That son of Excel Paperclip keeps sneaking in autocarrot/shite I didn’t nintedo (paen nintended).

Regardless, our top crust is high on the new fad/drug/AI, and so far I suspect I will be employed for some while longer. One of my older tasks was hammering the crappiest H1B-visa-holders’ code into a working shape that would be mostly usable. I suspect it will be a matter of time until I will be hammering the crappiest AI-generated “solutions” into similarly working shapes; I already spotted one (auto-generated database with a gazillion table fields of unclear purpose – looks like someone drank half a bottle of Jack Daniels and said “Shat GeyPeeTee, generate me three hundred databases and fill them with the random data intermixed with the migrated/converted data; pick one at random so I can sell it for ten prices as mine”).

Funny enough, AI-code-generating was initially trained on human-written code. I now wonder if it was trained on the crappy code to start with, but it also may be that it was just random commands in random order generated thousand times a second and see which one goes anywhere, and, perhaps, one out of three million reaches the end, ie, accomplishes the task. I am pretty sure that’s what’s in high fashion now, the rough equivalent of a high-speed lottery that really should have been Fourier Transform.

Using vibe-coding AI to replace youtube tutorial-coding H1B is one of the most utopian possible uses for AI that I have heard thus far

Yepyep. Presently we are moving in that exact direction as a whole.

Some of the code I had to hammer seems to have been a communal effort, with copy-pasted parts from different projects, sometimes even with the original comments intact. I’ve seen H1B “coders” calling someone abroad (you can guess the country) and suddenly writing a concise and working thing out of the blue, as if by magic. I’ve also seen the reverse happening: we may be working on some kind of important/critical task, in comes a phone call, I am told “wait, this is important”, and everything is just dropped while they help someone do something somewhere, and I can pick familiar keywords out of a talk that may go on for some while.

Regardless, I actually admire those virtual communities that spring to help, since I am a loner (I prefer investing my own time and effort into mastering things). I may actually benefit from AI providing just such a community; it’s just that so far what I’ve used was rather unimpressive (and with the SQL I use/write – counterproductive).

Back to the code reviews, if AI cannot simultaneously figure out multi-tiered solutions, then it is about as good as me, a loner who usually slowly goes through each tier, audit trail and all, to chart the system/systems by hand. Some of the systems we support are not just more than three tiers, they are written in different languages (some – nearly obsolete, others a bit obscure, Natural? Anyone heard of Natural? Mainframe …). If AI can train itself on THOSE, there is quite a chasm filled to the brim with the potential – all those financial transactions, overnight banking settlements, they are still mainframe code, and some is quite old, my previous job was with a bank, overnight batch jobs, heh.

Problem isn’t the language, it’s the entire ecosystem one has to become acclimatized to. That’s why new mainframers are hard to find.

That’s AlphaGoCode. It only needs 800 virtual human lifetimes worth of simulation to approximate the average human coder.

Once you reach a high enough level of mass parallel compute, it becomes simpler to produce infinite monkeys with typewriters than to produce Shakespeare.

Yet they will produce so many Sheakspeares, Shakespears, Sheikspars…. that no one will be able to recognize Romeo in Juliet’s balcony

I’m convinced that’s how Microsoft produced Windows: It compiles? Ship it!

I second that.

We should have teamed up, I built this project that I call GitCred – STRAIGHT OUTTA COMMITS about a month ago which leverages programming language concept mapping to define levels of skillset and proficiency. Peep it : https://github.com/NoDataFound/gitcred

Or screenshot https://raw.githubusercontent.com/NoDataFound/gitcred/refs/heads/main/images/sidebyside_vibe.png

I know this is probably not that interesting to this group but github copilot really does impress me every time I use it. It has nearly perfected its agentic code review to the point where I am confident that I don’t have to worry about it missing coding standards or any of that. I pay for it so code ownership or any other trust issues with “corporate overlords” is just part of the SLA. I really like using it for rapid prototyping. I can go from concept to working app in a couple hours of work. UX stuff, architecture design, documentation, DevOps, and even everything else I throw at it. Certainly, it screws stuff up if you give too little or too much context. But it is a tool, like resharper or whatever.