Much of the expense of developing AI models, and much of the recent backlash to said models, stems from the massive amount of power they tend to consume. If you’re willing to sacrifice some ability and accuracy, however, you can get increasingly decent results from minimal hardware. That is the tradeoff taken by the Grove Vision AI board, which runs image recognition in near-real time on only 0.35 watts.

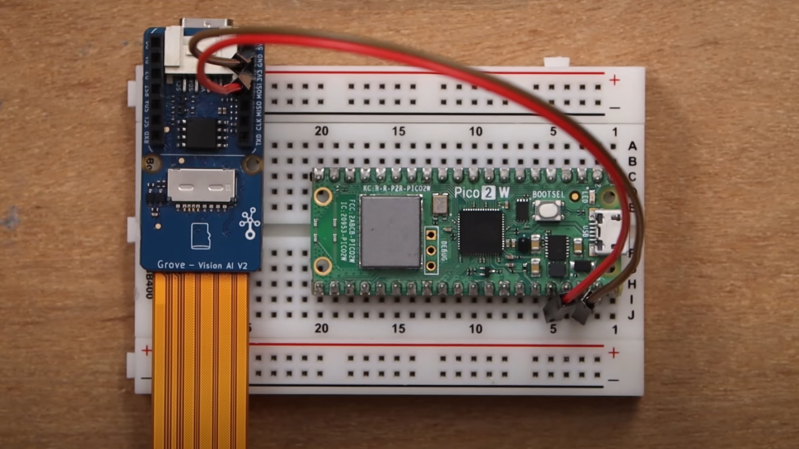

The heart of the board is a WiseEye processor, which combines two ARM Cortex M55 CPUs and an Ethos U55 NPU, which handles AI acceleration. The board connects to a camera module and a host device, such as another microcontroller or a more powerful computer. When the host device sends the signal, the Grove board takes a picture, runs image recognition on it, and sends the results back to the host computer. A library makes signaling over I2C convenient, but in this example [Jaryd] used a UART.

To let it run on such low-power hardware, the image recognition model needs some limits; it can run YOLOv8, but it can only recognize one object, runs at a reduced resolution of 192×192, and has to be quantized down to INT8. Within those limits, though, the performance is impressive: 20-30 fps, good accuracy, and as [Jaryd] points out, less power consumption than a single key on a typical RGB-backlit keyboard. If you want another model, there are quite a few available, though apparently of varying quality. If all else fails, you can always train your own.
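To give a feel for what "quantized down to INT8" means, here is a minimal sketch of symmetric per-tensor quantization in plain Python. The article does not say which quantization scheme the board's toolchain actually uses, so the scheme, the function names, and the sample weights are all assumptions made for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale.

    Symmetric per-tensor quantization: an assumed scheme for illustration,
    not necessarily what the board's toolchain does.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the INT8 values."""
    return [q * scale for q in quantized]


# Made-up example weights.
weights = [0.5, -1.27, 0.03, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale, restored)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half a quantization step per value.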

Such edge AI projects as these are all about achieving better performance with limited resources; if your requirements aren’t too demanding, you can run speech recognition on much more limited devices. Of course, there are also some people who try to make image recognition less effective.

We’ve been doing stuff like that with wavelets since at least the early 1990s.

Considering this is interesting stuff, please tell us more. Please do not respond with “you can google it” as that never is a helpful kind of response. Since you already seem to know the answer please enlighten us with a link of some kind as it would be fun if we could all learn from this. Assuming that you are actually willing or able to share this kind of knowledge.

Yes! I’d be interested in hearing the “wavelet” remark fleshed out, too.

google’d it and found something better than snark:

https://arxiv.org/pdf/1110.01485

Considering that modern literature will exceed what he did in the ’90s

Viola-Jones, for a start, which still drives the face detection for many “neural network” face recognition/emotion measuring/etc models. (though it can recognize other things)

This is an algorithm made to do face detection on shitty cheap microcontrollers in digital cameras from the late ’90s to early 2000s (think 8051 and you’re getting close), and it had to be done in real time several times a second.

The way the Haar features are computed in constant-time regardless of their area (and in only 3-4 simple ALU operations) is particularly clever. (classic application of dynamic programming)
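The constant-time trick mentioned above relies on an integral image (summed-area table). The following is a minimal sketch in plain Python; the function names and the tiny test image are made up for illustration and are not taken from any particular Viola-Jones implementation.

```python
def integral_image(img):
    """Build a summed-area table with an extra zero row and column.

    ii[y][x] holds the sum of all pixels above and to the left of (x, y).
    """
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle with top-left corner (x, y).

    Four table lookups and three add/subtracts, whatever the rectangle size.
    """
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_two_rect(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: left half minus right half (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Toy 4x4 image: bright left half, dark right half.
img = [
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
]
ii = integral_image(img)
print(rect_sum(ii, 0, 0, 4, 4), haar_two_rect(ii, 0, 0, 4, 4))
```

The table is built once per frame in a single pass; after that, every feature at every position and scale costs the same handful of operations.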

It’s also worth mentioning that Viola-Jones can bail out (very) early if there are no faces in the subregion of the image being tested, unlike a neural network, which must complete a full forward pass. This yields dramatic time and energy savings.
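The early bail-out can be sketched as a cascade of cheap tests that rejects a window at the first failed stage. This is only an illustration of the control flow: the stage functions and thresholds below are toy stand-ins chosen for the example, not trained Viola-Jones classifiers.

```python
def run_cascade(window, stages):
    """Return (accepted, stages_evaluated) for one image window.

    `stages` is a list of (score_function, threshold) pairs, cheapest first.
    The window is rejected as soon as any stage scores below its threshold.
    """
    for count, (score_fn, threshold) in enumerate(stages, start=1):
        if score_fn(window) < threshold:
            return False, count  # bail out, later stages never run
    return True, len(stages)


# Toy stage functions (hypothetical; real stages are boosted Haar features).
def contrast(win):
    return max(win) - min(win)


def first_half_minus_second(win):
    half = len(win) // 2
    return sum(win[:half]) / half - sum(win[half:]) / (len(win) - half)


def mean(win):
    return sum(win) / len(win)


stages = [(contrast, 50), (first_half_minus_second, 20), (mean, 40)]

flat = [10] * 16               # featureless window
edge = [200] * 8 + [20] * 8    # bright first half, dark second half
print(run_cascade(flat, stages), run_cascade(edge, stages))
```

Since most windows in a typical photo contain nothing of interest, most of them exit after the first stage or two, which is where the time and energy savings come from.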

I feel your “don’t ask me to google it” is kind of lazy and just inviting people to paste AI slop instead.

Imagine your signal has certain patterns that repeat occasionally and maybe slightly differently (longer, shorter, higher, lower, etc) each time but always similar. Now the signal (or at least part of it) can be characterized by identifying these patterns and giving them parameters, scale and time shift as needed. These repeating patterns are wavelets. They are going to be present for things like a well-defined boundary in an image, where you will usually see the same pattern/wavelet. Now you just detect the wavelet occurring in the signal to find edges. This can also be applied to (lossy) compression: characterize the signal as a series of wavelets (with scale and time shift parameters) and you can then recreate the signal with just the wavelet info.
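As a rough illustration of the "detect the wavelet to find edges" idea, the sketch below slides a Haar wavelet (a block of -1 followed by a block of +1) along a 1-D signal at a chosen scale and reports where the response is strongest. The function names and the sample signal are invented for this example, and a real wavelet transform would look at several scales rather than one.

```python
def haar_response(signal, scale):
    """Correlate the signal with a Haar wavelet at every time shift.

    The wavelet is -1 over `scale` samples followed by +1 over `scale`
    samples, so a flat region gives 0 and a step gives a large value.
    """
    out = []
    for t in range(len(signal) - 2 * scale + 1):
        left = sum(signal[t:t + scale])
        right = sum(signal[t + scale:t + 2 * scale])
        out.append((right - left) / scale)
    return out


def find_edge(signal, scale):
    """Return (index of first sample after the strongest step, response)."""
    resp = haar_response(signal, scale)
    best = max(range(len(resp)), key=lambda i: abs(resp[i]))
    return best + scale, resp[best]


# Made-up signal: a step from 1 to 9 between index 4 and index 5.
signal = [1, 1, 1, 1, 1, 9, 9, 9, 9, 9]
print(haar_response(signal, 2))
print(find_edge(signal, 2))
```

Running the same idea along the rows and columns of an image picks out vertical and horizontal edges, and keeping only the large responses is the starting point for the lossy compression mentioned above.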

Seeing the different wavelets may also help. Check out the diagram on this page for pictures of some common wavelets:

https://www.continuummechanics.org/wavelets.html

Or the picture (half way down) in this article:

https://www.wired.com/story/how-wavelets-let-researchers-transform-and-understand-data/

Did that help?

…Imagine your signal has certain patterns that repeat occasionally and maybe slightly different (longer, shorter, higher, lower, etc) each time but always similar. Now the signal (or at least part of it) can be characterized by identifying these patterns and giving them parameters, scale and time shift as need…

OK you got my attention now, this sounds exactly like market behaviour! Time to make coffee and research!

We’re gonna be rich!!!!

yeah that was exactly my thought…once you have the limitation of recognizing a single object you’re in the realm of much simpler algorithms than what they’re apparently trying to do.

It does not mean one pre-decided type of object (such as face), just one object per image.

For comparison how much wattage would google use for the same task?

This is impressive, but a single LED on your keyboard does not pull 0.35W. That would imply that an RGB-lit 104-key keyboard pulls over 35 watts… nope. Cool project, but I hate it when people strain their credibility making idiot statements like that.

It stretches the statement a bit, but if we’re running an RGB LED at 100% duty cycle at 5V with 20mA per LED then that comes out to 0.3W (W = 5V * 0.020A * 3).

Keyboards get away with it by A: not running the LEDs at 100% duty cycle 20mA and 2: scanning through a matrix of LEDs so they’re not all on at once.

So not a downright “idiot statement” like you claim. But not entirely accurate either.
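The numbers in this exchange are easy to check. The short sketch below redoes the arithmetic from the comments above; the 2.5 W figure (5 V at 500 mA, a USB 2.0 port) is an added assumption used only to show how far the duty cycle would have to drop, and the variable names are made up.

```python
BOARD_POWER_W = 0.35        # from the article
KEYS = 104                  # full-size keyboard, from the comment above
SUPPLY_V = 5.0
CURRENT_PER_DIE_A = 0.020   # 20 mA per colour, worst case
DIES_PER_RGB_LED = 3

# One RGB LED with all three colours at 100% duty cycle.
led_full_w = SUPPLY_V * CURRENT_PER_DIE_A * DIES_PER_RGB_LED

# What the keyboard would draw if every key really pulled 0.35 W.
keyboard_at_board_power_w = BOARD_POWER_W * KEYS

# Assumption: the keyboard must fit a 2.5 W budget (USB 2.0, 5 V at 0.5 A).
USB2_BUDGET_W = 2.5
max_average_duty = USB2_BUDGET_W / (KEYS * led_full_w)

print(f"one RGB LED flat out: {led_full_w:.2f} W")
print(f"104 keys at 0.35 W each: {keyboard_at_board_power_w:.1f} W")
print(f"average duty cycle to fit 2.5 W: {max_average_duty:.1%}")
```

So the per-LED figure is in the right ballpark only at full brightness, and a real keyboard has to run its LEDs at a small fraction of that on average.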

I wouldn’t quibble with saying it uses as much power as a single RGB LED, but why not just stop there? Why stretch it out to the keyboard example, which is misleading and dumb? It’s like saying my Prius can go as fast as a Ferrari!*

*Assuming you limit the Lamborghini to 10% max throttle.

Anyway, I clearly woke up on the wrong side of the bed today.

but we aren’t doing that. 20mA in a blue or white LED is blindingly bright. and it burns them out too. the cheap small white LEDs in my parts pile (i.e., not designed for headlamps) max out around 3mA and are very bright (heh, i use them as headlamps)

i do have the same intuition that “a regular LED draws about 20mA.” i don’t know where it came from or if it was ever true. but the modern world of blue (white) LEDs is nothing like that. you have to special order 20mA LEDs from “super bright” catalogs and they cost way more than the ones that they put 300 of in every keyboard for no reason.

I’m not sure where you’re shopping, or maybe you’re using 0402 parts. Most through-hole LEDs I see today are rated for 20mA continuous forward current without cooling. The big headlamp modules are typically rated for 700mA or higher, and definitely want heat sinking.

keyboards do not use through-hole LEDs :)

The 20mA thing dates back to when LEDs were very inefficient and a typical 5mm red indicator LED needed that much current to be visible in a bright room.

The typical colored dome 5mm indicator LED still draws about 10-20 mA. They’re simply made that way as a “standard”.

The image is poorly chosen for this project. For a project using UART, even the source website uses a photo with 4 jumper wires. Showing just power and GND connected gives the wrong impression of the project.

His creation is 50% certain a hand is a face, so there is that :)

If it can do one model at 20fps, then with a little extra memory, you can swap out the model and do 10 different objects every half a second.
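The estimate above assumes swapping models is free. A small sketch of the same arithmetic with a swap cost added is shown below; the function name and the 10 ms swap time are hypothetical, since the article gives no figure for how long loading a different model takes.

```python
def cycle_time_s(n_models, fps, swap_time_s=0.0):
    """Seconds to run one frame through each of n_models in turn.

    `swap_time_s` is the (hypothetical) time to load the next model.
    """
    return n_models * (1.0 / fps + swap_time_s)


ideal = cycle_time_s(10, 20)                         # no swap cost
with_swap = cycle_time_s(10, 20, swap_time_s=0.010)  # assumed 10 ms per swap
print(ideal, with_swap)
```

With free swaps, ten models at 20 fps do take half a second per round; any real swap cost adds directly to that, once per model.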

Daisy chaining objects, akin to having key words with parameters in speech recognition could be an interesting way to make detection more efficient for given scenarios.