You can use large language models for all sorts of things these days, from writing terrible college papers to bungling legal cases. Or, you can employ them to more interesting ends, such as porting Macintosh System 7 to the x86 architecture, like [Kelsi Davis] did.

When Apple created the Macintosh lineup in the 1980s, it based the computer around Motorola’s 68K CPU architecture. These 16-bit/32-bit CPUs were plenty capable for the time, but the platform ultimately didn’t have the same expansive future as Intel’s illustrious x86 architecture that underpinned rival IBM-compatible machines.

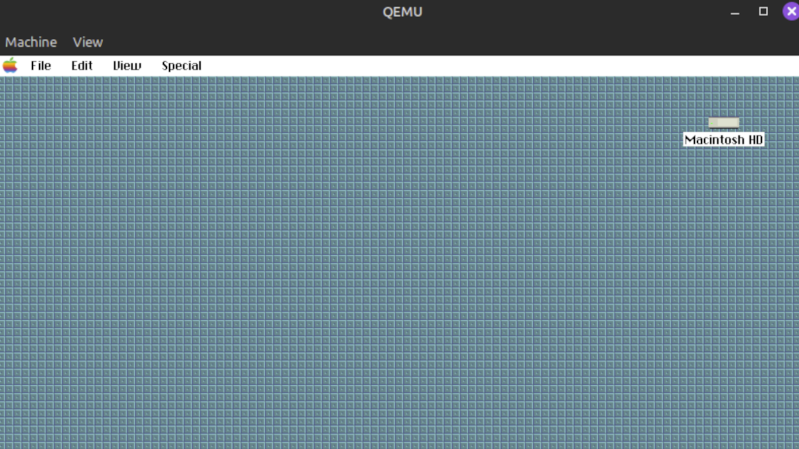

[Kelsi Davis] decided to port the Macintosh System 7 OS to run on native x86 hardware, which would be challenging enough with full access to the source code. However, she instead performed this task by analyzing and reverse engineering the System 7 binaries with the aid of Ghidra and a large language model. Soon enough, she had the classic System 7 desktop running on QEMU with a fully-functional Finder and the GUI working as expected. [Kelsi] credits the LLM with helping her achieve this feat in just three days, versus what she would expect to be a multi-year effort if working unassisted.

Files are on GitHub for the curious. We love a good port around these parts; we particularly enjoyed these efforts to recreate Portal on the N64. If you’re doing your own advanced tinkering with Macintosh software from yesteryear, don’t hesitate to let us know.

Holy cr*p this is impressive: Porting a functioning operating system in just 3 days, working only from binaries. 🤯

Let’s just hope Apple’s lawyers don’t find out about this.

reverse engineering is legal…

Apple’s lawyers don’t care about “being legal”

In simple terms, how does an operating system running on proprietary hardware including graphics, sound, and memory architecture get converted to run on completely alien hardware? I get CPU instruction translation, but how would an LLM know how to translate everything else?

I know NOTHING about this specific project’s strategy, but porting console games to other consoles or to a PC OS has a similar problem. Heck, porting a DOS game to Windows even.

The usual strategy is to cut out those parts that are so platform specific and then make an abstraction layer that presents the same interface to the application while translating into the host’s API. Porting an N64 game to PC, for example, involves stripping out the N64-specific graphics system calls and replacing them with an abstraction layer that approximates them using OpenGL and whatever additional logic is needed.
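As a rough illustration of that strategy, here is a minimal Python sketch. Every name in it (`DisplayBackend`, `InMemoryBackend`, `LegacyGraphicsAPI`, `fill_rect`) is hypothetical and chosen for the example; none of it comes from the System 7 project or from any real console SDK. The old application keeps calling the interface it always used, and only the backend underneath changes per host.

```python
from abc import ABC, abstractmethod


class DisplayBackend(ABC):
    """Host-specific side of the abstraction layer (hypothetical interface)."""

    @abstractmethod
    def set_pixel(self, x, y, color):
        """Write one pixel using whatever the host platform provides."""


class InMemoryBackend(DisplayBackend):
    """Stand-in host: a plain 2D list instead of OpenGL, SDL, or a framebuffer."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.pixels = [[0] * width for _ in range(height)]

    def set_pixel(self, x, y, color):
        # Silently ignore out-of-bounds writes.
        if 0 <= x < self.width and 0 <= y < self.height:
            self.pixels[y][x] = color


class LegacyGraphicsAPI:
    """The interface the ported application expects to call (hypothetical)."""

    def __init__(self, backend):
        self._backend = backend

    def fill_rect(self, left, top, right, bottom, color):
        # Same call signature as the imagined original platform,
        # translated into host operations one pixel at a time.
        for y in range(top, bottom):
            for x in range(left, right):
                self._backend.set_pixel(x, y, color)


if __name__ == "__main__":
    backend = InMemoryBackend(4, 3)
    LegacyGraphicsAPI(backend).fill_rect(1, 0, 3, 2, 1)
    for row in backend.pixels:
        print(row)
```

Swapping `InMemoryBackend` for a backend that talks to a real graphics library would leave the application-facing `fill_rect` call untouched, which is the point of the layer.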

How would an LLM do that? I’d guess the same way agentic coding AIs are already operating: Search the Internet for code that does something comparable and use that to guide the current approach.

Indeed. 9 months with a full team of heavily incentivized “We worked like dogs” developer slaves with full source access :)

‘To boldly go where no Mac has gone before’ https://en.wikipedia.org/wiki/Star_Trek_project

Thanks for sharing that Wikipedia link.

Is virtualization in QEMU considered porting?

This is reverse engineered and open source.

Obviously you can’t expect an operating system built in the 80’s to understand modern systems. There are no drivers. The chipset it uses to talk to the low level hardware will all be alien. This is more of a demonstration of porting the code of an entire operating system to run on a different, yet to be released, architecture in 3 days(!!!?). That’s mind melting and shows the real promise of AI correctly used as a tool, not a fairytale hallucination lawyer or fever dream artist… Anyways, QEMU is presenting era-appropriate hardware devices through a translation layer, and it’s running the x86 machine code on an emulated x86 virtualized processor. Try as you might, running System 7 in QEMU and passing your Intel 14900K through is probably not going to work without completely rewriting it, and that strays far from being a port to being a fresh release. You will get close to native speed, since the code running in the VM is handed right to a real CPU.

“That’s mind melting and shows the real promise of AI correctly used as a tool, not a fairytale hallucination lawyer or fever dream artist…”

Exactly, that’s the exciting part about all of this. I find most implementations of “AI” to be extremely untrustworthy and flawed, but this is a rare example of using the technology in the right way and I applaud the effort.

QEMU/KVM is a very common method of bringing up an OS. Most RISC-V and ARM development is done this way when hardware samples are impractical, as booting off PXE inside a VM is a fast workflow for cross compiling kernels.

https://training.linuxfoundation.org/full-catalog/

exactly joel, and even cooler in my use case it can be run with claude code and serial debugging can feed right into it

Kelsi, huge congratulations for this work. Can I assume that software that stuck to making proper calls to the Toolbox OS routines should work? I have a bunch of scientific data marooned in earlier file formats, (I still have the apps) and I’d like to “lifeboat” them into the 21st century. This might be the key.

eventually, maybe with a compatibility layer

Honestly, for this purpose I’d recommend one of the emulators that runs actual Mac OS, such as Mini vMac. You can install it with MacPorts or try it out (and various others) using Infinite Mac https://infinitemac.org/.

yes, you bring up new operating systems in VMs these days because it’s easier than trying to work around real hardware quirks for I/O while trying to get basic things like memory management, process model going.

So we can use LLMs for some fun before they completely rot out society!

This is incredible and I hope apple doesn’t find out lol

I kind of wonder what the world would look like if Apple had chosen to move to x86 instead of PowerPC back in the early 90s. The Clone-era showed that Apple was never a really good fit for the licensing model that Microsoft pursued, but if Apple could have used commodity hardware, and taken advantage of architecture-level support to provide a path for Windows users’ software, maybe they wouldn’t have struggled the way they did.

True, but let’s not forget the emotionally charged fan base at the time, either.

The Motorola 68000 fans disliked x86 by heart, as can be seen by the upset Amiga fans of that epoch.

They still thought that 68040/68060 were superior to 80486/80586 or had favored them, at least.

Going the x86 route so early might have felt like betrayal to them.

So establishing the Power PC platform was easier perhaps,

since Motorola was involved and because it was easier to emulate/port 68000 code on PPC (endian-ness etc).

The M68k fans also followed the Power PC route in general, I think,

as can be seen by those Power PC parasite boards for 68000 based Amigas.

Also RISC was on the rise at the time and Power PC was seen as a possible successor to x86.

Also for political reasons, Power PC was more open and PC clone makers took that opportunity (or should I say Mac clone makers?).

The Power PC platform was supported by OS/2 and Windows NT, too.

Rivals were Alpha AXP and MIPS R4000 at the time, besides the still then-new Pentium (586).

Some of those platforms still played a role by turn of century on the Pocket PC 2000 platform (aka PPC, based on Win CE 3.0)..

They came out soon after the Windows CE 2.11 based Handheld PCs (HPCs) of late 90s.

By Pocket PC 2002 (Win CE 4.x, Windows Mobile) onwards,

the MIPS R4k and Hitachi SH3 were dropped and Intel’s StrongARM architecture basically won (followed by XScale).

PS: Another interesting processor was the mythical Power PC 615 processor.

It basically was a Power PC chip that did fit in a 486 or 586 socket and supported both 32/64-Bit PPC and Pentium instructions.

https://www.cpushack.com/CIC/announce/1995/PowerPC615.html

https://www.halfhill.com/byte/1995-11_cover3.html

Hi! You may already know this, but Windows NT provided DOS/Windows 3.1x support throughout all platforms it ran on.

The RISC versions of NT included a 286 CPU core based on SoftPC by Insignia.

In Windows NT 4 the emulation was upgraded to 486 level.

So backwards compatibility never was in danger,

Windows NT users always could run their existing Windows software on any kind of computer.

It was merely the Win32 applications that had to be compiled for the processor in the computer.

But even here, there was a solution. Multiple solutions, rather. Commercial ones.

The binary translator “FX!32” made unaltered x86 Win32 applications run on an Alpha system,

while “Motorola SoftWindows 32 for PowerPC” did the same for PPC systems.

Not sure about Posix or OS/2 subsystems on RISC versions, though..

In principle, Windows NT 3.5x and 4 (and 2k) could run 16-Bit command line applications.

Graphical applications for OS/2 1.3, too, if the Presentation Manager add-on was installed.

Again, no idea how compatible the RISC ports were at this point.

Since OS/2 support was limited to 16-Bit OS/2 anyway, a 286 emulation was good enough in principle.

In reverse, there were PC emulators for Macintosh in early-mid 90s.

The early ones even ran on Motorola 68040 systems, such as SoftPC, SoftAT

or SoftWindows 1.0 (Windows 3.1 on emulated PC/AT with 286).

Later versions such as SoftWindows 2, SoftWindows 95 or 98 took advantage of the features of the Power PC processor.

Virtual PC 2 and 3 could even pass-through the 3dfx Voodoo 1 and 2 cards (if installed).

Later versions emulated a Pentium MMX PC, which even Windows XP could run on.

The last version, Virtual PC 7, was available bundled with a copy of XP.

Along with Office for Mac, it was a common basic software of an advanced user of a Macintosh.

On a high-end Power Mac G4/G5, the “emulation” wasn’t too shabby, actually.

It was okay for productivity software, web design, some lightweight gaming etc.

Apple was also considering ARM at the same time they were looking at IBM PowerPC in 1992.

https://youtu.be/ZV1NdS_w4As?si=9nhEKruKeR0TryPp

This is my project. Just to clarify: it is not fully functional. It is a booting skeleton that still needs lots of work. Pull requests are open :D

Additionally, it’s not meant to be just an x86 port; it’s portable and could run on any number of platforms.

This is cool, but how deep was the reverse-engineering phase? Is this an approximation of the low-level functions with prompting to get the user interface to look about right, or is this more like a decompilation/port project akin to the N64 ones?

Very deep. This is not a prompt-inspired hallucination.

yeah this sort of task is pretty much LLM/Ghidra bread and butter, congrats!

May we ask what your background is? Must be pretty awesome to have the skills to pull this off. Well done!

If I understand correctly, you analysed the original binary to figure out how things worked, and then did a clean room reimplementation, right? This is very cool, you did what Apple was not able to do with the PPC transition!

This doesn’t seem to be a “port”, but a “reimplementation” to me.

Even more impressive given large parts of the source material were hand written assembly and pascal, so not just made portable to other architectures, but converted to different programming languages than the original source.

While this is straight-up derivative work (which may or may not fall under fair use), I wonder whether AI-generated specification would be “clean room” enough to escape copyright?

Just a couple days ago I also discovered LLM assisted decompiling of binary blobs (riscv in my case) and it’s an absolute game changer! It blows the ghidra decompiler out of the water, which was already way better than IDA’s in my experience.

what are you using?

now if i could just use this to make a 64 bit port of the total war rome 2 binary id be set.

On 64-bit Ubuntu, the following are required to build to completion:

sudo apt -y install mtools

sudo apt -y install gcc-multilib

Expect lots of warnings during the compile.

Please do Amiga next :)

I need subset only GUI and library (opengl or sdl3)

This is fraudulent, at best. While at some level this project is an interesting commentary on what can be done with a LLM, to say it resulted solely from binary analysis as a clean-room is actively disingenuous (aka. a lie). There are literal references to the apple source files that originated the C sources in this project. These are not referenced in the whitepaper at all!

For example, see these files (there’s much more…). As of the current revision these files are still present and presumably used as-is.

https://github.com/Kelsidavis/System7/blob/5c38150d28422802a251ab35506edbd7d9aab4da/src/MemoryMgr/memory_manager_core.c

https://github.com/Kelsidavis/System7/blob/5c38150d28422802a251ab35506edbd7d9aab4da/src/QuickDraw/quickdraw_pictures.c

I don’t know how commonly it’s known outside the mac community, but the entire System 7.1 / “SuperMario” ROM source was leaked a few years ago and can be browsed in this repository: https://github.com/elliotnunn/supermario . As someone who is quite familiar with that source, it was obvious skimming through the project sources that there was more “understanding” than could be explained by any amount of time spent solely in debuggers and binary reverse engineering tools. Many common strings, method names, concepts, etc that you will /never/ recapture exactly through any amount of binary analysis.

Clearly, the entire Apple source must have been fed into an LLM, along with indeterminate middle steps as we can’t trust methodology from the paper, the result being an “act-alike” sharing some concepts but no practical relation after manual cleanup/additional implementation to get something that can boot. The sad part is that alone would have been interesting without the fake premise, but couching this project as any kind of “reverse engineering by LLM” is fake until proven otherwise.

LLMs know stuff, they hold all of the knowledge compressed inside. Rather famously, early LLMs would recite Carmack’s fast inverse square root verbatim, including header and comments. That’s what makes LLMs both useful and super sketchy legally.

You cited two files. I ran function/body clone detectors (CPD/NiCad) on those exact pairs and found no cross-repo clones. Our winnowing similarity is low and localized to public API/opcode names required by Inside Macintosh. Internals differ materially (heap metadata, compaction strategy, error/bounds handling, parser structure, logging).

We also scanned the repo for Apple/SCCS markers and “SuperMario” mentions — zero hits.

Detailed ROM→C derivations for these two files (with addresses/bytes) are in docs/DERIVATION_APPENDIX.md. I welcome third-party audits; reproducible builds and scripts are provided.
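For readers unfamiliar with the "winnowing similarity" mentioned in this reply, the general technique can be sketched as follows. This is a minimal Python illustration of winnowing fingerprints compared with a Jaccard score; it is not the tool or the settings used in the audit described above, and the function names and the values of `k` and `w` are assumptions picked for the example.

```python
import hashlib


def kgram_hashes(text, k):
    """Hash every k-character substring of the whitespace-stripped, lowercased text."""
    normalized = "".join(text.split()).lower()
    return [
        int(hashlib.sha1(normalized[i:i + k].encode("utf-8")).hexdigest(), 16)
        for i in range(len(normalized) - k + 1)
    ]


def winnow(hashes, w):
    """Keep the minimum hash of each sliding window of w consecutive hashes."""
    if not hashes:
        return set()
    if len(hashes) < w:
        return {min(hashes)}
    return {min(hashes[start:start + w]) for start in range(len(hashes) - w + 1)}


def similarity(text_a, text_b, k=5, w=4):
    """Jaccard similarity of the two fingerprint sets (0.0 when both are empty)."""
    fp_a = winnow(kgram_hashes(text_a, k), w)
    fp_b = winnow(kgram_hashes(text_b, k), w)
    union = fp_a | fp_b
    if not union:
        return 0.0
    return len(fp_a & fp_b) / len(union)


if __name__ == "__main__":
    original = "for (i = 0; i < n; i++) { total += values[i]; }"
    renamed = "for (j = 0; j < n; j++) { sum += values[j]; }"
    print(similarity(original, original))
    print(similarity(original, renamed))
```

A score like this only measures textual overlap of the fingerprints. It says nothing on its own about where shared identifiers or file names came from, which is the question the replies below argue about.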

The introduction of docs/DERIVATION_APPENDIX.md very clearly post-dates the version of memory_manager_core.c linked by zigzagjoe. At that hash, the top of memory_manager_core.c indicates:

“* Reimplemented from Apple Computer Inc. source code:

* – MemoryMgrInternal.a (SHA256: 4a00757669c618159be69d8c73653d6a7b7a017043d2d65c300054db404bd0c9)”

MemoryMgrInternal.a never shipped with any public Apple SDK, yet that version of memory_manager_core.c references several symbols defined only in the SuperMario sources. Sorry, but replacing a file in your own repository clearly derived from Apple’s internal sources — and documented to be so in that same file — with a C rewrite does not constitute a “clean room” reimplementation nor does it absolve this project from training an LLM with copyrighted Apple code. At best, this is a misunderstanding of terminology; at worst, the provenance of this project (and your reply to zigzagjoe) is disingenuous indeed.

The original sources are all in 68k assembly. Disassembling the binary will result in the same instructions, and any C reimplementation is going to be fresh work.

The only thing you’ve found is that they had metadata about which source files various objects were originally in, which is something that is often available even when the sources themselves aren’t.

I knew there’d be some anti-AI luddites making wild claims in this comment section. You sir, did not disappoint. Lol

My BS alarms were going off when I read the story. I can’t even get “AI” to help me find an episode I remember plenty of details of but not the title of the episode/series without it sending me deep down a rabbit hole of completely unrelated shows and genres, and yet I am supposed to believe a LLM could have Mac OS 7 ported to x86 in 3 days without the source code?

I haven’t looked at the source files (because I’m reimplementing Mac OS myself[1] and want to be sure I’m not copying Apple’s code), but I did take a look at the documentation.

[1] https://www.v68k.org/ams/

ARCHAEOLOGY.md has numerous references to chapter 2 of “IM:Windows Vol I”. However, there is no such volume of Inside Macintosh. There’s the original /Inside Macintosh, Volume I/ which introduces the Window Manager in chapter 9 (and whose page numbers are volume-, not chapter-relative), and the newer /Inside Macintosh: Macintosh Toolbox Essentials/, with the Window Manager as chapter 4. The latter volume does use chapter-relative page numbers; chapter 2 covers the Event Manager.

ARCHAEOLOGY.md contains this claim: “BeginUpdate sets port and clip to (visRgn ∩ updateRgn); EndUpdate validates updateRgn.” Two citations of IM:Windows Vol I are provided as evidence:

pp. 2-81 to 2-82: "BeginUpdate sets the port's clip region to the intersection of the visible and update regions"

While it’s true that drawing occurring between BeginUpdate() and EndUpdate() is clipped to the intersection of the visRgn and the updateRgn, that’s because BeginUpdate() alters the visRgn, not the clipRgn. This is documented in the Window Manager chapters of both old and new Inside Macintosh.

Incidentally, chapter 2 of IM: MTE does mention BeginUpdate() on page 2-48: “The BeginUpdate procedure temporarily replaces the visible region of the window’s graphics port … with the intersection of the visible region and update region of the window.” (emphasis mine)

p. 2-82: "EndUpdate validates the entire update region"

I’m not sure what meaning is intended here. IM: MTE p. 2-48 continues: “The BeginUpdate procedure then clears the update region of the window”. So by the time EndUpdate() is called, the update region is empty. If “validates” indicates a call to ValidRgn(), it’s a no-op because the region is empty. Otherwise, I have no idea how to parse that statement, and it doesn’t resemble anything I’ve seen elsewhere. Quoting IM: MTE p. 2-48 once more: “The EndUpdate procedure restores the normal visible region of the window’s graphics port.” In other words, EndUpdate() doesn’t do anything at all with the update region.
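The behaviour described by the IM: MTE quotations above can be modelled in a few lines. The following is a toy Python sketch that represents regions as sets of pixel coordinates; the class, the field names, and the `rect` helper are made up for the illustration, and this is not Apple's implementation. It only encodes the three documented effects quoted above: BeginUpdate replaces the visible region with its intersection with the update region and then clears the update region, EndUpdate restores the visible region, and the clip region is never touched.

```python
def rect(left, top, right, bottom):
    """Hypothetical helper: a rectangular region as a frozenset of (x, y) points."""
    return frozenset((x, y) for y in range(top, bottom) for x in range(left, right))


class Window:
    """Toy stand-in for a window's graphics port plus its update region."""

    def __init__(self, vis_rgn, clip_rgn, update_rgn):
        self.vis_rgn = frozenset(vis_rgn)
        self.clip_rgn = frozenset(clip_rgn)
        self.update_rgn = frozenset(update_rgn)
        self._saved_vis_rgn = None


def begin_update(window):
    # Temporarily replace visRgn with (visRgn intersect updateRgn) ...
    window._saved_vis_rgn = window.vis_rgn
    window.vis_rgn = window.vis_rgn & window.update_rgn
    # ... then clear the update region. clipRgn is left alone.
    window.update_rgn = frozenset()


def end_update(window):
    # Restore the normal visRgn; the update region is not touched here.
    window.vis_rgn = window._saved_vis_rgn
    window._saved_vis_rgn = None


def drawable(window):
    """Drawing lands only where both visRgn and clipRgn allow it."""
    return window.vis_rgn & window.clip_rgn


if __name__ == "__main__":
    w = Window(rect(0, 0, 4, 4), rect(0, 0, 10, 10), rect(2, 2, 6, 6))
    begin_update(w)
    print(sorted(drawable(w)))
    end_update(w)
    print(len(w.vis_rgn), len(w.update_rgn))
```

In this model the update region is already empty when `end_update` runs, so there is nothing left for it to "validate".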

Between the claims that are easily refuted by official developer documentation and citations of a nonexistent source, I’m led to conclude that ARCHAEOLOGY.md is populated at least in part by LLM confabulations.

I’m always glad to see folks taking an interest in classic Mac OS, and I don’t wish to discourage the author from continuing to do so. Long before I started on Advanced Mac Substitute, I made my own Mac OS interface look-alike[2], and I was fortunate in that the only criticism I got was that SetWindowAlpha() wasn’t period.

[2] https://www.metamage.com/apps/maxim/

That said, publishing a paper invites peer review, and I’ve given mine.

Just for interest:

A standard way to do such projects is to treat the target CPU as a VM and the assembly opcodes as bytecode, and then create an optimising JIT compiler for the new target CPU.
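The first half of that idea, treating the original opcodes as bytecode, can be sketched in Python as below. The instruction set here is a hypothetical toy (`movi`, `add`, `subi`, `bnz`, `ret`), not real 68k encoding, and the sketch is a plain interpreter loop only; the optimising JIT stage the comment mentions is deliberately left out.

```python
MASK = 0xFFFFFFFF  # keep registers at 32 bits, like the original CPU would


def run(program, regs):
    """Interpret a list of (opcode, operands...) tuples against a register dict."""
    pc = 0
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "movi":      # movi dst, imm   -> dst = imm
            regs[args[0]] = args[1] & MASK
        elif op == "add":     # add dst, src    -> dst += src
            regs[args[0]] = (regs[args[0]] + regs[args[1]]) & MASK
        elif op == "subi":    # subi dst, imm   -> dst -= imm
            regs[args[0]] = (regs[args[0]] - args[1]) & MASK
        elif op == "bnz":     # bnz reg, target -> jump if reg != 0
            if regs[args[0]] != 0:
                pc = args[1]
        elif op == "ret":
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
    return regs


# Toy program: d0 = d1 + (d1 - 1) + ... + 1
SUM_PROGRAM = [
    ("movi", "d0", 0),
    ("add", "d0", "d1"),
    ("subi", "d1", 1),
    ("bnz", "d1", 1),
    ("ret",),
]

if __name__ == "__main__":
    print(run(SUM_PROGRAM, {"d0": 0, "d1": 4}))
```

A JIT would replace the `if`/`elif` dispatch with generated host machine code for hot sequences, but the guest-visible register state would stay the same.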

Another similar method is to convert a disassembly to C code, treating the registers as variables, and then compile with an optimising C compiler to the new target.
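To make the "registers as variables" idea concrete, here is a small sketch. The assembly in the comment is an invented 68k-flavoured snippet, not code from System 7, and Python stands in for the C that the comment describes purely to keep the example short; a real project would emit C and let the compiler optimise it.

```python
MASK = 0xFFFFFFFF  # emulate 32-bit register wraparound


def sum_to_n(d1):
    """Hand translation of this hypothetical routine, one statement per instruction:

            moveq   #0, d0
    loop:   add.l   d1, d0
            subq.l  #1, d1
            bne     loop
            rts             ; result in d0
    """
    d0 = 0                        # moveq #0, d0
    while True:                   # loop:
        d0 = (d0 + d1) & MASK     # add.l d1, d0
        d1 = (d1 - 1) & MASK      # subq.l #1, d1
        if d1 == 0:               # bne loop (falls through when d1 hits zero)
            break
    return d0                     # rts


if __name__ == "__main__":
    print(sum_to_n(4))
```

The translation keeps the original's quirks too: called with `d1 = 0`, the loop wraps around through the full 32-bit range exactly as the assembly would, which is why such translations are usually cleaned up by hand or by an optimiser afterwards.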

Looks like smoke and mirrors. Has anyone actually dug into this pile of AI dung?

This is amazing, and while some people on here are showing that their egos are hurt, and calling out imperfections, the results as of today speak for themselves AND this is just the present state. We can start using what they did here to do this with all sorts of things (anyone for AI COBOL programming of all those old workhorses out there? What about assembly versions of things? In the past that would be a waste of time but now it is trivial; we could see a big performance increase in a lot of things). For those who cry foul, sorry, but one year from now even that foul becomes a joke, even if there is any spark of controversy to explore. Two years? This whole project goes down to moments, and in three, we have a whole different world where programming is all abstraction of what a human requests, using all code from all of history AND the uncompiling of all old binaries into a source that, even if a human cannot read it without special skills, AI can. Awesome stuff.