We’ve covered plenty of interesting human input devices over the years, but how about an instrument? No, not as a MIDI controller, but to interact with what’s going on on-screen. That’s the job of GuitarPie, a guitar-driven pie menu produced by a group at the University of Stuttgart.

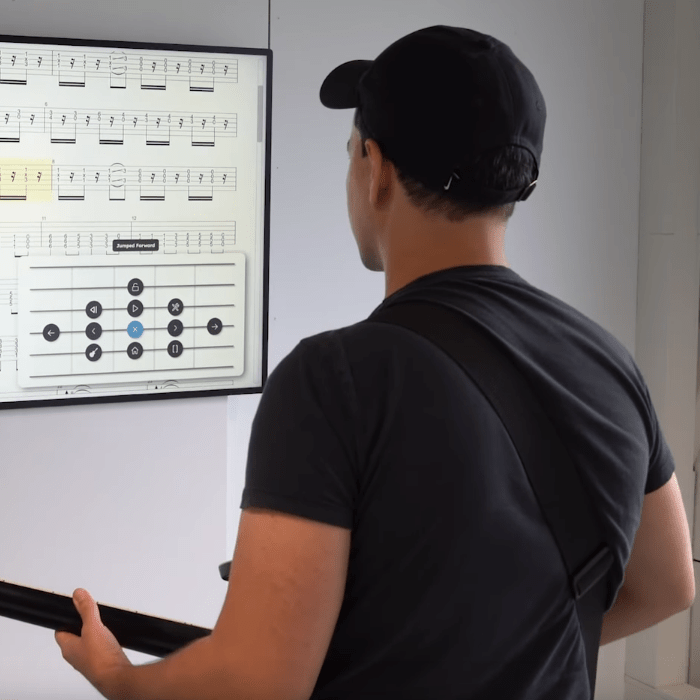

The idea is pretty simple: the computer is listening for one specific note, which cues the pie menu on screen. Options on the pie menu can be selected by playing notes on adjacent strings and frets. (Check it out in action in the video embedded below.) This is obviously best for guitar players, and has been built into a tablature program they’re calling TabCTRL. For those not in the loop, tablature, also known as tabs, is an instrument-specific notation system for stringed instruments that’s quite popular with guitar players. So TabCTRL is a music-learning program that shows how to play a given song.
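
To make the trigger-then-select idea concrete, here is a minimal Python sketch. It is not GuitarPie's code: the trigger note, the slice layout, and the action names are all hypothetical, and it assumes some pitch detector has already turned the audio into a frequency in hertz.

```python
import math

A4_HZ = 440.0
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def freq_to_midi(freq_hz):
    """Round a frequency to the nearest MIDI note number (A4 = 69)."""
    return round(69 + 12 * math.log2(freq_hz / A4_HZ))

def midi_to_name(midi):
    """Name a MIDI note, e.g. 64 becomes 'E4'."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"

# Hypothetical layout, not taken from GuitarPie: one trigger note,
# plus nearby notes that each select one slice of the pie menu.
TRIGGER_NOTE = 64  # E4, the open high E string (an arbitrary choice)
MENU_SLICES = {65: "restart", 66: "slower", 67: "faster", 59: "loop"}

def handle_note(freq_hz, menu_open):
    """Return (menu_open, action) after hearing one note."""
    note = freq_to_midi(freq_hz)
    if not menu_open:
        # Only the trigger note opens the menu; everything else is ignored.
        return (note == TRIGGER_NOTE, None)
    # With the menu open, a mapped note picks a slice; any note closes it.
    return (False, MENU_SLICES.get(note))
```

Feeding it roughly 329.63 Hz (the open high E string) opens the menu, and a following 349.23 Hz (F, one fret up) would select the hypothetical "restart" slice.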

With this pairing, you can rock out to the tablature, and the guitarist need never take their hands off the frets. You might be wondering, “How isn’t the menu triggered during regular play?” Well, the boffins at Stuttgart thought of that: in TabCTRL, the menu is locked out while play mode is active. (It keeps track of tempo for you, too, highlighting the current musical phrase.) A moment’s silence (say, after you made a mistake and want to restart the song) stops play mode and you can then activate the menu. It’s a well-thought-out UI. It’s also open source, with all the code going up on GitHub by the end of October.
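
The lockout described above boils down to a small state machine. The sketch below is only an illustration of that logic: the class name, method names, and the two-second silence timeout are assumptions, not details from TabCTRL.

```python
class MenuGate:
    """Hypothetical gate: the menu trigger only works outside play mode."""

    def __init__(self, silence_timeout_s=2.0):
        self.silence_timeout_s = silence_timeout_s  # assumed value
        self.playing = False
        self.last_sound_time = None

    def start_play(self, now):
        """Enter play mode; the menu is locked out from here on."""
        self.playing = True
        self.last_sound_time = now

    def on_audio(self, now, sound_heard, is_trigger_note):
        """Feed one analysis frame; return True if the menu should open."""
        if self.playing:
            if sound_heard:
                self.last_sound_time = now
            elif now - self.last_sound_time >= self.silence_timeout_s:
                self.playing = False  # a long enough silence ends play mode
            return False  # never open the menu on a frame seen in play mode
        return sound_heard and is_trigger_note
```

The gate never opens the menu on the same frame that ends play mode, so the trigger note has to be played after the silence, which matches the order of events described in the article.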

The neat thing is that this is pure software; it will work with any unmodified guitar and computer. You only need a microphone in front of the amp to pick up the notes. One could, of course, use voice control (we’ve seen no shortage of hacks with that), but that’s decidedly less fun. Purists can comfort themselves that at least this time the computer interface is a real guitar, and not a guitar-shaped MIDI controller.
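
The article doesn’t say how GuitarPie turns microphone audio into notes. One common textbook approach for a single note is autocorrelation, sketched below in plain Python. The function name, the frequency limits, and the use of autocorrelation itself are assumptions for illustration, and a real guitar signal would need more care (noise gating, octave errors, and so on).

```python
def detect_pitch(samples, sample_rate, min_hz=70.0, max_hz=1000.0):
    """Estimate the fundamental frequency of a mono buffer, or None.

    Compares the signal with delayed copies of itself; the delay (lag)
    that matches best corresponds to one period of the note.
    """
    min_lag = int(sample_rate // max_hz)
    max_lag = int(sample_rate // min_hz)
    window = len(samples) - max_lag  # same number of terms for every lag
    if window <= 0:
        raise ValueError("need more samples than the longest lag")

    best_lag, best_score = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(window))
        if score > best_score:
            best_lag, best_score = lag, score

    # Silence (or anything with no positive correlation) yields None.
    return sample_rate / best_lag if best_lag else None
```

The resolution is limited to whole-sample lags, so at low sample rates the estimate can be off by a hertz or two; interpolating around the best lag is the usual refinement.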

Wonderful, please expand this to other instruments that support tablature (diatonic accordion would be a good start).

Wow really cool. A great use of DSP!

That’s a very neat hack and genuinely useful! Getting fancy, if you get the audio from your signal chain with a footswitch option to mute the amp, a live performer can use this to switch backing tracks easily, etc.

of course! because the ‘guitar hero’ game needs a UI

i’ve been dreaming for a long time about making this sort of thing as a UI for non-music apps. it’d be epicly impractical (even if i lost the use of my hands, speech recognition has more bandwidth than tone recognition). but it’d be a good exercise to improve my ear. but i keep getting hung up on questions like

absolute notes (i can sing two octaves, or about 24 semitones hah) or relative intervals?

trigger on tone detection, or provide feedback until the end of the tone so i can ‘hunt’ for the right note and only stop when i’ve hit it?

pie menu or ??something else?? 24 tones isn’t quite an alphabet, and 24 tones is already an ambitious goal.

really the point of the thing is how incredibly difficult it would be to get anything done — the difficulty is training! so i guess the details don’t matter, but on the other hand i’ve already made a number of tools just for training (with no “input device” aspect) so maybe i’m just being silly. it’s a dream :)

Morse code plus chirps wouldn’t be bad. Short/long+ 3 -5 tone ranges+ up/down/static chirps. You’d sound like king jiggy from banjo tooey but it seems workable.

I just want to hear them use this to type a letter!

This is what UI people should be doing instead of changing colors and using the same 50-year-old radio buttons and checkboxes. The world is due for a whole new set of input methods.

Don’t forget changing the font. That counts as a new feature!

From a guitar player, very awesome stuff!

IMHO: “…computer is listening for one specific note, which cues the pie menu on screen…” is a bit of over-engineering, but let’s see where that takes one. Translation: guitar-driven synths are not a new invention, and synths that act as keyboards triggered by guitar notes have been around since the 1970s (probably earlier; I just wasn’t born yet to witness it :] ).

I personally knew one of the local guitar-synth enthusiasts, who used Max/MSP packages he programmed himself (well, not from scratch, from templates), that did something similar; one can repeat the same feat using PureData, which used to be the foundation for Max/MSP. A friend of mine was not only triggering this and that, he was also commanding the sampler using his guitar notes being played (detecting sequences of notes played, to be exact). The logistics, according to him, were actually straightforward, AND – here’s where it gets interesting – he learned this trick during his sabbatical in Netherlands, where someone else was doing the same – and this was year 2004 or so.

Rewind to the present and you find another nifty piece of hardware that can accomplish a kind-of-sort-of similar feat on the cheap: no, not a full-blast Raspberry Pi, but the humble XIAO SAMD21, because it has a rudimentary 12-bit ADC with which one can “sense” notes. My friend back then was planning to use an Arduino MEGA, which can potentially sense all six strings (though not simultaneously, with one ADC switching between 6 inputs, but you get the rough idea), no less than 10 years ago. I honestly don’t remember if he did – we kind of grew apart, but from time to time I hear his name – he lives in Baltimore, MD, where there is quite a community of experimental electronic arts artists, inventing and building their own rigs/wares similar to this one, some on a shoestring/zero budget.

Additional technical details nobody asked for – both PureData and Max/MSP can process the actual sounds being played. While they can trigger MIDI as well, that is not really their purpose – both are meant to process the data that comes in, music or video. (The hint is in the name – ‘PureDATA’.)

I’ve played with PureData (since I could never find spare money to buy Max/MSP), and it boils down to all three being used at the same time: raw data (streaming bits), signals that command them around within PureData, and, if one wants to, MIDI commands spat out or ingested in addition. It is actually an amazing piece of software, since one can also ingest and spit out voltage-control stuff (like voltage-controlled pitch and whatnot). Basically, PureData can act as a standalone music workstation, load and play samples, or, if you are into sound architecture, you can build your synths from scratch right within it. Mixing is included as well; you can do all kinds of things: reverb/echo, phase shifting, sound stretching/tuning, the sky is the limit.

Note sensing is part of PureData, too, but it gets even better – one can build/create Markov Chains (one of the example patches) and build his own personal chord progressions based on the chord played presently. Yes. Tablatures, chord progressions, basics of music theory, which chord sounds right, following which chord and vice-versa. No programming. Right within PureData itself. One can actually get the source code and compile it into the target architecture directly, it is that easy (though, fair warning – real-time streaming means you already know how to set up and use real-time sound libraries, like ALSA).

(I am neither affiliated with, nor work with anyone who wrote PureData – I am also NOT connected with anything Max/MSP, both are mentioned as something I run across, or briefly used).

The Rocksmith game already does this.

Can hardly wait to download this. Sounds fantastic.

Will try at end of October.

Did not say anything about lefthanded guitar players?