For as long as there have been supercomputers, people like us have seen the announcements and said, “Boy! I’d love to get some time on that computer.” But now that most of us have computers and phones that greatly outpace a Cray 2, what are we doing with them? Of course, a supercomputer today is still bigger than your PC by a long shot, and if you actually have a use case for one, [Stephen Wolfram] shows you how you can easily scale up your processing by borrowing resources from the Wolfram Compute Services. It isn’t free, but you pay with Wolfram service credits, which are not terribly expensive, especially compared to buying a supercomputer.

[Stephen] says he has about 200 cores of local processing at his house, and he still sometimes has programs that run overnight. If your program is already written in the Wolfram Language and uses parallelism (something easy to do with that toolbox), you can simply submit a remote batch job.

What constitutes a supercomputer? You get to pick. You can just offload your local machine using a single-core 8GB virtual machine, which is still a supercomputer by 1980s standards. Or you can get machines with up to 1.5TB of RAM and 192 cores. Not enough for your mad science? No worries, you can map a computation across more than one machine, too.

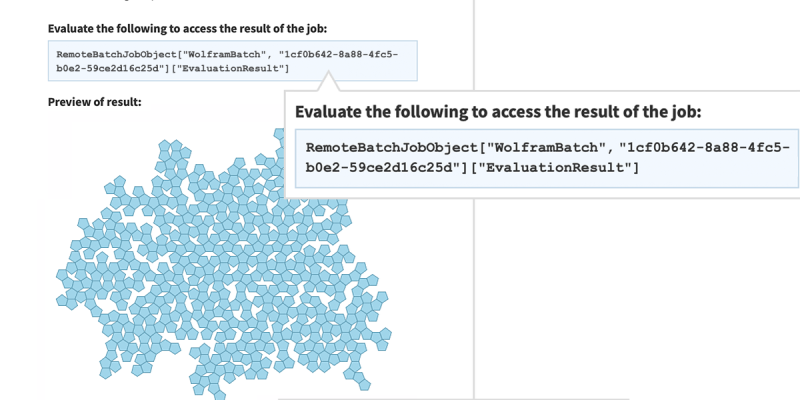

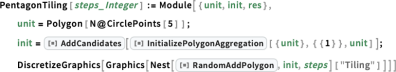

As an example, [Stephen] shows a simple program that tiles pentagons:

When the number of pentagons gets large, a single line of code sends it off to the cloud:

RemoteBatchSubmit[PentagonTiling[500]]

The basic machine class did the work in six minutes and 30 seconds for a cost of 5.39 credits. He also shows a meatier problem running on a 192-core 384GB machine. That job took less than two hours and cost a little under 11,000 credits (credits cost from just over $4 to $6 per 1,000, depending on how many you buy, so this job cost about $55 to run). If two hours is too much, you can map the same job across many small machines, get the answer in a few minutes, and spend fewer credits in the process.
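The credit arithmetic is easy to check. Here is a minimal Python sketch, assuming flat rates of exactly $4 and $6 per 1,000 credits and a round 11,000 credits for the big job (the figures above are only "just over $4" and "a little under 11,000"). The names `job_cost_usd` and `cost_range` are ours for illustration and are not part of any Wolfram API.

```python
# Back-of-the-envelope cost check for the two jobs described above.
# Assumptions (not taken from a Wolfram price list): flat rates of $4 and $6
# per 1,000 credits, and a round 11,000 credits for the 192-core job.

def job_cost_usd(credits: float, usd_per_1000_credits: float) -> float:
    """Convert a credit count to dollars at a given per-1,000-credit rate."""
    return credits / 1000 * usd_per_1000_credits

LOW_RATE = 4.0   # assumed bulk rate, USD per 1,000 credits
HIGH_RATE = 6.0  # assumed small-purchase rate, USD per 1,000 credits

def cost_range(credits: float) -> tuple[float, float]:
    """Return the (cheapest, priciest) dollar cost for a job."""
    return job_cost_usd(credits, LOW_RATE), job_cost_usd(credits, HIGH_RATE)

if __name__ == "__main__":
    for name, credits in [("pentagon tiling", 5.39), ("192-core job", 11_000)]:
        low, high = cost_range(credits)
        print(f"{name}: ${low:.2f} to ${high:.2f}")
```

Under those assumptions the big job lands somewhere between $44 and $66, which brackets the roughly $55 estimate, while the pentagon tiling run costs two or three cents.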

Supercomputers today are both very different from old supercomputers and yet still somewhat the same. If you really want that time on the Cray you always wanted, you might think about simulation.

(Have not looked at it yet…) Given Wolfram this is no toy, but I wonder if it’s still a play space compared to a big boy machine.

It’s not hard (or expensive) to spin up a few hundred cores and terabytes of memory on demand in Amazon’s EC2, for example. Or many tens of thousands, if you want NVIDIA cores.

Quick lookup on EC2: 192 CPU cores, 135,168 GPU cores (8xH200), and 3 TB of total memory for $75/hr. That 31,000 TFLOPS puts it comfortably in the top-100 supercomputer class.

$75/hr would be just the electricity cost for that in a lot of places.

Saying it’s a top-100 supercomputer says more about the super-computer-reporting-community than it does about this particular machine. Based on NVIDIA’s stock price, I bet there are many organizations around the world now that have 8x Nvidia H200 cards in one room. They just don’t do press releases about it. It’s only like $200k worth of hardware so it’s not worth bragging about.

Especially since Amazon has dozens of them for rent…

The “top 100” spans about 3 orders of magnitude in performance.

You know, back in the day, it would have been nice to log in to a supercomputer for some of the problems that I wanted to explore (even something as simple as generating the Mandelbrot set, which took all night on my DEC Rainbow) … But now? Not so much. I consider what I am running at home (12 cores/24 threads) a supercomputer! I.e., I don’t need today’s supercomputer power for what I do.

Nice to see that if you have the need, the resources are available I suppose, even if part of the ‘cloud’.

Get chatty with someone at LLL or NOAA, bet they would throw a few cycles your way.

Stephen Wolfram is kind of the (IMHO rightful) successor to the late Seymour Cray. He’s been at it for more than just a decade or two, and I’ll take his supercomputer over any other emerging microsofts.

I also wish he would open a true computer-making division and drive the existing monopolies into bankruptcy, but that doesn’t seem to be his thing. Such potential going to waste, though; we could use REAL competition to the obese M$ clones. Oh, and finally invest in proper (i.e., not one-off, tax-paid) US chip making. Not just California or Texas, every state, even Rhode Island and Hawaii (where, I am pretty sure, some rare things can be mined, and new ones are being hot-minted deep within every hour).

But I digress. Years and years back I thought about investing in designing and building my own fork of the Transputer, since that was doable on a shoestring budget. Some things just do NOT need massively powerful CPUs to start with, and can sure benefit from the task being properly divided/semaphored between CPUs into digestible chunks. Now that things can be run in the cloud directly, that idea makes even more sense.

There exists an entire, separate field of math that’s really on the border between math and computer science (actually a LOT of computer science things connect to math in many ways). I think it is called something like “efficient algorithms”. Some tasks, when properly analysed, can potentially be reduced to a combination of LUTs and semi-advanced number-crunching.

(Any modern $1 calculator does that rather well with a puny ATtiny13 level of CPU power: LUTs and simple number crunching, even square roots, a classical problem, and it does that on $1 hardware with amazing ease.)
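To make the square-root point concrete, here is a minimal Python sketch of the classic bit-by-bit (shift-and-subtract) integer square root. It needs only shifts, adds, subtracts, and compares, which is the kind of work a tiny 8-bit microcontroller can do without a hardware multiplier. This is an illustration of the general idea, not a claim about what any particular calculator firmware does; the function name `isqrt_shift_subtract` is ours.

```python
import math

def isqrt_shift_subtract(n: int) -> int:
    """Return floor(sqrt(n)) using only shifts, adds, subtracts, and compares."""
    if n < 0:
        raise ValueError("square root of a negative number is not defined here")

    # Find the largest power of four that does not exceed n.
    bit = 1
    while (bit << 2) <= n:
        bit <<= 2

    result = 0
    while bit:
        if n >= result + bit:
            # The current bit belongs in the root: subtract and record it.
            n -= result + bit
            result = (result >> 1) + bit
        else:
            # The current bit does not fit: just shift the partial root.
            result >>= 1
        bit >>= 2
    return result

if __name__ == "__main__":
    # Cross-check against the standard library over the 16-bit range.
    assert all(isqrt_shift_subtract(v) == math.isqrt(v) for v in range(1 << 16))
    print("matches math.isqrt for every 16-bit input")
```

On real 8-bit hardware the same loop would run on fixed-width registers. Python’s arbitrary-precision integers simply hide that detail.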

As a side note, at my work I regularly run into tasks that in the end can be reduced to 8-bit computations. Some are even less advanced than that, a meager 4 bits will suffice, and some need nothing but a few op-amp circuits to crunch things in parallel, zero coding. I always thought, “It is weird that I HAVE to use an advanced, darn overpowered 64-bit PC with a gazillion Jigabytes of RAM just to run a simple Venn diagram computation that can really be done on paper using nothing but pencil and eraser.”

“Computer Science” is math. You are confusing the discipline of programming methodologies with a branch of applied mathematics. This is a common mistake among undergraduates who wanted to learn how to make games, but do a little legwork.

That reminds me of a mid-80s episode of “Computerzeit” featuring the original Cray-1.

It shows how it performs at calculation of Pi, also in comparison to an Apple II.

At one point the presenter jokes that anyone with 15 to 40 million DM can get one of these 5-ton computers for the living room.

So that someone in return has the fastest computer in the world.

The little animated guy then responds “very funny!” in a disapproving voice.

https://www.youtube.com/watch?v=eavtXl4Ei3c&t=1720s

Maybe for market data! Sadly, I have no idea about the language; a big learning curve ahead, I guess.