An oscilloscope is usually the most sensitive, and arguably most versatile, tool on a hacker’s workbench, often taking billions of samples per second to produce an accurate and informative representation of a signal. This vast processing power, however, often goes well beyond the needs of the signals in question, at which point it makes sense to use a less powerful, less expensive device, such as [MatAtBread]’s ESP32 oscilloscope.

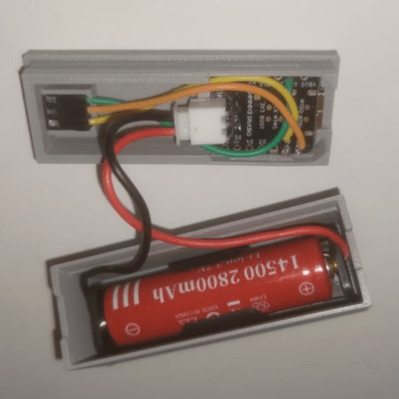

The oscilloscope doesn’t have a display; instead, it hosts a webpage that displays the signal trace and provides the interface. Since the software uses direct memory access to continually read a signal from the ADC, it’s easy to adjust the sampling rate up to the hardware’s limit of 83,333 Hz. In addition to sampling-rate adjustment options, the browser interface includes a crosshair pointer for easy voltage reading, an adjustable trigger level, attenuation controls, and the ability to set the test signal frequency. The oscilloscope’s hardware is simply a Seeed Studio Xiao development board mounted inside a 3D-printed case with an AA battery holder and three pin breakouts for ground, signal input, and the test signal output.
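As a rough illustration of what an adjustable trigger level does, here is a minimal Python sketch. It is not taken from the project's firmware or web interface; the function names are hypothetical, and the only figure borrowed from the article is the 83,333 Hz maximum sample rate. It looks for the first rising crossing of a threshold in a buffer of voltage samples and converts sample indices to time.

```python
from typing import List, Optional, Sequence


def find_rising_trigger(samples: Sequence[float], level: float) -> Optional[int]:
    """Return the index of the first sample that crosses `level` going upward.

    A rising-edge trigger fires when the previous sample is below the level
    and the current sample is at or above it. Returns None if no crossing exists.
    """
    for i in range(1, len(samples)):
        if samples[i - 1] < level <= samples[i]:
            return i
    return None


def sample_times_us(count: int, sample_rate_hz: float = 83_333.0) -> List[float]:
    """Time of each sample in microseconds, assuming a constant sample rate."""
    return [i * 1e6 / sample_rate_hz for i in range(count)]


if __name__ == "__main__":
    trace = [0.0, 0.5, 1.2, 2.0, 1.0, 0.2, 1.6]  # made-up voltages
    index = find_rising_trigger(trace, level=1.0)
    print("trigger index:", index)
    print("sample period (us):", sample_times_us(2)[1])
```

A display would typically redraw the trace so that the trigger index sits at a fixed horizontal position, which is what keeps a repeating waveform from drifting across the screen.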

This isn’t the first ESP32-based oscilloscope we’ve seen, though it is the fastest. If you’re looking for a screen with your simple oscilloscope, we’ve seen them built with an STM32 and Arduino. To improve performance, you might add an anti-aliasing filter.

Use the ESP32 GPIO paired with a DSP or CPLD/FPGA

Get that multiple-MHz sample rate, or GHz

Then just spit raw data stream to esp32 for numbahcrunch

I mean an fpga or large cpld is quite cheap nowadays

That way all the really redundant, high-overhead work is done in hardware and you just spit out a nice data stream the software is already gonna like

And get supafast speed

Depends how well Verilog or VHDL does analog circuits

They don’t. You need some kind of analog front end (whether an integrated ADC, comparators + a resistor ladder, or what have you) that it can interface with digitally.

FPGAs are fully digital, no analogue capabilities other than occasionally having a built in ADC but that is typically not high performance and you just access it through a digital interface.

FPGAs are good for interfacing with high performance ADCs though and are often used in oscilloscopes. High performance ADCs can get pretty expensive though and need a decent analogue frontend to work well.

Well there’s now a really analog equivalent to fpga

https://youtu.be/TWW0Q8sEGEA?si=1OyQJl9Fwmy1hVwS

*really expensive analog

You still need a device that can process the data at that rate. The fastest peripheral on there is SPI at 40MHz, so unless your DSP is pre-digesting the data, the esp32 can’t throughput much over 20MHz readings (on a single lane). The outbound WiFi is also going to constrain the data rate.

Sampling caps out at 83 kHz in this design though, nowhere near the MHz range.

Just wanted to add, that battery is 100% fake. The current best-performing 14500 batteries are around 1500 mAh, from Vapcelltech or other small distributors.

You’re right. I bought a batch off AliExpress. More like 2800 mWh, or 900 mAh. But they work fine.

The idea is awesome: no galvanic connection, cheap device, popular platform.

Unfortunately, the code is generated by LLM:

For these kinds of projects, if it works it works. It’s no enterprise software and it’s not internet facing. I tried antigravity with gemini 3 pro high in CTF solving and it’s really impressive what these models can achieve today.

Don’t hate on the LLMs — just because a wetware humanoid created something doesn’t automatically make it non-suss. I do, however, worry about the future of software development now that all the kids coming up through it just use LLMs and don’t actually need to learn how software actually works. Those kids are not going to be good senior developers.

“Those kids are not going to be good senior developers.”

(I suspect) The near-future goal of AI software development is to go from “requirements” to “system testing” with feedback from testing fed back into the AI for successive regrinding until the software is fully accepted at which time the AI product will be archived until the next revision.

In the above scenario and further into the future, “Those kids” are not actually coding but are just a human feedback link between the requirements and the software-system acceptance.

Obviously, the end-game is to move the AI interface closer to the requirements specifiers and eliminate the chain of software developers thus eliminating a large portion of software costs (said cost redirected to the AI supplier as licensing and subscription fees.)

Undoubtedly, the AI industries will need new human talent to meet market needs until AI can train AI at which time custom software is just a verbal request – and – whatever fee is demanded by the AI. Will that fee be a one-time or recurring cost for the life of the software usage? Additionally the legal landscape must change to accommodate liability.

You know, I’m really REALLY glad that this kind of crap didn’t exist while I was growing up.

Thank all deities for the big 3 ring binders as manuals and BASIC that was RAM constrained to a few tens of K. That might sound medieval, but I would take that over “speaking to AI” any time.

Actual statistics disagree with you:

https://www.coderabbit.ai/whitepapers/state-of-AI-vs-human-code-generation-report

new job: Vibe Coding Cleanup Specialist

We have them already; they’re called Senior Developers.

HAHAHA – sadly, true.

And when the last of them croaks….RUH-ROH! Not that it really matters with today’s prevailing grab the money now and run/mortgage away the long term future corporate thinking.

I was similarly skeptical, having written code by hand for almost 50 years, which is why I did this.

And I have to say, it was good. Like having a junior coder who’s actually read all the docs. It knocked out working code really quickly. The errors it made, like choosing to use JSON for the websocket and double-buffering the ADC data from the DMA (why?), were really quick to fix (and fixing them increased the max sample rate by 3x). They are exactly the kind of errors you’d make if you cut and pasted code without understanding how the hardware works. And its HTML/CSS was better than mine (low bar – I don’t do loads).
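To see why sending samples as JSON over a websocket costs more than sending raw binary, here is a small Python comparison. It is only a sketch, not the project's code: the 12-bit sample range, the 16-bit little-endian packing, and the function names are all assumptions made for illustration.

```python
import json
import struct
from typing import List


def encode_json(samples: List[int]) -> bytes:
    """Text encoding: every sample becomes decimal digits plus separators."""
    return json.dumps(samples).encode("utf-8")


def encode_binary(samples: List[int]) -> bytes:
    """Binary encoding: every sample is packed as an unsigned 16-bit little-endian value."""
    return struct.pack(f"<{len(samples)}H", *samples)


def decode_binary(payload: bytes) -> List[int]:
    """Inverse of encode_binary."""
    count = len(payload) // 2
    return list(struct.unpack(f"<{count}H", payload))


if __name__ == "__main__":
    samples = [i % 4096 for i in range(1000)]  # assumed 12-bit ADC readings
    print("JSON bytes:  ", len(encode_json(samples)))
    print("binary bytes:", len(encode_binary(samples)))
```

The binary frame is a fixed two bytes per sample and needs no number formatting on the microcontroller or parsing in the browser, which is one plausible reason the switch helped the sample rate.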

All in all, I came away feeling good about it. On a constrained project like this where the LLM can get a global view of the whole system it worked well, and the quality of the C is really top notch.

I know compilers exist, but every now and again I do a bit of assembly just for fun. In the same way, I could choose to read datasheets from cover to cover, but I use search to speed it up. This is just the next logical step – a tool that reads the docs and sample code, and pastes it in a more sophisticated manner. As long as you’re competent at understanding what it generates, you’ll love it.

Yes, I’ve had a similar experience. Don’t worry about the peanut gallery, just keep building stuff.

Yeah I’ve been coding for 40 years, professionally full-time for 30, continually learning and guiding junior developers most of that time. So as you might imagine, I had some things to say about AI slop code a year ago.

Now, I’ve found that if you treat it like an enthusiastic but inexperienced junior developer, it can really be useful. I’m getting pretty good code out of Claude Code by doing the following:

Setup an appropriate development environment, with appropriate documentation about ecosystem, existing code etc.

Have one instance that looks through the requirements, documentation etc and outputs an overall architecture with more detailed requirements

Then another instance writes (failing) tests, TDD style

As it starts coding, I do need to monitor it because while 95% of the things it’ll do are decent, every once in a while it makes absolutely stupid decisions

Ensure it doesn’t “fix” the tests to match broken code. Also other variations on this – deciding to work around errors like just catching and ignoring them rather than fixing them.

Keep reminding it to re-read the rules — like absolutely, positively you can’t force-push to a public repo and delete everyone else’s work! That’s how it will “fix” a merge conflict, by just deleting everyone else’s work, permanently.

I’m not sure what ESP32 that is (original, S3, C3, whatever) but the original one had a hardware limit of around 2 MSPS. I made a project a while ago that reached those speeds, but it involved writing to a bunch of horribly documented registers because the IDF functions never got that fast.

It’s an esp32c6 I had lying around. I know some of the others theoretically have higher sample rates, but you’d probably hit other bottlenecks before you got to 2MSPS

I’m telling you I have a project working at 2MSPS, lol.

I made an LCR meter that measures pretty reliably up to 500 kHz (2 channels, 1 MSPS per channel, 500 kHz Nyquist, FFT measurement). It still lacks a few calibrations, but I can tell you it’s totally feasible. But there are a ton of firmware quirks.
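The arithmetic behind those figures can be sketched in a few lines of Python. This assumes a single ADC that is time-multiplexed evenly across the scanned channels; the function names are hypothetical.

```python
def per_channel_rate(total_sps: float, channels: int) -> float:
    """Sample rate seen by each channel when one ADC scans `channels` inputs in turn."""
    if channels < 1:
        raise ValueError("channels must be at least 1")
    return total_sps / channels


def nyquist_hz(sample_rate_sps: float) -> float:
    """Highest frequency that can be represented without aliasing."""
    return sample_rate_sps / 2


if __name__ == "__main__":
    rate = per_channel_rate(2_000_000, channels=2)
    print("per-channel rate:", rate)
    print("Nyquist limit:   ", nyquist_hz(rate))
    print("Nyquist at 83,333 Hz:", nyquist_hz(83_333))
```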

There are much better options if you can just use an additional wifi chip. There are STM32 parts that can reach 5 MSPS properly with DMA and even achieve higher rates with interleaving and they support scanning multiple inputs in sequence. The limit of 83 kHz really doesn’t seem great here.

If you’re replying to me, then the limit is actually 2 MSPS, as I stated. An STM32 will have a better and faster ADC, but at least for my specific project, the ESP32:

– is significantly cheaper

– is dual-core, both cores can be used when wifi is off

– has a DAC

– has wifi (And BT, didn’t use it though)

– also supports scanning multiple inputs in sequence (any reasonable MCU does nowadays). That’s how I scan 2 channels, 1MSPS per channel

– has an unreasonable amount of flash in the module

As an alternative, I was looking at an STM32F410 – Costs more, has 2.4 MSPS ADC, has a DAC, and that’s where the good things end. It’s just 100 MHz, 128 kB flash, bring your own wifi.

It totally depends on the project at hand, but I think you’ll agree with me here

This seems to be only one channel? Is it possible to add multiple channels?

On the esp32c6, no. There is a second ADC, but it’s not accessible whilst WiFi is running, which it obviously is since it’s serving a webpage.

I think some of the other esp32 devices might have more available, but I think you might run into other bottlenecks as to speed

Thanks!

Little bit of searching led me to find this pico oscilloscope project: https://github.com/siliconvalley4066/RaspberryPiPicoWTFTOscilloscope

For the Arduino dudes,

http://github.com/pingumacpenguin/STM32-O-Scope/wiki

Looks cool. TBH, I just needed something quick with the hardware I had lying around, and I wanted to test out Antigravity. It’s not the most sophisticated ‘scope ever made (but it might be the cheapest – you get change from £6)

You could pair it with an ATTINY427 and get ~156 kHz but with a 5V 12-bit ADC that is far better than the one built into the ESP32. You could oversample and get 13 bits and still have ~156 kHz, which is the limitation of the SPI/UART.

I already do this with an ESP32C6 that I use as a CAN bridge between a modern ECU and original analog gauges in a car.

This library I made achieves no overhead on the communication protocol by being able to transmit the next command while receiving the data from the last command.

https://github.com/FL0WL0W/ATTINY427-Expander
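The oversampling idea mentioned in the comment above can be sketched in Python as follows. This is a generic oversample-and-decimate routine, not code from the linked library: it assumes the usual rule of thumb that each extra bit of resolution needs four times as many raw samples, and that the input carries enough noise (roughly one LSB) for averaging to help. The function name is hypothetical.

```python
from typing import List, Sequence


def oversample_decimate(samples: Sequence[int], extra_bits: int = 1) -> List[int]:
    """Trade sample rate for resolution.

    Sums each group of 4**extra_bits raw readings, then shifts right by
    extra_bits, so 12-bit inputs yield (12 + extra_bits)-bit outputs.
    Trailing samples that do not fill a whole group are dropped.
    """
    if extra_bits < 0:
        raise ValueError("extra_bits must be non-negative")
    group = 4 ** extra_bits
    results = []
    for start in range(0, len(samples) - group + 1, group):
        total = sum(samples[start:start + group])
        results.append(total >> extra_bits)
    return results


if __name__ == "__main__":
    raw = [100, 101, 100, 101, 4095, 4095, 4095, 4095]  # made-up 12-bit readings
    print(oversample_decimate(raw, extra_bits=1))
```

Note that the output rate is the raw rate divided by the group size, so keeping a given output rate with one extra bit means the ADC itself has to run four times faster.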