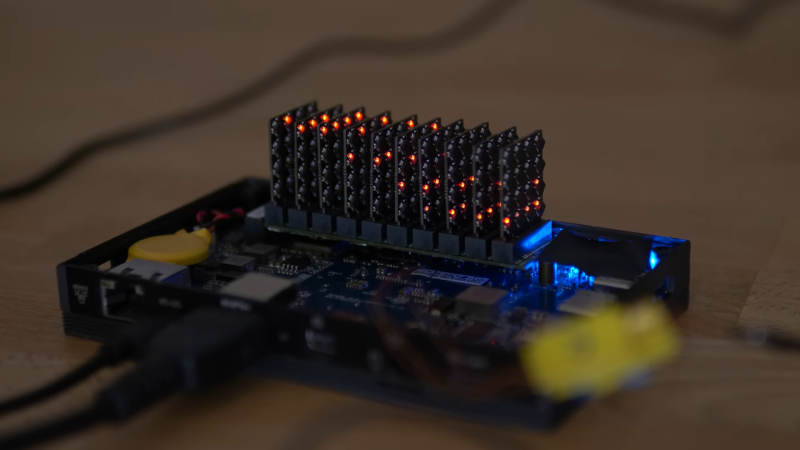

Aside from GPUs, you don’t hear much about co-processors these days. [bitluni] perhaps missed those days, because he found a way to squeeze a 160 core RISC V supercluster onto a single m.2 board, and shared it all on GitHub.

OK, sure, each core isn’t impressive– he’s using CH32V003, so each core is only running at 48 MHz, but with 160 of them, surely it can do something? This is a supercomputer by mid-80s standards, after all. Well, like anyone else with massive parallelism, [bitluni] decided to try a raymarcher. It’s not going to replace RTX anytime soon, but it makes for a good demo.

Like his previous m.2 project, an LED matrix, the cluster is communicating over PCIe via a WCH CH382 serial interface. Unlike that project, blinkenlights weren’t possible: the tiny, hair-thin traces couldn’t carry enough power to run the cores and indicator LEDs at once. With the power issue sorted, the serial interface is the big bottleneck. It turns out this cluster can crunch numbers much faster than it can communicate. That might be a software issue, however, as the cluster isn’t using all of the CH382’s bandwidth at the moment. While that gets sorted there are low-bandwidth, compute-heavy tasks he can set for the cluster. [bitluni] won’t have trouble thinking of them; he has a certain amount of experience with RISCV microcontroller clusters.

We were tipped off to this video by [Steven Walters], who is truly a prince among men. If you are equally valorous, please consider dropping informational alms into our ever-present tip line.

very very cool build and video

Given bitluni’s prolificness, HaD should just subscribe to his YT channel!

That should be a red flag right there.

Given how many of bitluni’s projects end up getting the ‘two paragraph’ + embedded YT video treatment here on HaD, it is downright moronic that they need “tips” to tell them that a new project has been completed and a wrap up video posted.

Honestly? If someone is doing their third write up on, let’s say Applied science, and they don’t subscribe to Ben at that point? I don’t care what they have to say about it because they CLEARLY don’t care about the subject matter.

I wish the epiphany IV and its developer wasnt eaten by DARPA, 64 RISC CPUs each operating at 800 MHz and 1.6 GFLOPS/sec. on a single chip delivering over 90 GFLOPS .designed with a 2d mesh network comprised of 4 1.6GB/sec links for easy clustering.

The 16 core version that made it to market was a really potent piece of kit. DigiKey still stocks them but whats the point of chasing 13 year old development abandoned tech.

Parallella’s main issue was the complete lack of infrastructure and the need to modify the code to make use of the e16 unit. There were projects to make the split automatically (gpgpu), but they never took off. I wrote an llvm backend for e16 (on github), and it became pretty obvious in the end, nobody’s gonna rewrite, say, xterm to use parallella, and its control unit (a10, iirc) was mediocre at best

When I had a Parallela cluster, I reviewed their software and it was awful. Really terrible. Also the boards in the cluster I had were extremely unreliable and would not boot reliably. Basically a hideous bodge job.

Can it run Doom?

My single core 50MHz 486 from 1995 could, so the question is more “how many parallel instances of DOOM can this run?”

Did your 50MHz 486 only have 2KiB of ram and 16KiB of storage, because that is what each node in the above cluster has. Getting doom to run on the above hardware would be truly impressive.

If you need to give up trace thickness for power/ground routing, probably the better trick is to jump them around using 0-ohm resistors so you’re buying yourself an extra layer while maintaining copper thickness. There’s a reason why you buy 0-ohm resistors in reels.

This is not clear. The pads on the PCB for a resistor are always larger than the resistor. How can you gain something if you need a large area to solder it? Or do you mean using through hole resistors? (In that case, a dumb wire is better, no?)

No, the trick he’s referring to is when two traces are trying to cross perpendicularly but they’re on the same layer, you use a 0ohm resistor as a jump for one of the traces: so one trace goes straight through the intersection, the other jumps over it with the resistor (with one pad on each side of the other trace). It’s layers without the actual layers.

This is such an elegant solution that I now genuinely feel slow for not utilizing it before now. Thank you.

Must admit this is the coolest thing I’ve seen in days

The final product even looks very nice, with that even grid of 45-degree diamond-shaped chips. Very slick

What I love most is the entire cluster consumes 4 Watts.

Yup, a very common problem with massively parallel architectures. It’s a big headache transporting data off-chip with other nodes at speeds that can keep up with the compute. That’s why there’s specialized networking technology like InfiniBand.

What if we set them up to control magnets hooked up to their output, which in turn control various lenses that modulate light?

transputers have faster communications. supercoputers have faster communications. this is very important

Indeed. Back in the day (1990; I was working for Computer System Architects, which manufactured Transputer boards), I had a 286 host PC with 4 boards, each hosting 4 T805 Transputers, wired together in a 2D mesh network and communicating back to the host PC via a single link (20 Mbit / sec). Rendering ray-tracing or Mandelbrot set … that single link was the bottleneck. With only 4 Transputers, no bottleneck but considerably less rendering power. I didn’t have that many T805s all the time, just that once occasion. Most days I had 1 × T425 or so to play with while programming for larger systems.

There are times when I miss those days.