When you mention Teletext or Videotex, you probably think of the 1970s British system, the well-known system in France, or the short-lived US attempt to launch the service. Before the Internet, there were all kinds of crazy ways to deliver customized information into people’s homes. Old-fashioned? Turns out Teletext is alive and well in many parts of the world, and [text-mode] has the story of both the past and the present with a global perspective.

The whole thing grew out of the desire to send closed caption text. In 1971, Philips developed a way to do that by using the vertical blanking interval that isn’t visible on a TV. Of course, there needed to be a standard, and since standards are such a good thing, the UK developed three different ones.

The TVs of the time weren’t exactly the high-resolution devices we think of these days, so the 1976 Level 1 allowed for regular (but Latin) characters and an alternate set of blocky graphics you could show on an expansive 40×24 grid in glorious color, as long as you think seven colors is glorious. Level 1.5 added characters the rest of the world might want, and this so-called “World System Teletext” is still the basis of many systems today. It was better, but still couldn’t handle the 134 characters in Vietnamese.

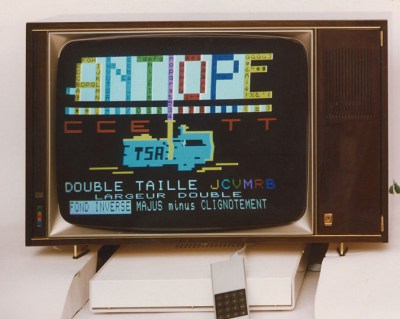

Meanwhile, the French also wanted in on the action and developed Antiope, which had more capabilities. The United States would, at least partially, adopt this standard as well. In fact, the US fragmented between both systems along with a third system out of Canada until they converged on AT&T’s PLP system, renamed as North American Presentation Level Protocol Syntax or NAPLPS. The post makes the case that NAPLPS was built on both the Canadian and French systems.

That was in 1986, and the Internet was getting ready to turn all of these developments, like the $200 million Canadian system, into a roaring dumpster fire. The French even abandoned their homegrown system in favor of World System Teletext. The post says that as of 2024, at least 15 countries still maintain teletext.
