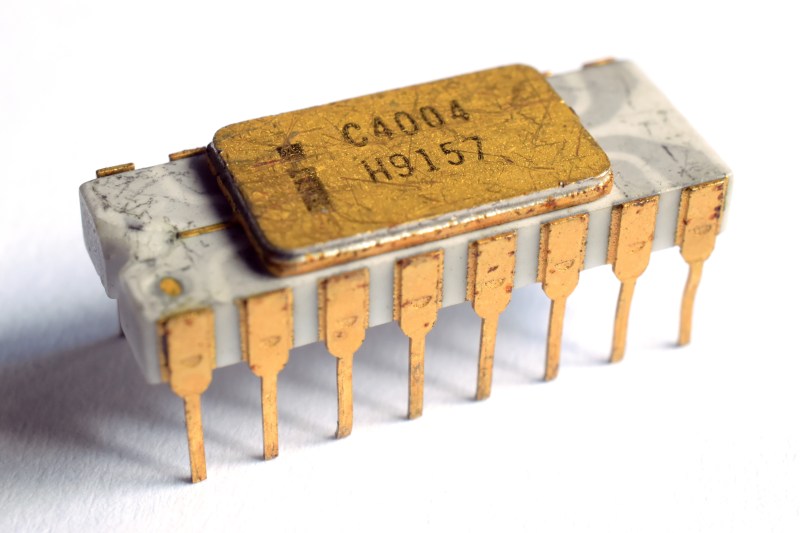

Modern microprocessors are a marvel of technological progress and engineering. At less than a dollar per unit, even the cheapest microprocessors on the market are orders of magnitude more powerful than their ancestors. The first commercially available single-chip processor, the Intel 4004, cost roughly $25 (in today’s dollars) when it was introduced in 1971.

The 4-bit 4004 clocked in at 740 kHz — paltry by today’s standards, but quite impressive at the time. However, what was remarkable about the 4004 was the way it shifted computer design architecture practically overnight. Previously, multiple chips were used for processing and were selected to just meet the needs of the application. Considering the cost of components at the time, it would have been impractical to use more than was needed.

That all changed with the new era ushered in by general purpose processors like the 4004. Suddenly it was more cost-effective to just grab a processor of the shelf than to design and manufacture a custom one – even if that processor was overpowered for the task. That trend has continued (and has been amplified) to this day. Your microwave probably only uses a fraction of its processing power, because using a $0.50 processor is cheaper than designing (and manufacturing) one tailored to the microwave’s actual needs.

Anyone who has ever worked in manufacturing, or who has dealt with manufacturers, knows this comes down to unit cost. Because companies like Texas Instruments make millions of processors, they’re very inexpensive per unit. Mass production is the primary driving force in affordability. But, what if it didn’t have to be?

Professors [Rakesh Kumar] and [John Sartori], along with their students, are experimenting with bespoke processor designs that aim to cut out the unused portions of modern processors. They’ve found that in many applications, less than half the logic gates of the processor are actually being used. Removing these reduces the size and power consumption of the processor, and therefore the final size and power requirements of the device itself.

Of course, that question of cost comes back into play. Is a smaller and more efficient processor worth it if it ends up costing more? For most manufacturers of devices today, the answer is almost certainly no. There aren’t many times when those factors are more important than cost. But, with modern techniques for printing electronics, they think it might be feasible in the near future. Soon, we might be looking at custom processors that resemble the early days of computer design.

This implies that some time “in the near future” (probably a stretchable term) we will have cheap, custom-produced processors. Imagine all the other possibilities that would create!

It missed the underlying forces of economies of scale.

Hmm, it is just another variant of “mask ROM” devices, a.k.a. hackers’ disappointment.

The problem is not so much that there are devices on chip you don’t use or need, but that they are wasting power and raising temperature. It is better to change architecture fundamentally in such a way that data itself is the power (akin to diode logic of the past) and that all the sticky state is kept in solid state non-volatile bi-stable devices (i.e. ferroelectric cells).

And it’s also just another variation on the ARM strategy. When Acorn or ARM (don’t know which name they were using at the time) decided to get out of the silicon business and just design microprocessor cores, this allowed other companies to buy the core logic from ARM, then add whatever peripherals they wanted to make custom integrated system chips. As a result, many products already have ARM-based chips that are exclusive to them, and there are hundreds of different ARM chips being introduced every year, most of which aren’t available in small quantities, and with no long-term support for most. And NONE of them (I’m saying this in the hope that somebody out there knows of the exception and will correct me) just act like ordinary microprocessors, with the data and address buses (or even a few PCIe lanes) available at the pins.

Yep, and CCL do the same with Xap, Imagination with MIPS (though they may be internal only), and I’m sure the Chinese do the same again…

This one has data/address busses exposed, although via an intermediate stage…

(search result, haven’t used it myself)

AT91M40800

http://www.atmel.com/Images/1348s.pdf

Are you looking for something with zero internal memory?

abb: no, I’m looking to add custom peripheral interfaces without the bottleneck of some intermediate interface the chip maker has decided is best for me, this week.

Hm. I’ll have to look into this. This may be just what I’m looking for. Thanks.

On a modern ARM chip you can enable/disable the clock to parts of the chip.

That $25 (in today’s money) cost seems really low to me. I’ve seen other prices online for that item (e.g. $60, in old money). Is this lower $25 figure the marginal cost of manufacturing a single item, once all the very high R+D and setup costs have gone into it, or is this the actual purchase cost for the consumer at the time?

Appears to be taken from http://www.extremetech.com/computing/105029-intel-4004-the-first-cpu-is-40-years-old-today

“We can’t find the original list price, but one source indicates that it cost around $5 to manufacture, or $26 in today’s money.”

It looks to me that the extremetech article you quote was poorly researched. The author of that article admits to not knowing the list price, but should have done some more research. The extremetech article states the price as a rumor, where this author decides to turn rumor into fact. For shame!!

Having already been involved with electronics at that time, I would have loved to see those type of chip prices back then, but that was far from reality.

As a group today, we are fortunate to be able to grab an Arduino, Raspberry Pi, or cheap Atmel microcontroller for our projects. The Intel 4004 required several other expensive chips in the chip set to get the basic functionality we are accustomed to today.

An article in EDN from Jan 15, 1972, which is available online in the EDN archives, titled “One-Chip CPU available for low-cost dedicated computers”, lists the chip prices as $100 for 1-24 units, dropping to $30 for 100-999 units.

interesting idea. But I suspect you’ll never beat the cost savings of high volume production of general purpose chips.

not until we have molecular manufacturing anyway.

Isn’t it possible to change the clock multiplier on most embedded chips for when speed isn’t needed? Can’t you just turn off things like the clock and power to the ADC if it isn’t needed? My phone even turns the radio off in airplane mode. Unless you are dynamically powering down RAM, I’m not sure what else you can achieve.

Yes, most micros that have peripherals built in DO have mechanisms for shutting down any peripherals that aren’t needed, and this has been common practice since chips like the Z-180 in the late 1980s. And in CMOS chips, all you really have to do is gate off the clock going to a peripheral circuit to reduce its power consumption to near-zero.

Yes, this.

The only savings would be in die area, not power consumption.

Saving die area saves money. Saving IOs so you can package the die cheaper even more so.

Sure, if you’re making 1 million (or more) chips, then saving die area might compensate for the one-time costs of having a custom chip produced.

And even then, you’re competing with a generic chip that’s having tens of millions produced so you’re going to incur a higher per-unit cost because they are not making tens of millions of those chips but only one million.
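The trade-off in the two paragraphs above can be put into a rough back-of-the-envelope model. This is only a sketch: the $1,000,000 one-time cost and the 40-cent custom part are made-up illustration values (the 50-cent generic part echoes the article's microwave example), and prices are kept in whole cents to avoid rounding surprises.

```python
def unit_cost_cents(nre_cents, marginal_cents, volume):
    """Per-unit cost once the one-time (NRE) cost is spread over the run."""
    return nre_cents / volume + marginal_cents


def break_even_volume(nre_cents, custom_marginal_cents, generic_unit_cents):
    """Smallest run size at which the custom chip costs no more per unit
    than the generic one, or None if it never catches up."""
    saving = generic_unit_cents - custom_marginal_cents
    if saving <= 0:
        # The custom part is not cheaper to make per unit, so the
        # one-time cost can never be recovered.
        return None
    return -(-nre_cents // saving)  # ceiling division on integers


# Hypothetical figures, for illustration only.
NRE_CENTS = 100_000_000   # assumed $1,000,000 one-time cost
CUSTOM_CENTS = 40         # assumed marginal cost of the trimmed chip
GENERIC_CENTS = 50        # off-the-shelf part at $0.50

print(break_even_volume(NRE_CENTS, CUSTOM_CENTS, GENERIC_CENTS))
print(unit_cost_cents(NRE_CENTS, CUSTOM_CENTS, 1_000_000))
```

With these assumed numbers a one-million-unit run still works out far more expensive per chip than the generic part. If the generic vendor's volume pushes its price to or below the custom part's marginal cost, the function returns `None`, which is the second commenter's point.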

At the TUDELFT we (the group I was in) tried this idea in the early nineties. After a while the realization sunk in that producing millions of unused gates for an application but using the generic processor is always going to be cheaper than leaving them out and doing a special production run.

I’m wondering if say ST does a separate production run for say the chips with and without encryption (I’m thinking say STM32F405 vs STM32F415 for example). I would think that even for such generic processors, unless the volume goes to enormous levels, simply producing the larger chip and burning a fuse in production testing would be cheaper.

You missed a few critical details in the paper – the point is there’s less power consumption in general by reducing routing lengths. There’s a lot that factors in to power consumption on the chip itself that are not obvious when you are designing board-level, or perhaps looking at older generation chips where static power consumption was quite dominant. Generally speaking, this includes things like the fact that longer lines (because you need to route around extra peripherals) or even the load of (disabled) peripherals still physically connected to the bus will take power, either due to transistor leakages or more likely dynamic power consumption due to the extra capacitive load.

Clock gating will reduce power consumption within a peripheral, but you can’t avoid the fact that it still loads bus lines and increases bus capacitance for everything else on the chip.

To give you a sense of how different things are on-chip than off-chip, consider a modern-generation, board-level I/O gate. Say we’re sending a 24MHz clock – just one – from one chip to the other. Even with extremely short traces, a typical new generation I/O pad on an MCU might have 4pF load capacitance, and it actually takes (3.3V)^2 * 4pF * 24MHz = ~1mW just to send one digital signal. If you were running a new generation CPU, you can imagine there are easily tens of thousands or more lines toggling during CPU operation – resulting in a CPU core that would use watts of power! The trick is that on-chip, wires are much, much shorter and thinner (measured in microns, not mm or cm), I/O capacitances are smaller, and transistors are smaller, all of which are together possible because everything is jam packed into as small of an area as possible. There’s also lower core voltage (even a modern “3.3V” chip most likely uses <1.5V for the core, and uses an internal built-in LDO), but that's only going to give you at most one order of magnitude improvement over the board case.

This becomes extremely obvious when you're designing an ultra-low-power design (down to microwatts) when you realize that clock distribution between chips can easily kill your entire power budget…
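The arithmetic in the comment above is the usual switching-power estimate, P = C · V² · f, for a line that charges and discharges once per clock cycle. A minimal sketch that reproduces the figure follows; the 1.2 V core voltage is an assumed value chosen only because it sits under the "<1.5V" mentioned above.

```python
def dynamic_power_watts(capacitance_f, voltage_v, frequency_hz):
    """Switching power of a capacitive load toggled once per cycle: C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


# Figures from the comment: 4 pF pad load, 3.3 V swing, 24 MHz clock.
board_level = dynamic_power_watts(4e-12, 3.3, 24e6)

# Same load and clock at an assumed 1.2 V core voltage, to isolate
# how much the lower voltage alone buys you.
core_voltage = dynamic_power_watts(4e-12, 1.2, 24e6)

print(f"board-level clock line: {board_level * 1e3:.2f} mW")
print(f"same line at 1.2 V:     {core_voltage * 1e3:.2f} mW")
print(f"voltage-only improvement: {board_level / core_voltage:.1f}x")
```

The voltage change on its own gives less than a tenfold reduction, consistent with the "at most one order of magnitude" remark. The rest of the on-chip advantage has to come from the much smaller capacitances.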

Another waste of ‘energy’ is the coding. But I don’t see a solution for that in the near future either.

We now luckily have libraries and packages so we don’t have to reinvent the wheel, but of course, that generates a huge overhead in software or firmware.

If the libraries are slow and nobody reinvents them, then there would never be a solution ever.

If people keep reinventing it all, maybe they’ll get it right someday? At least it gives a chance.

I know this is totally beside the point, but I couldn’t help but react to “The 4-bit 4004 clocked in at 740 kHz — paltry by today’s standards, but quite impressive at the time.”

No, it wasn’t. This was the time when 16-bit minicomputers walked the earth, which were clocked at about the same speed, but could execute complete instructions in a single cycle. And had respectable instruction sets and useful register banks. The 4004 was considered to be useful only as a cost improvement over custom logic for products like calculators. That’s even how Intel marketed them. Even the 8008 was very anemic when compared to the minicomputers of the day. It was only when the 8080 (and Motorola’s 6800) came out that people started to get excited. Even those were poor performers compared with minis, but you could imagine using them for general purpose computing.

” It was only when the 8080 (and Motorola’s 6800) came out that people started to get excited.”

Hel, remember the 8085?

I kid you not kiddies-it was a huge step forward to be able to only have a single supply (5V) and ditch the other two (-5V, 12V)!

Man, I feel old now…

Double bonus! And you got the RIM and SIM instructions.

I’ve learned assembly on the 6502

I often end up at 32.768 kHz even today, so I’m not really all that impressed.

I just can’t see where the savings come from, I can already buy a 32bit micro for a couple dollars with a huge list of features that can all be turned off, and with them turned off it can run for years on a coin cell. Why would I want the compiler to output a custom CPU when I can just turn those features off at runtime? I can use an even cheaper 8 bit with the exact same power consumption, for pennies. Often people pay more for the coin cell.

A couple of dollars for a 32 bit processor? You’re overpaying….

http://www.electronicspecifier.com/micros/stm32f030-stm32-stmicroelectronics-value-line-microcontrollers-32-bits-for-32-cents

In single quantity?

Me thinks not…

“Removing these reduces the size and power consumption of the processor, and therefore the final size and power requirements of the device itself.”

I imagine it depends on the design, but I think power requirements are more easily affected than size.

Read the linked article. It seems that ARM are working on a printed plastic CPU with a target cost of $0.01. They are working with these students in an attempt to reach that goal.

“Suddenly it was more cost-effective to just grab a processor of the shelf than to design and manufacture a custom one – even if that processor was overpowered for the task. That trend has continued (and has been amplified) to this day.”

Unless you are Arduino. Also, you misspelled the word off.

Are they really going to get cheaper than an STM8S001 ?

I could see this make sense if the general micro is binned based on which features tested as working ok / broken on a given wafer, then sorted for use with a known application.

Seems really limited to me though.

If we did experience a return to custom built processors, I would think it would be more likely because of a drop in cost, and an increase in accessibility of, FPGA chips. Processors are far too complicated even for 3d printers to make out of discrete components on a level that would compete with an FPGA.

From Hackaday–

‘Bespoke Processors Might Soon Power Your Artisanal Devices‘–8/14/17:

“…Professors [Rakesh Kumar] and [John Sartori], along with their students, are experimenting with bespoke processor designs that aim to cut out the unused portions of modern processors. They’ve found that in many applications, less than half the logic gates of the processor are actually being used….”

’Find Instructions Hidden In Your CPU‘–6/30/17

”…Typically, several million undocumented instructions on your processor will be found…”

Why don’t you tie all this crap together, Hackaday, and print an article by another individual to whom “critical thinker” is synonymous with “misanthrope”–on where, using simple logic, all this is heading: a five-gate processor with ten million instructions.

Big clue, and major news flash, Sherlocks–members of academia, and their publications, can be bigger idiots than we are. No? Remember ‘Cold Fusion’…? Remember ALL the highly-respected institutions of academia who RACED to beat the others at announcing, “Oh, yeah, we knew that; we discovered ‘cold fusion’ too, just last week…” (Georgia Tech’s president ordered researchers to not only stop any related activity, but to print retractions).

Along with you, Kumar, Sartori, the IEEE Spectrum, and author Samuel K. Moore all deserve to share in the credit for this lunacy.

Your credibility is at stake.

All you will be reducing is silicon and power consumption. Silicon is cheap, time in foundries is not. Silicon foundries typically cost billions to equip and have a relatively short lifespan (thanks, Moore). Fab depreciation is typically the dominant cost per chip. So yes, volume matters: just starting your run could cost millions, so small runs are very expensive per chip. Saving some transistors for your ten thousand units, while saving power, would certainly not save money. Not with modern fab technologies and prices.

+1.

The problem, [Murray], is that none of these individuals acknowledge one simple obvious ECONOMIC fact (which you have zeroed in on): assuming you validly determine which hardware is not used by your application: you’re going to get a silicon vendor to trim out the fat FOR YOU?; AT NO COST?

Don't ever forget that this is absolutely OLD NEWS: it has never escaped the attention of very large penny-pinching organizations that the SOFTWARE applications they wrote and sold in very large quantities used only a very small fraction of the target computer's resources. Why didn't, and haven't these people demanded, from semi manufacturers, only the resources needed to run these applications?

I worked for a semi manufacturer; we would not take an order for less than 100 000 custom chips from a pipsqueak manufacturer, PLUS WE required a hefty advance AND a rock-solid LOC (letter of credit) from a blue-chip financial institution. If you were a well-known LARGE manufacturer (GM, Ford, GE, Lockheed…), the minimums could be reduced to 10 000. We never took an order for 1000, from anybody. We never took an order from any US government agency when the USG had bad financials. Oh, I almost forgot: we would take CIA (cash-in-advance) from anybody–on a minimum order of at least 10 000 pieces.

What should bother everyone who has a modicum of common sense can be stated as: So do you think I’m stupid? You think this is my first rodeo? You think that the fact that you’re a “researcher”, write for an organization which long ago lost respectability by allowing non-engineers to be members, or you run a popular pseudo-technical website, mean that you are correct?

As Ben Franklin said, “We are all born ignorant; to remain stupid requires hard work.” You are getting to the point that what is most impressive about you is how hard you work. Hackaday.

Are you beginning to see the problem with this type of “technical information”?

You’re a moron who lacks perspective.

I beg your pardon–

I do not lack perspective.

I think that oftentimes (all the time?) you have to take these academic papers with a massive pinch of salt. They are usually very top heavy with ‘concepts’ (gimmicks in other words), but low on feasibility and/or common sense. The threshold for acceptance in academic circles (where you’re asking people to say ‘hmm…, possibly’) is much lower than in the real world (where you’re asking people to hand over their cash).

It seems like everyone here in the comments is criticising this concept based on a superficially valid assumption: that economies of scale for silicon fabs aren’t even remotely compatible with low volume bespoke chips. And that is, superficially, valid. Using photolithography patterning of silicon doesn’t scale down. But who said anything about silicon? There’s more than one way to build a transistor, and these researchers are looking into organic transistors and thin film electronics. If you’re minting chips traditionally, of course this isn’t feasible. But if you’ve got the ability to essentially print a processor, with relatively inexpensive equipment with no need for masks and stencils? It’s a fundamentally different game.

If they are using a printing process instead of a photographic one, how are they going to get the same kind of resolution you would get with photolithography? I thought that the ‘photo’ bit meant you could optically shrink down to very small dimensions from a larger mask. Even if you have ‘organic’ transistors, or whatever, for the semiconductor technology, you still need some method to actually fabricate them and wire them up. What resolution is possible with printing? If it is as good as photolithographic resolution, I would be very impressed.

TL;DR – it may not have seemed like it, but virtually every part of this article was actually only relevant for ultra-high volume designs (like RFID tags, where you are literally buying the chip for only one tiny, specific application), and actually pretty much the antithesis of what hackers want.

If the bespoke processor became more popular, it would make all sorts of applications much less hackable.

Imagine buying a super cheap router, running a bespoke processor, and trying to reflash the firmware – only to find out that the “ARM” core they ran was in fact a stripped down bespoke “ARM” core. Apparently, the optimizer realized the proprietary RTOS, conveniently, never multiplies unsigned positive numbers and negative numbers, or happens to never use the carry when it adds two integers. So that logic just doesn’t exist anymore inside their ARM core. Imagine how much of a disaster it’d be trying to flash linux or anything else to it, and hoping it doesn’t crash in some random edge case…

One important thing to realize is that if you’re talking about power in CPUs, you’re very unlikely to be able to do better in an organic or plastic process than a modern CMOS process, unless you’re at least an order of magnitude or two within the size scale of CMOS processes – a lot of the dynamic power consumption comes from wire and gate capacitances, which pretty much scale with size, and you might be able to play with material properties within an order of magnitude but not a whole lot more.

Upon further reading, I think this article has really confused the real situation behind the research. Here’s my understanding of the actual situation described in the article:

This “bespoke” processor is actually more of a technique which analyzes code paths of individual applications, sees which gates are not used in the CPU (not MCU peripherals), and potentially removes those gates. Now this is INCREDIBLY scary, if you think about it – the gates that are being stripped away are literally going to be gates that do things like check that your addition didn’t overflow, multiply maybe negative and positive numbers, etc. So if their code analysis fails in some edge case – like when, I don’t know, the RFID chip gets scanned for the 256th time – then you spent all your NREs on a dud processor, because it is essentially not flashable anymore.

Additionally, say bye-bye to any firmware updates – any firmware change could result in a completely unusable chip.

In other words, this design is actually the complete opposite of what the hacker community wants – it doesn’t strip an MCU to the bone, it strips the CPU inside the MCU to the bone for /one particular application/.
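To make the concern concrete, here is a toy model of that kind of pruning. It is not the researchers' tool, and it is far coarser than a real gate-level analysis: the instruction names and the gate groups they map to are invented for illustration.

```python
# Hypothetical map from instruction to the gate groups it exercises.
GATES_BY_INSTRUCTION = {
    "add": {"alu_adder"},
    "adc": {"alu_adder", "carry_chain"},
    "mul": {"multiplier"},
    "ld": {"load_store"},
    "st": {"load_store"},
    "br": {"branch_unit"},
}

ALL_GATES = set().union(*GATES_BY_INSTRUCTION.values())


def gates_used(program):
    """Union of the gate groups touched by every instruction in the program."""
    used = set()
    for instruction in program:
        used |= GATES_BY_INSTRUCTION[instruction]
    return used


def can_run(program, kept_gates):
    """True if every gate group the program needs survived the pruning."""
    return gates_used(program) <= kept_gates


# Tailor the "chip" to the original firmware.
original_firmware = ["ld", "add", "st", "br"]
kept = gates_used(original_firmware)
removed = ALL_GATES - kept

# A later firmware update that happens to need a multiply.
updated_firmware = original_firmware + ["mul"]

print("removed:", sorted(removed))
print("original runs:", can_run(original_firmware, kept))
print("update runs:  ", can_run(updated_firmware, kept))
```

In this sketch the original firmware still runs on the pruned design, while the update fails because the multiplier is gone. That is the firmware-update problem described above.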

I’m fairly certain that the pragmatIC printing project is suffering from some inherent problems in designing on a large enough scale. As I mentioned before, power scales with size – and since they’re using much larger gates and wires, I’m pretty sure they’re also running into things like thermal and power limits, and I suspect their ARM core is being clocked at most up to 10s or 100s of kHz, to reduce the risk of deforming the plastic substrate… This would explain their interest in cutting power even further, to the point of crippling the CPU to a single application.

That’s the other thing to keep in mind – the point of the plastic IC project is to reduce volume cost, which means the real products they’re targeting are things so cheap that the 5 cent sliver of silicon required in an RFID is too expensive. Like maybe if you wanted to RFID tag each $1 can of soup you bought from the store, or something. So even removing that sliver of silicon, and replacing the bulk of the highly purified material with plastic is worthwhile.

What us hackers care about is reducing upfront costs. These come not from the nature of the resulting materials – even with silicon, older generation tools can cost maybe only 4-5 figures of material to fabricate a wafer, which is probably large enough for a batch order. But it’s the tools themselves, and the cost there scales with dimension. These plastic ARM chips are probably on the micron – 10s of micron scale, which means cheaper tools – but it still means probably upper 5 figures (USD, I might add) in NREs, just in tooling – and that’s per design, not per wafer! And on that scale, the CPUs perform so poorly you are probably more likely to want to just buy that $1, multipurpose MCU anyway, and forget this 5 figure investment on a slow performing, self melting plastic CPU.

A side note – even if you were applying these bespoke principles to modern processors, most bare low end CPUs probably take so little space already it actually costs more money to dice them to the tiny, more difficult to handle size. That’s right, there’s a point when the <$0.10 die is so small it actually gets more expensive to handle and package – so you may as well be adding more features to it instead.

The idea isn’t really new. FPGA based processors have long been configurable for number of registers, inclusion of multipliers, amount of cache if any, etc… Altera’s NIOS for example. Custom silicon uses tweaked ARM cores (as someone else mentioned earlier).

The challenge isn’t really the processor these days; it’s the peripherals & having a solid tool chain behind it. If you can’t write & debug software efficiently, nobody will use your shiny new whatever.

I think that $30 was closer to the 1971 selling price and NOT the price in today’s dollars. That price you quote may have been the manufacturing production cost less R&D to Intel. If I remember correctly, the selling price was even much higher in small quantities, at around $80-$100 ea.

Please provide your source for the 1971 pricing

EDN article from Jan 15, 1972

pricing on the 4004 in 1972 dollars

$100 for 1-24 units dropping to $30 for 100-999 units

http://www.edn.com/electronics-products/other/4320004/One-Chip-CPU-available-for-low-cost-dedicated-computers

Back to the era of the 68K and PowerPC CPUs when Motorola made several variants missing various sub-components. IIRC they even made a 60x missing math co-processor, memory management unit and more. So much stripped out it was the PPC equivalent of the 68020, but considerably faster.

It was understandable given the size of FPU and some customers never used them (mostly telecom).

“Bespoke” and “Artisanal” should be stricken from the American English Language ©

Transmeta tried something similar. Unused and unneeded are big performance changers.