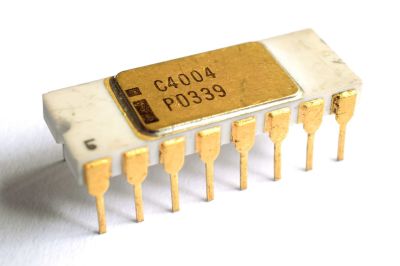

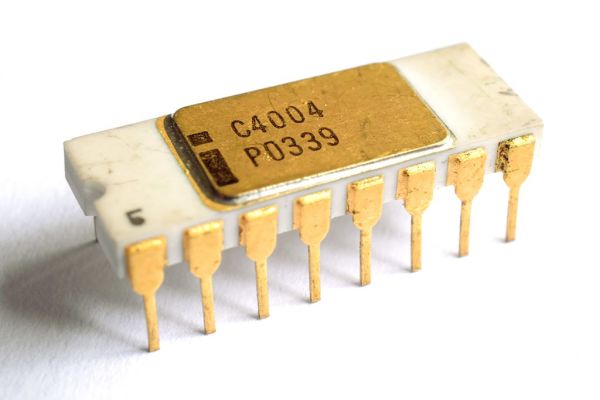

The legendary 6502 microprocessor recently turned 50 years old. To celebrate this venerable chip, which brought affordable computing and video gaming to the masses, [AndersBNielsen] decided to put one to work doing something well outside its comfort zone. Called the PhaseLoom, the project adds a few other components to bring the world of software-defined radio (SDR) to this antique platform.

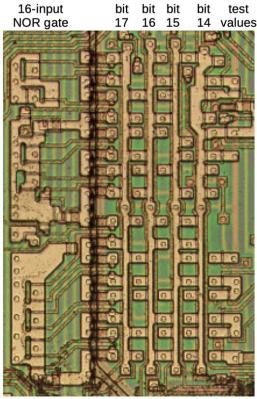

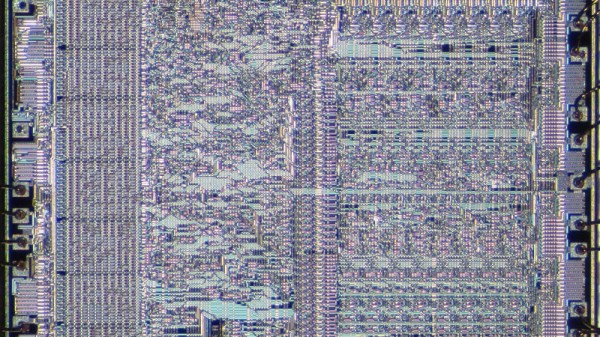

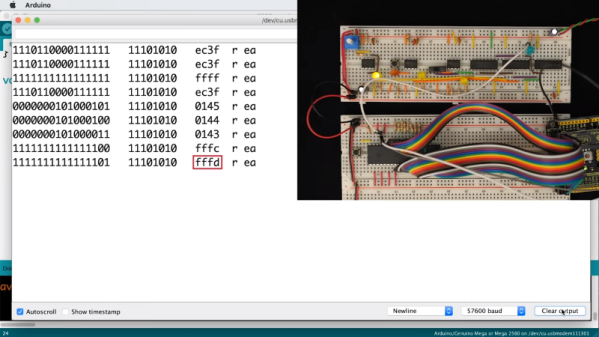

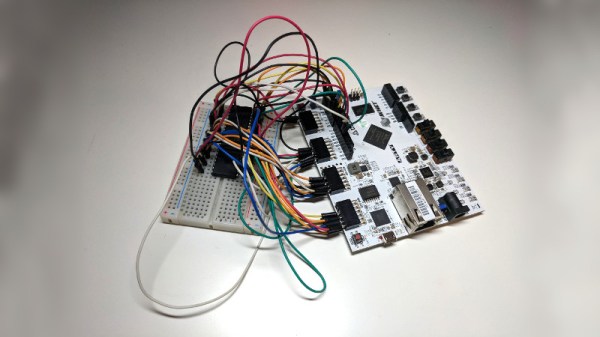

The PhaseLoom is built around an Si5351 clock generator chip, which is configurable over I2C. Its on-chip phase-locked loops (PLLs) synthesize the clock signals the radio needs. The rest of the components, including antenna connectors and various filters, sit on a board in an Arduino-compatible form factor, which lets it work as a shield or hat for the 65uino platform, an Arduino-form-factor 6502 board. The current version [Anders] has been working on is dialed in to the 40-meter ham band, with some buttons on the PCB that allow the user to tune around within that band. He reports that it’s a little bit rough around the edges and somewhat noisy, but the fact that the 6502 is working as an SDR at all is impressive on its own.
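To give a feel for what "configurable over I2C" involves, here is a rough Python sketch of the arithmetic behind an Si5351 frequency plan. It is not taken from the PhaseLoom firmware, which runs on the 6502 itself. The 25 MHz crystal, the choice of an even integer output divider, the conservative 8 to 900 divider limit, and the names `plan_si5351` and `Plan` are all assumptions made for illustration. The P1/P2/P3 encoding follows the formulas in Silicon Labs' AN619 as best we understand them, so check them against the datasheet before writing anything to real hardware.

```python
from fractions import Fraction
from typing import NamedTuple

XTAL_HZ = 25_000_000        # assumed reference crystal; check the actual board
VCO_MIN_HZ = 600_000_000    # Si5351 PLL VCO range, lower bound
VCO_MAX_HZ = 900_000_000    # Si5351 PLL VCO range, upper bound
MAX_DENOM = 1_048_575       # largest 20-bit denominator for the fractional part


class Plan(NamedTuple):
    """Hypothetical container for one PLL + output divider setting."""
    out_div: int            # even integer output (MultiSynth) divider
    a: int                  # PLL feedback multiplier is a + b/c
    b: int
    c: int
    p1: int                 # encoded PLL feedback parameters (per AN619)
    p2: int
    p3: int
    actual_hz: Fraction     # frequency this plan actually produces


def plan_si5351(f_out_hz: int) -> Plan:
    """Pick an even output divider, then solve for the PLL multiplier."""
    # Largest even divider that keeps the VCO at or below its upper limit.
    out_div = VCO_MAX_HZ // f_out_hz
    out_div -= out_div % 2
    if not 8 <= out_div <= 900:  # conservative limit chosen for this sketch
        raise ValueError("output frequency outside the range of this sketch")

    vco_hz = f_out_hz * out_div
    if vco_hz < VCO_MIN_HZ:
        raise ValueError("no even divider puts the VCO in range")

    # Feedback multiplier a + b/c with c limited to 20 bits.
    ratio = Fraction(vco_hz, XTAL_HZ).limit_denominator(MAX_DENOM)
    a, b = divmod(ratio.numerator, ratio.denominator)
    c = ratio.denominator

    # Register encoding of a + b/c.
    p1 = 128 * a + (128 * b) // c - 512
    p2 = 128 * b - c * ((128 * b) // c)
    p3 = c

    actual_hz = XTAL_HZ * ratio / out_div
    return Plan(out_div, a, b, c, p1, p2, p3, actual_hz)


if __name__ == "__main__":
    # 7.1 MHz sits inside the 40-meter band.
    print(plan_si5351(7_100_000))
```

Under these assumptions, retuning within the band means recomputing `a`, `b`, and `c` for the new frequency and writing the resulting parameters to the chip over I2C, while the output divider stays the same for nearby frequencies.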

For those looking to build their own, all of the schematics and code are available on the project’s GitHub page. [Anders] has some future improvements in the pipeline for this project as well, noting that with slightly better filters and improved software, even more SDR goodness can be squeezed out of this microprocessor. If you’re looking to experiment with SDR using something a little bit more modern, though, this 10-band multi-mode SDR based on the Teensy microcontroller gets a lot done without breaking the bank.