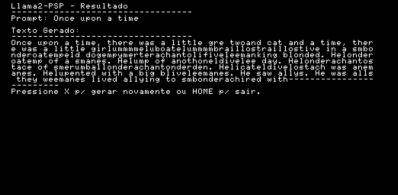

Organic llamas have a rather restricted range in nature: the Andes Mountains, and that’s it. Humans weren’t content to let the fluffy, friend-shaped creatures stay in their natural habitat, however, and they can now be found on every continent except Antarctica. The Llama2 Large Language Model is like that: while it may have started on a GPU somewhere, the fluffiest LLM can now be found just about anywhere, thanks to enterprising hackers like [Caio Madeira], who has ported Llama2 to the PlayStation Portable (PSP).

Ultimately, this project has its roots in Llama2.c by [karpathy], a project we’ve seen used on a Pentium II under Windows 98, DOS machines with 486 processors, and even the venerable Commodore 64, of all impossible things. Now, it’s the PSP’s turn. This implementation uses the same 260K tinystories model as the C64 port, upon which it is based. Of course, the PSP’s RAM has room for a much larger model, but [Caio] apparently prefers to run the tiny model faster on this less-ancient gaming hardware.

It’s getting to the point that it’s harder to find systems that won’t run LLMs than those that do. Given that Llama2 seems to be the new DOOM, it’s probably only a matter of time before virtual llama fur is all over all our old equipment. Fortunately for allergy sufferers, virtual fur cannot trigger a histamine response.

If you know of another system getting LLMs (Alpaca-adjacent or otherwise), send in a tip.