It was bad when kids first started running up cell phone bills with excessive text messaging. Now we’re living in an age where our robots can go off and binge shop on the Silk Road with our hard earned bitcoins. What’s this world coming to? (_sarcasm;)

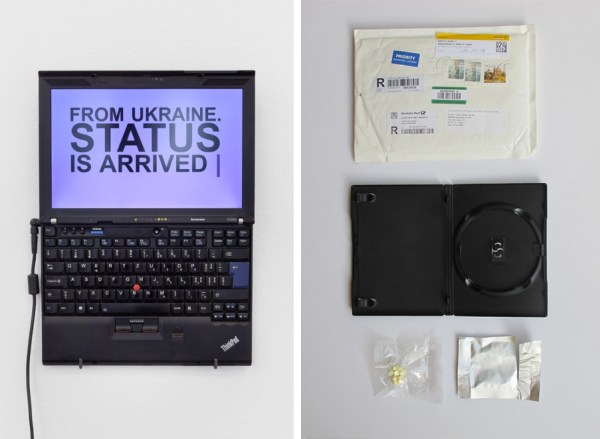

For their project ‘Random Darknet Shopper’, Swiss artists [Carmen Weisskopf] and [Domagoj Smoljo] developed a computer program that was given 100 dollars in bitcoins and granted permission to lurk on the dark inter-ether and make purchases at its own digression. Once a week, the AI would carrying out a transaction and have the spoils sent back home to its parents in Switzerland. As the random items trickled in, they were photographed and put on display as part of their exhibition, ‘The Darknet. From Memes to Onionland’ at Kunst Halle St. Gallen. The trove of random purchases they received aren’t all illegal, but they will all most definitely get you thinking… which is the point of course. They include everything from a benign Lord of the Rings audio book collection to a knock-off Hungarian passport, as well as the things you’d expect from the black market, like baggies of ecstasy and a stolen Visa credit card. The project is meant to question current sanctions on trade and investigate the world’s reaction to those limitations. In spite of dabbling in a world of questionable ethics and hazy legitimacy, the artists note that of all the purchases made, not a single one of them turned out to be a scam.

Though [Weisskopf] and [Smoljo] aren’t worried about being persecuted for illegal activity, as Swiss law protects their right to freely express ideas publicly through art, the implications behind their exhibition did raise some questions along those lines. If your robot goes out and buys a bounty of crack on its own accord and then gives it to its owner, who is liable for having purchased the crack?

If a collection of code (we’ll loosely use the term AI here) is autonomous, acting independent of its creator’s control, should the creator still be held accountable for their creation’s intent? If the answer is ‘no’ and the AI is responsible for the repercussions, then we’re entering a time when its necessary to address AI as separate liable entities. However, if you can blame something on an AI, this suggests that it in some way has rights…

Before I get ahead of myself though, this whole notion circulates around the idea of intent. Can we assign an artificial form of life with the capacity to have intent?