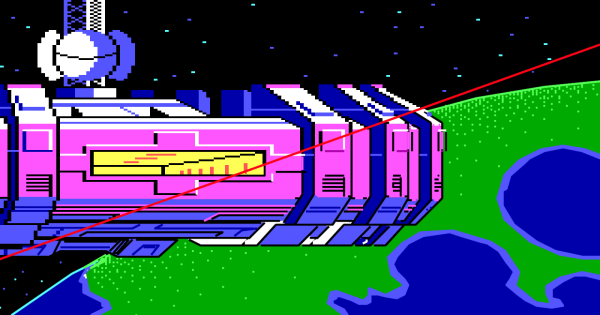

If you played many games from the mid-80s to the 90s, you might remember the iconic graphics of Sierra On-Line’s adventure games. They were brightly colored (16 colors) and dynamic, with some depth. To pay homage, [eviltrout] worked to upscale the images. The backgrounds were rendered at 160×200 in 16 colors and then stretched, yet storing them all as bitmaps, even at only 4 bits per pixel, would have taken all the storage available on the floppy disk. The engineers on the game decided instead to take a vector approach to a raster problem.
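To put rough numbers on that storage problem, here is a small back-of-the-envelope sketch. The resolution and bit depth come from the figures above; the 360 KB floppy capacity is an assumption for illustration (the article does not say which disk format the games shipped on), and `raster_bytes` is a hypothetical helper name.

```python
def raster_bytes(width, height, bits_per_pixel):
    """Size in bytes of one uncompressed bitmap."""
    return width * height * bits_per_pixel // 8

# One 160x200 background at 4 bits per pixel (16 colors).
PICTURE_BYTES = raster_bytes(160, 200, 4)

# ASSUMPTION: a 360 KB floppy; the real disk format is not stated in the article.
ASSUMED_FLOPPY_BYTES = 360 * 1024

# How many raw backgrounds would fit if the disk held nothing else.
PICTURES_PER_DISK = ASSUMED_FLOPPY_BYTES // PICTURE_BYTES

print(PICTURE_BYTES)      # 16000
print(PICTURES_PER_DISK)  # 23
```

Under that assumption, a couple of dozen raw backgrounds would fill the disk before any code, sprites, or sound were stored, which is why a compact list of draw commands is attractive.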

When [eviltrout] set out to upscale the backgrounds, he started by writing some code to extract the draw commands from the engine of the game, known as the Adventure Game Interpreter (AGI). Compared with equivalent PNG versions at the best compression, the AGI vector versions were around half the size. Not bad for a couple of game developers in the 80s. Since it is all vector commands under the hood, it should be relatively simple to draw them at a much higher resolution. At least, that’s what he thought. The first issue was with flood fills: on the larger canvas, gaps open up between lines, and the fill escapes. He tried a few approaches, such as using a low-resolution reference and marching squares, but neither was satisfactory. Eventually, [eviltrout] expanded the flood fills and used thicker lines. He also first rendered to a lower resolution and connected neighboring lines of the same color. Finally, he used ImageMagick to remove white specks from the output.
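The flood-fill leak is easy to reproduce on a toy grid. The sketch below is not [eviltrout]'s code and does not use AGI's actual line or fill routines; `draw_line`, `flood_fill`, `render`, and the box coordinates are all hypothetical, chosen only to illustrate the idea. The box outline is closed at low resolution because two line endpoints land on adjacent pixels. Scale the coordinates by 4 with one-pixel lines and those endpoints drift apart, so the fill escapes. Drawing lines as thick as the scale factor closes the gap again.

```python
from collections import deque

def draw_line(grid, x0, y0, x1, y1, thickness=1):
    """Bresenham line; each point is stamped as a thickness x thickness square."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        for oy in range(thickness):
            for ox in range(thickness):
                px, py = x0 + ox, y0 + oy
                if 0 <= py < len(grid) and 0 <= px < len(grid[0]):
                    grid[py][px] = 1  # 1 marks a wall pixel
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy

def flood_fill(grid, x, y):
    """4-connected fill of empty cells from (x, y); returns the number filled."""
    h, w = len(grid), len(grid[0])
    if grid[y][x] != 0:
        return 0
    grid[y][x] = 2  # 2 marks a filled pixel
    queue = deque([(x, y)])
    count = 0
    while queue:
        cx, cy = queue.popleft()
        count += 1
        for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
            if 0 <= nx < w and 0 <= ny < h and grid[ny][nx] == 0:
                grid[ny][nx] = 2
                queue.append((nx, ny))
    return count

# Hypothetical box in low-res coordinates. The top edge ends at x=8 and the
# right edge starts at x=9: adjacent pixels at 1x, a 3-pixel gap at 4x.
SEGMENTS = [(2, 2, 8, 2), (9, 2, 9, 9), (9, 9, 2, 9), (2, 9, 2, 2)]

def render(scale, thickness):
    """Draw the box at the given scale, fill from inside, return the fill size."""
    size = 12 * scale
    grid = [[0] * size for _ in range(size)]
    for x0, y0, x1, y1 in SEGMENTS:
        draw_line(grid, x0 * scale, y0 * scale, x1 * scale, y1 * scale, thickness)
    return flood_fill(grid, 5 * scale, 5 * scale)

print(render(1, 1))  # 36: the fill stays inside the 6x6 interior
print(render(4, 1))  # far more than the box interior: the fill leaked
print(render(4, 4))  # 576: thick lines keep the fill contained
```

Thickening every line is the bluntest possible fix, and it changes the look of fine detail, which may be part of why the final pipeline combines it with other steps rather than relying on it alone.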

We find the effect charming, but some might say it distorts the art into something the artist never intended. Then again, as with all graphical enhancements, some artistic liberties are taken without the original artist involved. The code is available on GitHub under an MIT license. Video after the break.