AI is the new hotness! It’s 1965 or 1985 all over again! We’re in the AI Renaissance Mk. 2, and Google, in an attempt to showcase how AI can allow creators to be more… creative, has released a synthesizer built around neural networks.

The NSynth Super is an experimental physical interface from Magenta, a research group within the Big G that explores how machine learning tools can create art and music in new ways. The NSynth Super does this by mashing together a Kaoss Pad, samples that sound like General MIDI patches, and a neural network.

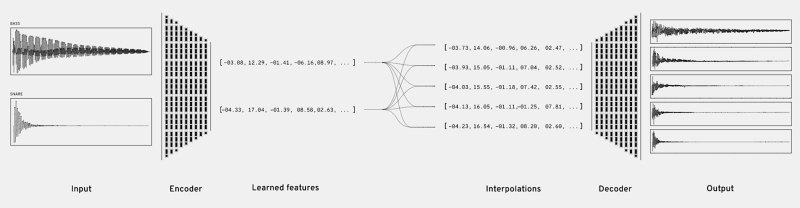

Here’s how the NSynth works: The NSynth hardware accepts MIDI signals from a keyboard, DAW, or whatever. These MIDI commands are fed into an openFrameworks app that uses pre-compiled (with Machine Learning™!) samples from various instruments. This openFrameworks app combines and mixes these samples in relation to whatever the user inputs via the NSynth controller. If you’ve ever wanted to hear what the combination of a snare drum and a bassoon sounds like, this does it. Basically, you’re looking at a Kaoss Pad controlling a rompler that takes four samples and combines them, with the power of Neural Networks. The project comes with a set of pre-compiled and neural networked samples, but you can use this interface to mix your own samples, provided you have a beefy computer with an expensive GPU.
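The pad-to-mix mapping described above can be sketched in a few lines of Python. This is a rough illustration, not the NSynth code: it assumes the four sources sit at the corners of a unit square and crossfades raw sample buffers with bilinear weights, whereas the real project relies on sounds generated ahead of time by a neural network. The names `corner_weights` and `blend` are made up for this example.

```python
def corner_weights(x, y):
    """Bilinear weights for a touch position (x, y) in the unit square.

    Order: bottom-left, bottom-right, top-left, top-right.
    The four weights always sum to 1.
    """
    # Clamp the touch position so stray readings stay on the pad.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return [(1 - x) * (1 - y), x * (1 - y), (1 - x) * y, x * y]


def blend(samples, x, y):
    """Mix four equal-length sample buffers according to the pad position."""
    weights = corner_weights(x, y)
    return [sum(w * s[i] for w, s in zip(weights, samples))
            for i in range(len(samples[0]))]


# Four toy "instruments", each a constant-valued buffer (hypothetical data).
corners = [[1.0] * 4, [2.0] * 4, [3.0] * 4, [4.0] * 4]

# A finger in the middle of the pad weights every corner equally.
center = blend(corners, 0.5, 0.5)
```

Dragging a finger toward one corner pushes that corner’s weight toward 1 and the others toward 0, which is the Kaoss Pad part of the design. The neural network part is in how the corner sounds get made, and this sketch does not attempt that.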

Not to undermine the work that went into this project, but thousands of synth heads will be disappointed by this project. The creation of new audio samples requires training with a GPU; the hardest and most computationally expensive part of neural networks is the training, not the performance. Without a nice graphics card, you’re limited to whatever samples Google has provided here.

Since this is Open Source, all the files are available, and it’s a project that uses a Raspberry Pi with a laser-cut enclosure, there is a huge demand for this machine learning Kaoss Pad. The good news is that there’s a group buy on Hackaday.io, and there’s already a seller on Tindie should you want a bare PCB. You can, of course, roll your own, and the Digikey cart for all the SMD parts comes to about $40 USD. This doesn’t include the OLED ($2 from China), the Raspberry Pi, or the laser-cut enclosure, but it’s a start. Of course, for those of you who haven’t passed the 0805 SMD solder test, it looks like a few people will be selling assembled versions (less Pi) for $50-$60.

Is it cool? Yes, but a basement-bound producer who wants to add this to a track will quickly learn that training machine learning algorithms costs far more than playing with them. The hardware is neat, but brace yourself for disappointment, the same kind AI suffered in the late 60s and the late 80s. We’re in the AI Renaissance Mk. 2, after all.

Interesting. It would be very fun to sync this with my Axe-Fx for additional control features. Gonna have to check into this more.

“Without a nice graphics card, you’re limited to whatever samples Google has provided here.”

It’s just a matter of time, isn’t it? With a slower GPU or just a CPU it’s just a matter of taking longer to run. Which is not that big of a deal. You can’t train new things spontaneously while performing, no, but that isn’t that big of a deal.

Within reason. Kind of like doing Pixar quality with a garden variety PC. A very, very, very long time.

“Not to undermine the work that went into this project, but thousands of synth heads will be disappointed by this project. The creation of new audio samples requires training with a GPU; the hardest and most computationally expensive part of neural networks is the training, not the performance. Without a nice graphics card, you’re limited to whatever samples Google has provided here”

Rent-A-Cloud™

Well, isn’t that the point, to stifle creative development and limit creative potential?

I wouldn’t be surprised if with this tech, people begin copyrighting the qualities of a sound and limiting the uses of that kind of sound to only those with official licenses.

I kid.

This tech is great. I think it would be more widely used if it could be made into a standalone piece of software, portable to all but the weakest of smartphones and computers.

I wonder how it compares with systems that do an FFT on samples then use them as “brushes” that can be operated on by transformation matrices while also being masked by other FFT data where the mask uses a complex transfer function for mixing before the entire “sound image” is put through a reverse FFT to render it as sound. The GPU in an RPi should be able to handle that level of work in real time if the input sounds have their FFT precomputed.
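For anyone who wants to poke at that idea, here is a minimal sketch of the FFT, mask, inverse-FFT pipeline in plain Python. It is only an illustration under stated assumptions: the transform is a textbook recursive radix-2 FFT (input length must be a power of two), the "mask" is a simple per-bin weight, and the helper names `fft`, `ifft` and `masked_mix` are invented here. It skips the transformation-matrix step and says nothing about real-time performance on a Pi.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def ifft(spectrum):
    """Inverse FFT via the conjugate trick, reusing fft()."""
    n = len(spectrum)
    conj = fft([v.conjugate() for v in spectrum])
    return [v.conjugate() / n for v in conj]


def masked_mix(spec_a, spec_b, mask):
    """Per-bin blend: mask weight m takes spec_a, (1 - m) takes spec_b."""
    return [m * a + (1 - m) * b for a, b, m in zip(spec_a, spec_b, mask)]


# Two toy input sounds (hypothetical data), with their spectra precomputed.
n = 8
low = [math.sin(2 * math.pi * 1 * i / n) for i in range(n)]
high = [math.sin(2 * math.pi * 3 * i / n) for i in range(n)]
spec_low, spec_high = fft(low), fft(high)

# An even mask blends both spectra; the inverse FFT renders it back to sound.
mask = [0.5] * n
mixed = [v.real for v in ifft(masked_mix(spec_low, spec_high, mask))]
```

The mask entries could just as well be complex numbers, which is where a per-bin transfer function would plug in.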

Yeah, a GPU with the (literally) thousands of multiply/accumulate cores should be amenable to FFT analysis. We worked on the first TI 320 DSP series that had a whopping ONE core, and it was fixed point.

now we are talking

Samples-schamples.

If only one could hear what the human neural net did 50 years ago under the influence of some of the finest products of S. Owsley. I still strive to recreate some of those sounds as we are just getting somewhere.

The model needs pure functions (rack modules) connected in multidimensional, not x/y, ways; that may break new ground.

Mixes four samples together to create new sounds?!? Unheard of! (In 1985.)

https://www.musicradar.com/news/tech/blast-from-the-past-sequential-circuits-prophet-vs-619031

https://learningmodular.com/the-story-of-the-prophet-vs/

Right?! That is what I took away from the video. It just looks like a vector synth with an updated interface. That being said, the price is right for tinkering.

Uhmmm, the version shown in the video doesn’t look like the one in GitHub. The display looks bigger, like a small tablet.

Has anyone found info about how to make that version?

This is exactly my thinking. Guessing: Aluminum CNC enclosure + RaspPi with a touch display and extended app, which possibly is not in the source code?

exactly, screw the synth, where can I get this 10″ OLED ($2 from China)??????

For what it’s worth, that display on the first prototype looks exactly like the 7″ Raspberry Pi touchscreen that I bought from Adafruit for my home automation controller project. It wasn’t exactly cheap. I’d wager they changed the spec after the first prototype because the display was superfluous in their opinion when a capacitive touch interface would do the job. The diagrams of the little boxy version have a tiny 1.3″ OLED in the top for a display, and the large (4″ ish?) square capacitive touch sensor which is part of the PCB design.

clickbait ftw