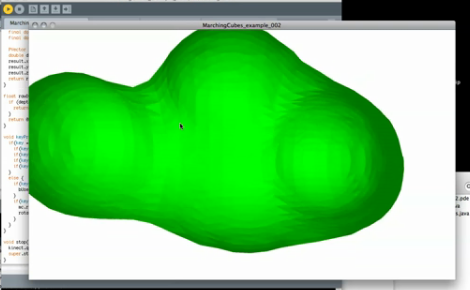

[Mike Newell] dropped us a line about his latest project, Bubble boy, which uses the Kinect's point cloud functionality to render polygonal meshes in real time. In the video, [Mike] goes through the entire process, from installing the libraries to grabbing the code off his site. Currently the rendering looks like a clump of dough (nightmarishly clawing at us with its nubby arms).

[Mike] is looking for suggestions on more efficient mesh and point cloud code, as he is unable to run at any higher resolution than what is shown in the video. You can hear his computer fan spool up after just a few moments of rendering! Anyone good with point clouds?

Also, check out his video after the jump.

[vimeo http://vimeo.com/22542088 w=470]

Rendering a solid from a point cloud is a pretty well-documented problem. One nice technique is described in this presentation from NVIDIA:

http://developer.download.nvidia.com/presentations/2010/gdc/Direct3D_Effects.pdf

It’s used to render particle fluid simulations, but it can be applied to just about any point cloud. As it runs entirely on the GPU, it’s pretty scalable. I was able to render about 30K particles without any problems using this technique.
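The linked slides aren't reproduced here, so treat this as a rough CPU-side illustration of the general screen-space idea rather than NVIDIA's actual method: draw every point into a depth buffer as a small disc, keep the nearest depth per pixel, then smooth the buffer so the discs blend into one surface. The function names, the integer pixel coordinates, and the plain neighbour average (standing in for whatever smoothing filter a real GPU implementation would use) are all assumptions made for the sketch.

```python
INF = float("inf")

def splat_depth(points, width, height, radius):
    """Z-buffer pass: each point covers a small disc; keep the nearest depth per pixel."""
    depth = [[INF] * width for _ in range(height)]
    for px, py, pz in points:
        for y in range(max(0, py - radius), min(height, py + radius + 1)):
            for x in range(max(0, px - radius), min(width, px + radius + 1)):
                if (x - px) ** 2 + (y - py) ** 2 <= radius ** 2:
                    depth[y][x] = min(depth[y][x], pz)
    return depth

def smooth_depth(depth):
    """Average each covered pixel with its covered 4-neighbours (a plain blur)."""
    height, width = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for y in range(height):
        for x in range(width):
            if depth[y][x] == INF:
                continue  # empty background pixel, nothing to smooth
            vals = [depth[y][x]]
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height and depth[ny][nx] != INF:
                    vals.append(depth[ny][nx])
            out[y][x] = sum(vals) / len(vals)
    return out

# Two overlapping points given as (pixel x, pixel y, depth); values are made up.
points = [(2, 2, 5.0), (3, 2, 3.0)]
depth = splat_depth(points, width=6, height=5, radius=1)
smoothed = smooth_depth(depth)
```

The cost here depends on the number of points and the screen size, not on any mesh, which is why this family of techniques moves so comfortably onto the GPU.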

Hope it helps!

I really need to pick up a kinect…

This would be neat to play with if they could combine it with an app like sculptris.

Is the program written in Java and executed on an Arduino? Maybe that is the root of the low performance.

I’ve messed with using MeshLab for converting point cloud data into usable models. Let me tell you, it can be pretty processor intensive. As in, my nice Core i7 CAD machine doesn’t like doing it.

But there has been some work on creating models on the fly from the Kinect. I’m sure with some clever work it could be done, but unfortunately I don’t know how.

Your problem is Processing. Java is wicked slow; you should be using C, C++, or (preferably) Haskell, which compiles to native code. Anything that is interpreted, runs in a virtual machine, or uses any execution path other than compilation to machine code will be slow.

Maybe NURBS instead of Polygons…?

He should have waved at the end =)

Really cool, and I agree with macpod – I also really need to get a kinect…

Is it just me, or should that guy put a copy of the script in a .txt file so you don’t have to copy it off his site?

For all Kinect modders:

http://research.microsoft.com/en-us/um/redmond/projects/kinectsdk/

Have a look at the Point Cloud Library (pointclouds.org).

I think that a really simple way to do it would be to generate the mesh once and then deform it, instead of continuously generating new meshes. If you really want to regenerate meshes (to respond to changes such as people walking in and out of frame), you can spread the regeneration over a couple of frames and sync the model and skeleton again when a new mesh is ready, just to correct errors. By dividing the work over several updates and deforming existing meshes instead of regenerating them, the frame rate should go up considerably.
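A minimal sketch of the "generate once, then deform" idea, assuming the mesh is a regular grid laid over the depth image (which may not be how [Mike]'s code builds it). The triangle list depends only on the grid size, so it is built a single time; each new depth frame only overwrites the z value of the existing vertices. The function names and the tiny made-up frame are purely illustrative.

```python
def build_grid_indices(cols, rows):
    """Triangle indices for a cols x rows vertex grid; computed once, reused every frame."""
    tris = []
    for y in range(rows - 1):
        for x in range(cols - 1):
            i = y * cols + x
            tris.append((i, i + 1, i + cols))             # upper-left triangle of the cell
            tris.append((i + 1, i + cols + 1, i + cols))  # lower-right triangle of the cell
    return tris

def update_vertices(vertices, depth, cols, rows, step):
    """Deform in place: overwrite only the z of each vertex from a new depth frame."""
    for gy in range(rows):
        for gx in range(cols):
            vertices[gy * cols + gx][2] = depth[gy * step][gx * step]

# Setup (once): a 4 x 3 vertex grid that samples every 2nd depth pixel.
cols, rows, step = 4, 3, 2
vertices = [[gx * step, gy * step, 0.0] for gy in range(rows) for gx in range(cols)]
triangles = build_grid_indices(cols, rows)

# Per frame: a made-up 8 x 6 depth image stands in for the Kinect data.
frame = [[float(x + y) for x in range(8)] for y in range(6)]
update_vertices(vertices, frame, cols, rows, step)
```

Per frame, the work is one pass over the vertices with no allocation and no topology rebuild, and raising `step` trades resolution for speed.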

@ferdie – you’re right! I’m sorry, I’ll get a download up there later this afternoon!

@Franklyn – good idea, so just monitor for a change in the object and regenerate that specific area as opposed to the whole thing right? That may take some insane logic but it might be worth a shot.
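The "monitor for a change and regenerate only that area" logic doesn't have to be insane. One hedged sketch: split the depth image into square tiles and flag a tile as dirty when its mean absolute depth change since the previous frame passes a threshold. The tile size, the threshold, and the function name are assumptions chosen for illustration, and real Kinect depth is noisy enough that the threshold would need tuning.

```python
def changed_tiles(prev, curr, tile, threshold):
    """Return (tile_x, tile_y) for tiles whose mean absolute depth change exceeds threshold."""
    height, width = len(curr), len(curr[0])
    dirty = []
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            total, count = 0.0, 0
            for y in range(ty, min(ty + tile, height)):
                for x in range(tx, min(tx + tile, width)):
                    total += abs(curr[y][x] - prev[y][x])
                    count += 1
            if total / count > threshold:
                dirty.append((tx // tile, ty // tile))
    return dirty

# Made-up 8 x 8 depth frames: something moves into the lower-right quadrant.
prev = [[100.0] * 8 for _ in range(8)]
curr = [row[:] for row in prev]
for y in range(4, 8):
    for x in range(4, 8):
        curr[y][x] = 60.0

dirty = changed_tiles(prev, curr, tile=4, threshold=10.0)
```

Only the tiles listed in `dirty` would need their part of the mesh rebuilt; everything else can be drawn from the previous frame.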

@UltimateJim – thanks for the advice, I’ll look into how to render NURBS from a point cloud… seems fairly straightforward.

Not exactly. I was thinking more of generating one point cloud and then using a skeletal structure (that you can track) to move the points around instead. But it seems like what you want is more of a real-time 3D scanner.
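A rough sketch of that skeleton-driven approach, under heavy simplifying assumptions: each point is bound once to its nearest joint and stores its offset from that joint, and on later frames the point simply follows the tracked joint position. Real skinning would also handle joint rotation and blend between several joints; the names and coordinates below are invented for the example.

```python
import math

def bind_points(points, joints):
    """Done once: pair each point with its nearest joint and the offset from that joint."""
    bindings = []
    for p in points:
        j = min(range(len(joints)), key=lambda k: math.dist(p, joints[k]))
        offset = tuple(pc - jc for pc, jc in zip(p, joints[j]))
        bindings.append((j, offset))
    return bindings

def pose_points(bindings, joints):
    """Per frame: place each point at its joint's current position plus the stored offset."""
    return [tuple(jc + oc for jc, oc in zip(joints[j], off)) for j, off in bindings]

# Capture frame: two joints and two cloud points (made-up coordinates).
rest_joints = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
cloud = [(1.0, 1.0, 0.0), (9.0, 0.0, 1.0)]
bindings = bind_points(cloud, rest_joints)

# Later frame: the second joint has moved up by 5 units.
moved_joints = [(0.0, 0.0, 0.0), (10.0, 5.0, 0.0)]
posed = pose_points(bindings, moved_joints)
```

The per-frame cost is one addition per point, with no meshing at all, which is the appeal compared with rebuilding the surface from every depth frame.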

Take a look at openFrameworks; it’s the C++ equivalent of Processing, and some of the libraries Processing uses were co-written in OF.