Plenty of us had at least one math teacher growing up who made the (ultimately erroneous) claim that we needed to learn to do math because we wouldn’t always have a calculator in our pockets. While that reasoning hasn’t held up, knowing how to do math from first principles is still a good idea in general. Similarly, most of us have hugely powerful graphics cards with computing power that PC users decades ago could only dream of, but [NCOT Technology] still decided to take on a project where he works through the math himself to show the fundamentals of how 3D computer graphics are generated.

The best place to start is at the beginning, so the video demonstrates a simple cube wireframe drawn by connecting eight points together with lines. This is simple enough, but modern 3D graphics are really triangles stitched together to make essentially every shape we see on the screen. For [NCOT Technology]’s software, he’s using the Utah Teapot, essentially the “hello world” of 3D graphics programming. The first step is drawing all of the triangles to make the teapot wireframe. Then the triangles are made opaque, which is a step in the right direction but isn’t quite complete. The next steps toward something that looks like a teapot are to figure out which triangles face the viewer at any given moment, hide the ones that face away, and make sure that all of the remaining triangles are drawn in the correct orientation.
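For readers who want to see the idea in code, here is a minimal sketch of the two building blocks described above: projecting a cube’s eight corners onto a flat screen, and testing whether a triangle faces the viewer. This is not [NCOT Technology]’s code. The names `CUBE_VERTICES`, `CUBE_EDGES`, `project`, and `is_front_facing` are made up for illustration, and the sketch assumes a right-handed coordinate system, a camera sitting on the negative z axis looking toward +z, and triangles wound counter-clockwise when seen from outside the object.

```python
# Eight corners of a cube centred on the origin (an assumed layout).
CUBE_VERTICES = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]

# Two corners share an edge when they differ in exactly one coordinate.
CUBE_EDGES = [
    (i, j)
    for i in range(8)
    for j in range(i + 1, 8)
    if sum(a != b for a, b in zip(CUBE_VERTICES[i], CUBE_VERTICES[j])) == 1
]


def project(point, camera_distance=4.0, focal_length=1.0):
    """Perspective-project a 3D point onto a 2D image plane.

    Assumes the camera sits at z = -camera_distance and looks along +z,
    so points farther away are divided by a larger depth and look smaller.
    """
    x, y, z = point
    depth = z + camera_distance
    return (focal_length * x / depth, focal_length * y / depth)


def is_front_facing(a, b, c, camera_distance=4.0):
    """Return True if triangle (a, b, c) faces the camera.

    The normal is the cross product of two edges. The triangle faces the
    camera when that normal points against the camera-to-triangle direction.
    """
    ux, uy, uz = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    vx, vy, vz = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    normal = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
    # Vector from the camera at (0, 0, -camera_distance) to vertex a.
    to_triangle = (a[0], a[1], a[2] + camera_distance)
    dot = sum(n * t for n, t in zip(normal, to_triangle))
    return dot < 0


# The wireframe is just the projected endpoints of every edge.
wireframe_2d = [
    (project(CUBE_VERTICES[i]), project(CUBE_VERTICES[j])) for i, j in CUBE_EDGES
]
```

Drawing a line between each pair in `wireframe_2d` gives the cube wireframe. Skipping every triangle for which `is_front_facing` returns `False` is one common way to hide back faces, though the video may take a different route.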

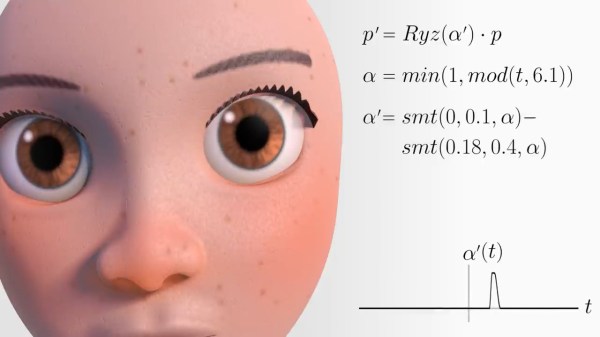

Rendering a teapot is one thing, but to get to something more modern-looking like a first-person shooter, he also demonstrates all the matrix math that allows the player to move around an object. Technically, the object moves around the viewer, but the end effect is the same one that lets us play our favorite games, from DOOM to DOOM Eternal. He notes that his code isn’t perfect, but he did it from the ground up and didn’t use anything to build it other than his computer and his own brain, and now understands 3D graphics on a much deeper level than simply using an engine or API would generally allow for. The 3D world can also be explored through the magic of Excel.
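The “object moves around the viewer” trick can be sketched with a couple of 4×4 matrices. What follows is an illustrative example rather than the code from the video: the helper names (`translation`, `rotation_y`, `mat_mul`, `transform`, `view_matrix`) are hypothetical, and it assumes a camera that only turns left and right (yaw about the y axis) and looks along +z when the yaw is zero.

```python
import math


def translation(tx, ty, tz):
    """4x4 matrix that shifts a point by (tx, ty, tz)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def rotation_y(angle):
    """4x4 matrix that rotates a point about the y axis by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def transform(matrix, point):
    """Apply a 4x4 affine matrix to a 3D point (w is taken to be 1)."""
    v = (point[0], point[1], point[2], 1.0)
    out = [sum(matrix[i][k] * v[k] for k in range(4)) for i in range(4)]
    return tuple(out[:3])


def view_matrix(camera_position, yaw):
    """Move the world instead of the camera.

    The world is first shifted by the negative camera position, then
    rotated by the negative yaw, which undoes the camera's own movement.
    """
    cx, cy, cz = camera_position
    return mat_mul(rotation_y(-yaw), translation(-cx, -cy, -cz))


# A camera five units back from the origin sees the origin five units ahead.
origin_in_view = transform(view_matrix((0.0, 0.0, -5.0), 0.0), (0.0, 0.0, 0.0))
```

Every vertex of the scene gets multiplied by the same view matrix each frame, so walking forward is really the whole world sliding toward the camera.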