Anyone who has ever processed real analog film in a darkroom probably remembers two things: the awkward fumbling in absolute darkness while trying to get the film loaded into the developing reel, and the tedium of getting the timing for each solution just right. This automatic film-developing machine can’t help much with the former, but it more than makes up for that by taking care of the latter.

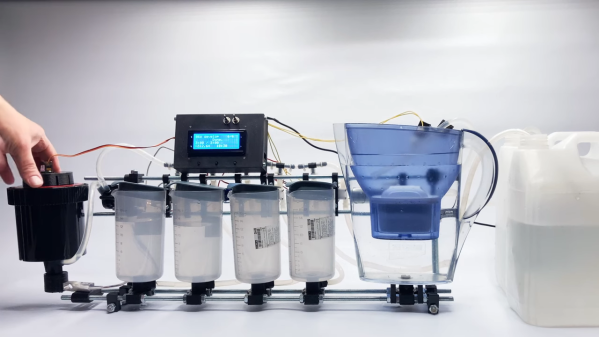

For those who haven’t experienced the pleasures of the darkroom — and we mean that sincerely; watching images appear before your eyes is straight magic — film processing is divided into two phases: developing the exposed film from the camera, and making prints from the film. [kauzerei]’s machine automates development and centers around a modified developing tank and a set of vessels for the various solutions needed for different film processes. Pumps and solenoid valves control the flow of solutions in and out of the developing tank, while a servo mounted on the tank’s cover gently rotates the reel to keep the film exposed to fresh solutions; proper agitation is the secret sauce of film developing.
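To make the fill, agitate, drain cycle concrete, here is a minimal Python sketch of how such a sequence could be planned. It is not [kauzerei]'s firmware: the `Step` fields, the reservoir indices, and the `plan` function are hypothetical names invented for illustration, the timings are placeholders rather than recommended process times, and the sketch ignores how long filling and draining actually take.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str             # label for the bath, e.g. "developer"
    reservoir: int        # hypothetical index of the vessel holding the solution
    duration_s: int       # how long the solution stays in the tank
    agitate_every_s: int  # interval between agitation cycles


def plan(steps):
    """Expand process steps into a flat list of (time_s, action, detail) events."""
    events = []
    t = 0
    for step in steps:
        events.append((t, "fill", step.reservoir))
        # Agitate once at the start of the bath, then at every interval.
        for offset in range(0, step.duration_s, step.agitate_every_s):
            events.append((t + offset, "agitate", step.name))
        t += step.duration_s
        events.append((t, "drain", step.reservoir))
    return events


# Placeholder numbers for illustration only, not real process times.
DEMO = [Step("developer", 0, 120, 60), Step("fixer", 1, 90, 30)]

if __name__ == "__main__":
    for event in plan(DEMO):
        print(event)
```

Keeping the process as plain data is one way a machine like this could switch between black and white and C41 without any change to the control logic.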

The developing machine has a lot of other nice features that really should help with getting consistent results. The developing tank sits on a strain gauge, to ensure the proper amount of each solution is added. To avoid splotches that can come from using plain tap water, rinse water is filtered using a household drinking water pitcher. The entire rig can be submerged in a heated water bath for a consistent temperature during processing. And, with four solution reservoirs, the machine is adaptable to multiple processes. [kauzerei] lists black and white and C41 color negative processes, but we’d imagine it would be easy to support a color slide process like E6 too.
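The fill-by-weight idea is easy to sketch: tare the tank, run the pump, and stop once the added mass reaches the target. The Python below is an illustration under stated assumptions, not the project's actual code. `SimulatedTank` is a made-up stand-in for the pump and load cell, and the flow rate and target mass are placeholder values.

```python
class SimulatedTank:
    """Hypothetical stand-in for the hardware: a pump plus a load cell."""

    def __init__(self, flow_g_per_tick=25.0):
        self.mass_g = 0.0
        self.pump_on = False
        self.flow_g_per_tick = flow_g_per_tick

    def tick(self):
        # One control-loop period; mass only increases while the pump runs.
        if self.pump_on:
            self.mass_g += self.flow_g_per_tick

    def read_mass_g(self):
        return self.mass_g


def fill_to(tank, target_g, max_ticks=1000):
    """Run the pump until target_g has been added; return the mass added."""
    start = tank.read_mass_g()  # tare: measure relative to the current reading
    ticks = 0
    tank.pump_on = True
    try:
        while tank.read_mass_g() - start < target_g:
            if ticks >= max_ticks:
                # Guard against an empty reservoir or a blocked line.
                raise TimeoutError("target mass not reached")
            tank.tick()
            ticks += 1
    finally:
        tank.pump_on = False  # always stop the pump, even on error
    return tank.read_mass_g() - start


if __name__ == "__main__":
    print(fill_to(SimulatedTank(), 300.0))
```

Because the loop only checks the scale once per tick, it can overshoot by up to one tick's worth of flow; a real controller would probably slow the pump near the target or compensate for liquid still in flight.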

This looks like a great build, and while it’s not the first darkroom bot we’ve seen (we even featured one made from Lego Technic once upon a time), this one has us itching to get back into the darkroom again.
