If you’ve ever wondered why NTSC video is 30 frames and 60 fields a second, it’s because the earliest televisions didn’t have fancy crystal oscillators. The refresh rate of these TVs was controlled by the frequency of the power coming out of the wall. This is the same reason the PAL video standard exists for countries with 50Hz mains power. Because this method of timing circuits was so inexpensive, the trend continued, and mains-derived timing was used in clocks as late as the 1980s. [Ch00f] wondered how accurate this 60Hz AC was, so he designed a little test.

Earlier this summer, [Ch00f] bought a 194 discrete transistor clock kit and did an amazing job tearing apart the circuit and figuring out how the clock keeps time. Needing a way to graph the frequency of his mains power, [Ch00f] used a small transformer and an LM311 comparator to output a 60Hz signal a microcontroller can read.

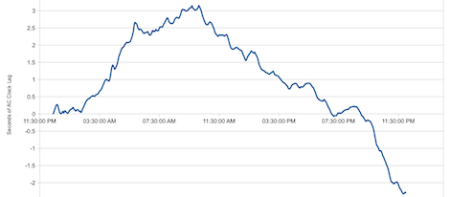

This circuit was attached to a breadboard containing two microcontrollers, one to keep time with a crystal oscillator, the other to send frequency data over a serial connection to a computer. After a day of collecting data, [Ch00f] had an awesome graph (seen above) documenting how fast or slow the mains frequency was over the course of 24 hours.
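As a rough illustration of the measurement idea (not [Ch00f]'s actual firmware), the sketch below turns a list of rising-edge timestamps into frequency readings. It assumes the timestamps are in seconds and come from the crystal-referenced timer; the function name `mains_frequency` and the one-second window are made up for this example.

```python
def mains_frequency(edge_times, window=1.0):
    """Return average frequency (Hz) for each window of rising-edge timestamps.

    edge_times: ascending timestamps in seconds, one per mains cycle,
                measured against the crystal reference (an assumption here).
    window:     minimum span in seconds covered by each reading.
    """
    readings = []
    start_idx = 0
    for i in range(1, len(edge_times)):
        elapsed = edge_times[i] - edge_times[start_idx]
        if elapsed >= window:
            cycles = i - start_idx          # whole cycles between the two edges
            readings.append(cycles / elapsed)
            start_idx = i                   # next window starts at this edge
    return readings


if __name__ == "__main__":
    # Synthetic edges from a source running slightly slow at 59.9 Hz.
    edges = [n / 59.9 for n in range(600)]
    print(mains_frequency(edges))
```

Counting whole cycles between two edges, rather than counting edges inside a fixed window, avoids the plus-or-minus-one-cycle error a fixed window would introduce.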

The results show the 60Hz coming out of your wall isn’t extremely accurate; if you’re using mains power to calibrate a clock, it may lose or gain a few seconds every day. This has to do with the load the power companies see, which explains why changes in frequency are much more rapid during the day when load is high.

In the end, all these changes in the frequency of your wall power cancel out. The power companies do the same thing [Ch00f] did and make sure mains power is 60Hz over the long-term, allowing mains-controlled clocks to keep accurate time.
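To see how short-term deviations turn into clock error and then cancel, here is a minimal sketch. It assumes a clock that simply counts mains cycles and a log of average frequency per fixed interval; `clock_error_seconds` and the sample values are illustrative, not data from [Ch00f]'s graph.

```python
def clock_error_seconds(freq_readings, nominal_hz=60.0, interval_s=1.0):
    """Seconds a cycle-counting clock gains (+) or loses (-) over the log.

    Each reading is the average mains frequency over one interval of
    interval_s seconds. A clock that expects nominal_hz advances by
    f / nominal_hz seconds for every real second.
    """
    error = 0.0
    for f in freq_readings:
        error += (f - nominal_hz) / nominal_hz * interval_s
    return error


if __name__ == "__main__":
    # Hypothetical day: 12 hours slightly slow, then 12 hours slightly fast.
    hourly = [59.99] * 12 + [60.01] * 12
    print(clock_error_seconds(hourly[:12], interval_s=3600))  # about -7.2 s by midday
    print(clock_error_seconds(hourly, interval_s=3600))       # about 0 s after 24 hours
```

A deviation of only 0.01Hz is enough to put the clock several seconds off by midday, yet the day still ends at roughly zero error if the fast and slow periods balance.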

The frequency is also an indication of the load on the grid.

http://jorisvr.nl/gridfrequency.html

Interesting fact: a mains-controlled clock can account for leap seconds, while an atomic clock cannot.

“interesting made up piece of misinformation” perhaps.

The atomic clock has nothing to do with the rotation of the earth, it counts vibrations of a caesium atom. So no, it cannot “account” for leap seconds. Except any atomic clock will have a huge pile of circuitry around it that will show some sort of local time value and the circuitry CAN be adjusted for leap seconds or any other changes in the clock.

In the same way, a mains controlled clock counts by the frequency of the mains power, which is controlled by someone who sets the number of cycles per day, where the day is defined by some accurate clock source (like an atomic clock, adjusted for leap seconds).

So, yes, the mains clock gets its leap seconds taken out for you automatically by some bloke in a power station somewhere.

Overall, you’re probably not going to notice the leap second difference on a mains powered clock, because as the article states it can gain or lose “a few” seconds every day.

You are a wonderful man.

Actually an atomic clock is comprised of a crystal oscillator that is corrected by the decay of the cesium gas. The system loses about a second every million years.

While it’s interesting to see how accurate the line frequency actually is, I should point out a few inaccuracies in the HAD article:

TV frequencies are related to mains frequencies. But I’m pretty sure the reason for this is to prevent flicker when the cameras record a picture that was lit with lights running on the mains, not because early TV’s were synchronized to the line frequency. That would mean that all TV’s would have to be on the same power net as the station from where they’re receiving their signal. Also, what about delays that vary with distance from the transmitter? To compensate for that, every TV would have to have a variable delay line that would have to be hand-adjusted every time you change the station.

So, no: TV’s may not have contained crystals before they had computers, but they always synchronized to the frame sync in the signal, not to the line frequency.

As for line frequency accuracies: I think we had this discussion here before. Power is generated by rotating machinery, and the frequency varies with how fast that machinery runs. So when power demand is low (e.g. at night), the line frequency is lower than during peak hours. But power companies know that there are thousands of clocks that depend on the line frequency so they will compensate for frequency variations and they’ll try to keep the total number of cycles per day constant. They do this by referring to a time standard such as the NIST atomic clock in Colorado or DCF77 in Germany.

So while a mains-based clock may not be very accurate for short-term timing measurements (as shown in the interesting linked article), you probably never have to adjust such a clock except when daylight savings time starts or ends, or after a power outage.

Keep in mind: there are 86400 seconds in a day so it only takes a little over 0.001% (10ppm) inaccuracy to be off by a second a day. Typical crystals are only about 100ppm accurate I think, and the accuracy is very temperature-dependent.
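The arithmetic above is easy to check. This small sketch converts between a fractional frequency error in ppm and the drift it causes per day; the function names are just for illustration.

```python
SECONDS_PER_DAY = 86_400


def drift_seconds_per_day(ppm):
    """Daily drift in seconds for a timebase that is off by `ppm` parts per million."""
    return ppm * 1e-6 * SECONDS_PER_DAY


def ppm_for_drift(seconds_per_day):
    """Fractional error in ppm that produces the given drift per day."""
    return seconds_per_day / SECONDS_PER_DAY * 1e6


if __name__ == "__main__":
    print(ppm_for_drift(1.0))          # error needed to be off by one second a day
    print(drift_seconds_per_day(100))  # drift of a 100ppm crystal
```

One second a day works out to roughly 11.6ppm, and a 100ppm crystal could be off by about 8.6 seconds a day.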

The wall outlet frequency is the easiest obtainable time base with accuracy that approaches that of an atomic clock (receiver) in the long run. And line-based clocks such as the transistor clock mentioned in the linked article are not a bad idea after all.

===Jac

You are right, HAD is wrong.

The tv signal contains the sync, there’s no need for the ac network. In fact, the 15.734 kHz from the line sync is used as the clock for the switch mode power supply in most crt tvs.

All this article did was show the difference between mains and a cheap oscillator.

Shocking that there’s variance – but it’s not on the utility side. Utility frequency is damn near rock solid – it HAS to be for the grid to be interconnectable and switchable.

If you try to connect two power networks that aren’t perfectly in sync, you’ll get a Ghostbusters Crossed The Stream effect (or, well, a spectacular tripping of some very, very big circuit breakers, at a minimum.)

Actually what you’ll get is a massive rotor trying to adjust its position immediately. Also very bad.

I think this has come up before. I’ve worked at a power plant, and one of the most fascinating things is that when the plant was being brought back online, it was a human’s job to watch a gauge that compared phase of the generator to phase of the grid. The generator is spun slightly fast so that the grid “corrects” it when the breaker is closed. By corrects, I mean the relatively infinite power of the grid operates on the generator as if it were a motor, and physically turns it into phase. It’s the operator’s job to time the closing of the breaker so the phase difference isn’t too great.

Mains power distribution is quite fascinating.

Not as rock-solid as you think. All mains power comes from rotating machinery and that machinery slows down or speeds up depending on the load on the grid. You can actually measure the energy being provided by the utility companies versus the energy being consumed on the grid at any point in time just by (precisely) reading the frequency of the mains power from any wall outlet.

That’s interesting work. I’m looking forward to seeing measurements over longer periods of time.

I wonder if the variations the user has noted have any relation to this news item from a short bit ago… http://www.newsmax.com/US/US-SCI-Power-Clocks/2011/06/24/id/401381

reading to the end of his post and then the wikipedia entry, the wiki mentions the proposed relaxing of the utility freq regulations.

I can understand mains frequency not being *precise*, as it varies depending on grid load. But I’m surprised by your claim that it’s not *accurate* and that it can gain or lose seconds per day. According to Wikipedia, power grids in the US and Europe (and presumably other major grids) deliberately ensure that the frequency is very accurate in the long term.

Not sure about US but in the UK the frequency is adjusted so that it is accurate over a 24hr period, so mains synced clocks are pretty accurate.

I was given a tour of a power station originally built in the 1930’s. In the control room was a wind-up clock with pendulum that they used to monitor the ‘actual’ time and adjust the frequency to keep all the A.C. ‘synchronous’ clocks telling the right time.

Early B&W TVs used big laminated iron transformers with leaky magnetic fields. The magnetic field would show up as distortion in the CRT picture since it used magnetic deflection. By choosing a 60Hz TV field rate to match the power line frequency, the distortion would at least be stationary, and hardly noticeable. Crystal oscillators had nothing to do with it, as the video signal itself contains sync pulses. Later, when Color TV was introduced, for technical reasons related to the Color Burst, the TV field rate was subtly changed to 59.94Hz. Older B&W TVs had no problem syncing to this. Soon most color TVs were using switching power supplies (no 60Hz transformers) and were better shielded from external magnetic fields. But occasionally you will see a 60Hz hum bar working its way up the screen as it beats against the TV transmission at 59.94Hz.
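The crawl rate of that hum bar follows from the beat between the two frequencies. The sketch below assumes the color field rate is exactly 60 × 1000/1001 Hz (the usual definition behind the rounded 59.94Hz figure) and that the mains sits at exactly 60Hz; `hum_bar_period` is a made-up name.

```python
def hum_bar_period(mains_hz=60.0, field_hz=60.0 * 1000 / 1001):
    """Seconds for the hum bar to travel once around the picture.

    The bar moves at the beat frequency |mains - field|; if the two
    rates match exactly the bar stands still (infinite period).
    """
    beat_hz = abs(mains_hz - field_hz)
    if beat_hz == 0:
        return float("inf")
    return 1.0 / beat_hz


if __name__ == "__main__":
    print(hum_bar_period())            # color broadcast against 60Hz mains
    print(hum_bar_period(60.0, 60.0))  # B&W case: stationary bar
```

With those assumptions the bar takes a little under 17 seconds per pass, and any drift in the real mains frequency changes that rate.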

Dang, I’m old enough to have seen the bar crawling up the screen. Now I know why. Thanks NateOcean for the interesting bit of info.

Overall this entire post was full of some interesting info.

I would think that was power line hum (it is picked up via the antenna from nearby power lines, and only happened on channels 2 & 3). That was on the analog sets. Analog transmitters basically don’t exist in the US anymore. I got to actually POWER OFF our analog transmitter (WTIU TV, Bloomington, IN) a few years ago.

Regardless of what channel your TV says you are watching, the channel number has no real correlation to the transmitter frequency. Case in point, WTIU still uses channel 30 for station ID (historic, because we used (analog) channel 30 for three decades), although we transmit on channel 14 (470-476 MHz), which is at the very bottom of the UHF frequency band.

Wow, I totally remember a ‘glitch’ slowly crawling up the screen and repeating when I was a kid. I just thought our old-ass B&W TV was some broken piece of junk.

“How’s the 60Hz coming from your wall?”

Running at 50 Hz where it’s supposed to be, thanks for asking.

A fascinating related hack is the use of mains frequency in forensics. By extracting mains hum from audio or video recordings, you can identify when the recording took place by comparing with the record of frequency changes. See Wikipedia http://en.wikipedia.org/wiki/Electrical_network_frequency_analysis
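The matching step can be sketched very simply, assuming the hum has already been extracted into a series of frequency readings sampled at the same rate as the reference log. Real ENF analysis involves much more (filtering, noise handling, confidence estimates); `best_offset` and the sample numbers below are purely illustrative.

```python
def best_offset(reference, snippet):
    """Index in `reference` where `snippet` fits best (least squared error).

    Both are lists of mains-frequency readings taken at the same interval.
    Returns None if the snippet is longer than the reference.
    """
    best_i, best_err = None, float("inf")
    for i in range(len(reference) - len(snippet) + 1):
        err = sum((reference[i + k] - s) ** 2 for k, s in enumerate(snippet))
        if err < best_err:
            best_i, best_err = i, err
    return best_i


if __name__ == "__main__":
    # Hypothetical grid log and a short trace recovered from a recording.
    grid_log = [60.00, 60.01, 59.98, 59.99, 60.02, 60.00, 59.97]
    recording = [59.98, 59.99, 60.02]
    print(best_offset(grid_log, recording))  # 2
```

The returned index, multiplied by the logging interval, gives the estimated time of the recording relative to the start of the log.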

I measured this in Quebec, Canada using a resistor and an optoisolator connected to mains (I was concerned a transformer may introduce noise somehow). The mains frequency showed very little variation, even sampling at around 1.7 MSa/sec on a few different days.

So our telecoms might be a slow, expensive pseudo-monopoly with terrible service… but at least the power grid is good. Good work, Hydro-Quebec!

I also discovered that a 20uH coil with one end connected to a hex inverter was enough to output a 60Hz signal at 5v… but would miss cycles sometimes. With slight biasing or a bigger coil, you could make a mains clock that is not connected to mains power ;)

This is interesting to me, as I found out that the AC power in the Philippines (at least, around Manila) is 220V at 60hz, not the 50hz I expected. Maybe something to do with the US military bases stationed around Manila in the past? The thing that really tipped me off is I have several mechanical flip-clocks with a 110V/60Hz motor that runs the system. I used a step down transformer so I could plug them in, but didn’t expect them to be accurate (50hz vs 60hz). Surprisingly, they kept time well! That led me to take out my Fluke and actually measure the frequency and it was 60hz.

That’s nice – it keeps a lot of my old electronics working with just a step-down transformer.

However, you really don’t want to know how they wire houses here. Two hot leads at 110V/60hz, but 180 degrees out of phase. Two hots and no neutral. And no grounds. Spooky.

So, back to the original topic – I have been wanting to monitor the AC hertz using a PIC12F1840, and now I have an input to the PIC that I can use. So, thanks to [Ch00f].

Spooky indeed! Ground is so overrated… :-)

If you grab both lines will your eyes cross and uncross in sync?

At 220V? Oh, yeah.

Really scary – if you switch off a light to change a burnt-out bulb, make sure NOT to touch anything metal on the bulb. One hot side is switched off, but the other hot side is still – uh – HOT.

I’ve jumpstarted my heart a couple of times already.

Also, if I remember correctly, the mains socket most widely used in Philippines is not even rated for more than 110V. Might be mistaken but it tells a lot about the level of safety concerns. Then again, I guess they have more severe problems to deal with than electric safety… (poverty/corruption/environment etc)

60Hz used in the Philippines came from the American influence back in the early 20th century when the Philippines was an American colony. My guess is it was the same 120V system used in the US ’till WW2, then increased to the present 220V during reconstruction after the war to save on wiring costs.

Jeez, just scanned through the article, so many poor assumptions and sloppy methods I just have to call it to avoid anyone thinking this is a good way to measure something.

Let’s see;

– Why do we really care if 60Hz is 60.00000Hz, I can’t think of any practical application where it might matter.

– Why would a $10 digital watch be more accurate / “like to have been trimmed to an ATOMIC CLOCK”?

– If you wrote better code your UART interrupts would be below the counter interrupt (UART IO isn’t *that* critical for this application), and for that matter why isn’t the micro doing it all via interrupts / hardware counters or somesuch anyway? None of this should be stressing the micro at all.

There’s plenty more in there but I really can’t be doing with the internet argument, just wanted to say “FFS no!”

Hi there. I’m the guy who wrote the article. I thought I’d reply to your comments.

1) The frequency matters because I’m using the frequency to keep time on a clock. Clocks need to be accurate, so while the actual frequency might waver a bit in the short term, it needs to average out to precisely 60Hz in the long term to keep any accumulative drifting from occurring.

2) Perhaps a poor assumption for a $10 watch, but I’ve found in other testing that cheap digital watches tend to keep better time than some much more sophisticated multi-purpose devices that have a clock function. For example, my iPod can lose up to a second or two a day. The iPod’s designer isn’t too worried about that because over the long term, it’ll calibrate its time using the internet, so they probably didn’t go through the effort of precisely tuning the clock.

3) I used the built in hardware interrupts that the AVR offers. If you look on page 58 of the ATMega48a datasheet, you’ll see that the USART interrupts are near the highest priority. Unless I wanted to write my own USART, there is no way to change this priority.

The main issue I ran into was cutting my 1MHz clock down. I wanted to use a hardware prescaler to cut the frequency down as much as I could, but the largest prescaler I could use and still get an integer number of interrupts per second was 1/64. This means that I had to service that interrupt 15,625 times per second or once every 64 microseconds. That didn’t offer nearly enough time for the USART to completely finish its transfer of two bytes at 9600 baud.
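Those numbers can be reproduced with a few lines of arithmetic. The sketch assumes the usual AVR timer prescaler choices (1, 8, 64, 256, 1024) and 10 bits on the wire per byte (8N1 framing); both are assumptions for illustration, not details taken from the firmware.

```python
F_CPU = 1_000_000                   # 1MHz system clock, as described above
PRESCALERS = (1, 8, 64, 256, 1024)  # assumed AVR timer prescaler options


def integer_rate_prescalers(f_cpu=F_CPU, prescalers=PRESCALERS):
    """Prescalers that divide the clock into a whole number of ticks per second."""
    return [p for p in prescalers if f_cpu % p == 0]


def uart_transfer_seconds(n_bytes, baud=9600, bits_per_byte=10):
    """Time on the wire for n_bytes, assuming start + 8 data + stop bits."""
    return n_bytes * bits_per_byte / baud


if __name__ == "__main__":
    largest = max(integer_rate_prescalers())
    ticks_per_second = F_CPU // largest
    tick_period = 1 / ticks_per_second
    print(largest, ticks_per_second)                 # 64 15625
    print(tick_period * 1e6, "us between ticks")
    print(uart_transfer_seconds(2) * 1e3, "ms to send two bytes")
    print(uart_transfer_seconds(2) / tick_period, "ticks elapse during the transfer")
```

Under these assumptions two bytes at 9600 baud take about 2ms, which spans more than thirty of the 64-microsecond timer ticks.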

Sure, I could have done it differently, but this wasn’t really meant to be a super drawn out test. I really wanted to get the whole thing done over the weekend.

Thanks for the comments!

Frequency matters to the power utilities. Especially when joining capacity generated from 2 or more sources. Really bad things happen if my generator has drifted 180 degrees out of phase to your generator and we connect them together to supply power to someone…

There’s only so much headroom to go around, and as the infrastructure gets older, keeping sync becomes increasingly necessary (and, fortunately, more achievable at the same time!).

Interesting, but not the most precise measurement technique. :)

Even a temperature-compensated crystal oscillator will vary a bit, typically a few ppm/yr.

The output of a crystal oscillator can be off from its specification by a few ppb to a ppm to whatever accuracy you ordered it with. Low cost oscillators used in computers are typically within a few ppm. Which, at a few megahertz, is a few cycles per second. Which can add up rather rapidly.

The resonant frequency of the crystal will vary with temperature, as you state. The rate of change is non linear and also varies based on how the crystal is cut. There is a temperature range at which the change in frequency is minimal compared to the temperature change. In other temperature ranges, the delta f per delta t (∆f/∆t) will be higher. This is why high precision oscillators are kept in temperature controlled ovens at about 70-80 degrees C. A good temperature controlled, ovenized oscillator can be good to 1 part in 10e11 over very long periods of time.

The resonant frequency of the crystal will also drift over time as the crystal ages. This also depends on the cut of the crystal, its operational and environmental history, as well as contaminants within the crystal lattice.

Atomic ‘clocks’ are actually nothing more than very accurate frequency standards. These are then, in turn, used to drive clocks. Simple, accurate atomic standards are generally within the reach of even the most casual hacker at this point. Rubidium oscillators are available on eBay and other sites for well under $100US. Highly accurate atomic standards are still pricy, though can be found.

The most accurate standards that most of us can lay our hands on are GPS disciplined oscillators (GPSDOs). Using a GPS receiver which either outputs a 1pps pulse accurate within a few ns or directly presents a 10MHz oscillator, accuracies within 10e-13 or better are achievable in the home lab.

Most high performance test equipment will have an input for an external standard such as a GPSDO. This allows everything on your bench to work to a single reference, thus, you _know_ that the signal coming out of your sig gen is exactly what is displayed on your frequency counter, and the width of that pulse on your scope is what it says it is. Very handy.

Oh, and you can use it to time wall frequency too. Which, as many have said, varies with load and other considerations, but is pretty accurate over a longer period of time. For the real purists, look up the wikipedia article on ‘Allan deviation’.

komradebob’s comment also helps to explain why your handy wrist watch manages to keep such good time: They thermally stabilized the crystal oscillator by strapping it to a 98.6°F constant temperature source.

This is why i have to reset all my AP’s every morning. i have 9 of them that all have to be rebooted periodically. the ethernet on them still works and i can login but i lose wifi on them until i powercycle them. if it were just one, then id assume that it was a bad AP, but 9 of them? different models from the same vendor.

My Dad and I tracked the accuracy of a digital clock (that used the power line for a timebase) vs the NBS (now NIST) time signal broadcast by WWV back in the mid ’70s. Daily variations could be as much as 10 seconds slow when we had brownout conditions, but the clock was always either within a second or so the next morning or a few seconds ahead if they were expecting a big load the next day. The long term drift was pretty much zero.

(Detroit Edison, 1973 or so)

We also used the TV colorburst frequency during the network news as a household frequency reference. Yeah, I guess I’m a geek.

“In the end, all these changes in the frequency of your wall power cancel out. The power companies do the same thing [Ch00f] did and make sure mains power is 60Hz over the long-term, allowing mains-controlled clocks to keep accurate time.”

Don’t count on that! About a year ago they stopped doing that. It was supposed to be an experiment, to see how much equipment still relies on that and if they could get away with permanently not correcting. I don’t know the current status. http://spectrum.ieee.org/tech-talk/energy/the-smarter-grid/power-system-experiment-in-us-means-clocks-will-speed-up

In other words, that feature is deprecated!

TIME: a man made invention.

This reminds me of this:

How the National Grid responds to demand

http://www.youtube.com/watch?v=UTM2Ck6XWHg

I don’t know about the US, but here in Quebec, since power is a provincial business, they have to match the time every 24 hours, meaning that if they up the freq for some time in the day, it has to be balanced later on so that at midnight, it is actually midnight, every day. This prevents error build-up. They achieve that easily here because 80% of the power we use is from hydroelectric facilities, and they spin at about 3600 rpm (60 hz ;) ); by controlling the vanes they can raise or lower it in a matter of seconds.

Thx for posting,very interesting stuff indeed.

Oh and people commenting “THIS IS WRONG” how about being nicer next time? no need to be a doushe here. Just explain it straight and simple like the others do. thx ;P

There’s also this:

http://www.cliftonlaboratories.com/measuring_60_hz_frequency.htm

http://www.nerc.com/page.php?cid=6|386

Based on the terse update posted in red near the top of the page, it appears that Time Error Correction may not have been suspended on the US power grid.

Can anyone confirm that the grid is still corrected to prevent accumulated error?

I wish I could remember where I read it or heard it. Either an article online or a podcast, but I remember hearing about how it used to be the job of individuals around the United States to keep the power on an average of 60Hz over the course of days. So if they drifted a little short of 60Hz one day, they might raise the frequency on the grid for the next day or two to make up for the difference. The reason was to maintain the accuracy of equipment that depends on the grid.

the following is the property of its respective owners, whoever they were;

Philip Peake (aka PJP) says:

June 25, 2011 at 4:41 pm

—CLIP—

An interesting story my EE lecturer told was when he was young,

working for a manufacturer of generators.

They installed a new generator weighing several tons.

Fired it up, and being in a hurry only monitored one phase (3-phase generator).

When it was in sync, they threw the big switch.

That was when they discovered that the other two phases were reversed.

The generator ripped its bolts out of its concrete foundation,

and disappeared through the generator shed roof.

There are amazing amounts of power available with a direct connection to the grid.

—CLIP—

You have to admit though it’s still a pretty good daily average for a technology that dates back to Nikola Tesla! And as for the 50/60 Hz split, Tesla designed the original AC generators for Westinghouse for 60hz, Siemens, who built the first AC generators in Germany, built theirs at 50hz, and here we are today! (60hz/220v worldwide!)

Hammond organs made prior to 1973 require the 60 hz in the US. The tone generating mechanism runs via a synchronous motor (invented by Mr Hammond). A fascinating mechanism of a bygone era.

So then what this really boils down to, is that on those days on which the clock feels slow, you just might be right.

The frequency shift is a little bit more complex than any of the explanations. If a power plant wants to shed load it retards its output phase a little bit from the phase of the mains so it is just a bit behind. At the same time another power plant may be able to take up some additional load and to do this it advances its phase slightly to get ahead of the phase of the mains. It is not a lot of phase shift, but it’s very handy for controlling how much power the mains tries to draw from YOUR plant. Now… all the power plants do this pretty much independently. I’m sure the communication has been upgraded since the old days, but it used to be that the dam would call the coal plant and explain they were going to shed some load so it should expect a bit more load to be drawn.

If you have two equal AC power sources, the one leading in phase will carry a higher percentage of the load.

Anyways… all this load shifting by phase shifting is still pretty much done by each plant individually… and the effect on the overall power grid is that as plant A shifts, plant B compensates, plant C has to follow plant B, D wasn’t watching and nearly flubs it and has to quickly follow C… etc…. with the overall net effect to the grid as a whole being that it loses or gains a few cycles per day here and there from all the phase shifting going on.

They could coordinate this and lock the grid to 60Hz, period, and let each plant just phase shift relative to that… but so far as my 20 yr old knowledge says they don’t coordinate that well, it’s been easier to just let it drift a bit and measure it and correct for it periodically. Hence the shifting and correction periods we can measure. At the time my knowledge was fresh it was just too many power plants to coordinate that well so they chose periodic corrections to reduce the workload.

The reason they wanted to correct for it was because everyone’s clocks used the 60Hz (or 50Hz) as their timebase.

Now… as for the relationship to television, that was the other way around. If the vertical sync was running at a different frequency to the mains you would see a black bar slowly travelling up or down the screen constantly. The TV stations would have to match their vertical sync to the power line for their locality so as to prevent their viewers from experiencing this annoyance.

The uncertainty is not 60HZ, it is why other countries use 50HZ.

I couldn’t figure out the title on first glance now i get the point. Great post