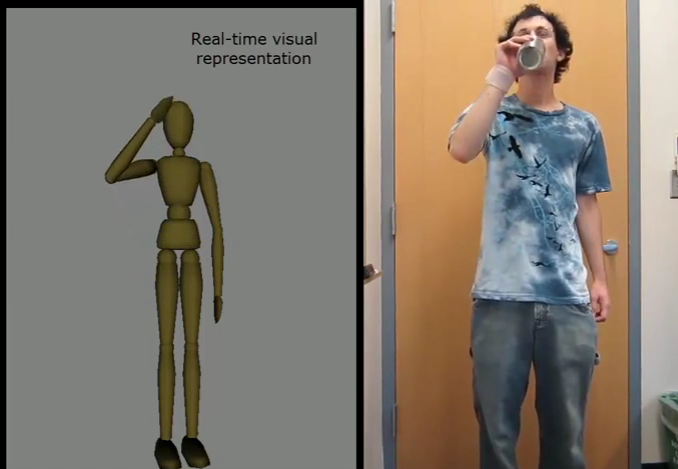

This guy takes a drink and so does the virtual wooden mannequin. Well, its arm takes a drink because that’s all the researchers implemented during this summer project. But the demo really makes us think that suits full of IMU boards are the next generation of motion capture. Not because this is the first time we’ve seen it (the idea has been floating around for a couple of years) but because the sensor chips have gained incredible precision while dropping to bargain basement prices. We can pretty much thank the smartphone industry for that, right?

Check out the test subject’s wrist. That’s an elastic bandage holding the board in place. There’s another one on his upper arm, hidden by his shirt sleeve. These two are enough to provide accurate position feedback to make the virtual model move. In this case the sensor data is streamed to a computer over Bluetooth, where a Processing script maps it onto the virtual model. But we’ve seen similar 9-axis sensors in projects like this BeagleBone sensor cape. It makes us think it would be easy to put an embedded system like that on the back of a suit and have it collect data from sensor boards all over the test subject’s body.
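The "two sensors are enough" claim can be made concrete with a small forward-kinematics step: chain the upper-arm and forearm orientations from a fixed shoulder to get elbow and wrist positions. This is a minimal Python sketch, not the project's Processing script. It assumes (hypothetically) that each board reports its segment's orientation as a unit quaternion (w, x, y, z) in a shared world frame, that both bones point along +x in the rest pose, and that the shoulder does not move. The names `quat_rotate` and `arm_positions` are illustrative.

```python
import math

def quat_rotate(q, v):
    """Rotate 3-vector v by unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * (q_vec x v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + (q_vec x t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )

def arm_positions(shoulder, q_upper, q_fore, upper_len, fore_len):
    """Chain world-frame segment orientations into elbow and wrist positions.

    Assumption: in the rest pose both bones point along +x.
    """
    rest_bone = (1.0, 0.0, 0.0)
    upper_dir = quat_rotate(q_upper, rest_bone)
    elbow = tuple(s + upper_len * d for s, d in zip(shoulder, upper_dir))
    fore_dir = quat_rotate(q_fore, rest_bone)
    wrist = tuple(e + fore_len * d for e, d in zip(elbow, fore_dir))
    return elbow, wrist

if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    half = math.sqrt(0.5)
    bent = (half, 0.0, 0.0, half)  # forearm rotated 90 degrees about z
    elbow, wrist = arm_positions((0.0, 0.0, 0.0), identity, bent, 0.30, 0.25)
    print(elbow, wrist)  # wrist is approximately (0.30, 0.25, 0.0)
```

The segment lengths (0.30 m and 0.25 m) are made-up values. A real rig would also need a per-sensor calibration that aligns each board's frame with its bone.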

Oh who are we kidding? [James Cameron’s] probably already been using this for years.

IMU: Inertial Measurement Unit

http://en.wikipedia.org/wiki/Inertial_measurement_unit

IMU for motion capture suits isn’t particularly new:

http://www.xsens.com/en/general/mvn

What’s interesting is that you could build one at home now. For a few hundred you can get quite a lot of sensors.

Actually, the sensors haven’t been the problem for years now. The problem is the highly nontrivial math this requires (sensor fusion, inverse kinematics, skeletal animation). The electronics are the easy part here. These days, most of the cost of commercial mocap packages is in the software.
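Here is the simplest end of "sensor fusion": a complementary filter for a single tilt angle. It trusts the integrated gyro over short timescales and the accelerometer's gravity reading over long ones. This is a swapped-in textbook technique, not what commercial packages or this project necessarily use. The axis convention (tilt = atan2(ax, az)) and the blend factor `alpha` are assumptions. Full 3D orientation fusion is where the genuinely nontrivial math starts.

```python
import math

def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyro rate and accelerometer tilt into one angle estimate (radians).

    samples: iterable of (gyro_rate_rad_s, accel_x, accel_z)
    Assumption: tilt about the sensor's y axis equals atan2(ax, az) when at rest.
    """
    angle = None
    estimates = []
    for rate, ax, az in samples:
        accel_angle = math.atan2(ax, az)  # tilt implied by the gravity direction
        if angle is None:
            angle = accel_angle  # initialize from the accelerometer
        else:
            # Short term: follow the integrated gyro. Long term: pull toward the accelerometer.
            angle = alpha * (angle + rate * dt) + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates

if __name__ == "__main__":
    # Stationary sensor tilted 0.2 rad, gyro with an assumed 0.01 rad/s bias, 100 Hz for 20 s
    samples = [(0.01, math.sin(0.2), math.cos(0.2))] * 2000
    print(complementary_filter(samples, dt=0.01)[-1])
```

With a constant gyro bias b, the error settles at alpha * b * dt / (1 - alpha) (about 0.005 rad here) instead of growing without limit as plain integration would. The catch is that the accelerometer also sees the limb's own acceleration, which is one reason real suits need more elaborate filters.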

It is very unlikely to be something an average hobbyist will be able to implement at home.

I’ve seen software sensor fusion libraries for the sensors this guy is using. Also, it’s forward kinematics, not inverse kinematics, that you use for this kind of motion tracking, and skeletal animation is easy peasy once you have the angles in the right format (the conversion is also well known and documented). It takes a bit of time to put everything together, but it’s not rocket surgery.
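Here is one example of the "well known" conversion step. Many fusion libraries output orientation as a unit quaternion, while some animation tools and file formats expect Euler angles. The sketch below uses the common Z-Y-X (yaw, pitch, roll) convention. Which convention a particular rig or format expects is an assumption you would need to check.

```python
import math

def quat_to_euler_zyx(w, x, y, z):
    """Convert a unit quaternion to (roll, pitch, yaw) in radians, Z-Y-X order."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp so rounding near +/-90 degrees of pitch cannot push asin out of its domain
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw

if __name__ == "__main__":
    half_angle = math.radians(45)  # quaternion for a 90 degree turn about z
    angles = quat_to_euler_zyx(math.cos(half_angle), 0.0, 0.0, math.sin(half_angle))
    print([round(math.degrees(a), 6) for a in angles])  # roll 0, pitch 0, yaw 90
```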

I’m guessing ease of use is the reason the mocap packages are expensive: editing imperfect data and binding it correctly to different types of characters need a good interface.

This would be cooler if I hadn’t seen a full-body suit with a more impressive character yesterday at AUVSI, from the company http://www.yeitechnology.com/

Still awesome to see though.

As Sphere said, accel/gyro-based motion capture suits are already around: there’s the Xsens, the Gypsy Gyro, and a couple of others I’ve seen.

One problem is the need for frequent recalibration. It’s particularly bad with gyro-only suits, but even with more sensor types you generally get only a couple of minutes of high-quality mocap before you have to go back to the T-pose.
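This rough illustration shows why the clock is ticking. Integrating a gyro with even a small uncorrected bias produces an angle error that grows linearly with time. The 0.05 deg/s bias and the 100 Hz rate below are illustrative assumptions, not measured figures for any particular suit. Re-estimating the bias while the wearer holds still is only one part of what a real T-pose recalibration does.

```python
def integrate_gyro(rates_deg_s, dt, bias_deg_s=0.0):
    """Integrate angular-rate samples (deg/s) into an angle (deg), minus an estimated bias."""
    angle = 0.0
    for rate in rates_deg_s:
        angle += (rate - bias_deg_s) * dt
    return angle

def estimate_bias(stationary_rates_deg_s):
    """Average gyro output while the sensor is held still (e.g. during a T-pose)."""
    return sum(stationary_rates_deg_s) / len(stationary_rates_deg_s)

if __name__ == "__main__":
    dt = 0.01         # assumed 100 Hz sample rate
    true_bias = 0.05  # assumed bias in deg/s; the limb is not actually rotating
    two_minutes = [true_bias] * int(round(120 / dt))
    print(integrate_gyro(two_minutes, dt))        # about 6 degrees of drift
    bias = estimate_bias([true_bias] * 100)       # one second standing still
    print(integrate_gyro(two_minutes, dt, bias))  # about 0 once the bias is removed
```

In practice the bias itself wanders over time and with temperature, so a bias estimate goes stale and you end up back in the T-pose.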

The problem is compounded by any electromagnetic interference. This is especially bad for foot-mounted sensors: try to get consistent results out of one of these rigs and you’ll find out where the cables run through your floor reeeal fast.
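Here is why stray fields matter so much. Suits that include magnetometers typically use them as a compass to correct yaw drift, and any local field adds directly to the Earth's. The sketch below assumes a level sensor. It uses illustrative numbers: about 20 µT for the horizontal component of the Earth's field, which varies by location, and a hypothetical 5 µT stray field from a floor cable. The heading convention (angle from +x toward +y) is also an assumption.

```python
import math

def heading_deg(mx, my):
    """Heading of the horizontal magnetic field in degrees, measured from +x toward +y.

    Assumes the sensor is level, so no tilt compensation is applied.
    """
    return math.degrees(math.atan2(my, mx)) % 360.0

earth_h = 20.0  # assumed horizontal Earth field in microtesla, pointing along +x
stray = 5.0     # hypothetical perpendicular field from a cable under the floor

clean = heading_deg(earth_h, 0.0)
disturbed = heading_deg(earth_h, stray)
print(clean, disturbed)  # 0.0 and roughly 14 degrees
```

A constant offset like this could be calibrated out. A cable's field, however, changes as the foot moves closer to or farther from it, so the error shows up as inconsistent yaw rather than a fixed bias.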

Nevertheless, sensor suits blow optical mocap away for ease of use. You can use a smaller space, you can occlude the space with props, and you get a much better idea of how the mocap’s going (because there’s no post-process marker cleanup). So while we won’t see sensor suits replacing optical mocap for movies anytime soon, they’re great for roughing out animation clips quickly.

there is already this

http://youtu.be/MSTge5IDxF4

The future is optical mocap with no suits, markers or green rooms, just a bunch of stationary cameras recovering 3D in real time.

Ain’t happening. The markers are used because they are intrinsically more accurate, and the animation director can put them exactly on the point of interest to be tracked. Ease of use is secondary with these setups; the quality of the recovered data is what matters most.

They’re also much cheaper and need almost no filtering.

I don’t know about [James Cameron], but it was used very successfully by [Seth MacFarlane] for live mocap on the movie ‘Ted’ while he was on set directing. I’d add a few links, but I’m going to be lazy and assume everyone’s Google-fu is more than good enough.

Isn’t the Wii MotionPlus about $10? It does the same thing and works over I2C, doesn’t it?

Those are pretty cheap indeed. Do they also have an accelerometer, gyroscope and magnetometer?

I just don’t believe it. The cheapest IMUs with the precision needed for joint tracking cost $80-200 each, and you need at least 12 of them to cover the basic joints.
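Taking those figures at face value, the IMU boards alone for a basic 12-joint suit would cost:

$$
12 \times \$80 = \$960 \qquad\text{to}\qquad 12 \times \$200 = \$2400
$$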

well, not new… http://www.xsens.com/en/movement-science/mvn-biomch

maybe more open/accessible

Here at the uni, chips are being developed for 3D positioning with ~1 mm accuracy, based on 60 GHz triangulation. The first tests in a lab environment were very promising. Over the next year or so, a few PCBs will be made to test true in-the-field (no pun intended) accuracy, but even with 5 mm resolution, imagine what you could do with those.
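The comment doesn't say how those chips turn radio measurements into a position. One common approach for range-based radio positioning is trilateration, a swapped-in technique here and not necessarily what that project does. You measure distances to fixed anchors at known positions and solve for the point. The sketch assumes exact distances to four non-coplanar anchors. Subtracting the first sphere equation from the others leaves three linear equations, which are solved with Cramer's rule. A real system would use more anchors and a least-squares fit to cope with noisy ranges.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(a, b):
    """Solve the 3x3 linear system a * p = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("anchors are coplanar; position is not determined")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the right-hand side
        solution.append(det3(m) / d)
    return tuple(solution)

def trilaterate(anchors, dists):
    """Position from exact distances to four anchors (first four entries are used).

    From |p - a_i|^2 = d_i^2, subtracting the i = 0 equation gives
    2 (a_i - a_0) . p = |a_i|^2 - |a_0|^2 - d_i^2 + d_0^2.
    """
    (x0, y0, z0), d0 = anchors[0], dists[0]
    rows, rhs = [], []
    for (xi, yi, zi), di in zip(anchors[1:4], dists[1:4]):
        rows.append([2 * (xi - x0), 2 * (yi - y0), 2 * (zi - z0)])
        rhs.append((xi * xi + yi * yi + zi * zi) - (x0 * x0 + y0 * y0 + z0 * z0)
                   - di * di + d0 * d0)
    return solve3(rows, rhs)

if __name__ == "__main__":
    anchors = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0), (0.0, 0.0, 4.0)]
    target = (1.0, 2.0, 3.0)
    dists = [math.dist(a, target) for a in anchors]
    print(trilaterate(anchors, dists))  # approximately (1.0, 2.0, 3.0)
```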

Aren’t those stationary things and totally unusable outside the specially prepared studio?