The most popular computer ever sold to date, the Commodore C-64, sold 27 million units total back in the 1980s. Little is left to show of those times, the 8-bit “retro” years when a young, long-haired, self-taught engineer could, through sheer chance and a fair amount of determination, sit down and design a computer from scratch using a mechanical pencil, a pile of data books, and a lot of paper.

My name is Bil Herd, and I was that long-haired, self-educated kid who lived and dreamed electronics and who, with the passion of youth, found himself designing the Commodore C-128, the last of the 8-bit computers, which somehow managed to include many firsts for home computing. The team I worked with had an opportunity to slam out one last 8-bit computer, provided we accepted that whatever we did had to be completed in 5 months… in time for the 1985 Consumer Electronics Show (CES) in Las Vegas.

We (Commodore) could do what no other computer company of the day could easily do: we made our own integrated circuits (ICs), and we owned the two powerhouse ICs of the day, the 6502 microprocessor and the VIC video display IC. This strength would result in a powerful computer, but at a cost: the custom ICs for the C-128 would not be ready for at least 3 of the 5 months, and in the case of one IC, it would actually be tricked into working in spite of itself.

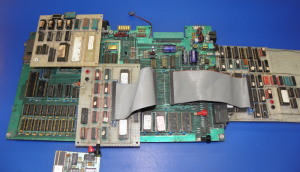

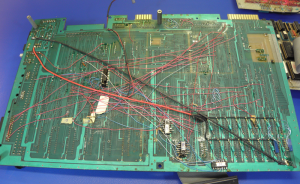

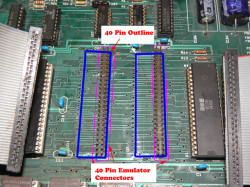

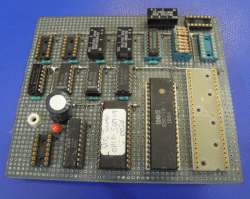

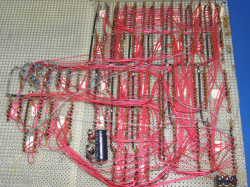

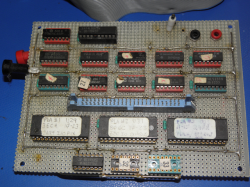

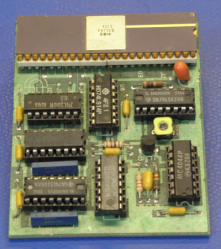

Before the CES show, before production, before the custom ICs became available, there was no choice but to hack in order to make the deadlines. And by “hack” I mean we had to create emulator boards out of LS-TTL chips that could act like the big 48-pin custom VLSI chips that Commodore/MOS was known for.

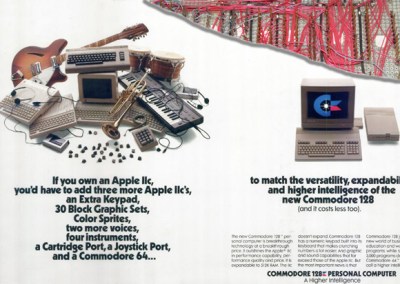

To add to the fun, a couple of weeks later the marketing department, in a state of delusional denial, put out a press release guaranteeing 100% compatibility with the C64. We debated asking them how they (the marketing department) were going to accomplish such a lofty goal, but instead settled for getting down to work ourselves.

As the project progressed, we realized that this most likely was going to be the final 8-bit system to come out of Commodore. We began shoving in as many features as could fit in a 5-month time frame. Before we were done, we would have a dual-processor, triple-OS, dual-monitor (40 and 80 columns simultaneously) machine that was the first home computer to break the 64k barrier. We started referring to the C128 as 9 pounds of poop in a 5-pound bag; we couldn’t quite get 10 pounds to fit. We also joked about turning out the lights on our way out the door, as we knew that the 8-bit era was coming to a close.

The C128 would require two brand-new 40-pin custom chips, a Memory Management Unit (MMU) and a master Programmable Logic Array (PLA), and the venerable but scary VIC-II video chip needed to be re-tooled. We also had the chip guys bond up a very special 48-pin version of the 6502 microprocessor, and we decided to use Commodore’s newest 80-column chip, which by itself almost caused us to miss CES. (But that’s a different story.)

So here is where the need for some serious hacking comes in. We needed to start writing software (a whole new ROM Kernel and Monitor, and a brand-new version of BASIC featuring structured commands), we needed to start the process of making PCBs, and we needed to start debugging the hardware and understanding the implications of using 128k of DRAM (yes, “k”, not M, G, or T), which was a first, as was the MMU, the first in a home computer. Along the way it turned into a dual-processor 6502/Z80 system with a simultaneous 40-column TV display and 80-column monitor output. Home monitors didn’t really exist yet; we were kind of counting on having that done in time too, along with a new hard drive.

What we ended up doing was designing the first PCB to take either finished 40-pin DIP chips or 40-pin emulator cables leading to emulator boards built from 74LS chips. Combined with some rather cranky PLAs (the FPGAs of the day), delay lines, and whatever else we could find, those boards acted close enough to a custom chip that the programmers could keep working.

Our construction technique was to put on the PCB as much as we knew we needed for sure and then add jumpers as needed. The mainstay in the 1980s, though, was good old-fashioned wire-wrap, so we proceeded to lay out a sacrificial main PCB and wire-wrap sub-assemblies to act like the custom chips that would hopefully arrive in a couple of months. (Looking at the bottom of the main PCB, it’s hard to believe that in about 3 months we would start a production run of several million.)

This was just the beginning. Ahead lay some fairly insane kludges that all had two things in common: we had to get any hack or fix done overnight while the managers were home sleeping, and the end result had to work in million-piece quantities.

During the final push to CES, we ate our holiday dinners out of aluminum foil in the hardware lab, using the heat of disk drives to keep the food warm, and the bathroom sinks doubled as showers. My shoes became unwearable from extended use and were discarded, only to have a mouse take up residence in the toe. (The first Commodore Mouse.)

We assembled units in the booth the night before the show. Commodore Business Machines (CBM) employees were tasked with hand-carrying the 80-column chip, which had almost been a show stopper. The programmer who had ported CP/M was able to fix the last of the 80-column bugs by editing raw data on the floppy.

As far as the product’s performance at the show, we nailed it. Nothing failed, there were no “blue screen” moments, and the press was kind to us. Upon returning to work, we struggled with how to ramp down after having been in the crucible for so many months. Showers were taken, and eventually the slack-jawed expressions gave way to normal-jawed ones.

We figured we had done the last big 8-bit computer. We knew one era was ending, but we were also excited about the advent of the 16-bit Amiga, even amid rumors of big layoffs in engineering. Without the drive of its founder, Jack Tramiel, CBM seemed to wander aimlessly, canceling the next computer, the LCD computer system, amid little to no marketing of its main products. For me it felt as if the days of Camelot had come to an end. The team slowly broke up without a new challenge to bind us together, and I ended up working at a trauma center in New Jersey in my spare time, as I had become somewhat addicted to adrenaline.

Bil Herd went on to develop high speed machine vision systems and designed the ultrasonic backup alarm commonly seen on new vehicles. For the last 20+ years Bil has been an entrepreneur and founded several small businesses. Bil keeps in touch with collectors and other fans of the old Commodore computers through his website c128.com and will soon be opening his new site, herdware.com which will feature open source and educational electronics kits.

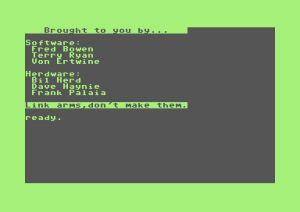

The C128 Engineering Team as seen in the Easter Egg image:

Bil Herd: Designer & Hardware Lead

Dave Haynie: Intricate timing, PLA Emulator and DRAM

Frank Palaia: Z80 Integration and RAM Expansion

Fred Bowen: Programmer and Software Lead (Kernal & Monitor)

Terry Ryan: Programmer (BASIC V7, including structured language additions)

Von Ertwine: Programmer (CP/M)

The Commodore C128 was produced in 1985 and sold more than 5 million units, generating about $1.5 billion in revenue. The C128D, with a built-in disk drive, was supposed to be released at the same time as the standalone unit, but it did not make it into production for a couple of years.

I don’t know how to break it to you Bil but the SAM Coupé was the last of the 8-bitters.

Just sayin’!

But I have to admit you’re one of a kind and damn good. I own a C64 and numerous other Commodore goodies. Keep up the interesting stuff!

The very last 8bit home computer to get on the market was probably the Amstrad PcW16 in… 1995.

OK, so if you count a Personal Computer Word Processor, then maybe. The SAM was also out in ’95, but I couldn’t be more specific without looking deeper. As for the CPC Pluses, they were cost-reduced and otherwise slightly tweaked CPCs. I still want some for my collection. Does a tweak of an existing computer count? It’s also a little disconcerting to see how much midrash is being talked about these giants of IT past.

It also “technically” wasn’t the last 8-bit machine from Commodore either if you count the C64GS.

But didn’t the Amstrad CPC Plus systems come out after the SAM Coupe?

“this most likely was going to be the final 8 bit system to come out of Commodore”

Thanks for the history. I have read Hackers several times and have enjoyed it each time. Having worked on several projects that were not supposed to be possible in the time frame given (but were), I can relate to some of the struggles and to doing things on faith that something in the middle would somehow be made to work.

Great to see TODAY what engineers were able to accomplish back in those days! Unfortunately, guys like Gary Kildall did not get a chance to share in the success of CP/M and GEM…

I loved the C128. All the goodness of the C64 plus CP/M and an 80-column screen, so I could run a spreadsheet and word processor. At work I had been building Unix computers, so I got used to using an operating system. CP/M support in a computer I could afford was great. I was able to grab a surplus monitor and printer from work. Unfortunately, it died right after I printed out a resume that got me a new job. Thanks for the hard work and long hours. It was a great tribute to the end of the 8-bit computer era.

Fun stuff! I still have 8563 and 8568 chips that I got from C=

We don’t see these anymore. Great story, and a great picture showing a prototype 128 that looked homemade. I still have my 64 and also a 128D.

“we were also excited about the advent of the 16-bit Amiga”

Was the original plan for the Amiga something other than the 32-bit 68k CPU, or is that a typo?

68000 was advertised as a 16/32 chip. 32 bit registers, 16 bit data bus.

Gizmos, the data bus size isn’t what defines a CPU; it’s the registers. Otherwise the Intel PII, with its 64-bit cache bus, could be called a 64/32-bit CPU. There are lots of 32-bit ARM CPUs and SoCs with 16-bit data buses as well, but they’re not referred to as 16/32-bit CPUs. And one more thing about the 68K: it had a 24-bit external address bus, so would you call it a 16/24/32-bit chip?

Many 8-bit CPUs had some 16-bit internal registers, like the program counter.

I’m not defining anything here, so you go ahead and call me wrong. http://en.wikipedia.org/wiki/Motorola_68000

I think that a program counter/instruction pointer register can’t really be narrower than the address bus, because otherwise instructions could be located in an area of memory that the CPU has no access to.

Back in the 8-bit days, it was not uncommon to have “bank switching”, where the extra address bits are provided by an I/O port (or latches) to supplement the program counter (PC) so the CPU can access more than 64kB of memory.
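As a rough illustration of the bank-switching idea described above, here is a minimal Python sketch. It assumes a single hypothetical bank latch that swaps the entire 64kB CPU address space. Real designs, including the C128’s MMU, typically kept some regions (I/O, common RAM) visible in every bank, and this toy model ignores that. The class and method names are made up for illustration.

```python
BANK_SIZE = 0x10000  # 64kB: everything a 16-bit address can reach


class BankedMemory:
    """Toy bank-switched memory: a 16-bit CPU address plus a bank latch."""

    def __init__(self, num_banks: int = 2) -> None:
        self.num_banks = num_banks
        self.ram = bytearray(num_banks * BANK_SIZE)
        self.bank_latch = 0  # hypothetical I/O-port latch holding the extra address bits

    def select_bank(self, bank: int) -> None:
        """Model a write to the I/O port that drives the upper address lines."""
        if not 0 <= bank < self.num_banks:
            raise ValueError(f"bank {bank} does not exist")
        self.bank_latch = bank

    def physical_address(self, cpu_addr: int) -> int:
        # The CPU (and its program counter) only produces 16 bits;
        # the latch supplies every bit above bit 15.
        return self.bank_latch * BANK_SIZE + (cpu_addr & 0xFFFF)

    def read(self, cpu_addr: int) -> int:
        return self.ram[self.physical_address(cpu_addr)]

    def write(self, cpu_addr: int, value: int) -> None:
        self.ram[self.physical_address(cpu_addr)] = value & 0xFF


mem = BankedMemory()
mem.write(0x1000, 0x11)   # lands in bank 0
mem.select_bank(1)
mem.write(0x1000, 0x22)   # same CPU address, different physical byte
mem.select_bank(0)
print(hex(mem.read(0x1000)))  # 0x11
```

Two 64kB banks give 128kB in total, but in this model the CPU never sees more than 64kB at once; software has to flip the latch to reach the rest.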

Some of that nightmare creeps into segmented addressing. :P

I agree that general purpose registers are the most appropriate measure of a CPU’s “bitness”, but he didn’t say anything twat-worthy. You know, people (except maybe you) can discuss and disagree without being twats.

It is nice that this has been resolved. But in the 80s the 68000 was definitely referred to as a 16-bit CPU by most people. The Sega Megadrive/Genesis had “16-bit” emblazoned on top of it! Back in the 80s it was more common to refer to the bitness of a CPU by the data bus width … unless the manufacturer was really* adamant it was something different (e.g., the 8088). CPUs back then were a mess out of necessity (transistor budgets, etc). For example, the 68000 had 16-bit data bus, a 16-bit ALU, and 32-bit registers (and mostly 16-bit instructions), and the Z80 had an 8-bit data bus, a 4-bit ALU and 8 or 16-bit registers (and variable length instructions).

* Motorola were clearly not great at marketing, as they should have emphasised the 32-bit capability and architecture far more.

Z80 4-bit ALU, really? Why, for one thing?

8-bit ALU.

No, it’s not an error; the Z80 really has a 4-bit ALU, not an 8-bit one:

http://www.righto.com/2013/09/the-z-80-has-4-bit-alu-heres-how-it.html

Nope. The 68000 is a 16 bit implementation (microarchitecture) of a 32 bit architecture. The internal buses are 16 bit, the external data bus is 16 bit and the ALU is 16 bit.
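The Z80’s 4-bit ALU (per the righto.com article linked above) and the 68000’s 16-bit ALU behind 32-bit registers rest on the same idea: a wide addition can be done in several passes through a narrow adder, low slice first, with each pass’s carry fed into the next. The Python sketch below models only that arithmetic, not either chip’s actual circuitry or timing, and the function names are hypothetical.

```python
from __future__ import annotations


def alu_pass(a: int, b: int, carry_in: int, slice_bits: int) -> tuple[int, int]:
    """One trip through a narrow ALU: add two slices plus carry, return (sum, carry_out)."""
    mask = (1 << slice_bits) - 1
    total = (a & mask) + (b & mask) + carry_in
    return total & mask, total >> slice_bits


def add_sliced(a: int, b: int, width: int, slice_bits: int) -> tuple[int, int, int]:
    """Add two width-bit values with a slice_bits-wide ALU, low slice first.

    Returns (result, final carry, number of ALU passes).
    """
    if width % slice_bits:
        raise ValueError("width must be a multiple of slice_bits")
    result, carry, passes = 0, 0, 0
    for shift in range(0, width, slice_bits):
        part, carry = alu_pass(a >> shift, b >> shift, carry, slice_bits)
        result |= part << shift
        passes += 1
    return result, carry, passes


# Z80-style: an 8-bit add as two 4-bit passes.
print(add_sliced(0x3A, 0x28, width=8, slice_bits=4))  # (98, 0, 2), i.e. 0x62
# 68000-style: a 32-bit add as two 16-bit passes.
print(add_sliced(0xFFFFFFFF, 1, width=32, slice_bits=16))  # (0, 1, 2)
```

In the 8-bit case, the carry passed from the low nibble to the high nibble is the carry out of bit 3, which is what the Z80 reports as its half-carry flag. The extra pass is also why a narrow ALU costs time on wide operations.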

And to remind you that it was considered a 16/32-bit design, the Atari ST was named accordingly (Sixteen/Thirty-two), and the later computer using a fully 32-bit processor (the 68030) was named the Atari TT for the same reason.

Also many 8 bit (and some 4 bit!) processors have at least some registers much larger than the basic wordsize. 4 bit processors with 64 bit registers and 8 bit processors with 32 bit registers are just some examples.

This is not the right place to suggest that Atari got anything right :-)

The size of the CPU depends on who you are. To a hardware guy like Bil (and at least a big chunk of my brain), the size of the CPU is the size of the data bus, so the 68K in the A1000 was 16-bit. To a software person, the size is, of course, the size of the data registers, so for them it’s 32-bit. To a chip designer, it’s the registers a little bit, but mostly the ALU size, how much is happening in any cycle. The 68000 has three 16-bit ALUs, so it’s mostly a 16-bit chip. -Dave

The 68000 was referred to by Motorola as 16/32-bit, but many computer manufacturers decided to ride the wave of public hysteria and referred to it as a 32-bit machine.

The 68000 has 16-bit internal pathways, but the timings are so cleverly arranged that most of the time the user had the feeling he was working with a fully 32-bit machine, since the registers were 32-bit and there was often no visible time penalty for 32-bit ops relative to 16-bit ones.

Addressing, i.e. the maximum amount of memory that can be addressed, is usually considered the ‘width’ of the CPU. It was back then and still is today; it doesn’t matter whether every single register is that many bits.

Oh, and the reason narrower external connectivity was common then (and the same reason it is today) was simply that the cost of the overall system would have become too high for the market if the full width had been used.

Cheers,

ac

P.S. I didn’t have a 64 or 128 myself. I had a VIC-20 and went from there directly to a KayproPC-20 a few years later, but those were just toys; I had a VAX 11/750 at work :)

Don’t you mean the data bus? Otherwise you’ll have to refer to the 6502 and Z80 with their 16-bit address buses as 16-bit processors

Hence where the Atari ST got its name from (Sixteen Thirty-two).

Or Sam Tramiel, as the other rumour went. I’m still not quite sure what a Tramiel is, from the legends it seems to be something like the Hutts from Star Wars.

I always thought it was for Stereo. In fact, many musicians used STs.

The 68000 was a bit of both. To a hardware guy, it’s a 16-bit chip… 16-bit data bus, extended address bus. To a software person, it was all 32-bit, with the expected 32-bit flat addressing (logically, anyway), the 32-bit register model, and instructions for 8, 16, and 32-bit data types. To a CPU designer, it’s a little of both: 32-bit registers, but most other things are 16-bit. There are three 16-bit ALUs, and yeah, you can do 32-bit operations, but they run in two phases, so they’re a little slower… still much faster than not having them.

The only really important thing in the long run was the instruction set architecture. That allowed for future 68K systems that were fully 32-bit, starting with my old buddy the 68020. You even got a tiny instruction cache and a 32-bit barrel shifter in that bad boy.

How were those chip emulator boards able to match the timing and performance of the real VLSI chips? I assume the new VLSI parts were pushed to run as fast as you could make them for performance reasons. Wouldn’t a bunch of individual LS-TTL chips in series with several inches of wire suffer substantially longer propagation delays than a real piece of VLSI silicon? I’ve built a custom CPU out of a few dozen LS-TTL chips, and it wasn’t any speed demon. :-)

Don’t know enough about the C128 to comment for it directly.

(They use an 8xxx part number, so they could be using HMOS.)

The Amiga chipset around that era was built with NMOS, and the chip timing wasn’t a speed demon. Typical 74F and PAL chips run circles around it. Critical timing stuff was done in PAL and 74F series in the A1000.

http://en.wikipedia.org/wiki/Original_Chip_Set

>Both the original chipset and the enhanced chipset were manufactured using NMOS logic technology by Commodore’s chip manufacturing subsidiary, MOS Technology. According to Jay Miner, OCS chipset was fabricated in 5 µm manufacturing process while AGA Lisa was implemented in 1.5 µm process.

The “towers” as we called them were made from very fast TTL chips and PLDs. The VIC chip couldn’t have been emulated, but the “PLA” and MMU were small enough. They were more complex than anything you’d find in most computers of the era — some of those 10ns or 5ns PALs ran $10 each.

Commodore actually put the 8520, or at least most of one, on a very expensive FPGA years later. The chip designers were moving the 8520 design to HDL for incorporation into the CD32 all-in-one support chip. To test this, it went into a multi-hundred-dollar FPGA… not exactly a practical replacement for a $1.25 chip, but when you can test out your new chip designs in old hardware, you do.

Bil’s article on the C128 was good, but even better would be if you did one on the Amiga. I skipped the C128 (to me it’s a bit of a Frankenstein computer), going from the C64 to the Amiga 500 and then the 2000. A couple of weeks ago, digging through my old electronic parts, I even found a Kickstart ROM.

Back in the day, I wrote hundreds of Amiga articles, and that one angry film… There’s not all that much call for it these days, though I am doing an Amiga thing at VCF East this spring… postponed from last year, as they were still recovering from hurricane damage. But I’m always happy to talk and write about this stuff (you might have noticed).

Try this one; it’s 9 parts of 3-4 pages each.

http://arstechnica.com/gadgets/2007/07/a-history-of-the-amiga-part-1/

We also did similar towers for various Amiga chips. There had been a Fat Agnus tower and an A500/A2000 Gary tower, but no original Buster tower; that had in theory been prototyped on the German version of the A2000, but that implementation contained a bug I didn’t find until we had the first chips back. Ouch! All kinds of very large towers were made to prototype A3000 chips. But the C128 project was the last time anything had been wire-wrapped… at one point, we had three theoretically similar C128 towers, each doing something slightly different.

Later on, at least for a while, Commodore got really good at fast turns. Gary, DMAC, RAMSEY, and Buster were all built on Commodore’s in-house gate array process, so we could, if necessary, get new chips turned around in a month. It’s hard to get a new PCB turned around in a month. For just a little while, I was very spoiled… my punishment was to work at startup companies ever since :-)

Simulator boards were presumably more practical back when clocks were running in the low megahertz range: in C64 mode the chip ran at 1 MHz, meaning electricity could travel a theoretical distance of 300 m within a clock cycle; in C128 mode it was 2 MHz, or 150 m. Remember, in those days your CPU ran internally at the same speed it was talking to every other device on the mainboard (memory accesses were done in-line, with no caching), so, properly engineered, a non-discrete component should probably be OK.
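The arithmetic in the comment above is easy to check, and it helps to add the other half of the question raised earlier in the thread: in an LS-TTL emulator, gate delays eat the timing budget much faster than wire length does. The sketch assumes roughly 10 ns per LS-TTL gate (a ballpark figure; real parts vary with type and load) and assumes each device gets about half a clock cycle, since on the C64 the video chip and CPU share every cycle. Both are assumptions, not measurements of Commodore’s towers, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def light_distance_per_cycle_m(clock_hz: float) -> float:
    """Distance light travels in a vacuum during one clock period."""
    return SPEED_OF_LIGHT_M_PER_S / clock_hz


def gate_delays_per_half_cycle(clock_hz: float, gate_delay_ns: float = 10.0) -> int:
    """How many gate delays fit in half a clock period (assumed ~10 ns per LS-TTL gate)."""
    half_period_ns = 1e9 / clock_hz / 2
    return int(half_period_ns // gate_delay_ns)


for label, hz in (("C64 mode", 1e6), ("C128 mode", 2e6)):
    print(f"{label}: {light_distance_per_cycle_m(hz):.0f} m per cycle, "
          f"about {gate_delays_per_half_cycle(hz)} gate delays per half cycle")
```

At 1 MHz this gives about 300 m and 50 gate delays; at 2 MHz, about 150 m and 25. Signals in real wire and PCB traces travel slower than light in a vacuum, which only tightens the distance figures, but the gate-delay budget is the number a tower of discrete logic actually runs into.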

Heck, the memory in a C64 ran faster than the CPU: the clock pulse was inverted for the graphics chip, which accessed memory in between processor cycles.
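The split-phase bus described above can be sketched as a fixed schedule: each system clock cycle has two halves, with the video chip using the first (clock low) and the CPU the second (clock high), so the RAM serves two accesses per CPU cycle. This minimal model assumes that fixed split and ignores the real VIC-II’s “bad lines” and sprite fetches, during which it also takes over some of the CPU’s half-cycles. The function names are hypothetical.

```python
from __future__ import annotations


def bus_schedule(cpu_cycles: int) -> list[tuple[int, str, str]]:
    """(cycle, phase, bus owner) for a RAM bus shared in alternating half-cycles."""
    schedule = []
    for cycle in range(cpu_cycles):
        schedule.append((cycle, "phi1", "VIC"))  # clock low: video chip fetches
        schedule.append((cycle, "phi2", "CPU"))  # clock high: processor gets the bus
    return schedule


def ram_accesses_per_second(cpu_clock_hz: float) -> float:
    """With one access per half-cycle, RAM is accessed at twice the CPU clock."""
    return 2 * cpu_clock_hz


for entry in bus_schedule(2):
    print(entry)
print(ram_accesses_per_second(1e6))
```

The point of the design is that neither chip waits for the other in the normal case: the RAM simply has to be fast enough to complete an access in half a cycle.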

Bil helped me diagnose my busted C128D power supply via Facebook. Awesome guy.

I concur, Bil is a great guy! It was a blast conversing with him as he was putting together this post. Hopefully we can convince him to dig up some of his other fantastic stories for future posts!

Great post! I’d love to hear more, and see more! Bil has teased a few of his artifacts on Facebook.

Incidentally, if any of you would like to read a more detailed history of the C128 project, also written by Bil Herd – twenty years ago – check out this link: http://homepage.hispeed.ch/commodore/c128_story.html

The C-128 was IMHO a total waste of time. A shitload of chips for so little effect. The whole point of the 65xx was simplicity. The Z-80 was built around a totally different philosophy. Having them in the same machine was a 100% sure recipe for a computing albatross: neither a good flier nor a good swimmer.

And both concepts were totally OBSOLETE when the C-128D came out.

One could have had an Amiga, or at least a good Atari ST, from that much silicon and logic.

I have repaired many such machines. Commodores were too complicated for my taste, and their chips were not exactly reliable. These things were dying left and right.

Hardware-wise, I think the Atari ST was the most elegant. Optimized around a good CPU, zero wait states, simple but powerful, easily reworkable and extensible.

The Sinclair QL was and still is king of the hill for me. Full of botch-ups, but with such a cool original idea and good Sinclair spirit, giving every bit of punch for its weight: very affordable, very simple, simple to understand, service, and upgrade, etc.

Does the highest sales volume really equal “most popular”? Criminy, they made awful stuff. I had to write some code to directly access the HPIB/IEEE disk drives once (they used 3 6502s per drive, IIRC, compared to an Apple Disk II with a couple of discrete parts per drive). Painfully complicated and painfully slow. A couple more experiences and I never used C= equipment again, except perhaps technically when Tramiel bailed out Epyx and the “Handy” game machine (renamed Atari Lynx) I was working on. I recall the guy who got a decent Forth running on the C64 had to use a target assembler on a PDP-11 to get the compile done in a human lifetime.

However, I can appreciate the effort, having wire-wrapped some huge boards to emulate ASICs during pre-production. To think, we used to worry about 7 or 12 MHz digital video as if it was REALLY FAST!

Charlie, C= disk drives were full computers of their own, for the obvious reason of not wasting main computer memory on a disk OS, which in the 8-bit days would have taken a huge portion of that precious little memory. The disk drives themselves were not as slow or painful as you might think; it was the C64’s IEC bus that was responsible for the slowness. On a C128, a drive capable of the fast IEC bus, such as the 1571, could transfer at full speed. I certainly agree that the Apple Disk II was very simple in comparison and worked with only a few discrete parts, but it could only store 140KB on a disk, while a 1541 allowed 170KB. Nonetheless, some of the original slowness of the C64 IEC bus could be bypassed by replacing the C64 kernal and the drive kernal with something like JiffyDOS and/or a parallel cable.
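The 140K versus 170K comparison above can be checked with a little arithmetic. The 1541 uses zoned recording, putting more sectors on the longer outer tracks (21, 19, 18, and 17 sectors per track across its 35 tracks), while the 16-sector Apple format uses 16 sectors on each of 35 tracks; both use 256-byte sectors. The sketch counts raw sector capacity only, ignoring directory and BAM overhead, and the constant names are illustrative.

```python
from __future__ import annotations

SECTOR_BYTES = 256

# (first track, last track, sectors per track) for the 1541's speed zones
C1541_ZONES = [(1, 17, 21), (18, 24, 19), (25, 30, 18), (31, 35, 17)]
APPLE_TRACKS, APPLE_SECTORS_PER_TRACK = 35, 16  # 16-sector Apple II format


def zoned_sectors(zones: list[tuple[int, int, int]]) -> int:
    """Total sectors on a disk whose tracks are grouped into zones."""
    return sum((last - first + 1) * per_track for first, last, per_track in zones)


c1541_blocks = zoned_sectors(C1541_ZONES)
apple_sectors = APPLE_TRACKS * APPLE_SECTORS_PER_TRACK

print(f"1541: {c1541_blocks} sectors = {c1541_blocks * SECTOR_BYTES / 1024:.2f} KiB")
print(f"Apple: {apple_sectors} sectors = {apple_sectors * SECTOR_BYTES / 1024:.2f} KiB")
```

That works out to 683 sectors (170.75 KiB) for the 1541 against 560 sectors (140 KiB) for the Apple format. Reserving track 18’s 19 sectors for the directory leaves the familiar “664 blocks free”.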

Or the Epyx Fastload cartridge. To a young teenage kid it was an impressive and elegant piece of engineering. On boot, the cartridge ROM replaced the disk load vector so it pointed to 256 bytes of the ROM mapped at $DF00, downloaded some code to the 1541, and then the ROM (at $8000) would disappear. The code at $DF00 wrote into the $DE00 space, which charged a capacitor on the cartridge that enabled the ROM again at $8000. For loading from the drive, it transferred two bits at a time, using both the clock and data lines for data after some timing handshakes. Data from the drive would be written to memory, and when loading finished, the ROM would disappear again so it wouldn’t interfere with the running program.
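The two-bits-at-a-time trick described above can be sketched as splitting each byte into four pairs, with one bit on the clock line and one on the data line per step. This shows only the bit-packing idea: the bit order below is an assumption, and the real Fastload code also had to handle the IEC lines being active-low open-collector signals, plus the timing handshake, none of which is modeled here. The function names are hypothetical.

```python
from __future__ import annotations


def byte_to_line_pairs(value: int) -> list[tuple[int, int]]:
    """Split a byte into four (clock, data) line states, high pair first (assumed order)."""
    pairs = []
    for shift in (6, 4, 2, 0):
        two_bits = (value >> shift) & 0b11
        pairs.append((two_bits >> 1, two_bits & 1))  # (CLK line, DATA line)
    return pairs


def line_pairs_to_byte(pairs: list[tuple[int, int]]) -> int:
    """Reassemble a byte from four (clock, data) samples taken by the receiver."""
    value = 0
    for clk, data in pairs:
        value = (value << 2) | (clk << 1) | data
    return value


sent = byte_to_line_pairs(0x9C)
print(sent)                          # [(1, 0), (0, 1), (1, 1), (0, 0)]
print(hex(line_pairs_to_byte(sent)))  # 0x9c
```

Four line states per byte instead of eight single-bit transfers is roughly where the speedup comes from, once both lines are freed from their usual handshaking role.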

Even faster were some of the Epyx games (like Winter Games) that only used standard GCR on the directory track to save on the GCR decode lookups. They’d load a full track in 2 revolutions (i.e. sector interleave of 2).
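Why an interleave of 2 gives roughly two revolutions per track can be shown with a small simulation. The sketch assumes the drive needs one sector-time after reading a sector (to decode and pass on the data) before it can read another, and that the head starts at physical slot 0. It uses an 8-sector track to keep the numbers round, whereas a real 1541 track has 17 to 21 sectors. The names are hypothetical.

```python
from __future__ import annotations


def interleave_layout(num_sectors: int, interleave: int) -> list[int]:
    """Return a list mapping physical slot -> logical sector number."""
    slots = [-1] * num_sectors
    pos = 0
    for logical in range(num_sectors):
        while slots[pos] != -1:  # slot taken: slide to the next free one
            pos = (pos + 1) % num_sectors
        slots[pos] = logical
        pos = (pos + interleave) % num_sectors
    return slots


def revolutions_to_read(slots: list[int], busy_sectors: int) -> float:
    """Revolutions needed to read every sector in logical order.

    Time is measured in sector-times; the sector in physical slot s passes under
    the head at times s, s + n, s + 2n, ...  After each read the drive is busy
    for busy_sectors sector-times before it can start another read.
    """
    n = len(slots)
    slot_of = {logical: slot for slot, logical in enumerate(slots)}
    ready, finished = 0, 0
    for logical in range(n):
        slot = slot_of[logical]
        start = ready + (slot - ready) % n  # wait for the sector to come around
        finished = start + 1                # reading takes one sector-time
        ready = finished + busy_sectors
    return finished / n


for interleave in (1, 2):
    layout = interleave_layout(8, interleave)
    print(interleave, layout, revolutions_to_read(layout, busy_sectors=1))
```

With sequential sectors (interleave 1), every read just misses the next sector and waits almost a full turn, so the track takes 8 revolutions; with an interleave of 2, the next logical sector arrives exactly when the drive is ready, and the track takes 2.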

Disk II originally held 140K. A better group coding was adopted shortly after, and they were 160K from there on. I don’t recall the name and spec, but I had to do a variation once for a project called the SwyftCard so we could tell one of our disks from a DOS disk. The encoding tolerated a wide speed variation between drives, and the spin-up time at startup was used by a software loop to calibrate itself against reference blocks on the diskette, which made for a very robust and easy-to-manufacture drive. Typical Woz.

Not sure I agree. The Z80 was included to expand SW support. The 128 had more SW coverage than any other machine on the market (with CP/M support, C64 mode, C128 mode, and BASIC 7.0), and that was forward-thinking for the day. You might be correct that performance took a hit, but the improvements with the MMU were vastly superior to anything on the market at the time. For the first time, SW development started to get “easier” with better garbage collection, program management, etc. Agreed, the Atari ST was a beautiful machine. Wasn’t Jack Tramiel over at Atari by then? I can’t recall when he split from Commodore, but it would have been around that time.

So where is the SW that actually took advantage of that? Look at the board.

You have more than enough RAM chips to use them for the 16-bit bus of a 68000. Same with the PROMs. You have at least two chips that could be used in parallel.

This thing could easily run at an 8 MHz CPU clock and very probably even 16 MHz, which means a 4 MHz bus frequency.

Even totally crappy C compiler output would outperform the best hand-tuned 6502 assembly. Heck, on such a CPU one could emulate a 6502 or Z-80 for those few programs that needed it, if anyone ever happened to stumble on such a thing.

If you gave me that much logic and a good 68000, I could make this thing fly circles around that 8-bit multihead kludge.

I’ll give you that. Considering the time, it was an odd choice to use such an old processor. The 6502 and the 6800 were long outdated. But they are NOT anywhere on par with the 68K. Apples to oranges. Both fruits but nothing more than that.

However there was a strong code base for them and that’s where I was going with it…There was a huge amount of software they could support and I suspect somewhere in there was a need to protect the position of the higher powered Amiga to-come. The Z80 gave them something entirely different but no less useful in capturing the widest software base. And despite that support, I agree, there wasn’t the flood of developers favoring the platform.

I don’t think anyone can argue the 68K wasn’t a superior processor for the time and it would prove to have tremendous “staying power”. After all, how many designs are still using a 6800? Or a 6502? (Remember the 6800 was also a Chuck Peddle design before he left Motorola and they were even sued for it, having to dump the 6501 lest they be squashed by Motorola for patent infringement!) However I can still buy 68Ks in the same way I can still buy 8051s or 386, 486, etc.

Perhaps Bil has insights into why they hadn’t favored the 68K? It seems that if the 6502 and 6800 were in fact as close as they appeared, and the 68K supported bus compatibility (which it did), thereby preserving forward compatibility, the 68K would have been the obvious choice. (Unless, of course, the fact that Motorola was always staring down the sights at MOS Tech. was too much to want to bring them on board. Pun intended.)

It’s interesting history though. Definitely fun to look back on it now and reflect on the things we might have done differently.

Br, mb

Of course the 68000 wasn’t an option for the C128. The goal was to deliver a C64 upgrade, not a completely different computer… and besides, we already had a 68000 project. The 68K would have required considerably more memory, a 16-bit bus architecture, etc… it’s a different computer. The Z-80 was cheap, and easy to adapt to the existing 8-bit bus… well, easy once Frank joined the team.

The 68K was certainly a better choice for the next ten years, but that wasn’t the question the C128 team was trying to answer... you kind of knew that by the fact that we pretty much knew the name of the product before the project had started.

And actually, at Commodore, before Jack left, there was this other project: the “Z8000 project”, also dubbed the Commodore 900. Prior to acquiring Amiga, that was the future direction for high-end systems at Commodore. The Z8000 was, of course, Zilog’s upgraded CPU: 16-bit all the way, but with a real MMU chip as an add-on. So it was running Coherent, a UNIX clone... that solved the “big system” OS problem in a useful way, since writing a real next-generation OS was not an easy task (which is why you didn’t have many companies doing this from scratch; most used off-the-shelf pieces, which unfortunately didn’t necessarily make things very “next generation”).

What became of the Commodore 900? Well, at Commodore, it was cancelled. But if you take out the Z8000, drop in a 68000, and put it in a C128-like “flat” computer + keyboard case, it’s going to look an awful lot like the Atari ST. Thing is… there were three different hardware teams on the C900… it had been going on several years by the time we got into the Amiga business. The first two teams just couldn’t get the damn thing to work. The third team, GRR (George Robbins) and Bob Welland, went on to bring you your Amiga 500. People from the second team left with Jack to go do things at Atari.

That last version was done… it could have been productized, and might even have been, had Commodore been healthier in ’84-’86. It would have been an amazing value in monochrome megapixel UNIX workstations, up against the Sun 2 and similar 68K machines. No way did the Z8000 ultimately have the staying power of the 68K, RISC, or eventually the x86, but in ’85 it wasn’t a bad way to deliver that. The Z8000 machine also had its own expansion bus system, designed using flat cards with 2-piece connectors… a little like the idea of Sun’s S-Bus; you could have these in pretty low-form-factor workstations.

Technolomaniac wrote, [quote] After all, how many designs are still using a 6800? Or a 6502? (Remember the 6800 was also a Chuck Peddle design before he left Motorola and they were even sued for it, having to dump the 6501 lest they be squashed by Motorola for patent infringement!) However I can still buy 68Ks in the same way I can still buy 8051s or 386, 486, etc.[/quote]

Actually, the 6502 (or 65c02, with more instructions and addressing modes) is being produced in the hundreds of millions of units per year today. They’re just rather invisible, going into custom ICs in automotive electronics, appliances, consumer electronics, and even life-support equipment. The fastest ones are running over 200MHz (yes, two hundred MHz). In the non-custom-IC ones however, ie, the off-the-shelf ones that are just the processor, Western Design Center (the IP holder) sells them conservatively rated for 14MHz, and they usually top out at around 25MHz. You can buy them from several distributors, including Mouser.

I don’t advocate using a 6502 for desktop computing, but there are many embedded applications where there is little or no need to handle more than 8 bits at a time. I have brought eight products to market with embedded controllers (mostly for private aircraft), and none of them did much handling of quantities beyond 8 bits.

We have a very active 6502 forum at forum.6502.org with a good handful of very knowledgeable people. Come visit.

Interestingly, http://en.wikipedia.org/wiki/Instructions_per_second matches my memory, which is that the 6502, relatively speaking, got a lot done per clock cycle – it inspired the design of the ARM. The 68K… less so.

You also seem to have forgotten quite how crappy the output of a ‘totally crappy C compiler’ would have been in the mid-eighties. The idea that you could emulate a 6502 on a contemporaneous 68K at reasonable speed is mildly amusing. And that’s before you look at the price difference of using a 68K vs. a 6502.

Not quite. The compiler might have been crappy, but the 68K was made to perform well with compilers. 68K assembly was so beautiful to me that I did not need much else.

If you could give me automatic register selection and symbolic user-defined variable names, I’d consider such an assembler almost on the same level as a C compiler, at least for my needs.

Also, there were a few Z-80 emulators on Atari ST that weren’t that bad.

And the 65xx DID NOT do a whole lot per clock cycle. Most of the tricks with it involve the whole first page of RAM (the zero page), which totally kills its IPC, since you have to access slow external RAM. Or you have to drastically increase the amount of logic on the chip.
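For reference, the standard NMOS 6502 timing tables put numbers on the zero-page question. The sketch below tabulates the documented cycle and byte counts for a few `LDA` addressing modes (page-crossing penalties on the indexed modes are ignored). The `LDA_TIMING` table and `savings` helper are illustrative names, not part of any assembler or toolchain.

```python
# NMOS 6502 LDA timings as (cycles, instruction bytes), from the standard tables.
# Page-crossing penalties (+1 cycle on some indexed modes) are ignored here.
LDA_TIMING = {
    "immediate":   (2, 2),
    "zero page":   (3, 2),
    "zero page,X": (4, 2),
    "absolute":    (4, 3),
    "absolute,X":  (4, 3),
    "(zp),Y":      (5, 2),
    "(zp,X)":      (6, 2),
}


def savings(mode_a: str, mode_b: str) -> tuple[int, int]:
    """Cycles and bytes saved by using mode_a instead of mode_b."""
    cycles_a, bytes_a = LDA_TIMING[mode_a]
    cycles_b, bytes_b = LDA_TIMING[mode_b]
    return cycles_b - cycles_a, bytes_b - bytes_a


if __name__ == "__main__":
    for mode, (cycles, size) in LDA_TIMING.items():
        print(f"LDA {mode:<12} {cycles} cycles, {size} bytes")
    print("zero page vs absolute saves (cycles, bytes):", savings("zero page", "absolute"))
```

A zero-page operand is still ordinary RAM, but its address is one byte shorter, which is where the one-cycle, one-byte saving over absolute addressing comes from.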

And lastly, the price. The 68K wasn’t so outrageously expensive in volume.

I remember Sinclair saying that they went with the MC68008 thinking that in the end they would save $$ on peripherals, even though the chip itself was more expensive than the 68000, but no one cared, since the 68000 per se was not the deal breaker.

And in the end, of course, the QL was a similar fuckup. They used the 68008, but since they needed 16 RAM chips and two PROMs, everything could easily have been 16-bit wide instead of 8. On top of that they fucked up the ULA2, so they had to add the “IPC” (an 8049), which was a separate clusterfuck, not to mention the microdrives.

If I had the chance to do it again, I’d take a serious look at the NS32K.

AFAIK Tramiel’s team had thought about using it.

I managed to get ahold of its FPU, the NS32081, and a few datasheets.

Back then I liked 68K better but what did I know…

Remember.. there actually were C64 emulators for the Amiga. They didn’t work at speed, except on trivial stuff. The one on my smartphone pretty much does.. then again, my 2-year-old smartphone (Galaxy Nexus) is faster than most PCs were 10 years ago, not to mention any Amiga, ever.

That said, if you really believe that C compilers even now can match top assembly coder performance on 68K code, much less 6502 code, you haven’t met any good 68K assembly coders. It wasn’t even much of a contest. And that wasn’t even the right question to ask. Compilers didn’t win because they produced better code. They won because the definition of “better” was always in flux: you could change a few compiler switches and change “better” from “as small as possible” to “as fast as possible”… much less change the output from “68K object” to “x86 object” to “ARM object”. That is why compilers won. Hands down, and long before CPUs got complex enough, and compilers good enough, for hand assembly to be a bad idea in general, as has been the case recently. I mean, a modern compiler can take into account the effect of the actual CPU hidden under the virtual x86 in any modern PC desktop processor. Your brain may know about things like register renaming, but it doesn’t track that. Not that this gets used much: folks write code for “x86” or maybe “2013-vintage-x86”, but not so much effort goes into targeting the LGA2011 i7-3930K in my PC versus the i3 or A10 in someone else’s.

And sure, there were loser technologies that weren’t bad, even then. The National 16032 and 32032 were interesting… and their “blitter per bitplane” graphics processor technology even more interesting, at least to me at the time. AT&T’s 32xxx series, their later “Hobbit”, etc. were others that had some good ideas, but got lost in the noise (and, apparently, the chip being too complex to make at AT&T’s fab in Allentown, PA).

I know you could (badly) emulate a 6502 on a basic 68000, I was just responding to someone who seemed to believe the appropriate design for a machine “100% compatible” with the C64 was to emulate it on a 68K. This is probably lost somewhere in the convoluted comment threads!

I’m also under no illusion about the performance of 68K C compilers now, just that the mid-80s ones were likely to be… somewhat worse. The 68K architecture is lovely to write assembly for but, despite Brane212’s protestations, a split register file and a million addressing modes aren’t exactly compiler-friendly (even if they’re a lot better than a lot of the other stuff out there).

Thanks for all the other posts you’ve been doing – lots of interesting stuff!

But Commodore owned all the chip designs (apart from the Z80), and that meant they could bypass the middle man.

Agreed that a 68000 or 68008 “C-128” would have been a very nice machine, coupled with the VIC-II and 80-column mode (why wasn’t the VIC-II adapted to do this too? It was re-done anyway, so this would surely have made sense), but it would have started off with zero software. Taking advantage of the C64’s vast software library was a no-brainer, and taking advantage of CP/M’s vast 80-column software library was also a no-brainer in the end.

Yup, the fact that they actually manufactured their own 6502 (equivalent) would be reason 1, 2 and 3 to use it, before anything else.

That, and the enormous existing user base of games for the very popular C64 meant there was no need to worry about the chicken / egg problem of having enough software to make it sell. Releasing a compatible version with added features has been done before, to say the least. It usually succeeds!

I think you are missing the point of the C128. It was for people who wanted an upgraded C64 with more capability, without having to start over software-wise. For people like you who wanted a 68k, there was the Amiga, which came out right around the same time.

If I recall, the Commodore book “On the Edge” stated that the original reason the Z80 was put in there was to handle a compatibility issue with a C64 cart on boot… CP/M was just a bonus…

The thing is that the Commodore 128 had simply no point. I repeat: no point.

It isn’t an upgraded C=64, because the VIC-IIe was disabled in 2 MHz mode and the graphics chip itself behaves the same as the one in the Commodore 64.

It isn’t a good CP/M machine, because the CPU is too slow. Also, the CP/M era was well over, so there was no point in selling that kind of hardware.

And it isn’t a good home computer even in its native mode: the 8502 was a negligible upgrade over a 6510 and the VDC is a graphics chip intended for the business use of the time (no raster interrupt, no sprites, a cumbersome access to dedicated RAM, etc…).

It just has twice the main RAM of the Commodore 64 and a better BASIC in ROM… Wow!

Instead of this machine, sold at $300 and used by 90% of its buyers in C=64 mode only, they should’ve developed something similar to the Commodore 65 but with a 65C816 as the CPU. In 1985 they could’ve sold it at $600 and destroyed the Atari ST competition without the need for an Amiga 500 two years later.

And the Amiga could’ve continued its path toward becoming a workstation (as Jay Miner intended, see the “Ranger” preliminary work), without being “downgraded” for the mass market.

Oh please, the Sinclair QL was even slower than the C128… the only appearance of the 68008 outside of a toaster oven or dishwasher. You really shouldn’t run a 32-bit ISA over an 8-bit bus… that’s just wrong.

Just as with Tortelvis fans, 5,000,000 C128 fans can’t be wrong. Sure, we did much better things with the Amiga, the first machine to have the performance to back up a GUI environment. We knew the C128 was likely Commodore’s last 8-bit machine, and there were plenty of uses for such computers. It wasn’t for some years that the Amiga had better office tools than you could get for the C128… on the day it shipped. Different computers for different purposes… not everyone wants a “bleeding edge” system.

And consider, too, that the C64 was a transitional system in terms of who computers were for. Sure, there were business machines by then, but a fairly limited market. In the era of the PET, you bought a computer to get “into computing”… the same reason you might buy a guitar or a camera… that device was the central focus of your hobby. This was still largely true in the VIC days, other than for gaming, and into the C64 era.

By the time the C128 came out, this really wasn’t true… most computer buyers were computer users. They bought the computer not to hack, but to support something else with it… like I might design a PCB, edit a video, produce a song, or write articles on some silly blog with my PC (or in the latter case, my tablet)… no coding necessary. Computing for most isn’t an endpoint, but a means to some other end.

In 1985, the C128 ran more software, mostly mature, reliable software, than any other computer. The Amiga 1000 shipped a couple of months later, but it was really for computer hobbyists at that point, not regular users. That changed over the next decade, but back then, the C128 was a much better choice. I’ll leave it as an exercise to compare C128 sales to Amiga 1000 sales, despite the fact the Amiga changed more about personal computing in one product than certainly anything since Chuck Peddle invented personal computing with the launch of the PET 2001.

The whole point of the QL was not to be the fastest. It was the cheapest, purest multitasking machine with a 32-bit feel one could buy.

And it was simple to learn. I opened mine half an hour after I got it.

As soon as I could spare 100 Deutsche Marks, I was over the border (from Yugoslavia) in Graz, Austria, buying my first floppy to make an interface for it.

It worked like a charm.

Same with RAM expansion. It was totally simple to wire-wrap 1/2 or 3/4 MiB of zero-wait-state DRAM expansion for it. I soldered into mine a 68008FN in the PLCC package, which has two extra address pins, so I could add much more RAM ;o)
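The payoff of those “two extra address pins” is easy to quantify, since each extra address line doubles the address space. This sketch assumes the DIP part brings out A0-A19 and the PLCC part A0-A21, which is my understanding of the MC68008 packages; check the Motorola datasheet before relying on it. The helper name is made up for illustration.

```python
def address_space_bytes(address_lines: int) -> int:
    """Bytes addressable with the given number of byte-address lines."""
    return 2 ** address_lines


DIP_LINES = 20   # assumed: MC68008 in the 48-pin DIP brings out A0-A19
PLCC_LINES = 22  # assumed: MC68008 in the 52-pin PLCC adds A20 and A21

dip = address_space_bytes(DIP_LINES)
plcc = address_space_bytes(PLCC_LINES)

if __name__ == "__main__":
    print(f"DIP: {dip // 2**20} MiB, PLCC: {plcc // 2**20} MiB")
```

Two more lines means four times the address space (1 MiB versus 4 MiB), which is what made the large QL RAM expansions possible.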

Oh, I forgot a few other things.

After a while, Nasta and other developers in the QL community made an IDE interface, QBIDE.

I’ve got one and it worked very well. I also made a mouse interface (TTL counters and ports directly on the CPU bus, for an Amiga/Atari mouse) etc. etc.

“Oh please, the Sinclair QL was even slower than the C128… the only appearance of the 68008 outside of a toaster oven or dishwasher. You really shouldn’t run a 32-bit ISA over an 8-bit bus.., that’s just wrong.”

BTW, let’s look closer at that. Here is a picture of one of my machines:

http://www.avtomatika.com/MOJE_SLIKCE/QL2.jpg

Here is a closeup:

http://www.avtomatika.com/MOJE_SLIKCE/QL3.jpg

That part is the essence of the QL and covers approx. 17×10 cm. Everything else can be cut away and the result will work as a QL in all important things. Almost everything. ULA2 is needed just because the IPC is connected to it, and the IPC is needed just for the beeper and keyboard scanning. Had I taken the trouble to do those some other way, I could throw away those two, too.

So we are looking at one 15 MHz crystal, one puny ZX8301 ULA (Ferranti) that does it all, two PROMs (32k×8 + 16k×8) and 16 el-cheapo 64k×1 DRAMs. With 8-bit buses all round.
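To put that parts list in bytes: a chip organised as words × bits holds words × bits / 8 bytes, so the sixteen 64k×1 DRAMs and the two PROMs work out as below. The helper name is just for illustration.

```python
def capacity_kib(words: int, bits_per_word: int, chips: int = 1) -> int:
    """Total capacity in KiB of `chips` identical parts organised as words x bits."""
    return words * bits_per_word * chips // 8 // 1024


K = 1024
dram_kib = capacity_kib(64 * K, 1, chips=16)                  # sixteen 64k x 1 DRAMs
rom_kib = capacity_kib(32 * K, 8) + capacity_kib(16 * K, 8)  # 32k x 8 + 16k x 8 PROMs

if __name__ == "__main__":
    print(f"DRAM: {dram_kib} KiB, ROM: {rom_kib} KiB")
```

That is the stock 128K of RAM plus 48K of ROM.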

And even though the microdrive was crap, it was so because of the mechanical part (wear and tear of the tape):

http://www.avtomatika.com/MOJE_SLIKCE/MDVs.jpg

THIS managed to have higher transfer speeds than CMD’s 1541!

Hey now, GEOS ran perfectly on my C128.

SYS 32800,123,45,6

Awesome story, Bil! And though many a fanboy has no doubt showered you with adoring gratitude, many thanks! The work you boys did on your team, and at Commodore more broadly, fundamentally shaped the course of my personal history both with programming and HW development, and for that, I owe you more than a beer!

Some history —

I was first exposed to the PET, of all things, the year after Star Wars came out. The PET hit the streets in ’77, as did Star Wars (before you started at Commodore, I believe), and I must’ve gotten mine sometime in ’78 or early ’79. My dad had been an engineer over at Zenith and had brought one home for my older brother to crack into, not expecting that his youngest (me) would have nearly the interest that I did. To me, the combination of white and red, with some blues and grays, reminded me of R5-D4 (http://goo.gl/EEyKxv), which you may recall was the droid that erupts into a cloud of smoke outside of the Jawas’ Sandcrawler while Luke and Uncle Owen haggle for a droid that “understands the binary language of moisture vaporators”. Like that fateful moment when R2 leapfrogs R5, history would be shaped by the machines I’d first encountered over those first 10 years or so… most of them having come out of your wheelhouse.

When I’d finally get upgraded to the C64 in the ’80s, shit got real! Both good and bad. I liked the PET, because it got me into HW VERY early on. You may recall, it had a pop-up top – like the hood of a car – and you could see everything inside laid out in all its glory (mind you, the hood weighed about the same as an AMC Rambler). This was awesome, as it meant someone could point out the various bits one component at a time, without feeling like they needed to disassemble the thing.

The C64 – I must admit – bummed me out, because it didn’t feel (at least at first) like it was intended to be opened and hacked up – at least not the HW. It was self-contained… and as such (with Apple products having heaps of expansion slots in the days before Woz would lose his influence on the design side of the org), I was discouraged from “cracking the seal”. Ahh, but oh, when I did!?! It wasn’t until you opened it up that you realized all of the juicy ports that were on the inside, and that landed me square in the C64 corner. “Where had you been hiding all my life!”

We’d come to hack up the C64 case more than a few different ways (thank you drills and jigsaws) – making enemies of the purists who just wanted to rock out Winter Olympics – but connecting up all the juicy external bits would open up our world and make true “hackers” out of us.

By then, my Dad had gone to work for Allen-Bradley, which you’ll recall was where MOS Technology had gotten started… the guys that were behind Commodore’s chip division and ultimately cranked out the 6502.

The 6502 was the first chip I ever got to know intimately (OK, that sounds bad…) and I would ride that wave until it had long petered out. Following that, I seem to recall the guys on the 6502 going over to Motorola for the all-popular 6800! That would be the first chip I ever got a proper manual for.

I always wondered – puzzled really – why the gang chose not to make the cases more “hackable”? Especially for the C64, but later the C128, which was considerably larger and probably could have housed more expansion ports, etc. and exposed them through the case. I guess this is a problem we consumers still whine about today! (At least some of us.) Still, I “get it”. For the average consumer, cables and such are hardly the sight you want dragging across your living room (just ask my wife today).

Still, the C128 was such an improvement (esp. with the advent of the MMU and the bank-switching improvements!). Also, the improvements to BASIC, inclusion of CP/M support, etc. made this one of the finest computers of the day. Excellent work and an awesome story! One of the sleekest-looking machines of its day and something worthy of praise.

mb

Sorry – my history is a bit wacky there. I attribute that to my old age. The 6502 came out of the 6800. :) Other way around.

The C64 and, by extension, the C128, were designed to be low cost systems. That means you’re budgeted on case complexity, budgeted on the power supply — only a little juice there for add-ons, etc.

Believe me, they counted every penny. I spent quite a bit of time working on C128 timing and compatibility — I ran every cartridge known to mankind on the C128, and when something didn’t work, I had to figure out why. Often that also meant telling the manufacturer what they did wrong in the design (usually after I reverse engineered it — quicker than trying to get anyone to send schematics), but probably still trying to support it if we could.

At one point I had figured out that the 74LS245 data bus buffer to the “1MHz bus” was too slow for expansion to always work. I needed to switch to a 74F245… and that was a big deal… the 74F245 was MORE EXPENSIVE. I mean, think about it: at C64 volumes, if you spent an extra $0.50 on a part, that’s $2 million in lost profit every year.
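Working backwards from the two figures in that comment shows the volume they imply: $2 million a year at $0.50 per unit is about 4 million units a year. That volume is inferred from the comment's own arithmetic, not a sales figure given here. The sketch uses integer cents so the division is exact; the constant and function names are made up for illustration.

```python
EXTRA_CENTS_PER_UNIT = 50          # the extra $0.50 per machine quoted above
LOST_CENTS_PER_YEAR = 200_000_000  # the quoted $2 million per year, in cents


def annual_cost_cents(extra_cents_per_unit: int, units_per_year: int) -> int:
    """Yearly cost of adding a per-unit expense across a production run."""
    return extra_cents_per_unit * units_per_year


# Volume at which the per-unit premium reaches the quoted yearly figure.
implied_units_per_year = LOST_CENTS_PER_YEAR // EXTRA_CENTS_PER_UNIT

if __name__ == "__main__":
    print(f"implied volume: {implied_units_per_year:,} units/year")
```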

Here’s another good one… we actually fixed a software mistake in the C128’s hardware. Back in the olden days, when Bil had hair on his head, dinosaurs roamed the earth and I still fit in size 34 jeans, there was this drawing program from Island Graphics (“Micro Illustrator”, maybe), and it was kind of a big deal. This came on a cartridge, and ran in C64 mode. When you booted it up, it had this splash screen that got really clever and drew itself using their own primitives. One of those was flood fill… and on the C128 in C64 mode, when they went to go dot the “i” in the logo, they missed. See, of course, every program counted not just bytes but bits, and to save some of those, they read these character patterns from the C64 ROM.

But someone (Fred?) had spent time making a more readable font for the C128. As a result, the i’s dot had moved up by one pixel. So the splash screen proceeded to flood fill most of the screen… and then, since that erased lots of other stuff, ran more and more of these really slow operations. So it took more than 1/2 hour to get past this already-too-long 1-minute-or-so splash screen. That’s when the C128 started banking the character ROM… when you’re in C64 mode, you have the old C64 character set. That’s the kind of thing we found, though this was certainly one of the more entertaining versions. That’s why none of us in Engineering would ever have approved the “100% compatibility”… it’s that old saying: genius has its limits, but stupidity is unbounded. Never bet that you are smarter than everyone else is dumb :-)
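The failure described above is generic to flood fill: if the outline has a one-pixel gap, or the seed lands outside the shape it was meant for, the fill escapes into the background and repaints far more than intended. Below is a minimal 4-connected, queue-based flood fill on a made-up 8×8 canvas with a box outline. This is a hypothetical illustration, not Micro Illustrator's code; `make_canvas` and the coordinates are invented.

```python
from collections import deque


def flood_fill(grid: list[list[int]], start: tuple[int, int], new: int) -> int:
    """4-connected flood fill; recolours the region containing `start`.
    Returns the number of pixels changed."""
    rows, cols = len(grid), len(grid[0])
    r0, c0 = start
    old = grid[r0][c0]
    if old == new:
        return 0
    grid[r0][c0] = new
    queue = deque([start])
    filled = 1
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == old:
                grid[nr][nc] = new
                filled += 1
                queue.append((nr, nc))
    return filled


def make_canvas(gap: bool) -> list[list[int]]:
    """Hypothetical 8x8 canvas with a 4x4 box outline (1 = ink);
    optionally knock out one pixel of the top edge."""
    g = [[0] * 8 for _ in range(8)]
    for i in range(2, 6):
        g[2][i] = g[5][i] = 1  # top and bottom edges
        g[i][2] = g[i][5] = 1  # left and right edges
    if gap:
        g[2][3] = 0
    return g


if __name__ == "__main__":
    print("closed outline:", flood_fill(make_canvas(gap=False), (3, 3), 2), "pixels")
    print("one-pixel gap: ", flood_fill(make_canvas(gap=True), (3, 3), 2), "pixels")
```

With the outline closed, the fill touches only the 4 interior pixels; with a single missing pixel it spills out and repaints 53 of the 64.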

” I mean think about it, at C64 volumes, if you spent an extra $0.50 on a part, that’s $2 million in lost profit every year.”

This penny pinching was exactly what killed Commodore, Sinclair and many others.

They saved 2 mil here and there but missed a whole lot more that could have been made.

That penny-pinching is what made Commodore, Sinclair and others into the popular, successful multi-million-computer-selling companies that they were. It’s in the fundamental nature of mass production.

That penny pinching was the reason Commodore won the 8-bit “wars” and put most of the competition out of business. It’s the reason there were 27 million C64s sold.. it wasn’t until the Apple iPad that any single model of any personal computing device sold as well.

What killed Commodore was the opposite… penny-pinching on the development side, while at the same time rewarding bad managers with outrageous pay. When you had Irving Gould and Mehdi Ali each receiving several times the pay of the CEOs at Apple and IBM, it wasn’t very difficult to smell the problem… in fact, there’s a film about this: http://www.amazon.com/Deathbed-Vigil-other-tales-digital/dp/B00B1X898K/ref=sr_sp-atf_title_1_1?ie=UTF8&qid=1386698760&sr=8-1&keywords=deathbed+vigil

Sure, cutting too much on cost can affect the quality.. but that wasn’t the case here. They say there’s a C64 in every closet… but what’s also true: the C64 in every closet still works.

And BTW, it’s hard to believe that every penny counts when you have a bazillion components inside and a PCB the size of Belgium.

And what exactly was the “C64 upgrade” in the C128?

A whole lot of trouble was spent on compatibility in vain. Whoever wanted a C64 could simply buy one and be done with it.

An 8-bit machine could survive the battle with the new wave only if it managed to bring something new to the table, which it couldn’t. There was not enough horsepower.

Yes, the 68K needed more RAM, but most of it was put to good use. Instruction opcodes were larger, but that didn’t amount to that much.

The QL was perfectly usable even with 128K of “slow” DRAM, even though a whole 32K was eaten by the screen and some 6K by variables etc. etc. And 64k×1 DRAM wasn’t that expensive at that point.
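The 32K screen figure follows from the frame buffer size. Assuming the QL's 512×256 four-colour mode (2 bits per pixel), the sketch below computes the buffer and what is left of a 128K machine after the roughly 6K of variables mentioned above. The 6K is the comment's approximation, and the function name is illustrative.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed for a packed-pixel frame buffer."""
    return width * height * bits_per_pixel // 8


screen = framebuffer_bytes(512, 256, 2)  # assumed: 512x256 at 2 bits/pixel (4 colours)
total_ram = 128 * 1024                   # stock QL RAM
sysvars = 6 * 1024                       # "some 6K" from the comment, approximate
left = total_ram - screen - sysvars

if __name__ == "__main__":
    print(f"screen {screen // 1024} KiB, left for programs ~{left // 1024} KiB")
```

That leaves roughly 90K for programs and data on an unexpanded machine.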

150K QLs, 5 million C128s. Do the math… the QL was a failure. Nobody wanted one. It just didn’t deliver a computing experience superior to existing AND WELL SUPPORTED 8-bit machines. It pretty much proved what most developers already knew: you couldn’t just drop a pseudo-next-gen CPU into a current-generation 8-bit platform and make anything interesting.

They also got a bad rap for claiming it was ready long before it was (though that was far more standard practice in the mid 80s than it is today… Commodore used to “trial balloon” products at CES shows all the time), shipping with all kinds of bugs and faulty hardware, etc. The QL barely lasted a year in production.

“150K QLs, 5 million C128s. Do the math… ”

By that “math”, McDonald’s is the leading-edge food source on the globe.

“the QL was a failure. Nobody wanted one. It just didn’t deliver a computing experience superior to existing AND WELL SUPPORTED 8-bit machines.”

And this was because it wasn’t good ole 8-bit to the core?

Nobody wanted one because IT DIDN’T WORK AS ADVERTISED.

At least not from the start.

“It pretty much proved what most developers already knew: you couldn’t just drop a pseudo-next-gen CPU into a current-generation 8-bit platform and make anything interesting.”

Really? When I bought my first one, it was around 400 DM or so.

I bought it from a guy who had bought an Atari ST, on which he spent 2500+ DM (monitor, floppy etc.).

Through my computing life I repaired and owned just about anything I could lay my hands on. I owned everything in the 16/32-bit Atari line (except the TT and Falcon, never cared much for those), I had and/or owned just about anything from Commodore except the Amiga 4000, then there are Spectrums, Amstrads/Schneiders etc. etc.

With the ST, I did some expansions and reworks, but never to the point where I could use it to do some actual, useful job. I could cobble up a 4MB expansion from four 1 Meg SIMM sticks in the middle of the night while drunk within an hour, usually less. And that was wire-wrapped, without a PCB. I reworked my ST to run at 16MHz and added an EGA-like monitor, and had to redo the part of the PCB around the Shifter so I could get rid of the digital noise. I designed a PCB with an IDE interface for the ST.

But that was about it. With ST, I never could quite cross the line from tinkerer to user.

With the QL it was different. I knew it inside and out. I wire-wrapped a floppy interface more than once. I routinely wire-wrapped my own memory expansions.

Adding this or that simple peripheral was a no brainer.

Adding Toolkit or a similar module was a 10-minute job, 5 of which were spent programming the EPROM.

When I started experimenting with networking, I did it on QL, since it had network interface. When we started experimenting with wireless data transmission, the same day I had a couple of PIAs and CIAs on the wirewrapped board on the bus.

Same with steppers, programmers etc etc.

I learned how to draw fast lines on the QL. Same with ellipses etc.
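The comment doesn't say which line algorithm was used. The classic way to draw fast lines on a CPU without floating point is Bresenham's algorithm, which steps one pixel at a time using only integer adds, subtracts and compares. This is a generic all-octant Bresenham sketch, offered as an illustration of the technique rather than as the commenter's QL routine.

```python
def bresenham(x0: int, y0: int, x1: int, y1: int) -> list[tuple[int, int]]:
    """Pixels on the line from (x0, y0) to (x1, y1), all octants,
    using only integer arithmetic (Bresenham's line algorithm)."""
    points = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error term, updated incrementally
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy
    return points


if __name__ == "__main__":
    print(bresenham(0, 0, 4, 2))
```

The loop body is a handful of integer operations per pixel, which is why this approach suits an 8-bit-bus 68008 or a 6502 far better than anything using multiplies or divides per point.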

Judging by that standard, the QL was a stellar success for me. Every other machine was, and still is, inferior in many ways.

The QL was there when I needed CPU power, and I could use it without thinking too much about how the heck I would manage to do whatever I had set out to do.

If I forgot how to calculate the behaviour of some LCR network and needed a quick and dirty suggestion, SuperBASIC was there for me. Where SuperBASIC was too slow, it was assembly time.

Hey, if you liked the QL, great. The problem was precisely that Sinclair built a last-generation system with half of a next-generation processor. For people who were into the “computer as a hobby” thing, it was an interesting option for just about a year, before real next generation systems were out. I’m not knocking that… I bought my Exidy Sorcerer in ’79 and my Amiga 1000 in ’85 both because I wanted something cool to program — didn’t really care about the applications. But by 1984-1985, that was a tiny fraction of the market for computers. And pretty much any computer at that time offered the same level of interest, if not more, other than maybe a chance to get the first 68K machine.

And your analogy is incorrect.. both Volkswagen and Mercedes have been successful car companies, despite the very different price model … particularly going back to when the C64 was dubbed the VW Bug of computing. In that model, maybe the QL was the DeLorean… looked interesting from a distance, but most people didn’t buy one after seeing it up close. McDonald’s is successful, but so are hundreds of five-star restaurants. So are thousands of food trucks, too.. but more businesses fail than succeed. Making a computer people want IS an important part of being in the computer business.

And you’ll get no argument from me in support of the Atari ST… elsewhere in this blog I related another story about the move of Jack and his boys to Atari. I didn’t know that team so well… I started at C= in October of ’83, they left in January of ’84. But as I mentioned, they may have left with big ideas and even designs that were the Commodore 900 and may allegedly have had something to do with the Atari ST, but the folks who actually got the C=900 to work, George Robbins and Bob Welland, went on to make the Amiga 500.

And no one’s going to argue with you on what’s best for you, personally. Me maybe least of all. When I made Amigas, I made them as much as possible for myself, which turned out to also be a good enough thing to sell hundreds of thousands or millions to other people. But seriously, that’s kind of how things went at Commodore. It was like that when Bil was there, and didn’t change all that much. The Amiga 2000 got a fully functional video slot because GRR and I were sitting around the lab one day and just kind of came up with the idea… without that, no Video Toaster. The CPU slot worked correctly because I wanted a drop-in CPU upgrade, even getting into “serious” computers like the Amiga (the kind with a real OS that actually gets updates, rather than the old 8-bitters, where the OS is essentially part of the hardware spec). The A3000 got a 32-bit expansion bus because I thought it needed one, and decided to go invent one. I mean, that was the absolute best part of working in computers in those days, or in consumer electronics in general: you get to make something that can meet your own standards. That does tend to make one critical of lesser things.

And for the record, Macintosh hardware was the worst of all… they used polling where an Amiga would use interrupts, they used interrupts where we’d use DMA. Of course, they’re still around… slick software is an easy marketing win. No one understands the hardware anyway.

When I want a new computer today, I make one from boards… no one’s selling just what I want…. made a new one last summer… typing on it now.

One more question: around that time, some systems opted to use Hitachi’s HD64180/2 instead of Zilog’s Z-80A/B/H/etc.

The HD64180 was faster, far more powerful and could address something like 1MB or so; it had a few DMA channels etc.

Erm, one more question on the CPU front: if this thing was to be a multi-CPU 8-bit machine, why was the MC68008 not considered?

It had an 8-bit bus and a very nice address range. It wasn’t that fast, but the C-128 wasn’t about speed anyway. And it could do both synchronous and asynchronous bus cycles, so it shouldn’t have been too much of a headache to stuff it in alongside the 65xx/Z-80/etc.

CP/M

So? It could be the third turd, so to speak…

There’s only so many CPUs you can fit in a computer sanely. For an 8-bit it’s basically one, or two with some gymnastics and usually meaning switching one of them off at a time, or at least relegating it to an I/O slave with a K or two of RAM.

Once again, the whole point of the Z-80 was to enable, cheaply, access to lots of existing software that could take advantage of the 80 column display. That was it — the Z-80 was because of CP/M… all kinds of business software available off-the-shelf. Again, the C128 wasn’t intended to be the computer for the next 10 years, but something to replace your C64 with.

The only 68K personal computer in 1984 was the Macintosh… sure, there were some 68K-based workstations, but they were an entirely different thing, requiring lots of memory (memory was VERY expensive in the C128 era, and sometimes even in short supply) and hand-made UNIX-style MMU solutions and hard drives… not an option in a $500 personal computer yet. The 68K was a good answer to the question of a next-generation system, but it made absolutely no sense just dropped, without software, into an old architecture.

And of course, the 68008 ran everything the 68000 did at half-speed. So your 8MHz 68008 wouldn’t necessarily outperform a 2MHz 6502 on important tasks. The 68008 made some sense for embedded projects, where it had the advantage of more memory (up to 1MB), and code development was largely new for each project (it’s certainly much faster to write for a 68K in C than a 6502 in assembly).

Not that the Z-80 was fast, either… it wasn’t. But it was also a gateway to more software. The CP/M system on the C128 worked very well… I had a system set up with three 1571s, a good monitor, modem, etc. Kept using it for a while even after I got my A1000.

OTOH, on the QL I used Quill, Abacus, Easel and Archive. Well, mostly Quill and Easel.

Contrary to your theories, they worked quite well.

The first versions that came out of Psion straight from the compiler were unusable on a 128K machine with microdrives, but a few months later we got versions that were quite fine.

Even on a CPU with 16-bit ISA opcodes, 32-bit registers and an 8-bit external bus. On heavily burdened 8-bit internal “slow” RAM.

And I think even just two 1.44MB floppy units could compare with those 3 x 1571 just fine…

So what SW jewel on CP/M was so worth keeping to go through all this?

“And of course, the 68008 ran everything the 68000 did at half-speed.”

This is not even remotely true. If you run math-heavy MUL/DIV code on, say, an Atari ST with an unimpeded 68000 at 8MHz and on a QL with a 68008 at 7.5MHz, you’d get maybe a 20% difference on average.

The 68008 did instruction fetches at 50% of the speed of the 68000. If the CPU had to think for a few cycles before doing the next memory access, the 68000’s speed advantage starts to diminish.
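That argument can be put as a simple model: execution time is internal "thinking" clocks plus memory-access clocks. A 16-bit word costs one 4-clock bus cycle on the 68000 but two 4-clock byte cycles on the 68008. The two example instructions use figures loosely based on the 68000 timing tables (a memory-to-memory `MOVE.L` is all bus traffic; a worst-case `MULU.W` is almost all internal work). Treat the numbers as assumptions and use Motorola's published 68008 tables for real timings.

```python
CLOCKS_PER_WORD_68000 = 4  # one 16-bit word per 4-clock bus cycle
CLOCKS_PER_WORD_68008 = 8  # the same word takes two 4-clock byte cycles


def clocks(internal: int, bus_words: int, clocks_per_word: int) -> int:
    """Simplified model: internal clocks plus memory-access clocks."""
    return internal + bus_words * clocks_per_word


# (internal clocks, 16-bit words moved): illustrative figures, not datasheet values.
EXAMPLES = {
    "MOVE.L (A0)+,(A1)+": (0, 5),     # pure memory traffic
    "MULU.W D0,D1 (worst)": (66, 1),  # mostly internal work
}

if __name__ == "__main__":
    for name, (internal, words) in EXAMPLES.items():
        t0 = clocks(internal, words, CLOCKS_PER_WORD_68000)
        t8 = clocks(internal, words, CLOCKS_PER_WORD_68008)
        print(f"{name:22} 68000 {t0:3} clk, 68008 {t8:3} clk, ratio {t8 / t0:.2f}")
```

Under this model the bus-bound move takes twice as long on the 68008 (20 vs 40 clocks), while the multiply is only about 6% slower (70 vs 74). This is consistent with both "half speed" and "maybe 20%" being true for different code mixes. Clock-rate differences between machines are not modelled.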

” So your 8MHz 68008 wouldn’t necessarily outperform a 2MHz 6502 on important tasks.”

Really? I suppose it depends on the definition of an important task. How about 16-bit multiplication? Or maybe indexed access into, say, a 16-bit table?

How about PC-relative (position-independent) code? Or 32-bit rotations/shifts etc.?
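To make the multiplication point concrete: the 6502 has no multiply instruction, so a 16×16→32-bit multiply is a software loop of shifts and conditional adds, while the 68000 does it with a single `MULU.W`. The Python below sketches the shift-and-add idea such 6502 routines use. The structure is simplified: real routines typically shift the partial product right rather than the addend left. It is not taken from any particular ROM.

```python
def mul16_shift_add(a: int, b: int) -> int:
    """Unsigned 16x16 -> 32-bit multiply by shift-and-add, the technique
    6502 code uses in place of a hardware multiply instruction."""
    if not (0 <= a <= 0xFFFF and 0 <= b <= 0xFFFF):
        raise ValueError("operands must be 16-bit unsigned")
    product = 0
    addend = a           # multiplicand, shifted left one place per round
    for _ in range(16):  # one round per multiplier bit
        if b & 1:        # on a 6502: LSR the multiplier, then test the carry
            product += addend
        b >>= 1
        addend <<= 1
    return product & 0xFFFFFFFF


if __name__ == "__main__":
    print(hex(mul16_shift_add(0xFFFF, 0xFFFF)))
```

On the 6502 each of those 16 rounds becomes several 8-bit shift, rotate and add instructions spread across multi-byte values, which is the gap the comment is pointing at.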

Not only that, but:

– I did a sort of “turbo” mod, first on one of the Atari STs, then on the QL.

Basically, I switched the CPU clock from 8 to 16 MHz for the time it was inactive on the bus, and back to 8MHz the moment the CPU was about to start a bus cycle.

On the ST this gave me something like an 80%+ speedup on math benchmarks and a third to a half of that on graphics routines (lines, windows, blitter routines etc. etc.).
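One way to read those numbers is Amdahl's law. If bus cycles stay at 8MHz and only the fraction of run time the CPU spends off the bus runs at double speed, the overall speedup is 1 / ((1 − f) + f/2). The sketch assumes clock switching costs nothing, which a real mod won't quite achieve, and inverts the formula to see what an observed gain implies. Function names are illustrative.

```python
def speedup(f_off_bus: float, factor: float = 2.0) -> float:
    """Amdahl-style estimate: only the off-bus fraction of the original
    run time is accelerated by `factor`."""
    return 1.0 / ((1.0 - f_off_bus) + f_off_bus / factor)


def implied_off_bus_fraction(observed: float, factor: float = 2.0) -> float:
    """Invert speedup(): the off-bus fraction needed for an observed speedup."""
    return (1.0 - 1.0 / observed) / (1.0 - 1.0 / factor)


if __name__ == "__main__":
    f = implied_off_bus_fraction(1.8)  # the reported 80%+ math-benchmark gain
    print(f"off-bus fraction needed for 1.8x: {f:.2f}")
```

By this estimate, an 80% gain means the math code kept the CPU off the bus roughly 89% of the time, and no mix can exceed 2× with a doubled clock. Graphics routines hit memory far more often, which fits their smaller gains.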

Speeding up the QL was much easier, since the CPU is not that tightly synchronized with everything else. Unfortunately, ULA1 needs to be in sync with the CPU to access slow RAM or I/O registers due to some (unavoidable?) compromises, but that was easily solvable.

And that was unnecessary for access to external fast RAM, ROM etc.

So I routinely OCed my 68008 to 32MHz for fast RAM and Flash.

Nearly every major business program of the day ran on CP/M. That was the main attraction. All that stuff that got ported to MS-DOS started out on CP/M. And CP/M was actually way more system-independent than MS-DOS… the C128 ran pretty much anything the Kaypro could run. So that was instantly a thousand+ professional programs that ran on the 80-column display. And most were already discounted, because the serious business users had paid their $5,000 for an IBM PC by then, so all the CP/M stuff had been dropped in price. But still supported.

That was kind of ironic, too, because most CP/M machines ran rings around the original 4.77MHz IBM PC. Even the PC-AT was kind of a wash at that point… not talkin’ the C128’s 2.08MHz Z-80 here, but the systems that had pushed business apps before IBM came along. The reason the IBM PC took over was, first, the FPU… drop an 8087 in there, and your spreadsheets went 100x faster than on a CP/M machine. Or an Apple ][. Or a CBM/PET/C64, whatever.

So, to flip it back, just what successful 68008-friendly operating system could we have launched on the C128 to deliver anything like that, on the day the C128 shipped? Building a whole new 68K OS was not an option… we already had the Amiga coming, and of course, the aforementioned C900, which hadn’t been cancelled when we started the C128.

I had a Spectrum, then an Amiga, then picked up used PC hardware to play with, since that seemed to be becoming important to know about. I had Amstrad XT clones, also played a lot with someone else’s Amstrad CP/M machine, and THEN got my hands on an original IBM PC… yes, it was tooth-pullingly slow… I swear even a software emulator on an A500 FEELS faster than an original PC… and I agree that the AT was no fireball either. I picked up one of those with a 6 MHz 286 in it, and yup, the Amstrad XTs with their oddball 8086s at, what, 8 MHz still felt MUCH faster… If you want to remember the classic DOS days fondly, pick up a Turbo XT of some kind, not a “real” IBM. It wasn’t really until you got to about a 12 or 16 MHz 286 that ATs felt much snappier on the software of the day. Sans x87.

Anyway, just supporting Dave in saying the late CP/M boxes seemed faster than the original IBM PC/XT/ATs on general-purpose work that wasn’t propped up by a math chip.

Haha! I worked on the Micro Illustrator (all those years ago) – we visited C= in Pennsylvania, and after meeting with Jack T. we had to rip up the first contract!

We also did versions of Micro Illustrator for the C= TED, the Atari 400/800 and others.

Island also did a paint system for the Amiga – I fondly remember Intuition V24.6 as the first stable development release!

cheers,

Robert

Hey, my Dad was an engineer at Zenith, and brought home computers for me to play with as well! It is _very_ likely that I used that exact same PET on our kitchen counter top back in the day…. Hunt the Wumpus?

I never got into the C128 because at that time I was given a Cromemco miniframe computer with a pair of Wyse terminals and entered the world of Unix. I actually used my C64 to break into that Cromemco, and had it just sit there and brute-force passwords for the first 10 days I owned it.

I ran a BBS with my C-64 for years. Ended up getting a SCSI adapter and a 10 MB drive for it. I learned 6502 assembly (the BBS program got too big in BASIC) and it laid the foundation for a career in software development. I never had the money to upgrade to the 128. Thank you, Bil, for the great story.

Wire Wrap to make a live demo for a show does count as Advanced Hacking.

Actually, it was the early development stuff, the stand-ins for the PLA (which wasn’t really a PLA, but we called it that because it replaced the actual PLA used in the C64 design) and the MMU. By late ’84, we had at least early revisions of most of the chips.

That really expensive 6-hour turn was for a PCB tower. There were all kinds of problems with the 8563 at that point in time. The big problem: most of them just didn’t work. It had terrible yield, but some did. Once you found those that could work, though, the problem was that they didn’t work as designed in the system. I had poked and prodded the very first ones we got in, written some code to get a display up and running on the very first silicon, and found the main problem: you could write a register, but it didn’t always take. It was much more reliable if you wrote it twice, but even then not 100%. It turned out that the 8563’s designer was originally from Texas, and there had been a few other things from Texas that had similar behavior (part of the chips in “Tragic Voice”, for one), so these got dubbed “Texas registers”. But I digress.

Bil, along with Dave DiOrio, figured out the 8563 wasn’t properly syncing between its own 6502 bus interface and its pixel clock, so they did some PLL magic to make that lock happen outside the chip… that’s what the tower did. While they were cranking out that tower, I was working along with some random managers to sort through hundreds of 8563 chips for the few that could be made to work OK.

And as Bil says, things went off like magic at CES that next week. What he didn’t tell you was how much of a dynamic process that was. So OK, if you came into the Commodore booth and played around with C128s, they all worked as they were supposed to. You could run them all day, no problem, as long as you left them doing the things they had been set up to do. But there was a little smoke and mirrors going on here. Some of the 8563s worked very well, once whipped into submission with Bil & Dave’s tower. Some... not so well; they worked, but they weren’t stable enough that you’d want to show them. Worse yet, the PLL tower had to be tuned, and it wasn’t temperature-compensated well enough. So if you shut one of these systems down and then rebooted immediately, it probably wouldn’t lock. It most likely would if you let it cool for 15-20 minutes, but that’s not something you want on the show floor.

Little of this would have been a problem, except for the fact that Marketing People kept turning off the C128s, putting some into 80-column mode that maybe were supposed to be showing C64 programs, etc. So I had a can of freeze spray and a “tweaking tool”, and I spent much of the week pushing around Marketroids and resetting C128 PLL towers.

One night that week, Bil and I were invited up to the Amiga suite, to hang with RJ Mical and Dave Needle, and get a closer look at the Amiga. They cracked the case… one of us remarked something like, “hey, we have one of those too”… they had a very similar looking tower under one of the Amiga chips. Good times!

I’d like to say thanks for the story, as well. Very interesting, cool to know ‘trivia’, thanks for sharing! A very good read.

I would love to hear a story behind Commodore Plus/4.

That was hardly the first 8-bit computer to break the 64K barrier. The Apple III (’80), then later the Apple //e (’83) and Apple //c (’84) all accomplished 128 KB or more. The C128 was an impressive machine, more than the sum of its parts, but it was also hardly the last 8-bit micro made, as the (4 MHz!) Apple //c+ came out in 1988 and the Z80-based Sam Coupé came out a year later.

The entire Apple II line could only address 64K of memory. Yes, the //c had, and the //e could be upgraded to, 128K in total. But you accessed it through bank switching, not directly from the address bus.

If bank switching counts, then one could easily claim all 8 bit micros could address megabytes of ram, assuming one could get approved for the $20k loan needed to buy the chips :P

http://commodore64.wikispaces.com/Commodore+128+Memory+Architecture

The C128 was also bankswitched. Apart from the additional I/O pins and double speed, the C128 processor was still an 8-bit CPU with an 8-bit bus. Please keep in mind I wrote a fully functional Apple //e emulator (including NTSC color emulation, mockingboard, serial and hard drive support) from scratch so I do have a vague idea of how all these things work.

I meant to add that in order to break the 8-bit barrier completely you have to have the address bus support as well as the instruction set. Even hypothetically if you did have a 6502 hooked up to a 16-bit bus, the instruction set is almost completely packed without sufficient room for a corresponding set of 16-bit capable instructions to co-exist with the 8-bit ones. The closest you can get is the WDC 65C802, which was a derivative of the 16-bit 65C816 that would work on 8-bit boards — but there again it only supports an 8-bit bus in order to be pin-compatible with the 6502.

In contrast, using an MMU to redirect address lines is a very low-cost and attractive option to support more memory and maintain backwards compatibility. The //e and //c MMUs work very similarly to how the C128 MMU works.
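To make the bank-switching idea concrete, here is a toy model, assuming a simplified MMU with a single bank-select register and a fixed “common” area at the top of memory that always maps to bank 0. The class, field names and the $F000 boundary are hypothetical; this does not reproduce the actual C128, //e or //c register layouts.

```python
class BankedMemory:
    """Toy model: 16-bit CPU addresses mapped onto a larger physical RAM.

    Hypothetical MMU: one bank-select register chooses which 64 KB bank the
    CPU sees, except for a common window at the top that always comes from
    bank 0 (so code living there survives a bank switch).
    """

    BANK_SIZE = 0x10000      # 64 KB visible to the 8-bit CPU
    COMMON_START = 0xF000    # hypothetical shared region: $F000-$FFFF

    def __init__(self, num_banks: int = 2) -> None:
        self.ram = bytearray(self.BANK_SIZE * num_banks)
        self.num_banks = num_banks
        self.bank = 0        # the MMU's bank-select register

    def select_bank(self, bank: int) -> None:
        if not 0 <= bank < self.num_banks:
            raise ValueError("no such bank")
        self.bank = bank

    def _physical(self, addr: int) -> int:
        addr &= 0xFFFF                      # the CPU only ever emits 16 address bits
        if addr >= self.COMMON_START:
            return addr                     # common area: always bank 0
        return self.bank * self.BANK_SIZE + addr

    def read(self, addr: int) -> int:
        return self.ram[self._physical(addr)]

    def write(self, addr: int, value: int) -> None:
        self.ram[self._physical(addr)] = value & 0xFF


mem = BankedMemory(num_banks=2)
mem.write(0x1000, 0xAA)      # lands in bank 0
mem.select_bank(1)
mem.write(0x1000, 0x55)      # same CPU address, different physical byte
mem.write(0xF800, 0x42)      # common area: still lands in bank 0
mem.select_bank(0)
print(hex(mem.read(0x1000)), hex(mem.read(0xF800)))  # 0xaa 0x42
```

The CPU never sees more than 64K at once; the extra memory only becomes reachable by flipping the bank register, which is why the instruction set and address bus stay 8-bit-era while total RAM grows.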

We should start a support group: the Former Apple II Emulator Writers Guild or whatever. I wrote the A2+/A2E emulator for the Dreamcast, Nintendo DS, and a few others over the years. Correct NTSC emulation drove me crazy, as did Mockingboard and hard drive / RAM drive controllers. Never got around to strapping in Z80 support, since most Apple II-specific Z80 stuff has long been forgotten, and there were more interesting CP/M platforms.

You should take a look at MESS these days, then. Has the concept of “slot devices” which allows you to specify what cards are plugged into what slots at runtime. Currently supports quite a number of cards, including the language card and a couple of cards that were never emulated by any other emulator, which was made simple by using the existing MAME/MESS chip cores.

Best article in a long time! Keep stuff like this coming!!

We’re working on it!

The Apple //c had 80-column text first… right?

I would argue that the Apple IIc was not a “home computer” and the title of “first home computer with an 80 column text mode” would go to the Amstrad CPC464. The image caption may just be worded ambiguously, though.

The BBC Micro got into many homes, and that came out a couple of years earlier than the CPC 464. The lower cost Acorn Electron too.

What did these all have in common? The very flexible MC6845, and some hackery to get graphical modes out of the beastie.

Yes, a note to the caption writer: the BBC Micro had “40 and 80 column displays” in 1981, as did the Acorn Electron in 1983 which was undoubtedly a home machine; the Amstrad CPC464 was launched in 1984.

Note that the Electron didn’t use a 6845 CRT controller but provided a 640×256 pixel 80-column display using its own specialised display/sound/input/output controller implemented using what we would now call a gate array.

The Acorn Electron was my first computer (moment of reminiscence…) and a curious fact about it is that its ULA was the biggest ever made at the time.

There were problems with the ULA not making good contact with its socket, and the LM324 used in the tape system failed slightly too often. However, it had the awesome (for its time) BBC BASIC!

Bil, Dave,

Let me leave it at this:

You guys at CBM changed the whole world of computing

For the better.

Full, pre-emptive multitasking when the big boys were still learning to walk and chew gum at the same time; Thousands of colors possible on screen all at once from the motherboard mounted graphics hardware when the big boys had what? 16, was it? Full stereo sound from that same motherboard when all the big boys could do was say “beep”.

Thank you.

Yeah, it was 16... that was the C128, too.

Amiga changed it up… that’s why we had to deliver monitors to go with the Amiga in the early days… PCs used “RGBI” monitors… a 4-bit digital color code per pixel, 16 possible colors, with the actual shades determined by your monitor. And the monophonic 8-bit Sound Blaster was the standard sound in a PC at the time… and an add-in, even at that. Stock PCs only had that built-in beeper thing.

Macs, of course, were mono-only, as were most “business” computers. In those days, the Atari 400/800 had the best color, as far as I recall, short of dedicated CAD systems. They could do up to 16 colors out of a 256-color palette, depending on the mode… lots of weird modes in those computers. Of course, in theory, any computer using CVBS or Y/C output could deliver any NTSC/PAL color. Most had other limits, though, particularly memory bandwidth… another place where going to 16-bit-wide memory buses (and running them twice as fast as your CPU did) helped considerably.
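As an illustration of how a 4-bit RGBI code turns into one of 16 colors, here is a sketch using the common CGA-monitor convention: each active primary contributes 0xAA, the intensity bit adds 0x55 to all three channels, and code 6 is darkened to brown. Since the real shades were up to the monitor, these RGB values are an assumption, and the function name is made up.

```python
def rgbi_to_rgb(code: int) -> tuple[int, int, int]:
    """Map a 4-bit RGBI code (bit 3 = I, bit 2 = R, bit 1 = G, bit 0 = B) to 8-bit RGB.

    Uses the common CGA-style convention; real monitors varied.
    """
    if not 0 <= code <= 15:
        raise ValueError("RGBI codes are 4 bits")
    i = (code >> 3) & 1
    r = (code >> 2) & 1
    g = (code >> 1) & 1
    b = code & 1
    # Each active primary gives 0xAA; the intensity bit adds 0x55 to every channel.
    red = 0xAA * r + 0x55 * i
    green = 0xAA * g + 0x55 * i
    blue = 0xAA * b + 0x55 * i
    # Many CGA-style monitors pulled dark yellow (code 6) down to brown.
    if code == 6:
        green = 0x55
    return red, green, blue


palette = [rgbi_to_rgb(c) for c in range(16)]
print(len(set(palette)))  # 16
```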

The Ataris had problems based on the lazy / genius (not sure which) way they implemented the colour, the same way as in the 2600 console.

It was all done as chroma / luma value pairs, not RGB. Some modes gave you 1 colour with 8 luminances, some had all 16 colours at 1 fixed luminance. Though ANTIC was very flexible, and you could use different modes and colours per scanline with no CPU load. But it means there’s a distinctive look to Atari 8-bit graphics if you know what you’re looking for; the limitations show through.

OTOH 128 colours back in 1979 wasn’t bad!
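Where the 128 comes from: in the normal display modes an Atari 8-bit colour register holds a hue in the high nibble and a luminance in bits 3-1, with bit 0 ignored, giving 16 × 8 = 128 colours. A small sketch of that split, assuming those standard-mode semantics (the special GTIA modes behave differently); the function name is hypothetical.

```python
def decode_atari_color(value: int) -> tuple[int, int]:
    """Split an Atari 8-bit colour-register byte into (hue, luminance).

    Assumed layout: bits 7-4 = hue (0-15), bits 3-1 = luminance (0-7);
    bit 0 is ignored in the normal display modes.
    """
    value &= 0xFF
    hue = value >> 4
    luminance = (value >> 1) & 0x07
    return hue, luminance


# All 256 byte values collapse onto 16 hues x 8 luminances.
distinct = {decode_atari_color(v) for v in range(256)}
print(len(distinct))  # 128
```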

Hold-and-Modify (HAM) mode was quite the charming thing at the time ^^
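For anyone who never met HAM: in the common six-bitplane variant, each pixel’s top two bits choose between a plain palette lookup and “copy the previous pixel, but replace one of red, green or blue”, which is how the original chipset could show up to 4,096 (16 × 16 × 16) colors with only 6 bits per pixel. Here is a sketch of decoding one scanline, assuming 4 bits per channel and that each line starts from palette entry 0 (the background); the function name is hypothetical.

```python
def decode_ham6_line(pixels: list[int], palette: list[tuple[int, int, int]]) -> list[tuple[int, int, int]]:
    """Decode one scanline of 6-bit HAM pixels into (r, g, b) triples, 0-15 per channel."""
    r, g, b = palette[0]            # assumption: each line starts from background color 0
    out = []
    for px in pixels:
        control = (px >> 4) & 0b11  # top two of the six bitplanes
        data = px & 0x0F            # low four bits
        if control == 0b00:
            r, g, b = palette[data]  # ordinary lookup in the 16-entry palette
        elif control == 0b01:
            b = data                 # hold red and green, modify blue
        elif control == 0b10:
            r = data                 # hold green and blue, modify red
        else:
            g = data                 # hold red and blue, modify green
        out.append((r, g, b))
    return out


palette = [(i, i, i) for i in range(16)]  # illustrative grayscale palette
print(decode_ham6_line([0x05, 0x2F, 0x3A, 0x10], palette))
# [(5, 5, 5), (15, 5, 5), (15, 10, 5), (15, 10, 0)]
```

Because only one channel can change per pixel, sharp color transitions smear over up to three pixels, which is the fringing HAM images were known for.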

At this time I had been a die-hard TRS-80 Color Computer aficionado but I did appreciate the advances in which the Commodore camp had triumphed. I eventually embraced, for better or worse, the Commodore Amiga line, from 16- to 32-bit both in AmigaOS and Amiga Unix.

For those who do have fond memories of the 6502, check this out: http://www.visual6502.org/JSSim/index.html

These guys actually reverse-engineered all 3,500 transistors (1/20th the size of a 68000) and have a cool simulation running in HTML5. Links to the sources, too… not suggesting it’s time for a transistor-level C64 emulator, but cool nonetheless.

Wow. I can almost still read the 6502 machine code though the memory has faded a bit after 25 years…

Well, that’s just silly! I thought the JavaScript PC emulator running Linux was far along the genius / insane line, but this just wins! Something like the Atari 2600 had simple enough support hardware; I wonder how fast they could get one emulated, transistor at a time?

I remember the C128. I got a prototype of it and wrote an article for RUN magazine. It was a great computer.

Respect. I still own 2 C64s, one of them is hooked up in my basement where I fire it up every now and then to remind me of the good old days where people like you would create magic. To this day, I struggle to understand how you did it.

Those of you who enjoyed the write-up might also like “The Soul of a New Machine” by Tracy Kidder, documenting the development of a minicomputer in the early 80’s at Data General Corp. It’s a good read.

It is. There isn’t a lot of it and even less of it that’s interesting. It’s more about the people and the atmosphere than the tech. Still a good read.