Computer animation is a task both delicate and tedious, requiring the manipulation of a computer model into a series of poses over time saved as keyframes, further refined by adjusting how the computer interpolates between each frame. You need a rig (a kind of digital skeleton) to accurately control that model, and researcher [Alec Jacobson] and his team have developed a hands-on alternative to pushing pixels around.

The skeletal systems of computer animated characters consist of kinematic chains: joints that sprout from a root node out to the smallest extremity. Manipulating those joints usually requires the addition of easy-to-select control curves, which simplify the way joints rotate down the chain. Control curves do some behind-the-curtain math that allows the animator to move a character by grabbing a natural end-node, such as a hand or a foot. Lifting a character’s foot to place it on a chair requires manipulating one control curve: grab foot control, move foot. Without these curves, an animator’s work is usually tripled: she has to first rotate the joint where the leg meets the hip, sticking the leg straight out, then rotate the knee back down, then rotate the ankle. A nightmare.
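To give a feel for that behind-the-curtain math, here is a minimal sketch of a planar two-bone solver: given where the foot should go, it works out the hip and knee rotations. It is an illustration only, not the method used by any particular animation package or by the researchers. The function names, the unit bone lengths, and the 2D simplification are all assumptions made for the example.

```python
import math

def forward_kinematics(hip_angle, knee_angle, thigh=1.0, shin=1.0):
    """Foot position from joint angles (radians), with the hip at the origin.

    knee_angle is measured relative to the thigh (0 means a straight leg).
    """
    knee_x = thigh * math.cos(hip_angle)
    knee_y = thigh * math.sin(hip_angle)
    foot_x = knee_x + shin * math.cos(hip_angle + knee_angle)
    foot_y = knee_y + shin * math.sin(hip_angle + knee_angle)
    return foot_x, foot_y

def two_bone_ik(target_x, target_y, thigh=1.0, shin=1.0):
    """Hip and knee angles (radians) that place the foot at the target.

    Targets outside the leg's reach are pulled back onto the reachable range
    along the same direction from the hip.
    """
    dist = math.hypot(target_x, target_y)
    # Clamp to what the two bones can physically reach.
    dist = max(abs(thigh - shin), min(thigh + shin, dist))
    # Law of cosines gives the knee bend.
    cos_knee = (dist * dist - thigh * thigh - shin * shin) / (2.0 * thigh * shin)
    knee = math.acos(max(-1.0, min(1.0, cos_knee)))
    # Aim the hip at the target, then compensate for the bent knee.
    hip = math.atan2(target_y, target_x) - math.atan2(
        shin * math.sin(knee), thigh + shin * math.cos(knee)
    )
    return hip, knee

# "Grab foot control, move foot": one target in, two joint rotations out.
hip, knee = two_bone_ik(1.0, -1.0)
foot = forward_kinematics(hip, knee)
```

Without the solver, the animator would be tuning `hip_angle` and `knee_angle` by hand through `forward_kinematics` until the foot happened to land in the right place.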

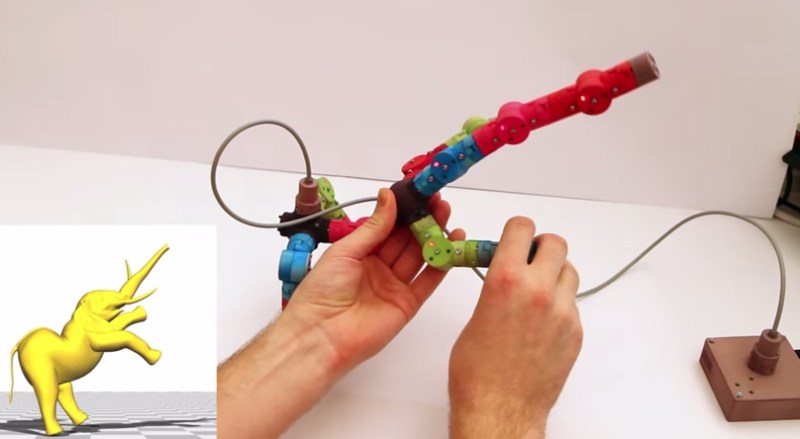

[Alec] and his team’s unique alternative is a system of interchangeable, 3D-printed mechanical pieces used to drive an on-screen character. The effect is that of digital puppetry, but with an eye toward precision. Their device consists of a central controller, joints, splitters, extensions, and endcaps. Joints connected to the controller appear in the 3D environment in real-time as they are assembled, and differences between the real-world rig and the model’s proportions can be adjusted in the software or through plastic extension pieces.

The plastic joints spin in all 3 directions (X,Y,Z), and record measurements via embedded Hall sensors and permanent magnets. Check out the accompanying article here (PDF) for specifics on the articulation device, then hang around after the break for a demonstration video.

[Thanks Sam]

One issue that stands out to me would be resetting the rigs back to specific positions. Instead of being able to save and easily restore specific frames, you’d have to manually reposition it as closely as you could. Just hope you get it right on the first take.

Though, I’m sure when combined with traditional editing this probably wouldn’t be much of a problem.

Actually this is going to be incredible as an input method to traditional editing; most of the time it is a lot easier to obtain a position with a physical model than with a virtual one.

Interesting issue you raise there.

Starting from a specific position – only known digitally – will be tricky. You can easily sort of “snap” on the digital side to get back to the old state, but then the real/virtual model will be slightly out of sync position-wise. hmm..

It would work quite well for claymation style animation. What I don’t see is proper inverse kinematics being employed, which makes it useless from that perspective.

I don’t think this would be much of a problem at all. You start with a basic position already, so you would just select a different keyframe, update the rig to match the model, then set the frame. When you need to get back to a previous position, just copy it from the previous keyframe.

This could be easily solved with a green/red LED on every joint: you choose the keyframe you want to replicate, and once you rotate the joint to the right position it will light up green.
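A rough sketch of how that per-joint indicator might be computed, assuming each joint axis reports a single angle in degrees and that a tolerance of a couple of degrees counts as a match. The joint names, the tolerance value, and the function names are hypothetical; nothing here comes from the actual device firmware.

```python
def angle_error(measured_deg, target_deg):
    """Smallest signed difference between two angles, in the range [-180, 180)."""
    return (measured_deg - target_deg + 180.0) % 360.0 - 180.0

def led_states(measured, keyframe, tolerance_deg=2.0):
    """Map each joint in the keyframe to "green" (within tolerance) or "red"."""
    return {
        joint: "green"
        if abs(angle_error(measured[joint], keyframe[joint])) <= tolerance_deg
        else "red"
        for joint in keyframe
    }

# Hypothetical readings: the hip is 2 degrees off across the 0/360 wrap, the knee is 45 off.
measured = {"hip": 359.0, "knee": 45.0}
keyframe = {"hip": 1.0, "knee": 90.0}
print(led_states(measured, keyframe))
```

The wrap-around handling matters because a joint sitting at 359 degrees is only 2 degrees away from a stored pose of 1 degree, not 358.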

Nice, reminds me of Dynamixel servos (that also come with this posing feature)

http://www.robotis.com/xe/dynamixel_en

The plus side there is that they CAN restore frames, since well, they are servos.

Standard servos can readout position as well if they’re driven by a special circuit. http://forums.parallax.com/showthread.php/84991-Propeller-Application-Proportional-feedback-from-a-Standard-Hobby-Servo-%28Upda/page2 Post #30 for the details.

Basically under program control I boost the supply impedance for the servo to ~100 ohms. Then I send a 200 µs command pulse and measure how long it takes the servo to brown out its supply. This pulse width is proportional to the internal position. Micro servos require an extra long pulse to probe as they activate the motor as soon as the internal or command pulse ends, but are just as precise. Works with any analog servo, but only if it’s not moving. (too much energy stored in the spinning motor)
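Since the measured pulse width is described as proportional to position, turning it into an angle could be as simple as a two-point linear calibration. The sketch below assumes you have timed the brown-out at both ends of the servo's travel; the calibration numbers, the 0 to 180 degree range, and the function name are made up for illustration and are not taken from the linked forum post.

```python
def make_position_estimator(t_min_us, t_max_us, angle_min_deg=0.0, angle_max_deg=180.0):
    """Build a function that maps a measured time (microseconds) to an angle.

    t_min_us and t_max_us are the times measured with the servo parked at
    angle_min_deg and angle_max_deg respectively (hypothetical calibration).
    """
    if t_max_us == t_min_us:
        raise ValueError("calibration points must differ")
    scale = (angle_max_deg - angle_min_deg) / (t_max_us - t_min_us)

    def estimate(t_us):
        # Linear interpolation between the two calibration points.
        return angle_min_deg + (t_us - t_min_us) * scale

    return estimate

# Made-up calibration: 1000 us at one end stop, 2000 us at the other.
estimate_angle = make_position_estimator(1000.0, 2000.0)
midpoint = estimate_angle(1500.0)
```

Each servo would likely need its own calibration pair, since the timing depends on the individual unit and the supply impedance used.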

Pretty sweet. How open source (if any) is this?

Minecraft doesn’t need advanced animations yet it’s more fun and has made more money than any AAA game.

orly? it has made more money than WoW or LoL?

And games are totally the only medium that could ever make use of 3d modelling.

As someone who enjoys Minecraft I have to say the sheer stupidity of this comment is mind boggling.

Minecraft? All those fancy 3D graphics! You don’t need that! Tetris has sold 3 times as much!

Tic Tac Toe! Over a trillion games played!

For perspective, Minecraft made $101M in 2012 (source: http://venturebeat.com/2013/02/01/minecrafts-notch-on-earning-101m-in-2012-its-weird-as-f/ )

CoD: Modern Warfare 2 made $310M on *release day* (source: http://en.wikipedia.org/wiki/Call_of_Duty:_Modern_Warfare_3 )

This isn’t a criticism of Notch or Minecraft, and I’m not saying Minecraft hasn’t been a fantastic success. Obviously, CoD had a vastly larger budget and is splitting that revenue between many more people. The truth is that indie games and big-name sold-in-stores titles exist in basically different worlds that operate under very different rules.

It’s also important to understand how the actual gameplay of Minecraft dovetails with the narrative of its development. It rose to popularity, at least initially, on word of mouth, so potential players were entirely aware that MC was literally a one man project for most of its history. The brilliance of this narrative is that the game itself is focused on creation, so Notch’s story lures in the very people the gameplay appeals to. This is a perfect setup for presenting the technical rough edges as part of the charm rather than a shortcoming. IMO this is the more important problem with amgi’s post, beyond just containing an obvious factual error. His implicit thesis, that games don’t need graphics beyond swinging block-arms, is premised on a fundamental failure to understand not just how the industry works in general, but how Minecraft in particular succeeded.

It’s also possible we all got trolled.

This looks like it could be used for live action animation motion capture, if you have the sensors attached to a person, instead of that setup with all the dots and multiple cameras that they use nowadays.

I agree, its best application is as a mocap suit. You would need to make it wireless, and I don’t know how you would handle the head/neck.

I think an individually addressable servo-based design would be better, since you could “undo”.

This could be useful for robotic movement training.

Phil Tippett devised a system like this (minus the pose restoration) way back on Jurassic Park.

http://mediacommons.futureofthebook.org/tne/sites/mediacommons.futureofthebook.org.tne/files/images/oliver_dinosaur_input_device.preview.jpg

It sounds really great. Is there a possible integration with Maya?