Moonpig is a well-known greeting card company in the UK. You can use their services to send personalized greeting cards to your friends and family. [Paul] decided to do some digging around and discovered a few security vulnerabilities in the way the Moonpig Android app communicated with the company's API.

First of all, [Paul] noticed that the system was using HTTP Basic authentication. This is not ideal, but the company was at least using SSL encryption to protect the customer credentials. After decoding the authentication header, [Paul] noticed something strange. The username and password being sent with each request were not his own credentials. His customer ID was there, but the actual credentials were wrong.
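For context, HTTP Basic authentication (RFC 7617) sends an `Authorization` header containing the word `Basic` followed by the base64 encoding of `username:password`. Base64 is an encoding, not encryption. That is why [Paul] could read the credentials straight out of the header, and why TLS is the only thing protecting them in transit. The minimal Python sketch below uses made-up credentials (`app-user` / `shared-secret`). They are placeholders, not the values Moonpig's app actually sent.

```python
import base64


def encode_basic_auth(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value from a username and password."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_auth(header_value: str) -> tuple[str, str]:
    """Split an HTTP Basic Authorization header value into (username, password)."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic authorization header")
    # The payload is just base64("username:password"); no secret key is involved.
    decoded = base64.b64decode(encoded).decode("utf-8")
    # Split on the first colon only: usernames may not contain ':', passwords may.
    username, _, password = decoded.partition(":")
    return username, password


# Hypothetical shared credentials, for illustration only.
header = encode_basic_auth("app-user", "shared-secret")
print(header)
print(decode_basic_auth(header))
```

[Paul] found the same pair on both of his accounts. The credentials therefore identified the app rather than the customer, and anyone who decoded a single header learned them.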

[Paul] created a new account and found that the credentials were the same. By modifying the customer ID in the HTTP request of his second account, he was able to trick the website into spitting out all of the saved address information of his first account. This meant that there was essentially no authentication at all. Any user could impersonate another user. Pulling address information may not sound like a big deal, but [Paul] claims that every API request was like this. This meant that you could go as far as placing orders under other customer accounts without their consent.
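The underlying bug is usually called an insecure direct object reference. The server trusted the customer ID supplied in the request instead of deriving it from credentials unique to that customer. The sketch below contrasts the two patterns in Python. Everything in it is hypothetical and does not reflect Moonpig's actual API: the `ADDRESSES` store, both function names, the `Forbidden` exception, and the sample IDs and addresses. It also assumes the server has already verified per-user credentials, which the shared login described above could not provide.

```python
class Forbidden(Exception):
    """Raised when a caller asks for another customer's data (hypothetical)."""


# Hypothetical in-memory data store keyed by customer ID.
ADDRESSES = {
    1001: ["1 High Street, London"],
    1002: ["2 Low Road, Leeds"],
}


def get_saved_addresses_vulnerable(requested_customer_id: int) -> list[str]:
    # Vulnerable pattern: trusts whatever customer ID the client sends.
    return ADDRESSES.get(requested_customer_id, [])


def get_saved_addresses(authenticated_customer_id: int, requested_customer_id: int) -> list[str]:
    # Safer pattern: authenticated_customer_id is assumed to come from per-user
    # credentials the server has verified, never from the request body.
    if requested_customer_id != authenticated_customer_id:
        raise Forbidden(
            f"customer {authenticated_customer_id} may not read customer {requested_customer_id}"
        )
    return ADDRESSES.get(requested_customer_id, [])
```

With the vulnerable version, any caller can read customer 1001's addresses by passing `1001`. With the checked version, `get_saved_addresses(1002, 1001)` raises `Forbidden`. The same check would need to sit in front of every API method, including the ones that place orders or return card details.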

[Paul] used Moonpig’s API help files to locate more interesting methods. One that stood out to him was the GetCreditCardDetails method. [Paul] gave it a shot, and sure enough the system dumped out credit card details, including the last four digits of the card, the expiration date, and the name associated with the card. These may not be full card numbers, but this is still obviously a pretty big problem that would be fixed immediately… right?

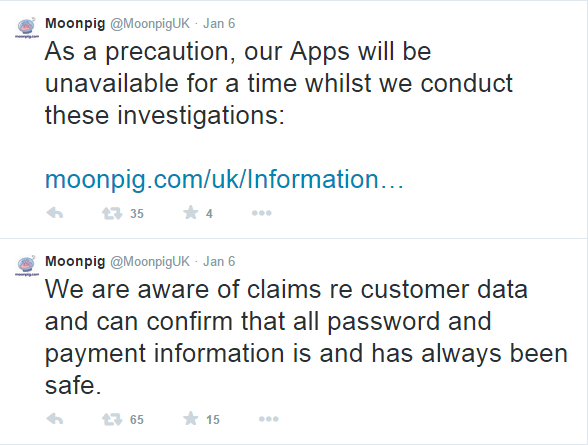

[Paul] disclosed the vulnerability responsibly to Moonpig in August 2013. Moonpig responded by saying the problem was due to legacy code and it would be fixed promptly. A year later, [Paul] followed up with Moonpig. He was told it should be resolved before Christmas. On January 5, 2015, the vulnerability was still not resolved. [Paul] decided that enough was enough, and he might as well just publish his findings online to help press the issue. It seems to have worked. Moonpig has since disabled its API and released a statement via Twitter claiming that, “all password and payment information is and has always been safe”. That’s great and all, but it would mean a bit more if the passwords actually mattered.

I hope this guy printed out all his correspondence with them, where he was trying to be helpful and disclose the bug. It is pretty sad that businesses do not take this stuff seriously until you pull their pants down in front of the world. Serves them right for KNOWINGLY exposing personal info for over a year.

If only there was an edit button to fix all the autocorrect typos….

We don’t need that here, HaD tried alternative comment systems and nobody liked it.

It’s a constant worry to me that yet another site will adopt some third-party crap, including tracking and censoring, as its comment system. Like the annoying Disqus, or worse still, Google’s. Because that means I have to forget about yet another site and find another place to waste my internet time, until the day there are none left and I have to forget about comments altogether.

It also helps that people can’t go back and edit out stupid things they say once they get called up on it.

Most people here are intelligent enough to read past typos/spelling/grammar errors and focus on the actual point of a post.

..or at least be more careful before hitting the “POST COMMENT” button.

I stopped using autocorrect soon after first enabling it in an application. It wasn't that helpful in the long run, and I haven't enabled it in any application since. I do tend to depend too much on applications highlighting errors, though.

A friend of mine once found a very similar bug in our university’s student registration system and brought it to the attention of the university staff. A kind gesture, no? No. They almost expelled him and made sure that he was not welcome to come back for a master’s degree. Nothing much a malicious person could do with SSNs and the ability to add and drop classes.

This is bad on both sides.

Moonpig are complete idiots for having such a system.

The security researcher should Not have given them that much time.

Let's hope they (MP) can get their act together.

“The security researcher should Not have given them that much time.”

Security researchers are routinely threatened with criminal prosecution, so an over-abundance of caution and a consultation with legal experts is probably a good idea before making accusations of incompetence.

Could it be a variation of the Streisand effect? It lets the public know about an issue that can embarrass the company.

http://en.wikipedia.org/wiki/Streisand_effect

you’re not exactly proving your point if you have to post the link

I think it’s more of a name-and-shame effect. It seems a lot of corporations don’t intend to do anything unless it will affect their bottom line. Fixing it would cost time and money, so it would negatively impact their profits. If fixing it meant they profited, then I’m sure they would have been all over it like a pig in slop. What happens more often is that stupid support people rarely think a problem is as big as it really is, so the report probably never went anywhere because they didn't understand either the problem or its scope.

I think it’s more that these kinds of problems reflect a broken organizational structure–if they had a system capable of recognizing that the devs were incompetent the problem would never have happened. It’s entirely possible the company as a whole just didn’t care, but applying Hanlon’s Razor here I think the reasonable conclusion is that name and shame is the only way to bypass the communication/management breakdown and get someone with actual authority to intercede.

The trouble in the real world is that often “the devs” are an entirely separate entity, outsourced, or “downsized” once the job was done. Those organisations that manage to hang on to good developers are few and far between. Everything is done for a quick buck, crank out the code, get the orders in, security is an afterthought.

Even the large financial institutions play these games.

“Security fixes” often involve loosely taping shut the stable door long after the horse has left, if they bother to fix anything at all. 4-digit PIN on your bank card, unencrypted transactions, credit card details left on web servers… don’t kid yourself that this is a particularly unique problem. Good security costs money, so it is a balancing act: pay good developers and take a few more months with the development cycle, or get it out the door fast and fix the issues later.

In this case it is completely wrong. The devs in question are not outsourced. I know a couple, and their response is, “I cannot talk about this…”

NO, it is NOT a problem with “lazy developers”. You might as well blame bad fast food on the cashier. Developers DO WHAT THEY ARE TOLD TO DO; the problem is MANAGEMENT.

This is wrong. Good devs, assuming they recognize security issues, talk to the managers and usually refuse to implement it that way. This leads to discussions that take up most of the development time and end with either the devs getting fired, the managers getting fired, or the product getting better. I've seen all three possible outcomes, and it's the third most of the time. So this is a problem of communication.

The problem is shortsightedness. Sure, it would cost money to fix, but now their API is down (losing sales) and they still have to fix it. Save now, lose later: the hallmark of poor management.

It’s not shortsightedness either; for the small business owner it is simply a badly calculated risk. What is the right amount of time to spend on security? How much money should go on door locks and alarm systems? These are calculated risks, and people simply don’t have the data available to make a proper risk assessment.

Should anyone discount the significance of knowing the last 4 digits of a credit card and the associated mailing address, I would suggest they read about the Mat Honan attack.

I’ve seen receipts with only the last 4 digits blanked out. If you have one of those, knowing the last 4 is quite useful!

The question is, does the system require you to type the full credit card number, or are the last four digits plus the rest of your info enough to order something? If the answer is yes and the card is already on record, then no matter how the number is hidden, the verification process would allow the order.

Net-savvy user sends security issue to website. Website management can’t understand what has been spelt out to them in the simplest terms, so it chooses to do nothing and hopes the problem (savvy user) goes away. Nothing new here; this is just how the non-secure internet works. It is only interesting to those who have been deluded into believing that there is some level of security inherent in the internet. There’s not. Just like everything else, it’s mostly advertising and marketing. Security costs what website operators won’t pay … until they have been hacked. Don’t believe me? Look at a “small” company like Sony!

As a HAD member, I would much rather NOT be associated with this sort of hacking. Mainstream media can’t distinguish between black hats (criminals) and white hats (security advisers). To them it’s all hacking, and a different sort of hacking from what Hackaday represents, so please let’s NOT blur the line further.

That line you don’t want to blur is already at quantum-waveform-function levels of blur.

The types you mention already think of you as an evil wizard capable of all the magics, just for knowing how to do things they can’t like “change a tire”, “plug appliance into wall outlet”, matching up the +/- symbols on a battery and the thing you’re jamming the battery into, or using a screw driver/solder iron/computer without setting yourself aflame or poking out an eye :P

The white/black hat battle is far past lost

“The white/black hat battle is far past lost”

yes indeed that’s why online vendors like ebay and amazon lose so much money to hackers every year, oh wait, you are in your fantasy world, sorry

I am a security researcher, hunting for security vulnerabilities is part of my job, I’m responding to your comment JUST so you and your comment can be linked to me.

“those who have been deluded into believing that there is some level of security inherent in the internet, there’s not.”

TELL US MORE about the billions and billions of dollars that amazon loses to hackers every year, oh yeah right it doesn’t happen

Well is that because there is security that is ‘inherent’ to the internet or because they have a lot of staff just like yourself to ‘fix’ the problems?

It sounds to me like your work has been almost exclusively with web-based technology and you don’t have any other point of reference as far as security goes. I was working in security as a network maintenance engineer long before we had an internet, so from that point of reference, I maintain my assertion that there is no security that is ‘inherent’ to the internet.

If you disagree then I challenge you to present absolutely any aspect of internet technology that cannot be hacked!

This is why the time-to-disclosure rules set up by teams such as Google’s security research team are important. If there is no real incentive to fix or address the issue, the company won’t. Yeah, it bites back sometimes when companies forget or choose not to respond in a timely manner, but responses to disclosures like this one from Moonpig show time and time again that there needs to be a real stick involved.

if banks really cared about you and your money they would conduct security audits on their merchant account holders, but no

It’s a bit sad to see this kind of behaviour of companies.

I was at a security conference a couple of weeks ago. There, the Dutch national cyber-security center explained that they are now actively promoting a “responsible disclosure policy” to companies in the Netherlands.

In short, it’s a deal made between the person who finds the vulnerability and the company: the finder promises not to exploit the vulnerability, and the company promises to follow up on the problem and even tell the finder whether and how it was fixed. See the text here: http://responsibledisclosure.nl/en/

The cyber-security center itself even has a public “hall of fame” for people who reported vulnerabilities: https://www.ncsc.nl/english/current-topics/wall-of-fame.html

This looks like a much more sensible approach than what this company chose to do.

BTW. Perhaps not a bad idea for the “hackaday” website too ! :-)

“Perhaps not a bad idea for the “hackaday” website too ! ”

yes indeed if you make a point of punching in your credit card numbers and your banking information into blog web sites.

Hi F,

So it is OK to hack into Hackaday and change your posts, or add sexist, racist or other comments?

Kristoff

I’m no expert, but those suggested guidelines don’t put any real expectations on the business with the vulnerable website, other than “We will respond to your report within 3 business days with our evaluation of the report and an expected resolution date”. There is no recourse for the person submitting the report if the resolution date isn’t met, other than sitting on the information they have. The moral of the entire story so far seems to be: even if you have the skills to do so, don’t test the websites you do business with, to protect yourself. Because if you think there’s a problem, the only thing you can do is not patronise the company; even warning family and friends could lead to problems. I hope those T-shirts say “I potentially saved others from losing a lot of money, and all I got was this lousy T-shirt”.

Hi Static,

Well, the idea, as explained by the person from the Dutch cybercrime agency, is just “to show that you will take the issue seriously and responsibly”.

Imagine this: you are a company, say, selling shoes over the internet. Your knowledge is about designing, manufacturing and distributing shoes. The internet and the webshop are just tools to do this, so you let somebody else (an internet e-commerce company) handle them.

Then, out of the blue, you get an email: “I have found a hole in your website through which all data of your customers, including the credit card numbers, can be extracted. I can help you.”

The first time this happens, the person at that company is probably going to have a heart attack. “Oh no, we are hacked!!!” Considering what you see in the newspapers about hackers, etc., the first thing most companies do is run to the police (or ignore it).

On the other side, imagine what happens to the person who reported the problem: “I was nice enough to report a possible issue, and they send the police after me.” As Rob also reported below, the law in some countries does not always distinguish between hacking a website for malicious reasons and doing it to show or prove an issue you want to report. So the last thing he or she wants is to get into legal trouble just for helping somebody else.

So what the “responsible disclosure policy” does is show the person who has found the vulnerability that the case will be handled responsibly and with mutual respect. Nothing more, but also nothing less.

You are correct, it is not a “trade contract with binding clauses for guaranteed delivery of services”. But that’s not its goal. Consider it a tool to facilitate the first contact between the reporter and the company.

Kristoff

I’m surprised the crony capitalists haven’t tried to pass legislation criminalizing what Paul and others need to do to discover that something may be awry and potentially harmful to the public, and to double-check it. I believe some agricultural states have made it a crime to take employment at farms and food processing facilities for the express purpose of confirming or discovering practices that aren’t safe for the general public.

That’s the case here in Australia. It’s a crime to circumvent security measures irrespective of the reason for doing so. If I security test a web site / server with full authorization from the owner then I can spend a long time in jail.

If I were caught testing the security of my own servers I could be imprisoned, how ridiculous is that!

If I know the IP and address / ID of an active hacker – I dare not tell anyone out of fear of prosecution.

Consequently, money lost through cybercrime is a huge issue here in Australia. Our stupid laws have made it a hacker haven.

he should have used their service to send them a card, sorry about your haxxed API.