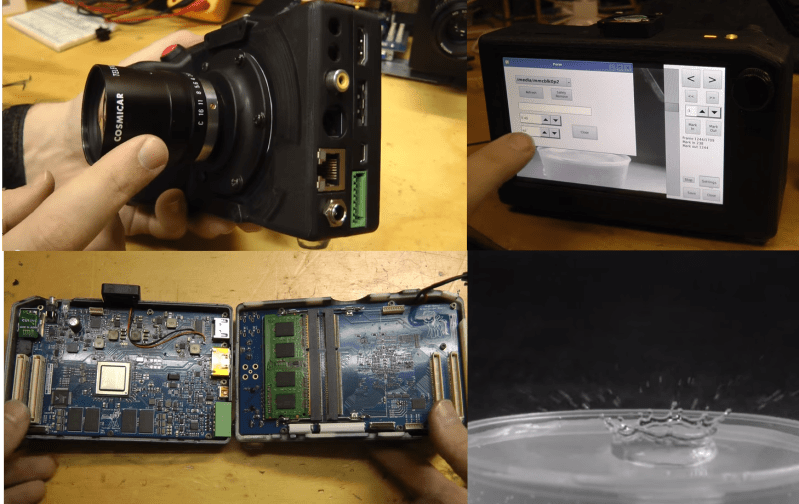

[Tesla500] has a passion for high-speed photography. Unfortunately, high-speed video cameras like the Phantom Flex cost tens or even hundreds of thousands of dollars. When tools are too expensive, you do the only thing you can: you build your own! [Tesla500’s] HSC768 is named for the data rate of its image sensor. 768 megapixels per second translates to about 960 MB/s because the ON Semiconductor Lupa1300-2 image sensor uses a 10-bit pixel format.
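The 960 MB/s figure is plain arithmetic: 768 million pixels per second times 10 bits, divided by 8 bits per byte. It only holds if the pixels are packed tightly, with no padding out to 16 bits. The Python sketch below checks the number and shows one way to pack four 10-bit pixels into five bytes. The function names and the MSB-first bit order are illustrative assumptions, not the Lupa1300-2's actual output format or HSC768's firmware.

```python
# Sketch: why 768 megapixels/s of 10-bit pixels is about 960 MB/s,
# and how tightly packed 10-bit pixels fit into bytes.
# Helper names and bit order are hypothetical, chosen for illustration.

def data_rate_bytes_per_s(pixels_per_s: float, bits_per_pixel: int) -> float:
    """Raw data rate in bytes per second for a given pixel rate and bit depth."""
    return pixels_per_s * bits_per_pixel / 8


def pack_10bit(pixels: list[int]) -> bytes:
    """Pack 10-bit values (0..1023) back to back, MSB first, with no padding.

    Four pixels (40 bits) fit exactly into five bytes. To keep the example
    simple, the pixel count must be a multiple of 4.
    """
    if len(pixels) % 4:
        raise ValueError("pixel count must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(pixels), 4):
        acc = 0
        for p in pixels[i:i + 4]:
            if not 0 <= p < 1024:
                raise ValueError("pixel value out of 10-bit range")
            acc = (acc << 10) | p          # append 10 bits to the accumulator
        out += acc.to_bytes(5, "big")      # 40 bits -> 5 bytes
    return bytes(out)


rate = data_rate_bytes_per_s(768e6, 10)
print(f"{rate / 1e6:.0f} MB/s")            # 960 MB/s
print(len(pack_10bit([1023, 0, 512, 1])))  # 5
```

Stored in 16-bit words instead, the same pixel stream would need 1536 MB/s, which is why tight packing matters at these rates.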

This is actually [Tesla500’s] second high-speed camera; the first, HSC80, was based on the much slower Lupa300 sensor. HSC80 worked, but it was tied to an FPGA dev board and controlled by a PC. [Tesla500’s] experience really shows in this second effort: HSC768 is a complete portable system running Linux with a Qt-based GUI and a touchscreen. A 3D-printed case gives the camera the familiar DSLR/MILC shape we’ve all come to know and love.

The processor is a Texas Instruments TMS320DM8148 DaVinci, running TI’s customized build of Linux. The DaVinci handles most of the mundane tasks, like the GUI, trigger I/O, and the SD card and SATA interfaces. The real magic, high-speed image acquisition, is handled entirely by the FPGA. High-speed image acquisition demands fast memory, and a lot of it! Thankfully, desktop computers have given us large, fast DDR3 RAM modules. However, when it came time to design the camera, [Tesla500] found that neither Xilinx nor Altera offered an FPGA under $1000 USD with DDR3 module support. Sure, they support individual DDR3 chips, but costs are much higher when building the memory from discrete chips. Lattice did have a low-cost FPGA with the features [Tesla500] needed, so a Lattice ECP3-series chip went into the camera.
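Why so much memory? At the full 960 MB/s, a RAM buffer fills in seconds. Here's a rough sketch of record time versus buffer size. The 4, 8, and 16 GiB sizes are hypothetical, since the article doesn't say how much DDR3 the camera actually carries, and the helper name is illustrative.

```python
# Sketch: how long a RAM buffer lasts at the sensor's full data rate.
# Buffer sizes are hypothetical examples, not HSC768's actual memory size.

FULL_RATE_BYTES_PER_S = 960e6  # 768 Mpixel/s x 10 bits / 8


def record_seconds(buffer_bytes: float,
                   rate_bytes_per_s: float = FULL_RATE_BYTES_PER_S) -> float:
    """Seconds of continuous capture before the buffer is full."""
    return buffer_bytes / rate_bytes_per_s


for gib in (4, 8, 16):  # hypothetical DDR3 module sizes
    secs = record_seconds(gib * 2**30)
    print(f"{gib:2d} GiB -> {secs:5.1f} s")
```

An 8 GiB buffer, for example, lasts just under 9 seconds at full rate; lower resolutions or frame rates stretch that proportionally.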

The final result looks well worth all the effort [Tesla500] has put into this project. The HSC768 can capture SXGA (1280×1024) video at 500 frames per second, or 800×600 grayscale images at 1200 frames per second. Lower resolutions allow even higher frame rates. [Tesla500] has even used the camera to analyze a strange air oscillation in his pneumatic hand dryer. Click past the break for an overview video of the camera and the hand dryer video. Both contain some stunning high-speed sequences!
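As a sanity check, each quoted mode should stay under the sensor's 768 megapixel-per-second budget. The sketch below, with hypothetical helper names, computes the pixel rate for both modes and the frame rate you'd get if the whole budget went into a single resolution. That figure is only an upper bound: it ignores row and frame readout overhead and any other limits the article doesn't describe.

```python
# Sketch: compare each quoted mode against the 768 Mpixel/s readout budget.
# The pixel rate only bounds the frame rate from above; real sensors also
# spend time on row and frame overhead, which this ignores.

PIXEL_BUDGET = 768e6  # pixels per second


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Pixels per second needed for a given resolution and frame rate."""
    return width * height * fps


def max_fps_upper_bound(width: int, height: int,
                        budget: float = PIXEL_BUDGET) -> float:
    """Frame rate if the full pixel budget were spent on this resolution."""
    return budget / (width * height)


modes = [(1280, 1024, 500), (800, 600, 1200)]  # modes quoted in the article
for w, h, fps in modes:
    used = pixel_rate(w, h, fps)
    print(f"{w}x{h} @ {fps} fps: {used / 1e6:.1f} Mpx/s "
          f"({used / PIXEL_BUDGET:.0%} of budget), "
          f"bound {max_fps_upper_bound(w, h):.0f} fps")
```

SXGA at 500 fps uses about 85% of the budget (a bound of roughly 586 fps), and 800×600 at 1200 fps uses 75% (a bound of 1600 fps), so both quoted modes fit with room to spare for overhead.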

Nice work!

But I wonder how much it costs vs. a used one.

It’s a 10-layer PCB…

5k vs 15k I believe

Concept, execution, results, 10/10. Simply stunning.

Agreed. This is excellent work. Can’t wait for a Kickstarter. It would be cool to open-source some of the components, like the 3D case and the software, to allow for customization.

this is a seriously cool and polished project

respect

I want

to break free!

to at least break even would be nice

I wonder if he could support his hobby through small-batch sales? Probably a gigantic hassle for him, though. Even a kit would be cool.

His air dryer troubleshooting got me thinking about schlieren photography, which would also let you see the oscillations. Those videos are really cool to watch.

Nice work; I would buy/build one. I agree that the jog wheel seems to provide an intuitive tactile method of input, whilst still remaining fairly precise and simple.

PS.

I did like the BTTF reference dropped in at 2:24 in the second video.

I’m very impressed with his dedication to this project over that time span, with that level of detail, and at that age. Dang it. VERY nice work. He will go far.

I’m pretty sure almost all of the 7-series FPGAs from Xilinx can support DDR3 DIMMs. Spartan-6s don’t, but the Artix-7 35Ts aren’t much more expensive ($35 vs. $15 or so). A far cry from $1000…

Also, if you can step back to DDR2 DIMMs, dirt-cheap Spartan-3s can handle them, although they’re so resource-starved compared to the 6- and 7-series parts that they’re probably not worth using.

The DDR3 routing time lapse videos are 3 years old. The decision was made before the 7 series was available.

Ah, that’ll do it, which also explains why a split FPGA+SoC was used instead of the fancy-pants all-in-one chips (the dual-ARMs + FPGA).

One of those, assuming you can get enough RAM, would make things pretty darn trivial – since you just have the FPGA stream straight into RAM, and then interrupt the SoC and you’re done, because it already has the data. Sending it over as a video stream to be encoded is pretty clever, though.

One of the problems with using Xilinx parts is the eye-watering licence costs for some of the software, so that may be where some of the $1000 comes from.

That is really awesome! I’m really interested in the total price he had to invest. Surely above $2k; the sensor alone is listed at $1.5k.

But very well done.

Now, kickstart a devkit ;)

Projects like this need to be manufactured in batches or funded by Kickstarter-style preordering, as the raw parts are getting too expensive to fund out of pocket.

My only comment is about the case: it would be nice to have a groove along the edges on both sides of the clamshell to get a better seal against dust etc. An O-ring around the display and dust covers for the connectors would be nice too.

Needs a kickstart.

Wow, incredible work. Looking forward to seeing future progress.

Here is a link to a description by someone who wrote his thesis on computer vision, including boosting normal image sensors into high-speed sensors:

http://thomaspfeifer.net/fpga_dsp_bildverarbeitung.htm

Yeah, a Zynq would be the ideal platform for this project. It even has an AXI master port accessible from the FPGA logic for DMA’ing directly to the main DDR3, which goes through the SCU to take advantage of the L2 cache. It would be killer to include an Adapteva Epiphany on the board too, like the Parallella.

Not sure about the video’s suggestion of using flash instead of DDR3. eMMC parts typically have slow write speeds of around 15 MB/s, so you would need 64 of them to hit 960 MB/s aggregate. But that would give you anywhere from 0.5 to 4 TB of capture storage: nearly 9 minutes for the former, over 1 hour for the latter.

Why not consumer SATA SSDs? Even the cheapest ones do >200 MB/s writes if all sectors have been pre-trimmed.

or Samsung’s new SM951, a PCIe 3.0 x4 SSD with 1.5 GB/s write speed?
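The storage numbers in this exchange are easy to check. This sketch uses the per-device write speeds quoted by the commenters (about 15 MB/s for eMMC, over 200 MB/s for a pre-trimmed SATA SSD, 1.5 GB/s for the SM951) and assumes perfect striping with no controller or filesystem overhead, which real hardware won't achieve. Helper names are hypothetical.

```python
# Sketch: devices needed to absorb the camera's full data rate, using the
# per-device write speeds quoted in the comments, plus capture time for a
# given capacity. Assumes ideal striping with no overhead.
import math

TARGET_MB_S = 960  # full sensor data rate


def devices_needed(per_device_mb_s: float,
                   target_mb_s: float = TARGET_MB_S) -> int:
    """Smallest device count whose aggregate write speed meets the target."""
    return math.ceil(target_mb_s / per_device_mb_s)


def capture_minutes(capacity_tb: float, rate_mb_s: float = TARGET_MB_S) -> float:
    """Minutes of recording that fit in capacity_tb (1 TB = 1e6 MB)."""
    return capacity_tb * 1e6 / rate_mb_s / 60


options = {"eMMC (~15 MB/s)": 15,
           "SATA SSD (>200 MB/s)": 200,
           "SM951 (~1.5 GB/s)": 1500}
for name, speed in options.items():
    print(f"{name}: {devices_needed(speed)} device(s)")

for tb in (0.5, 4):
    print(f"{tb} TB -> {capture_minutes(tb):.1f} min")
```

It reproduces the 64 eMMC parts and the roughly 9 minutes (0.5 TB) and 69 minutes (4 TB) of capture time; on paper, five SATA SSDs or a single SM951 would cover 960 MB/s, assuming they can sustain those write rates.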

There is a Kickstarter already; search for edgertronic. The sensor is a little pricey, about US$1700 from memory. Way cool though.

Strictly speaking… that’s a completed Kickstarter for a different product. The embedded Linux computer with the on-camera UI looks like a significant upgrade over the Edgertronic device.

wow!

That’s the kind of project I’d like to see frequently on HaD: fewer articles, more awesome.

Nice job! This is absolutely amazing work.

That looks like a really neat piece of kit. Personally, if I were putting this on Kickstarter, I’d be tempted to look into making a non-standalone version as a lower-priced alternative: no display etc., just sending the image data to a computer via USB, Ethernet, or something similar, depending on the transfer speeds necessary (I know they’d be pretty high). That would possibly mean the second board would be largely unnecessary and could be replaced by something simpler.
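How high are those transfer speeds? The sketch compares the 960 MB/s sensor rate against nominal link ceilings for Gigabit Ethernet (125 MB/s) and USB 3.0 (5 Gb/s with 8b/10b coding, so 500 MB/s). These are optimistic line-rate figures that ignore protocol overhead, and the 8 GB buffer and helper names are hypothetical, so treat the output as a rough bound.

```python
# Sketch: could a tethered, display-less version stream live, or would it
# still need to buffer in RAM and offload afterwards? Link figures are
# nominal ceilings; real throughput is lower, so these are optimistic.

SENSOR_MB_S = 960

LINKS = {
    "Gigabit Ethernet": 1e9 / 8 / 1e6,                    # 125 MB/s
    "USB 3.0 (5 Gb/s, 8b/10b)": 5e9 * 8 / 10 / 8 / 1e6,  # 500 MB/s
}


def live_fraction(link_mb_s: float, sensor_mb_s: float = SENSOR_MB_S) -> float:
    """Fraction of the full sensor rate the link could carry live."""
    return min(1.0, link_mb_s / sensor_mb_s)


def offload_seconds(buffer_gb: float, link_mb_s: float) -> float:
    """Time to copy a buffer of buffer_gb (1 GB = 1000 MB) over the link."""
    return buffer_gb * 1000 / link_mb_s


for name, mb_s in LINKS.items():
    print(f"{name}: {live_fraction(mb_s):.0%} of full rate live, "
          f"8 GB offload ~{offload_seconds(8, mb_s):.0f} s")
```

Neither link could carry the full rate live, so a tethered version would presumably still record to RAM first and offload afterwards; at these ideal rates, 8 GB takes about 64 s over Gigabit Ethernet and 16 s over USB 3.0.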

I like the interface features. It’s nice when a portable device has some of the options we normally see on desktop systems, options that commercial portable devices often unnecessarily and annoyingly reduce, oversimplify, or don’t give the user at all.

And I was astounded to see the mount. Even if I had such a CNC, I’d never use a material that could end up shedding debris onto the sensor, if I would even think of using it.

And it’s not only an impressive project; it’s also pleasing to see the maker enjoy the output so much and use it in a practical, investigative manner.

The whole thing is just pretty awe inspiring.

Oh, and he’s so right about scrolling video with the wheel. I always wanted that on my Modesto system, but it’s indeed not done in most software, and I don’t get why software developers don’t want it themselves and find a way to support it. Even software that used to decode and buffer, so that you could at least scroll smoothly, has moved to decoding on the fly, so it is no longer possible to scroll smoothly backwards, even with a wheel interface.

Modesto=desktop, no idea why it was corrected to that, or what the hell a Modesto is.

A friend of mine had this problem on her Android phone. I fixed it by changing the secondary spell check language in the device’s settings. Hers was set to Italian too, for some reason, as yours appears to be. Google tells me “modesto” is Italian for “modest”…

If it doesn’t do it often, then it’s probably the secondary translation language that only kicks in when a typed work is not found in the primary language dictionary. I would guess it didn’t find the work “desktop” in its English dictionary because it would instead have expected “desk top”…

-typed work is

+typed word is

-the work “desktop”

+the word “desktop”

:)

There’s also the fps1000. Probably the device he was referring to in the video.

what is causing the negative ghost image in the pneumatic hand dryer video after 8:32?

why a bronie?? look at that pcb!

I don’t know if it was clear from the first video, but I think he likes the jog-wheel?

You can skip all that patents crap and simply have a lens that projects the LCD from the phone onto a surface. The LCD and backlight are already built into a phone, so you just need a clip-on lens and no electronics. China can roll this out and you can pick it up at a dollar store.

Sorry, wrong thread.

A superb piece of work, a professional-looking machine producing professional-looking results. If you start selling them I wish you much success. In the meantime, I suggest that you try making some high speed film of that helpful cat who appears in the video.

Is it possible to buy the entire setup or parts of it? It would be really helpful for my lab’s experiment data collection.

Thanks