FPGA development has advanced dramatically in the last year, and this is entirely due to an open-source toolchain for Lattice’s iCE40 FPGA. Last spring, the bitstream for this FPGA was reverse engineered and a toolchain made available for anything that can run Linux, including a Raspberry Pi. [Dave] from Xess thought it was high time for a Raspberry Pi FPGA board. With the help of this open-source toolchain, he can program this FPGA board right on the Raspberry Pi.

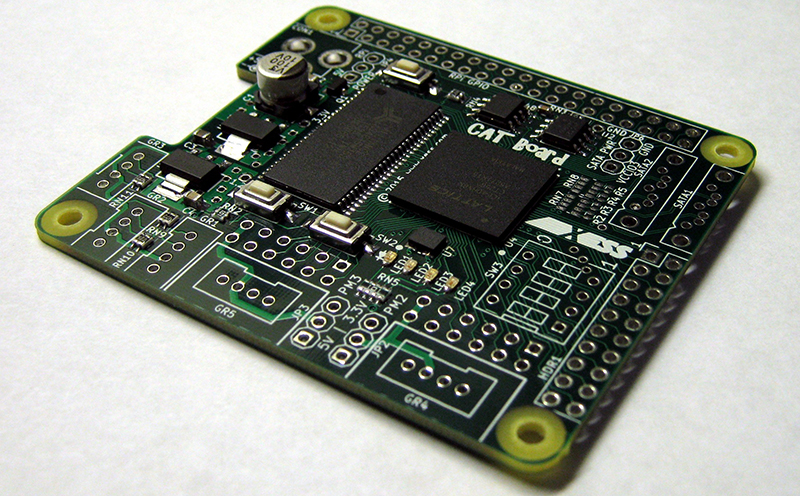

The inspiration for [Dave]’s board came from the XuLA and StickIt! boards that give the Raspberry Pi an FPGA hat. These boards had a problem: the Xilinx bitstreams had to be compiled on a ‘real’ PC and brought over to the Raspberry Pi world. The new project – the CAT Board – brings an entire FPGA dev kit over to the Raspberry Pi.

The hardware for the CAT Board is a Lattice iCE40-HX8K, 32 MBytes of SDRAM, a serial configuration flash, LEDs, buttons, DIP switches, Grove connectors, and SATA connectors (although [Dave] is just using these for differential signals; he doesn’t know if he can get SATA hard drives to work with this board).

Despite some problems with his board house, [Dave] eventually got his FPGA working, or at least the bitstream configuration part, and he can blink a pair of LEDs with a Raspberry Pi and programmable logic. The Hello World for this project is done, and now the only limit is how many gates are on this FPGA.

Stfu and take my money!!! Perfect for a flying development board!!!

I am really curious what is appealing about something like this when this is available for $55: https://www.crowdsupply.com/krtkl/snickerdoodle

(Note: I have no idea how much this thing costs…)

I think it’s a cool project and not trying to knock the project/creator at all – I’m honestly just curious. I’m sure I’m missing something and it would be great to get some honest feedback.

I’ve got to admit, at $55.00, the Snickerdoodle is a game changer for me, especially when compared to the cost of purchasing a Pi and the CAT board.

Having said this, I must admit that the two products appear to fill different, albeit overlapping, niches. Regardless, I am definitely considering being a backer of your project, but am somewhat shy about the CrowdSupply funding model, rules, and safeguards.

Anyone have any experience with CrowdSupply?

Same model: all-or-nothing, vetted projects, etc. CS works directly with creators on helping make sure product is delivered (supply chain, manufacturing, packaging, fulfillment) but otherwise the exact same – minus the fact that they exclusively have projects that involve delivery of physical goods (i.e. no “creative” projects).

Ultimately you’re backing the creators and the project, not the platform. If you believe we’ll deliver the product (which we’ve been working for two years on and I can personally guarantee you will be the case if we hit our goal) then it would be great to have you as a backer.

The snickerdoodle board seems to be based on Xilinx Zynq. The Xilinx WebPACK ISE is a multi-gigabyte download and works on x86 computers only (Windows or Linux). If you want to run the tools self-hosted on the Zynq, no such luck.

A device that can recompile and reprogram its own FPGA on the fly is a powerful concept. Think of how the GPU is used these days to accelerate certain types of computation. The compiler for the GPU is actually built into the graphics driver! In the same vein, the FPGA could become the third leg of CPU/GPU/FPGA-based “diversity computing”.

I have a couple of Parallella boards that also have the Zynq chip and I rarely use the FPGA on them for anything, just because the tools are so painful. FPGAs are just waiting for the vendor chokehold on their crappy tools to end.

Hi, I am one of the snickerdoodle.io creators. You do know that the Parallella was prototyped on a Xilinx FPGA? In fact, their entire Kickstarter campaign video consisted of one of the founders having his daughter demonstrate the capabilities of the Xilinx ZC702 eval kit, capabilities that did not require the Parallella at all. As for the Xilinx tools, our thought is that you don’t necessarily need the FPGA tools on the target if you have open-source compilers targeting a pre-built, placed/routed and verified general-purpose accelerator that has been implemented in PL.

That’s a very good pragmatic short-term solution. I’m not against that at all.

To be honest, most people won’t be developing FPGA code in Verilog/VHDL but instead rely on those few who do. But being one of the few, I would like to see the FPGA vendor’s tools getting a lot more open. And quickly!

Yes, most people know that it was, and that you still have a Xilinx FPGA on the things, esp. if you OWN one like the other party and I do. You can’t do dynamic compilation and reconfiguration of the bitstream for the fabric on it any more than you can with YOUR device. (Don’t get me wrong, I’m up for several of the boards as soon as I can lay hands on the resources for them… the low price for other things that don’t need the Adapteva components makes them ROCK.) This? You can do it from the Pi. As long as the desired task fits in the gate count of the Lattice part, you can reprogram the FPGA with a range of things, including Haskell. Again… differing goals, and I wish Xilinx and/or Altera would figure that out. Being open opens up a range of things that the x86-based stuff can’t bring to the table, and even though Lattice didn’t willfully open up, the fact that they’re open ANYHOW means a lot for a lot of things.

When platforms introduce FPGAs, expectations tend to jump unreasonably far beyond what is actually delivered with CPUs, DSPs and GPUs. Expecting the synthesis, place and route tools to be self-hosted on *any* FPGA is not logically akin to having a target-hosted C++/Python/Java/LISP toolchain ship on your Raspberry Pi.

A correct and fair analogy would be if a portable version of the billion-dollar 300mm-wafer TSMC fab was shipped with each Raspberry Pi/CPU/DSP/GPU.

That’s not what I said at all.

The GPU code gets compiled on the host CPU, in the GPU driver. The resulting binary then gets transferred to the GPU, not unlike transferring bitcode to FPGAs.

By self hosting I mean the combination of CPU+GPU+FPGA can compile/synthesize code for all of the three components. And that probably happens on the CPU, because that’s its forte. But that requires the tools to be open.

We use both Lattice and Xilinx parts in our designs: Xilinx for the complex, higher-end designs and Lattice mostly for interfacing, bridging and glue logic. I highly respect Lattice as a company; they’ve made a real effort to target the entry-level end of the market, and they have excellent documentation and lots of free and useful IP. What I’m curious about is, given that this was completely reverse engineered, how the open-source toolchain expects ever to achieve accurate timing analysis without detailed knowledge of the underlying silicon routing structure and fabrication properties. These are things that simply aren’t ever going to be presented in a manufacturer datasheet.

Someday we’ll see github commits from Xilinx, Altera, Lattice, Atmel… One can dream!

Presumably not counting these: https://github.com/Xilinx

Ha! Touché…

Next step: delay and supply current calculation algorithms

Not a bad start.

When the free version of Vivado is there, and I can download JUST the updates instead of re-downloading umpteen gigabytes (or asking for a DVD), we might be onto something.

I don’t see a compiler or bitstream uploader there; that’s all the GPL-required stuff (U-Boot, etc.) they have to release for the Zynq.

Glad they’re doing that, and willingly. Problem is… it’s not QUITE what Photon Peddler’s talking about.

Clifford Wolf and the IceStorm project have already started to document the iCE40 timing. I am not involved in this, but I believe they are deriving it from timing reports generated by the Lattice development tools. When I can get some time away from my real job, I plan to start adding timing-driven place and route to arachne-pnr. Clifford is also adding a static timing analysis pass to Yosys, so you’ll get accurate timing reports.
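Static timing analysis, at its core, propagates worst-case signal arrival times through the netlist, which amounts to a longest-path computation over a directed acyclic graph. The Python sketch below illustrates only that idea: the node names and per-edge delays are hypothetical, and it is not how Yosys or arachne-pnr implement it. A real pass also needs per-device delay models, which is exactly the data under discussion here.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def arrival_times(edges):
    """Return the worst-case arrival time at each node.

    edges: dict mapping (src, dst) -> delay in ns (hypothetical values).
    Nodes with no incoming edges (e.g. register outputs) start at time 0.
    """
    preds = {}
    for src, dst in edges:
        preds.setdefault(dst, set()).add(src)
        preds.setdefault(src, set())
    arrival = {}
    # Visit nodes so that every predecessor is processed before its successors.
    for node in TopologicalSorter(preds).static_order():
        arrival[node] = max(
            (arrival[p] + edges[(p, node)] for p in preds[node]),
            default=0.0,
        )
    return arrival


# Hypothetical path: register output -> two LUT levels -> register input.
netlist = {
    ("ff_q", "lut_a"): 0.5,
    ("ff_q", "lut_b"): 0.7,
    ("lut_a", "lut_c"): 0.9,
    ("lut_b", "lut_c"): 0.4,
    ("lut_c", "ff_d"): 0.6,
}
t = arrival_times(netlist)
print(f"critical path delay: {t['ff_d']:.1f} ns")  # 2.0 ns, via lut_a
```

Comparing the arrival time at each register input against the clock period is what turns this into a pass/fail timing report.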

I’m not sure Brian, I think Al wins as he mentioned more cats in his post :^)

http://hackaday.com/2015/10/10/open-source-fpga-pi-hat/

(although he does get points knocked off for not tagging it as well)

ValentFX already offers a Raspberry Pi / Xilinx LX9 shield called the LogiPi. http://valentfx.com/logi-pi/

Last I checked you can’t do Xilinx FPGA development without an x86 computer running their proprietary software (at least the command line utilities). I’d love to be wrong about that, though, if you know something I don’t.

The chip used in this project uses an open source FPGA toolchain.

Kinda important to have an open-source toolchain when sticking an FPGA on a Pi. It SUCKS not being able to configure the FPGA from the Pi, when the best you can do is create a bitstream using proprietary software on a regular desktop/laptop and then send it to the Pi for programming. At that point, having a Pi there to support the FPGA is useless unless it’s doing the computing-oriented stuff for the application.

With an open-source toolchain, you can put the tools to program and develop the FPGA on the Pi and do it from there. That means having a project that can support its own development, with all the ease of use associated with that. One of the reasons the Pi is so popular is that you can program a Pi on itself with a keyboard and monitor, no external programming computer required (which is especially useful out in the field).

I think this is one of the often-overlooked aspects of open source. Not only can you vet the code, which seems to be what everyone cares about these days (with good reason), but it allows you and the community at large to just add whatever functionality is lacking. This could be tools and features, or it could be an entire port to another new architecture.

Hi, M – I’d be curious to hear what functionality is currently lacking with, say, something like the Xilinx SDK/Vivado. Yes, they’re “closed” tools, but I’m not sure how much more open you’d need/want a platform to be, considering we’re talking about an FPGA – which is inherently the most “open” hardware architecture you could ask for.

And while there are many tools (*particularly* the open ones) that I’d be interested in “vetting the code” for, I’m not sure the tools used for developing the most security-sensitive systems in the world (see: defense and aerospace) fall into that category, but I’d be interested to hear your take…

They’re not quote-unquote closed, they’re quite simply closed. I don’t have the source so I can’t compile them for a new architecture or operating system, and I don’t have the documentation needed to write my own from scratch. As far as tools go, it doesn’t get more closed.

Free-as-in-freedom software is practical. The lack of FPGA development tools on Linux/ARM is an obvious case-in-point.

The functionality that’s missing is the ability to compile their toolchain from source, to be able to study it, and to be able to make derivative works.

This is particularly important if the derivative works are ones that the original author does not consider valuable. e.g. having a single toolchain that supports rivals’ FPGA chips as well as Xilinx.

Heh… The problem is that you need an x86 machine running Linux or Windows to be able to build a bitstream. With this? It can all reside on the Pi. And you don’t even need to build it with VHDL (even though you probably will…). You could, if you wanted to, do it with Haskell, etc. Kind of nice for educational purposes if nothing else.

I’m not at all trying to defend the Xilinx/Altera/Lattice business model when it comes to tools but I’d just like to point out that:

a) the HDL/IP underlying ARM is not open source, nor is the x86 architecture, which only recently could be reasonably synthesized on an FPGA

b) Raspberry Pi is not actually open source — their PCB films are not available for any of the RPi models to my knowledge and I don’t think an RPi 2 schematic has been made available yet. Also, it took 3-4 years? to get the VideoCore IV architecture manual released by Broadcom — far past the useful life for a GPU originally designed to run a Roku player

c) Why could you not have an accelerator realized in an FPGA that had fully open hardware and an open ISA? Is that not open source? I have yet to see any semiconductor manufacturer hand out the tapes/photomasks for a modern processor.

1) You need their tools to MAKE that accelerator in the first place.

2) If you’re doing the algos in C/C++, you’re going to spend megabucks on licensing for those tools.

3) All of the tools only run on X86 machines.

Sorry, you’re quite simply not grasping this. What’s a better solution for education of this subject? Needing a PC, or being able to duff about ON the target in the first place.

Also in a pure logical sense, there is no way to host an FPGA “compiler” on a pure FPGA itself — that would be like an egg cooking a chicken. Or another one I used to like throwing at professors — writing the compiler for a completely new computer language in the language itself. Never got an answer on that one….

To the latter statement: Oh, come on! That’s easy!

You first create a very bare-bones compiler for your new language in a language that is available for the architecture you have. This first compiler has no optimization and probably omits most of the advanced features of the new language. Then, compile your new compiler, written in the new language, with that bare-bones compiler and presto! It becomes self hosting. This exact thing has happened numerous times in the past.

Wanna bet? You just need a bitstream with a CPU to load first (Hint: I have several, esp. if you’ve got OKAD, that will load up just nifty fine on an LX9…)… It’s not practical… or probably even DOABLE (unless you’ve got Chuck Moore’s OKAD)… but the presumption that you “can’t” is not wholly correct.

One idea I’ve been considering is implementing a virtual FPGA on a physical FPGA. On modern Xilinx parts, about 1/3 of the logic is SLICEMs, which can be utilized as distributed RAM; in other words, lookup tables that can be modified after configuration. The rest of the logic is SLICELs, which are set by the bitstream. If you were to use the SLICELs and the opaque routing backing them to implement the virtual routing, and then use the SLICEMs for your lookup tables, you could implement an FPGA on an FPGA, at a considerable scale penalty of course. Nonetheless it can be done, which is interesting.
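The virtual-FPGA idea rests on one property: a LUT is just a small memory addressed by its inputs, so if its contents live in RAM (as with SLICEM distributed RAM), rewriting them changes the logic function without loading a new bitstream. The Python model below shows only that concept; the `Lut` class is hypothetical and says nothing about how Xilinx slices are actually wired or written.

```python
class Lut:
    """Hypothetical k-input lookup table whose contents can be rewritten,
    loosely modeling a LUT used as distributed RAM."""

    def __init__(self, k, truth_table=0):
        self.k = k
        self.table = 0
        self.write(truth_table)

    def write(self, truth_table):
        # Keep only the 2**k bits a k-input LUT can hold; this stands in for
        # rewriting distributed RAM after the FPGA has been configured.
        self.table = truth_table & ((1 << (1 << self.k)) - 1)

    def evaluate(self, *inputs):
        if len(inputs) != self.k:
            raise ValueError(f"expected {self.k} inputs, got {len(inputs)}")
        # The input bits form the address into the truth table (input 0 = LSB).
        index = sum(bit << i for i, bit in enumerate(inputs))
        return (self.table >> index) & 1


lut = Lut(2)
lut.write(0b1000)  # only address 3 (a=1, b=1) outputs 1: a 2-input AND
print([lut.evaluate(a, b) for b in (0, 1) for a in (0, 1)])  # [0, 0, 0, 1]
lut.write(0b0110)  # same LUT, new contents: now a 2-input XOR
print([lut.evaluate(a, b) for b in (0, 1) for a in (0, 1)])  # [0, 1, 1, 0]
```

In a real virtual FPGA, the routing between such LUTs would itself be built from fixed (SLICEL) logic, which is where the scale penalty comes from.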

Hello chandra.

If you have details on the distribution and component availability I would be interested (Not to mention EVERYONE ELSE HERE).

Now for the critique sandwich. Xilinx no longer manufactures anything below 7-series tech.

So the worst part is that the LX9 is the lowest model, which we can acquire via eBay at a price point of $40 USD.

The problem is that the LOGI-Pi AND LOGI-Bone (for the Raspberry Pi AAAANNNNDDD the BeagleBone Black) have SERIOUS SUPPLY ISSUES.

*ehm* Now, from what I remember, the Series 6 WebPACK supports the LX9 up to the LX45.

I do like the project above. HOWEVER, if you can give us more relevant data on the LX150 and basic support for it, WE would be grateful.

That said…*sigh* Do you have a way to bypass the FlexNet???

THAT WOULD BE A WORTHWHILE PIECE OF DATA!

Regardless of the comments above and below, we would be grateful to be able to leverage the stated issues as workarounds and solutions. Sure, we can use WebPACK or FOSS.

Could you allow us to get an SD image to load onto the proper high-level chipset via the proper simulator?

We are grateful for your contribution. That said, BETTER EXTRAPOLATION or a WORKAROUND WOULD BE GREAT!

“FPGA development has advanced dramatically in the last year, and this is entirely due to an open-source toolchain for Lattice’s iCE40 FPGA.”

Really? I heard about this open source toolchain, but I doubt it had a “dramatic” impact on FPGA development. And it’s not like it makes any real difference to the general FPGA “maker”, since FPGA tools from the big vendors like Xilinx are already free anyway – let alone that it would make any difference in the professional space. So maybe it would make a difference to some die hard “open source fanatics” who just can’t sleep at the thought of using a free battle tested tool provided by Xilinx. But that would seem a tiny minority – not close to having a dramatic impact. Do you have any proof of this dramatic advancement in FPGA development in the past year?

The only way to guarantee freedom/privacy is through open-source software and hardware. Using binary blobs (which generally remain static for years after initial development) when it comes to security is never a good idea. Companies that predominantly make hardware are generally unconcerned about security in their software toolchains.

But I have to agree with you that FPGAs are a low-risk area for security (for now at least). In 10 years’ time that may totally change, but if everything is blob-based, it will be hard to fix once that standard is ubiquitous.

Everyone seems to obsess over the ‘security’ aspect of open source (with good reason), but that has nothing to do with what’s important about this toolchain being open source. Being open source means that the software can be extended and modified to introduce new functionality, and can even be ported to a new architecture. This means that I can run the tools needed to configure the FPGA on my Pi on the Pi itself. One of the reasons for the Pi’s success is that you can program a Pi on itself, with no external equipment/computers/laptops hooked in. All I need is a screen and a keyboard, which is an especially big help in the field. Even the Pi itself isn’t entirely open source, what with the MS boot code blob at its core, but its open-source-ness has helped out in other ways.

That’s why we need to develop “the most open-source hardware and software stack ever created” at an initial cost of approximately $500MM

Open Source Silicon + Open Source FPGA tools + Open Source Soft Microprocessor + Open Source Compilers + Open Source Operating System

I would venture to say the recent bump in FPGA research and development has been from bitcoin mining.

Haha, yes, any degree of FPGA dev advancement being “entirely” due to an open-source toolchain is probably a bit of a stretch… What will *really* lead to a dramatic advancement is when tools like SDSoC and HLS are free, regardless of whether they’re open or not. Creating IP blocks and accelerating algorithms in hardware using nothing but C code? Yes please!

“at the thought of using a free battle tested tool provided by Xilinx … ”

1) Where have you seen that Xilinx Vivado is “free”? If you can say that, it’s a big, big scoop. You absolutely need a license to use it. My Vivado license is only valid for the Spartan LX9 on my microboard and that’s it; plus I won’t get any updates after one year. So it’s a LICENSE for an EVALUATION of a PARTICULAR device and for a LIMITED time. To put it another way, if next year Xilinx stops evaluation licenses, you are stuck. You’ll have to shop for a REAL license, and that’s around $3000 or $4000…

2) Xilinx Vivado is perhaps one of the largest software projects from a private company; the installer is provided on a DVD, its size is around 7.3 GB, and it needs around 60 GB on your disk (that’s not an error, it is gigabytes). You need a powerful x86 PC to run it, with tons of options everywhere.

Don’t get me wrong, I don’t have any problem with that; it’s a professional tool for professionals. Xilinx is not a charity and they have to pay for their engineers. They have fierce competitors and want to stay in the game. They do work for customers with deep pockets who don’t want to waste their time with untested solutions, and who want Xilinx’s support when something goes wrong and the project’s deadline is approaching.

But I’m just a hobbyist interested in FPGAs; I want to learn how they work and possibly make some projects of my own. If it’s just for downloading some bitstreams I don’t understand, I don’t see the point; anybody can do that.

This Lattice iCE40 solution and open-source toolchain make much more sense to me. I won’t do more with professional tools, and I suspect most here won’t either.

Yes, there is indeed a “free” license available for Vivado (free as in beer). The Vivado “WebPACK” license is available and it is not a time limited evaluation (which also exists). It is limited in the devices it can support, but it does exist. See http://www.xilinx.com/products/design-tools/vivado/vivado-webpack.html (there is also a similar free “WebPACK” license for the older ISE suite that supports your LX9 part http://www.xilinx.com/products/design-tools/ise-design-suite/ise-webpack.html).

It will of course not run on a Raspberry Pi (and even if it could run, I doubt it would be a pleasant experience – it needs a fairly beefy system and is still “sluggish” in the GUI).

One thing that is completely and wholly unaddressed with this open-source solution (aside from timing closure on unknowable silicon) is how you are going to debug these things, especially if there are issues due to underlying silicon properties. That’s about 60% of any software discipline, and now we are talking about actual hardware with an FPGA. It makes far more sense to cooperate with a company giving you free verified tools (Lattice does the same, BTW) than to reverse engineer a hack just so you can say it is open source.

The Vivado install is huge, but it’s only about 14GB for me including all the Zynq support for the snickerdoodle.io — I’m not sure where you are getting 60GB from?

I disagree. Of course I use the vendor’s tools at the moment. That’s a given. There’s no other choice at the moment.

But what I don’t use is a compiler from Intel, ARM, MIPS, Atmel or any of the other CPU vendors. I use GCC. And I can see the vendors’ names mentioned in the commit logs of GCC and related tools, so I know they support the toolchain I have chosen.

This is not the case for FPGAs yet, but it will be. Reverse engineering the bitstream will put open source on a collision course with the bottom line of these companies and force them to react. And not a day too soon.

There are a few other things on a collision course with the bottom line of most FPGA companies (see: Intel/Altera, Lattice/SiliconBlue, MicroSemi/Actel) but your point is understood.

The majority of an FPGA tool install is the device support files with models and low-level libraries. So regardless of whether it is open source or not, there won’t be much of a size saving.

60GB is tiny these days. Get over the size part. 1 or 2 TB drives are now commodity items. A AAA game can easily reach 50-60GB installed size, and it does a lot less (other than lots of game data like textures, models, etc.).

1) Free for just the VHDL stuff. Want to do C/C++ HLS? You pay dearly for it unless you want to jump through flaming hoops and accept some limitations.

2) The free stuff? It doesn’t run on the ARM platform right at the moment. THIS does.

Come on Brian, this article does not live up to its potential. There is a format:

Tell us what person X did with product Y, plug vendors and PRICES, collect swag and cash from vendors.

You always seem to forget the step involving publishing purchase prices, which is important. Many of us have already taken time to look at previous FPGA offerings and noted that we were either priced out of the market or unable to find utility at the past price points. So it is unlikely that we will follow the vendor links unless we see a price that is better than those previously listed.

Please provide price when talking about new offerings.

Couldn’t agree more, donkey. Brian was nice enough to write something up on a new Zynq dev board (snickerdoodle) we’d just launched a couple weeks ago. Article was great; the only key piece of info missing was that it’s $55 – arguably its most important “feature”…

Yes, low-cost is an important feature of a product that has previously been beyond the reach of the consumer.

I just finished reading about the Snickerdoodle on PCWorld and it looks like a fantastic product.

It’s because this is vaporware, and nothing more than a PCB with a couple of parts and a blinking LED has been shown to date. It does have some “I hope it works” to it, though. I do hope it works too. Usually these posts wait until it really does work before posting…

Any idea on the synthesis time for a real project (a bit more than blinking an LED)? A project for a Xilinx LX9 taking 30% of the logic can take ~20 minutes to synthesize on a recent x86 machine and can take ~2GB of RAM during synthesis. What about the open-source Lattice toolchain?

Well, as long as it works in several hours or less, I’m OK with that. I think being able to program the FPGA on the Pi from the Pi itself is a pretty big achievement. And who knows? Maybe someone will implement a more resource-friendly version that’s a bit more stripped down.

What about the RAM? With 1GB of RAM, some designs may fail to synthesize or slow down a lot due to SD card accesses (and kill your SD card little by little).

I have a serious proposal. If we really want a fully supported 100% open source FPGA which would be the:

“most open-source complete hardware/software stack architecture of all time”

then we would be willing to launch a Kickstarter or Indiegogo project to raise funding to purchase the IP and recruit part of the team from a recently defunct FPGA company: Tabula. They closed down in March of 2015 in San Jose, so a lot of the talent may still be recruitable if we act fast.

Here’s the plan:

1) Start with a simplified architecture targeting fewer than 200,000 LUTs at 45nm or 65nm

2) Keep the architecture simple and uniform: CLBs, BRAMs, DSP — we will have to look at the transceiver issue to see if that can be pulled in also while still maintaining the open-source status

3) Integrate Flash and DRAM in multichip packages along with offering some non-BGA packages to make utilization easier for the maker community but also appealing to professional use

4) Implement a fully open-source soft CPU architecture/ISA and a mainline Linux port to it making the entire gates to apps stack truly open-source

5) Every single thing about the technology and fabless company, including the staff, payroll, architecture, complete toolchain, designs and outputs to fab, will be continuously transparent and open source (via GitHub)

6) It will be founded as a 501(c)(3) non-profit in the San Francisco Bay Area for the benefit of the hardware community and will utilize sustainable business practices.

I would estimate that the required Kickstarter raise (which obviously can't come from commercial investors) would need to be in the range of $200MM to $500MM to make the above a reality based on prior venture capital activity in this sector.

This will be "most open-source complete hardware/software stack architecture of all time" and we will work closely with the FSF to make sure that we abide by that foundational rule.

I’m in… but anything over 1M for a Kickstarter is not going to be easy.

IGG then perhaps? https://www.indiegogo.com/projects/ubuntu-edge#/

And the open-source Death Star started to make a pretty good dent before it fizzled out: https://www.kickstarter.com/projects/461687407/kickstarter-open-source-death-star

Unlike the FLOSS Death Star, this is a real project.

Unlike the Ubuntu Edge we don’t need to break into telecom carrier market and deal with 30 different wireless standards, just purchase assets and recruit talent that already exists.

This is totally tractable and reasonable as Adapteva and nameless other small fabless semiconductor companies already demonstrate — especially with the open-source community support and funding. This is moving in the right direction since every element from the gate level up to application level would be able to be openly reviewed and modified.

Might I suggest, a good option would be to look at OpenRISC, for which there is a Linux port.

There’s other bits and pieces on opencores.org too, varying quality apparently, but it’s a start.

You do realize that companies that make programmable logic hold lots of patents. Back in the day, they said the lawsuits between Xilinx and Altera made Intel and AMD look like child’s play. Thankfully these two went on a ceasefire. It gets very hard to come up with entirely new ways of doing things when these guys have been around since the 1980s. On the other hand, some of the old expired patents could be useful. The majority of that $1M would probably be spent on CYA, researching and getting around the patents.

I would love to be a part of this (not least due to an interest in VLSI design), but I can only imagine this failing spectacularly at anything other than getting publicity. I doubt crowd funding will net HUNDREDS OF MILLIONS of dollars, and mainstream investors would laugh at us for asking without having ANYTHING to show for it yet.

If however we could show the FPGA vendors that we are willing to go to such an extreme, and make them think we could succeed, they may well open source their tools. I doubt we could scare them though.

FYI: http://www.adapteva.com/white-papers/a-lean-fabless-semiconductor-startup-model/

This is a good read to get a feel for the startup cost of designing chips. Factor in the fact that FPGA companies are at the bleeding edge and work very closely with the chip fabs. I have been told that they are usually among the first customers to try out a new process, as FPGAs are essentially a large tile of very regular blocks of logic/memory, which makes them the perfect benchmark for tuning a new process.

Like I said, this will have a lot of legal issues due to the existing patents, etc., and you would face a very steep learning curve, having to design things and support software essentially from scratch without any previous related products.

https://youtu.be/dPEdwaLQLag?t=1m9s

I’m not sure where $1MM came from — I said $200MM to $500MM which according to this adapteva link is about right. I’d lean in the $500MM direction as Tabula burned $200MM and still failed.

Somehow, I think that for $500MM we probably could address working around/with the patent issues and we aren’t proposing doing anything super cutting edge — the important part is that it is open-source.

Resources permitting, I’m in too. If you DO start something like this, I’ll be watching for it and be willing to pitch in. Seriously.

On my Xeon E3 1220, a tiny project (pretty much just a counter and Gray code generator hooked up to the LEDs) for an iCEstick (the iCE40HX1K) compiles from Verilog to a running FPGA blinking LEDs in a little under 3.3 seconds.

Is that fast enough for you? Certainly beats 20 minutes.

If you’d like to try it out, I just put it up at https://github.com/RGD2/icestorm_example

Enjoy.
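For readers who haven’t met one, a “counter and Gray code generator” boils down to a binary counter plus the standard binary-reflected Gray conversion `g = b ^ (b >> 1)`, in which consecutive values differ in exactly one bit. The sketch below is a Python model of that logic, not the Verilog from the linked repository, and the 4-bit width is an arbitrary assumption.

```python
WIDTH = 4  # assumed counter width, e.g. one bit per LED


def to_gray(b):
    """Binary-reflected Gray code: XOR each bit with the next-higher bit."""
    return b ^ (b >> 1)


def from_gray(g):
    """Inverse conversion: XOR together all right-shifts of the Gray value."""
    b = 0
    while g:
        b ^= g
        g >>= 1
    return b


codes = [to_gray(n) for n in range(1 << WIDTH)]
for n, g in enumerate(codes):
    print(f"{n:2d} {n:0{WIDTH}b} -> {g:0{WIDTH}b}")

# Each step, including the wrap-around from the last code to 0, flips one LED.
assert all(bin(codes[i] ^ codes[(i + 1) % len(codes)]).count("1") == 1
           for i in range(len(codes)))
```

In hardware the same conversion is a row of XOR gates on the counter outputs, which is why it fits comfortably in an HX1K.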

I also saw somewhere that the open-source toolchain cannot use the PLLs and block RAM. If that’s true, what is the plan for using the board’s SDRAM?

I’m guessing you map it into your design the old-fashioned way, with logic and math and stuff, until that gets sorted out.

I am not sure about PLLs (I believe those may still be a work in progress), but block RAM does appear to work already. See http://hackaday.com/2015/07/28/open-source-fpga-toolchain-builds-cpu/ which uses the 8K of block RAM to provide a Forth environment. Dave’s board will use the larger HX8K, which has 16KB of block RAM (plus the board’s external SDRAM).
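Those block RAM sizes follow from simple arithmetic, assuming the figures in Lattice’s iCE40 LP/HX family datasheet: each embedded block RAM (EBR) holds 4 Kbit, with 16 EBRs on the HX1K (the iCEstick part) and 32 on the HX8K. A quick check, worth re-verifying against the current datasheet revision:

```python
EBR_BITS = 4096  # each iCE40 embedded block RAM (EBR) holds 4 Kbit
# Blocks per device (assumed from the iCE40 LP/HX datasheet).
EBR_COUNT = {"iCE40HX1K": 16, "iCE40HX8K": 32}


def block_ram_kbytes(device):
    """Total block RAM in KB: blocks * bits per block / 8 bits / 1024."""
    return EBR_COUNT[device] * EBR_BITS // 8 // 1024


for dev in EBR_COUNT:
    print(dev, block_ram_kbytes(dev), "KB")  # 8 KB and 16 KB
```

Either way, that is kilobytes on chip, which is why the CAT Board’s external SDRAM matters for anything larger.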

PLLs are working, we have an example here: https://github.com/SubProto/icestick-vga-test

Thanks for the clarification.

Does the open-source toolchain come with an HDL simulator that can do testbenches and pre- and post-route timing? That’s a very important part of the process flow.

GHDL could be run on the Pi, and so could GTKWave. A lot depends on your testbench style. And for Verilog, maybe Icarus could be used.

I haven’t really tested them, but those are free tools. Just hack the compilation to work.
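As a rough sketch of that hack, assuming Icarus Verilog (`iverilog`/`vvp`) or GHDL is installed on the Pi, a small Python driver can build and run a testbench and leave a VCD file for GTKWave. The source and top-level names are placeholders. Note that this only gives functional (behavioural) simulation; it does not provide the post-route timing simulation asked about, which needs timing models of the part.

```python
from __future__ import annotations

import shlex
import subprocess


def icarus_commands(sources: list[str], tb_top: str, out: str = "sim.vvp") -> list[list[str]]:
    """Compile Verilog with Icarus, then run the result with the vvp runtime.

    A VCD file is only written if the testbench calls $dumpfile/$dumpvars.
    """
    return [["iverilog", "-o", out, "-s", tb_top, *sources], ["vvp", out]]


def ghdl_commands(sources: list[str], tb_entity: str, vcd: str = "wave.vcd") -> list[list[str]]:
    """Analyze each VHDL file, elaborate the testbench, run it and dump a VCD."""
    analyze = [["ghdl", "-a", src] for src in sources]
    return analyze + [
        ["ghdl", "-e", tb_entity],
        ["ghdl", "-r", tb_entity, f"--vcd={vcd}"],
    ]


def simulate(commands: list[list[str]]) -> None:
    """Run the steps in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical file names; pass the list to simulate(...) to run it.
    for cmd in icarus_commands(["counter.v", "counter_tb.v"], "counter_tb"):
        print(shlex.join(cmd))
```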

Post route timing requires full timing models of the parts in question. I have a hard time believing that you can get it without using Lattice’s code.

That might expose you and everyone using the tools to a giant DMCA/patent lawsuit, I would imagine.

It won’t be a patent lawsuit. It is not like you are making FPGAs all of a sudden. There are companies that characterize the timing of actual parts and make a model, but that requires some major funding.

You can’t really have a 100% open-source toolchain reaching the level of vendor functionality. You’ll need that if you want timing closure for any modern-day designs or interfaces that push the parts. So for any serious FPGA work, this is not going anywhere.
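To make "timing closure" concrete: for every register-to-register path, the tools check that data launched on one clock edge arrives at the next flip-flop at least a setup time before the following edge. A minimal sketch of that setup check is below; the delay values are made up for illustration, and getting trustworthy per-device values is exactly the part that needs the vendor's timing models.

```python
def setup_slack_ns(t_clk, t_clk_to_q, t_logic, t_route, t_setup, t_skew=0.0):
    """Setup slack of one path in ns; negative slack means a timing violation.

    t_skew is how much later the capture clock arrives than the launch clock.
    """
    arrival = t_clk_to_q + t_logic + t_route
    required = t_clk + t_skew - t_setup
    return required - arrival


def fmax_mhz(t_clk_to_q, t_logic, t_route, t_setup, t_skew=0.0):
    """Highest clock frequency at which this single path still meets setup."""
    min_period_ns = t_clk_to_q + t_logic + t_route + t_setup - t_skew
    return 1000.0 / min_period_ns


if __name__ == "__main__":
    # Hypothetical path: 0.5 ns clock-to-Q, 3.0 ns of logic, 3.5 ns of routing,
    # 0.4 ns setup, checked against a 10 ns (100 MHz) clock.
    print(setup_slack_ns(10.0, 0.5, 3.0, 3.5, 0.4))
    print(fmax_mhz(0.5, 3.0, 3.5, 0.4))
```

A real timing analyzer repeats this for every path (plus the mirror-image hold check, omitted here) at each voltage, temperature and process corner, which is why the underlying delay data matters so much.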

Not FPGA patents/copyrights; software patents/copyrights.

Copyrights only apply to the actual implementation in question (i.e. THEIR software and ONLY it. If you don’t copy anything directly and R-E it all…no Copyright violation is possible, PERIOD.)

Patents on software are tenuous after recent Supreme Court rulings. They’re doable, but in this case, they’re going to have a *HELL* of a time defending anything and you can move for a summary judgement on the basis they don’t meet the test criteria from the Supreme Court rulings.

Keep in mind…this is someone who has ported games to Linux professionally, done 30+ years in the industry, been a CTO and tried to file his own patents telling you this. You should confer with a licensed attorney, but in the same vein, this is NOT as big a problem as you’re making it out to be.

Lattice’s patches under a compatible license to add the aforementioned timing information would be very welcome.

In the meantime, there’s an opening for a competitor here: you provide the chips and tell us how they work, we’ll give you a free (open-source) toolchain that you can provide to your customers. Company gets toolchain for free, customers get a chip they can take full advantage of using a toolchain they can use and re-use in any manner that meets their needs.

Win win.

Yes, competitors can see it too, that’s what copyright and patents are for, to protect your implementation of the FPGA. The toolchain makers aren’t in it to make FPGAs, simply use them.

I once tried doing some development with an Altera FPGA… I gave up in disgust when I couldn’t get the Quartus toolchain working, and have since refused to have anything to do with FPGAs. The fact that there is now an FPGA that CAN be utilised using true open-source tools is starting to get me interested again.

I would suppose that if someone sat down and experimented enough inside the chip, you might be able to work out a rough approximation for the timing delays by taking elements in and out of circuit. It would probably require a fair bit of equipment and mojo, but I figure if someone can figure out how to build an atomic force microscope using piezo elements and tungsten wire, some crazy son-of-a-***** might be able to work out something usable.
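One common way to do that kind of measurement, offered here as a suggestion rather than anything the commenter described, is a ring oscillator: chain N delay elements (LUTs plus their routing) into a loop with one inversion, and the loop oscillates with a period of about 2·(N·t_d + t_fixed), where t_fixed is any fixed overhead in the loop. Building two rings of different length and comparing their frequencies cancels that overhead. The numbers in the example are synthetic.

```python
def stage_delay_s(n1: int, f1_hz: float, n2: int, f2_hz: float) -> float:
    """Per-stage delay from two ring oscillators with n1 and n2 stages.

    Model: period T = 2 * (n * t_d + t_fixed); subtracting the two periods
    cancels t_fixed, leaving t_d = (T2 - T1) / (2 * (n2 - n1)).
    """
    if n1 == n2:
        raise ValueError("rings must differ in length")
    t1, t2 = 1.0 / f1_hz, 1.0 / f2_hz
    return (t2 - t1) / (2 * (n2 - n1))


def ring_frequency_hz(n: int, t_d: float, t_fixed: float) -> float:
    """Frequency predicted by the simple model above (used for synthetic data)."""
    return 1.0 / (2 * (n * t_d + t_fixed))


if __name__ == "__main__":
    # Synthetic data: 1 ns per stage, 1 ns of fixed loop overhead.
    f5 = ring_frequency_hz(5, 1e-9, 1e-9)
    f11 = ring_frequency_hz(11, 1e-9, 1e-9)
    print(stage_delay_s(5, f5, 11, f11))
```

As the reply points out, a result like this describes one chip at one voltage and temperature, not a guaranteed figure.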

That would be observed timing over a very tiny sample, not one “guaranteed” by the vendor. The level of trust just isn’t there. As with a lot of open-source things, you might find the measurements completely out of date, applying only to rev C of the chips, and you’d be completely on your own if the vendor changes fabs or fine-tunes its fab process.

All of this jumping through hoops to get a 100% “open source” toolchain is like being vegan in the engineering world. It might be a *long term* ideal you want, but don’t let that be the stumbling block to getting the actual work done now.

A reverse-engineering hack for the iCE40 is not what I would call an FPGA toolchain in the traditional sense. Sure, you can synthesize HDL and deploy it. But it has no support or endorsement from Lattice (let’s hope they listen) and certainly isn’t anything remotely close feature-wise to Diamond, Quartus, Vivado/ISE, or Libero.

And the idea that running a development tool directly on the target is all that is needed to finally ‘unleash’ the unbridled power of this board is absurd. Lattice has code you can download and cross-compile to deploy a bit-stream to a target already. So the tool-chain just gives you synthesis on the target – which IMO isn’t that useful. It’s like saying the only reason I use an RPI is because I can build the distribution for the RPi on the RPi. Even the creators don’t do that! Even I cross compile all my application code for RPi on a 12 core Xeon and NFS mount the output directory.. because it’s slightly faster!

That seems sensible. But nonetheless I really think with all the battles around closed IP everywhere it is just a matter of time before “The most open source hardware/software stack of all time” (see above) becomes reality.

I’m going to test the interest level — the Ubuntu Phone and the Death Star didn’t work out on Kickstarter but you never know!

A lot of the comments here seem to indicate that FPGAs have a binary nature – that designs either work or they don’t. It is a very software-tainted view, where an FPGA is a lot of tiny look-up tables and single bit registers, connected by wires and programmable switch boxes. It is a very deterministic, almost Newtonian view.

But it just isn’t true. As you look in more detail and get closer to performance limits, what looked like 1s and 0s no longer is so. You have clock delays, routing delays, setup times, hold times, fan-outs, metastability issues, static and dynamic hazards, package delays, buffer delays, deterministic jitter, random jitter, and differences due to voltage, temperature and process. You don’t talk about designs working or not, but about designs exceeding the required mean time between failures.

It is not a nice binary world under the package, and the tools hide all of that from you. The vendor tools are not complex because the vendors can’t write good code; they are complex because the vendors have a very deep understanding of the problems, built up from millions if not billions of dollars of R&D.
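The metastability part of that is usually quantified with the standard synchronizer MTBF estimate, MTBF = e^(t_r/τ) / (T_0 · f_clk · f_data), where t_r is the time the flip-flop is given to resolve, and τ and T_0 are device constants that only the vendor (or careful characterization) can supply. A minimal sketch with made-up constants:

```python
import math


def synchronizer_mtbf_s(t_resolve_s, tau_s, t0_s, f_clk_hz, f_data_hz):
    """Mean time between metastability failures, in seconds.

    MTBF = exp(t_resolve / tau) / (T0 * f_clk * f_data)
    tau and T0 are device-specific; the values used below are illustrative only.
    """
    return math.exp(t_resolve_s / tau_s) / (t0_s * f_clk_hz * f_data_hz)


if __name__ == "__main__":
    f_clk, f_data = 100e6, 10e6   # hypothetical clock and data-toggle rates
    tau, t0 = 50e-12, 1e-9        # hypothetical device constants
    slack = 2e-9                  # resolution time left after a single stage
    one_ff = synchronizer_mtbf_s(slack, tau, t0, f_clk, f_data)
    # A second flip-flop adds roughly one clock period of resolution time.
    two_ff = synchronizer_mtbf_s(slack + 1 / f_clk, tau, t0, f_clk, f_data)
    print(f"one stage: {one_ff:.3g} s, two stages: {two_ff:.3g} s")
```

Because t_r sits in the exponent, a small change in τ (that is, in the silicon) moves the MTBF by orders of magnitude, which is why these numbers have to come from characterized parts.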

Exactly – this is software crossing into the “real world” where screwing up destroys parts and kills people.

The Raspberry Pi/GCC/Open-Source analogies to everything are getting somewhat tiresome. Although I’m sure you could start an FPGA company for $500MM as Tabula burned about $200MM in funding.

Lot of comments about the toolchain and close source software. Guys, we created a startup to make FPGA a user friendly and affordable technology, and to bring it to the masses. We’re working on a modern language + SDK to code applications for FPGAs and to program them. Please have a look https://www.synflow.com/ and let me know what you think of it :-)

I’ve checked you guys out before and your concept is pretty cool. I was not clear on the details of your synflow board availability or price from the website as I haven’t been able to find it on KS. Also, how do the pay cores you list work? Are those priced per project? If you are interested we should talk because we have an optional per unit secure hardware mechanism on the snickerdoodle.io that is designed to enable 3rd party FPGA IP developers like yourself to safely market their cores on a per unit basis.

My sense is that most people still don’t think FPGAs are necessary; in fact, the term is associated with complexity in a four-letter-dirty-word kind of way. Firms that have successfully deployed FPGA technology into wider non-specialist markets often avoid the word “FPGA” altogether, despite the fact that pretty much all digital ASIC technology is prototyped on FPGAs first.

Thanks! The KS campaign is not started yet, we are waiting for some quotes from providers to set the rewards. Hopefully, we will start the campaign in the coming weeks.

Yes it’s priced per project. We’ve always believed that IP cores should be available to all engineers and makers, not only companies so we priced our cores per project and depending on the final customer (e.g. maker, pro, startup).

I too think that FPGAs have a poor reputation, and this is understandable. There is a world of difference between software and hardware tooling: open-source, affordable, user-friendly software and modern languages on one side, versus closed-source, expensive, highly complex software and ageing languages on the other. We can and we should make a difference, but it will take some time :)

Your alternative to VHDL/Verilog is very well-designed and the tool looks great.

Thanks ^^

Silly question, so is the goal of this to provide a full environment for FPGA development including producing the bitstream, or is this a front-end to VHDL/Verilog development which you then use proprietary tools to take from there into a synthesized bitstream?

“[Dave] from Xess thought it was high time for a Raspberry Pi FPGA board.” Are you kidding me? FPGAs for the RPi have been around for years, and Hackaday is pitching this as if it were novel… I suspect this is self-marketing for when they decide to carry this one, among the many FPGA boards for the RPi.

http://bugblat.com/products/pif/index.html

True, but two things:

(1) none of the previous solutions were “open-source” in terms of the synthesis, place and route toolchain

(2) the RPi is never going to be a good platform for an FPGA, because what (non-existent) high-speed interconnect exactly are you going to use to move data between your FPGA and your RPi? That’s pretty much a hobby until the FPGA is at least sitting on something equivalent to a memory bus.

This is probably a broken record, but it is exactly these kind of issues we looked at with snickerdoodle.io in selecting an SoC that integrates the Cortex-A microprocessors and the FPGA because our architecture has the equivalent of about *1000* wires between the microprocessors and FPGA fabric providing a bandwidth between the Cortex-A9 microprocessor and FPGA fabric of around 134 gigabits/second. In fact there is even a 64 bit wide port that can look directly into the processor cache for super hard-core FPGA enabled accelerators.

The writer implies that there are no FPGA boards for the RPi when he makes this statement, which is obviously skewed and incorrect. He makes no mention that this is one of many FPGA boards, one that just happens to let you run the tools on the Linux-ARM architecture. None of that is unique when there are plenty of x86-based boards that can run proven tools with an FPGA attached. https://www.kickstarter.com/projects/802007522/up-intel-x5-z8300-board-in-a-raspberry-pi2-form-fa

I believe that the challenge with the snickerdoodle will be the large learning curve of tools, community and support, all of which are huge factors for the Pi. Though I agree that it is a performance trade-off where the snickerdoodle takes the cake all day long, at a great price point.

Thanks, Enthusiast. And we totally agree – that’s one of the main reasons we started out by giving everyone the ability to choose their I/O / hardware “personality”/configuration without touching Vivado/VHDL/Verilog. All you need is the Eclipse SDK and a mobile device. You can ease into hardware design as you go (or not!). Really, with this architecture, the FPGA should be invisible to the end user unless they don’t want it to be.

I work a bit with the Zynq, and I think it’s very painful to use. The advantage you mention (the logic and CPU being tightly coupled) can become a real hurdle for simple projects, since you’ll spend a lot of time trying to get your peripheral to integrate well with Linux (more time than designing the core logic itself).

One tool that eases development a lot is http://xillybus.com/, as it handles all the Linux side and gives you FIFOs/memory that you can interface your logic with. It limits the performance a bit, but it is very handy for prototyping.
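To illustrate what "handles all the Linux side" means: on the host, Xillybus streams appear as device files, so the software side of a FIFO loopback is ordinary file I/O. The device names below are an assumption based on Xillybus's demo bundle, where a write stream is looped back to a read stream through a FIFO in the FPGA; check them against your own core configuration. This is a sketch, not Xillybus's own sample code.

```python
import os


def fifo_loopback(payload: bytes,
                  write_path: str = "/dev/xillybus_write_8",
                  read_path: str = "/dev/xillybus_read_8") -> bytes:
    """Write payload to one stream and read the same number of bytes back.

    Device paths are assumptions; any pair of file paths works for testing.
    Keep payloads small: a large write could block once the FIFO fills,
    because nothing drains the read side until the write loop finishes.
    """
    received = bytearray()
    with open(write_path, "wb", buffering=0) as w, open(read_path, "rb", buffering=0) as r:
        view = memoryview(payload)
        while view:                 # unbuffered writes may be partial
            written = w.write(view)
            view = view[written:]
        while len(received) < len(payload):
            chunk = r.read(len(payload) - len(received))
            if not chunk:           # EOF: no more data will arrive
                break
            received += chunk
    return bytes(received)


if __name__ == "__main__":
    if os.path.exists("/dev/xillybus_write_8"):
        print(fifo_loopback(b"hello FPGA"))
```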

Jpiat, if you like the idea of Zynq but don’t like ‘experience’ of working with it, you should honestly check this out: http://snickerdoodle.io

We’ve spent two years building something that is low-cost (as in R Pi pricing) and easy to use. If you still end up hating it, absolute worst case you lost $55…but I promise that will not be the case.

I don’t hate the Zynq itself, because it’s a very good SoC performance-wise. I just think it’s a lie to pretend that it’s an easy-to-use tool you can use to build almost anything. SoC + FPGA parts are very complex chips, and creating something with them requires a lot of knowledge. Using plug-and-play modules to hide the complexity is a good approach, but it won’t let users build a custom architecture, since you cannot aggregate all the modules you want into your architecture without going through synthesis (unless you use dynamic partial reconfiguration… which is not free).

This Snickerdoodle board looks very good and I’d like to use it in my day job, but for now I think it’s too complex for the average maker/hacker with no FPGA knowledge. The price point you managed to reach (I still don’t understand how you got it that low) will convince a lot of users to buy it, but if your buyer base is not composed of hard-core FPGA designers and Linux developers, you’ll have a lot of work to do to make it what you advertise.

Jpiat, if you’re going to doubt that we can do what we’re saying we’re going to do/have already done, that’s one thing, but if you’re going to blatantly call me/anyone on my team a “liar” I would like to hear your specific and explicit justification for doing so.

Sorry if you misunderstood me or if my words were too harsh. I’m not calling you a liar at all, and I really think it’s a good thing to have more people trying to make FPGAs easier… but I don’t think that your easy is the average user’s easy.

What if the Raspberry Pi could talk to the FPGA over USB?

There is one particular example at http://www.future-ds.com/en/products.html#CON_FMC, where any FMC-enabled FPGA board can be used with the Raspberry Pi.

Can this FPGA card be used the same way as the Mesa 7C81 card with LinuxCNC, or is it just a toy?