Between Tesla Motors’ automobiles and SpaceX’s rockets, Elon Musk’s engineers just have to be getting something right. SpaceX’s success in landing its first-stage rockets is due in part to analysis of telemetry data. You can see some of the data from its launch vehicles in the live videos, and there is surely a lot more that isn’t shown.

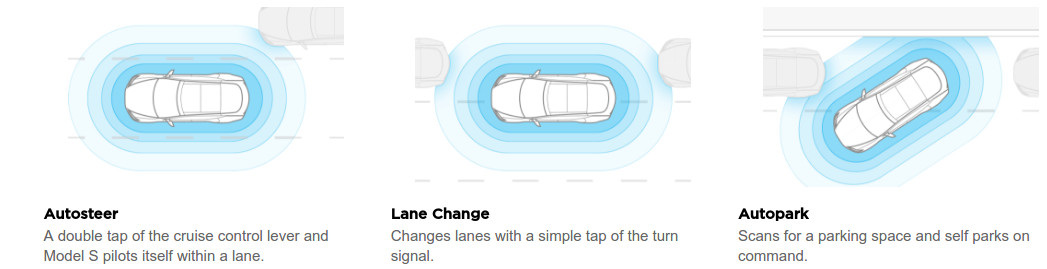

An article in MIT Technology Review offers similar insight into how Tesla came from behind in autonomous vehicle operation by analyzing telemetry from its cars. Since 2014 the Model S has received an increasing number of sensors, all reporting their data over the vehicle’s always-on cellular channel. Sterling Anderson of Tesla reported that they get a million miles of data every 10 hours.

The same approach can help us improve our systems, but many believe that creating a log of key data costs too much time and too many resources. If your system is perfect (HA HA!) that would be a valid assessment. All too often, though, such data becomes priceless when analysis explains why your drone or robot decided to go left into a building instead of right into the open field.

I’ve used logging in both my professional and hobby projects. One system used satellite communications to obtain data from remote pipeline locations. Two notable locations were the side of a mountain in Nevada, which was totally inaccessible in winter, and a swamp in the middle of Louisiana. These remote units, and their counterparts at the clients’ data centers, provided log data about the operation of the communications links to our 24/7 operations center. It wasn’t unusual for our operators to contact a client about a remote site going down before the client’s own operators noticed the problem.

The amount of data to log varies with the system and its stage of development. In a production system the log holds high-level data and operational steps. A system undergoing intensive testing and debugging records extensive detail on operational steps and follows data and calculations step by step through the processing. The level is set by the likelihood of failure and by the data needed to determine why a failure occurred. Some systems allow the level to be adjusted while they are operating; others require recompiling.

The most intensive is usually the debug level, which tracks all the sensor readings, calculations, and outputs in fine detail. The least intensive might capture sensor readings, outputs, and only the results of calculations that directly determine the outputs. There is usually at least one intermediate level, and possibly more.
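The difference between adjusting the level while operating and recompiling can be sketched in C++. This is a minimal illustration under stated assumptions, not the author’s code: the `Level` values, `kCompiledMaxLevel`, `setRuntimeLevel`, and `enabled` are hypothetical names. An entry is kept only if it passes both a build-time ceiling and a run-time threshold.

```cpp
#include <atomic>

// Hypothetical verbosity levels, from least to most verbose.
enum class Level { Log = 0, Trace = 1, Detail = 2 };

// Build-time ceiling: change it and recompile. When the level passed to
// enabled() is a constant, the compiler can usually resolve this test at build time.
constexpr Level kCompiledMaxLevel = Level::Detail;

// Run-time threshold: can be changed while the system is operating,
// for example in response to a command received over a serial link.
inline std::atomic<int> g_runtimeLevel{static_cast<int>(Level::Log)};

inline void setRuntimeLevel(Level lvl) {
    g_runtimeLevel.store(static_cast<int>(lvl));
}

// An entry is recorded only if both the build and the current setting allow it.
inline bool enabled(Level lvl) {
    return lvl <= kCompiledMaxLevel &&
           static_cast<int>(lvl) <= g_runtimeLevel.load();
}
```

Raising the run-time level from a debug command gives you detail without a rebuild; lowering `kCompiledMaxLevel` is the recompile route, useful when even the cost of the check matters.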

But any way you look at it, people who are serious about building and maintaining complex, robust systems include data logging in their design plan. If you aren’t already doing this with every one of your projects, you should be.

How to Organize Data for Journaling (Data Logging)

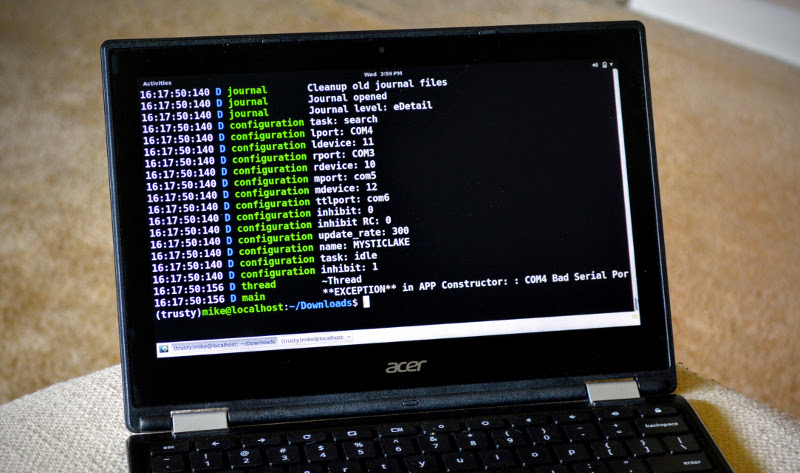

I call my system a journaling system to avoid name conflicts with logarithm functions. My system uses three levels: detail, trace and log. These can be combined with another flag which tracks function calls. Here is a sample of an output file, organized as four columns of data.
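One way to represent three levels plus an independent function-tracking flag is a set of bit flags. The sketch below is an assumption about how such a scheme could work, not the author’s implementation: `JournalFlag`, `designatorFor`, and `wants` are hypothetical, and it assumes each higher level includes everything below it while the function flag is checked on its own.

```cpp
#include <cstdint>

// Hypothetical bit flags: three verbosity levels plus an independent
// function-call flag. A setting is any OR-ed combination of them.
enum JournalFlag : std::uint8_t {
    kLog      = 0x01,  // high-level data and operational steps
    kTrace    = 0x02,  // adds intermediate steps
    kDetail   = 0x04,  // everything: sensor readings, calculations, outputs
    kFunction = 0x08,  // independent: record function calls
};

// Letter written in the second column for each kind of entry.
inline char designatorFor(JournalFlag kind) {
    switch (kind) {
        case kDetail:   return 'D';
        case kTrace:    return 'T';
        case kLog:      return 'L';
        case kFunction: return 'F';
    }
    return '?';
}

// Should an entry of kind `entry` be recorded under `setting`?
// Levels are cumulative; the function flag is tested separately.
inline bool wants(std::uint8_t setting, JournalFlag entry) {
    if (entry == kFunction) return (setting & kFunction) != 0;
    unsigned included = 0;
    if (setting & kDetail)      included = kDetail | kTrace | kLog;
    else if (setting & kTrace)  included = kTrace | kLog;
    else if (setting & kLog)    included = kLog;
    return (included & entry) != 0;
}
```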

    16:17:50:125 D journal Journal srr091213.journal opened
    16:17:50:140 D journal Cleanup old journal files
    16:17:50:140 D journal Journal opened
    16:17:50:140 D journal Journal level: eDetail
    16:17:50:140 D configuration task: search
    16:17:50:140 D configuration lport: COM4
    16:17:50:140 D configuration ldevice: 11
    16:17:50:140 D configuration rport: COM3
    16:17:50:140 D configuration rdevice: 10
    16:17:50:140 D configuration mport: com5
    16:17:50:140 D configuration mdevice: 12
    16:17:50:140 D configuration ttlport: com6
    16:17:50:140 D configuration inhibit: 0
    16:17:50:140 D configuration inhibit RC: 0
    16:17:50:140 D configuration update_rate: 300
    16:17:50:140 D configuration name: MYSTICLAKE
    16:17:50:140 D configuration task: idle
    16:17:50:140 D configuration inhibit: 1
    16:17:50:156 D thread ~Thread
    16:17:50:156 D main **EXCEPTION** in APP Constructor: : COM4 Bad Serial Port Handle

The first column in each line is the time of day to the millisecond. Next comes a designator letter indicating which type of logging is active, followed by the name of the C++ file that is writing to the log. Finally comes the data itself, which can vary greatly from task to task.
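A sketch of writing one entry in this four-column layout, assuming a 14-character file-name column (wide enough for `configuration`; the real width isn’t given). The `%-14.14s` conversion pads shorter names and truncates longer ones, so the first three columns always have the same width. `formatEntry` and `formatNow` are hypothetical names.

```cpp
#include <chrono>
#include <cstdio>
#include <ctime>
#include <string>

// Build "HH:MM:SS:mmm X file           data" with fixed-width first three columns.
// The 14-character file column is an assumption.
inline std::string formatEntry(int h, int m, int s, int ms, char designator,
                               const std::string& file, const std::string& data) {
    char prefix[64];
    std::snprintf(prefix, sizeof prefix, "%02d:%02d:%02d:%03d %c %-14.14s ",
                  h, m, s, ms, designator, file.c_str());
    return prefix + data;
}

// Same, stamped with the current local time of day to the millisecond.
inline std::string formatNow(char designator, const std::string& file,
                             const std::string& data) {
    const auto now = std::chrono::system_clock::now();
    const auto secs = std::chrono::floor<std::chrono::seconds>(now);
    const auto ms = std::chrono::duration_cast<std::chrono::milliseconds>(now - secs).count();
    const std::time_t t = std::chrono::system_clock::to_time_t(secs);
    const std::tm local = *std::localtime(&t);  // std::localtime is not thread-safe
    return formatEntry(local.tm_hour, local.tm_min, local.tm_sec,
                       static_cast<int>(ms), designator, file, data);
}
```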

Recording the time precisely is important for a few reasons. Obviously it tells us the order in which events occurred and allows the logs to be sorted on it. But it also enables other correlations. My robots save video of their operation with embedded time stamps, which can be matched with the time stamp at the start of each log entry.

The designator letter, here a ‘D’, indicates the output is from a detailed trace. It could also be ‘T’, ‘L’, or ‘F’ for trace, log, or function, showing the level of detail the logs are recording. When things are not going well you want as much data as possible for troubleshooting, so my logs clearly indicate this verbosity setting.

The first three fields are fixed length, which allows me to load them into a spreadsheet. There I can sort on any of these fields and still return the file to its original order by sorting on the time stamp.
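The same sort-and-restore trick can be done in code. This sketch (hypothetical `Entry`, `parseEntry`, `sortByFile`, `restoreOrder`) splits each line on whitespace into the four columns. Because the time stamp is zero-padded, comparing it as a string gives chronological order within a single day. Many entries in the sample share the same millisecond, so sorting on the time stamp alone may not restore their original order; the sketch therefore adds the original line position as a tie-breaker, which is not part of the journal format.

```cpp
#include <algorithm>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// One journal line split into its four columns (hypothetical type).
struct Entry {
    std::size_t seq = 0;   // original line position: tie-breaker, not in the file
    std::string time;      // "HH:MM:SS:mmm", zero-padded
    char designator = ' ';
    std::string file;
    std::string data;
};

inline Entry parseEntry(std::size_t seq, const std::string& line) {
    Entry e;
    e.seq = seq;
    std::istringstream in(line);
    in >> e.time >> e.designator >> e.file;
    std::getline(in >> std::ws, e.data);  // rest of the line, spaces included
    return e;
}

// Group entries by source file; stable_sort keeps each file's entries in order.
inline void sortByFile(std::vector<Entry>& v) {
    std::stable_sort(v.begin(), v.end(),
                     [](const Entry& a, const Entry& b) { return a.file < b.file; });
}

// Put entries back in journal order: time stamp first, then original position.
inline void restoreOrder(std::vector<Entry>& v) {
    std::sort(v.begin(), v.end(), [](const Entry& a, const Entry& b) {
        return a.time != b.time ? a.time < b.time : a.seq < b.seq;
    });
}
```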

Reading Through This Example

The first few entries in this file are simply the startup of the journal system. The first line names the journal file, srr091213.journal, which encodes the date. It’s a bit old: 12 September 2013. The journal then reports the cleanup of old journal files. This matters because large journal files can fill the file system if your resources are limited. I used a PC with a solid-state drive, but I still cleaned out old files automatically because they quickly became outdated as the code changed.

Once the journal was running, configuration of the system began. This read an INI file listing the resources to use: a number of control boards connected to serial ports. Each board had an identification number so that multiple boards could share a port. The journal reported the port and the identification number to use for each controller. For instance, the left motor port, lport, is on COM4 with the identifier 11.

After the configuration, the main processing would normally begin, but in this case it didn’t because a serial port was not connected. The attempt to open it failed and threw an exception, which the last line of the journal records.

Using the Journaled Data

At one point I had a problem where the robot would move, stop for an unknown reason, and then start moving again. The journal helped me find the answer. The key line was:

16:17:53:172 D chassis kill 0 loV 1 err 121 lim 1 temp 281 targ 0 rc1 0 rc2 0

This line reported the data read from a motor control board. The file name is chassis because this section of code handled chassis control. As you might guess, the problem was that the input voltage was dropping below the limit I’d set, as indicated by ‘loV’ being 1. You can also see that the temperature was 28.1 °C (the value 281 is in tenths of a degree) and that the radio control inputs were not active.
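Once a journal gets long, scanning for a flag like `loV` by eye gets tedious. The sketch below, with hypothetical helper names, splits the `name value` pairs of a chassis entry and returns the time stamps of entries reporting low voltage. It takes the field meanings from the text (`loV` of 1 means the input voltage is below the limit, `temp` is in tenths of a degree) and assumes every field value is an integer.

```cpp
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Split a "name value name value ..." payload into a map (integer values assumed).
inline std::map<std::string, long> parseStatus(const std::string& payload) {
    std::map<std::string, long> fields;
    std::istringstream in(payload);
    std::string name;
    long value = 0;
    while (in >> name >> value) fields[name] = value;
    return fields;
}

// loV = 1 means the input voltage dropped below the configured limit.
inline bool lowVoltage(const std::map<std::string, long>& f) {
    const auto it = f.find("loV");
    return it != f.end() && it->second != 0;
}

// temp is reported in tenths of a degree C (281 -> 28.1).
inline double temperatureC(const std::map<std::string, long>& f) {
    return f.at("temp") / 10.0;
}

// Time stamps of chassis entries that report low voltage.
inline std::vector<std::string> lowVoltageTimes(const std::vector<std::string>& lines) {
    std::vector<std::string> times;
    for (const auto& line : lines) {
        std::istringstream in(line);
        std::string time, file, payload;
        char designator = 0;
        if (!(in >> time >> designator >> file) || file != "chassis") continue;
        std::getline(in >> std::ws, payload);
        if (lowVoltage(parseStatus(payload))) times.push_back(time);
    }
    return times;
}
```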

This shows the utility of journaling, or logging. Without this log it would have been difficult to determine why the robot was stopping. As I recall, the robot was attempting a tight turn, which stalled the motors, increased the current draw from the battery, and dropped the battery voltage.

Using a PC, I had plenty of room for logs, and these days the ubiquity of single-board computers (often with huge SD cards) keeps journaling an easy option. On an Arduino Uno you don’t have much space, but you could send the log over a wireless channel to be recorded on a smartphone or laptop. On a slightly larger system you could keep the log in memory using a circular buffer. The trick there is keeping the unit powered on until you can read out the buffered data.
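A minimal fixed-capacity circular buffer: once full, each new entry overwrites the oldest, so memory use stays bounded and the most recent history survives for a dump after a fault. This sketch uses `std::string` for brevity; on a small microcontroller you would more likely store fixed-size `char` records to avoid heap allocation. `JournalRing` is a hypothetical name.

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Holds the last N journal entries; older ones are overwritten.
template <std::size_t N>
class JournalRing {
public:
    void push(std::string entry) {
        buf_[head_] = std::move(entry);
        head_ = (head_ + 1) % N;       // next slot to overwrite
        if (count_ < N) ++count_;
    }

    // Entries from oldest to newest, e.g. for reading out after a fault.
    std::vector<std::string> snapshot() const {
        std::vector<std::string> out;
        out.reserve(count_);
        const std::size_t start = (head_ + N - count_) % N;
        for (std::size_t i = 0; i < count_; ++i) out.push_back(buf_[(start + i) % N]);
        return out;
    }

    std::size_t size() const { return count_; }

private:
    std::array<std::string, N> buf_{};
    std::size_t head_ = 0;
    std::size_t count_ = 0;
};
```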

You can also add a board specifically for logging. The Adafruit Data Logging Shield for Arduino writes to its SD card over SPI (its real-time clock uses I2C), while the SparkFun OpenLog accepts data over a serial connection. Since these write to SD cards, the transfer speed may limit how much data you can log. If you run into that limit, you might transfer binary data rather than text. A program on your PC or another board could translate the binary back to text.
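A sketch of the binary-record idea: a hypothetical 9-byte record carrying a subset of the chassis fields, encoded byte by byte in little-endian order so the layout doesn’t depend on struct padding or the host’s byte order, plus a PC-side decoder that turns it back into text. The record layout, field widths, and flag bits are all assumptions. For the chassis reading shown earlier, the decoded text is about five times longer than the record.

```cpp
#include <array>
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical compact record for one chassis reading.
struct ChassisRecord {
    std::uint32_t timeMs;     // milliseconds since midnight
    std::uint16_t err;
    std::int16_t tempTenths;  // tenths of a degree C
    std::uint8_t flags;       // bit 0 = kill, bit 1 = loV, bit 2 = lim
};

using RecordBytes = std::array<std::uint8_t, 9>;

// Encode little-endian, one byte at a time.
inline RecordBytes encode(const ChassisRecord& r) {
    RecordBytes b{};
    for (int i = 0; i < 4; ++i) b[i] = static_cast<std::uint8_t>(r.timeMs >> (8 * i));
    b[4] = static_cast<std::uint8_t>(r.err);
    b[5] = static_cast<std::uint8_t>(r.err >> 8);
    const auto t = static_cast<std::uint16_t>(r.tempTenths);
    b[6] = static_cast<std::uint8_t>(t);
    b[7] = static_cast<std::uint8_t>(t >> 8);
    b[8] = r.flags;
    return b;
}

// PC-side translation back to readable text.
inline std::string decode(const RecordBytes& b) {
    std::uint32_t ms = 0;
    for (int i = 0; i < 4; ++i) ms |= static_cast<std::uint32_t>(b[i]) << (8 * i);
    const unsigned err = static_cast<unsigned>(b[4] | (b[5] << 8));
    const int temp = static_cast<std::int16_t>(static_cast<std::uint16_t>(b[6] | (b[7] << 8)));
    const unsigned flags = b[8];
    char text[96];
    std::snprintf(text, sizeof text, "%02u:%02u:%02u:%03u kill %u loV %u err %u lim %u temp %d",
                  static_cast<unsigned>(ms / 3600000u), static_cast<unsigned>(ms / 60000u % 60u),
                  static_cast<unsigned>(ms / 1000u % 60u), static_cast<unsigned>(ms % 1000u),
                  flags & 1u, (flags >> 1) & 1u, err, (flags >> 2) & 1u, temp);
    return text;
}
```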

Logging data is a useful tool for projects of some complexity. It’s worth the time to develop utility routines to help with recording the data regardless of the storage media. The data you save might be the data that saves the day.

“Elton Musk’s” – really? ;-)

That’s Elon’s older brother. Ha!

Fixed, thanks.

Didn’t Elton Musk do a song called “Rocketman” ?

Also “Bennie and the Jets”.

Does he wear sequins and look “fabulous” at award shows as well?

I really want Elon Musk to dress up as “Elton Musk” for halloween.

A couple of things I learnt

– Privacy/minimalism: those data are potentially privacy-relevant. Think from the beginning about ways of disposing of them, or of making them available only to those who should have access to them. Later it can be painful.

– Timestamps: never, ever use local time (or, if you must, include the relevant time zone offset, *not* a symbolic time zone name, in the log). Sometimes I’m lazy and use the Unix epoch (number of seconds since the start of 1970).

– Sometimes it makes sense to have a two-dimensional tag: submodule and level. The Unix log system is a good inspiration, btw.
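The timestamp advice in this comment can be sketched in C++: `utcStamp` formats a time as ISO 8601 UTC with milliseconds (the trailing `Z` marks UTC, so there is no offset or zone name to get wrong), and `unixSeconds` is the lazy epoch alternative. Both names are hypothetical. `std::gmtime` is not thread-safe, and the code assumes `system_clock` counts from the Unix epoch, which C++20 guarantees and common platforms already did.

```cpp
#include <chrono>
#include <cstdio>
#include <ctime>
#include <string>

// ISO 8601 UTC with milliseconds, e.g. "2001-09-09T01:46:40.125Z".
inline std::string utcStamp(std::chrono::system_clock::time_point tp) {
    const auto secs = std::chrono::floor<std::chrono::seconds>(tp);
    const auto ms = std::chrono::duration_cast<std::chrono::milliseconds>(tp - secs).count();
    const std::time_t t = std::chrono::system_clock::to_time_t(secs);
    const std::tm utc = *std::gmtime(&t);  // std::gmtime is not thread-safe
    char buf[32];
    std::snprintf(buf, sizeof buf, "%04d-%02d-%02dT%02d:%02d:%02d.%03dZ",
                  utc.tm_year + 1900, utc.tm_mon + 1, utc.tm_mday,
                  utc.tm_hour, utc.tm_min, utc.tm_sec, static_cast<int>(ms));
    return buf;
}

// The lazy alternative: whole seconds since the start of 1970 (UTC).
inline long long unixSeconds(std::chrono::system_clock::time_point tp) {
    return std::chrono::duration_cast<std::chrono::seconds>(tp.time_since_epoch()).count();
}
```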

I bet most people didn’t know their cars were sending data about their position and all their sensors to central servers 24/7. But then again, even our internet browsers send data in the name of “service improvement”.

My 1993 Geo Metro doesn’t, LOL! It still uses OBD-1 with 8192 baud.

I like my older cars and truck. At least I can fix a carburetor without having to download a PDF!

I prefer downloading a PDF (you can usually find it for free) to buying a printed service manual.

In the ’90s I was charged with two felonies for “data logging”… In the twenty-teens I guess it’s the “IoT way”.

I’d love to hear more!

I know this is way overkill for most, but anyone who’s interested in a system to parse structured and unstructured data should check out Splunk. I’ve been using their free product at home for ADSB logs, temperature/humidity from various sources, bandwidth usage on the network, etc. Its ability to parse JSON, CSV, MySQL, and human-readable logs has been a lifesaver.

+1 for Splunk.

Normally I would agree, but it costs a bunch if you mis-estimate the amount of data you collect/parse in a commercial environment.

Sorry, are you referring to their pricing model? If so, that’s why I was suggesting the free version. Totally agree that it gets really expensive really quickly, but if you want to play with logging at home or on the bench it’s a pretty good solution. I think the daily logging limit is something like 500 MB, which is way more than most need to hack around with. I import a ton of data sources at (relatively) high frequencies and I’ve never hit the limit for the free version.

I’ve worked as the IT guy at a medical chem lab with lots of automated test equipment, and we did an extraordinary amount of data logging of literally everything that came in or went out, because we had to be able to prove it wasn’t our fault if someone got an incorrect result or the wrong type of blood transfusion. The old paper trail was replaced by a digital trail.

And, if you want a simple way to dump data to RRD graphs, check out Munin, http://www.munin-monitoring.org/

Nice tool, heaps of plug-ins. Seems to cover pretty much anything you’d want to keep an eye on and has several language options for writing your own.

> Why Aren’t You?

Microsoft is logging it all for me

You make me think: it’s really odd that while we all know MS logs the hell out of everything, and sends more and more of it home, they still have idiotic bugs galore that have been there for more than a decade, the kind that make you wonder ‘doesn’t anybody at MS use Windows and make notes?’

That’s what you get if you log not for improving your product but ‘other’ reasons.

It’s not unique to MS though.

Is it possible that they use Linux and Macs, but run Windows in an emulator? They really don’t seem to want Win10 (or 8, in my case) to be compatible with older printers and scanners.

Not cool, Micro$oft.

“the vehicle’s always-on cellular channel.”

I guess we can stop dreaming about getting a Tesla then. As with 90% of modern inventions that seem so nice and neat – on the surface of it.

Always on cellular in a vehicle is just so scary…I’ll keep my dumb gas chugger for now until the world of vehicle network security beefs up a bit (or at least I learn enough to protect myself). Not excited one bit about what exploits might be floating out there…especially with the drive by wire designs that seem to be getting so popular.

Just buy a wrecked Tesla and read back a couple of days. Rolling our own EVs is readily possible, and you can cut all that big brother monitoring crap out of the equation.

It’s definitely on the big to-do list – but I certainly don’t have the personal capital floating around :-D

Love to do that, but I’d still need someone to pay for the EV carcass, no way I could afford that :D

“If you aren’t already doing this with every one of your projects (comma) you should be.”

No, you really shouldn’t. Every tool has its application. This tool is overkill for 99% of projects on HackADay.

That’s just for people who can’t deduce that if the motor isn’t running, its drive transistor must be baked.

Except when it’s one of the 50 other things in the chain of events from initial go-command to reading sensors to analyzing the current status of the device to generating a drive output. If your project is more complex than a blinky LED then assuming it’s the drive transistor will just lead you round in circles.

List of the most recent electronic projects on Hackaday:

- Hack an LED array into an Ikea projector
- Telepresence system (WiFi controlled motorized cart)
- Large VU meter
- Webcam in the fridge
- Marble run with sound effects
- Audio sync with MP3 player
- LED matrix used for audio visualizations
- WiFi e-ink display hacked to display weather
- Four button keyboard
- Tiny Tiny RGB LED matrix
- LEDs on a geodesic sphere
- Using a WS2811 to drive incandescent bulbs
- Single instruction computer
- Automatic digital microscope
- Space rated GNU for SDR
- Fixing a broken CCFL
- Zero part count temp sensor
- Hacking a hover board for parts
- Fixing broken consumer electronics
- DIY pen plotter

So, they all should be doing data logging, eh? I don’t see even one that would benefit.

I’ve started data-logging my data-logging of my logged data.

It’s getting a bit out of hand!

We are more concerned with what is still in your hand, and what you are doing with it.

+1

*giggle*