Usually, when you think of driving a VGA display (in software or hardware), you think of using a frame buffer. The frame buffer is usually dual-port RAM. One hardware or software process fills in the RAM, and another process pulls the data out at the right rate and sends it to the VGA display (usually through a digital-to-analog converter).
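To make the two-process picture concrete, here is a minimal software model of the read side of a frame buffer, assuming the common 640x480 at 60 Hz VGA timing (800 clocks per line, 525 lines per frame, so 800 × 525 × 59.94 Hz gives the nominal 25.175 MHz pixel clock). The 8-bit pixel format, the gradient fill, and the names `scan_positions` and `scan_out` are illustrative assumptions, not anything taken from the project.

```python
# Hypothetical software model of frame-buffer scan-out for 640x480 @ 60 Hz VGA.
# Timing numbers are the standard 640x480 values; the 8-bit pixel format and
# the function names are illustrative assumptions.

H_VISIBLE, H_FRONT, H_SYNC, H_BACK = 640, 16, 96, 48
V_VISIBLE, V_FRONT, V_SYNC, V_BACK = 480, 10, 2, 33
H_TOTAL = H_VISIBLE + H_FRONT + H_SYNC + H_BACK  # 800 pixel clocks per line
V_TOTAL = V_VISIBLE + V_FRONT + V_SYNC + V_BACK  # 525 lines per frame


def scan_positions():
    """Yield (x, y, visible, hsync, vsync) once per pixel clock for one frame."""
    for y in range(V_TOTAL):
        for x in range(H_TOTAL):
            visible = x < H_VISIBLE and y < V_VISIBLE
            # Sync pulses sit between the front and back porches
            # (both are negative polarity on the wire for this mode).
            hsync = H_VISIBLE + H_FRONT <= x < H_VISIBLE + H_FRONT + H_SYNC
            vsync = V_VISIBLE + V_FRONT <= y < V_VISIBLE + V_FRONT + V_SYNC
            yield x, y, visible, hsync, vsync


def scan_out(frame_buffer):
    """Read-side process: pull one pixel per clock from the buffer, black during blanking."""
    for x, y, visible, _hsync, _vsync in scan_positions():
        yield frame_buffer[y * H_VISIBLE + x] if visible else 0


# Write-side process: fill the buffer (here, a simple horizontal gradient).
frame_buffer = bytearray(H_VISIBLE * V_VISIBLE)  # 307,200 bytes at 8 bits per pixel
for y in range(V_VISIBLE):
    for x in range(H_VISIBLE):
        frame_buffer[y * H_VISIBLE + x] = x % 256
```

The cost of this approach is visible in the buffer size: even at 8 bits per pixel, one 640x480 frame needs about 300 KB of RAM that both processes must share.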

[Connor Archard] and [Noah Levy] wanted to do some music processing with a DE2-115 FPGA board. To drive the VGA display, they took a novel approach. Instead of a frame buffer, they use the FPGA to compute each pixel’s data in real-time.
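The core idea of skipping the frame buffer can be sketched as a function that decides each pixel's colour from a compact scene description at the moment the scan reaches it. The sketch below assumes a scene made of a few solid rectangles and the same 640x480 timing as above; the names `Rect`, `pixel_color`, and `race_the_beam` are hypothetical and do not describe the project's actual HDL.

```python
# Hypothetical model of "compute each pixel on the fly": no frame buffer is stored.
# The scene is a short list of rectangles; the colour of pixel (x, y) is worked out
# when the scan reaches it. Names and the rectangle scene are illustrative.
from dataclasses import dataclass

H_VISIBLE, H_TOTAL = 640, 800  # 640x480 @ 60 Hz: visible pixels / total clocks per line
V_VISIBLE, V_TOTAL = 480, 525  # visible lines / total lines per frame


@dataclass
class Rect:
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive
    color: int


def pixel_color(x, y, scene, background=0):
    """Combinational 'shader': return the colour for one pixel from the scene alone."""
    for r in scene:
        if r.x0 <= x < r.x1 and r.y0 <= y < r.y1:
            return r.color  # first matching rectangle wins
    return background


def race_the_beam(scene):
    """Yield one colour per pixel clock, generated in scan order; blanking outputs 0."""
    for y in range(V_TOTAL):
        for x in range(H_TOTAL):
            if x < H_VISIBLE and y < V_VISIBLE:
                yield pixel_color(x, y, scene)
            else:
                yield 0


scene = [Rect(100, 100, 200, 150, color=7), Rect(300, 200, 340, 400, color=3)]
```

The only state kept between frames is the scene list itself, a few integers per object, instead of one entry per pixel. The trade-off is that `pixel_color` must finish within one pixel clock, which is why this style suits parallel hardware such as an FPGA.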

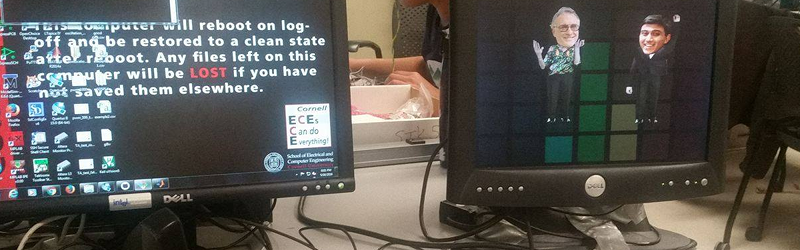

We aren’t sure why, but the project is titled “Bruce in the club,” although we suspect it has something to do with [Bruce Land]. Audio enters the DE2-115 through a codec, is filtered, and is then passed to the graphics engine. Four dancers on the screen move to the music. The lowest detected frequency represents the beat of the song, which lets the dancing stay in sync with the music. The FPGA can track up to 64 objects on the screen at a time, including their depth.
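The post does not say how depth is handled, but one plausible scheme for a buffer-less renderer is to test every object against the current pixel and keep the nearest one. The sketch below assumes each object is an axis-aligned box with an integer depth (smaller means nearer); `Sprite`, `resolve_pixel`, and the example objects are hypothetical.

```python
# Hypothetical per-pixel depth resolution for up to 64 on-screen objects.
# Assumes each object is an axis-aligned box with a depth value (smaller = nearer);
# this is an illustration, not the project's documented design.
from dataclasses import dataclass
from typing import List, Optional

MAX_OBJECTS = 64


@dataclass
class Sprite:
    x: int
    y: int
    w: int
    h: int
    depth: int
    color: int


def resolve_pixel(x: int, y: int, objects: List[Sprite]) -> Optional[Sprite]:
    """Return the nearest object covering (x, y), or None for background.

    In hardware every object could be tested in parallel each pixel clock, with
    a comparator tree picking the minimum depth; here it is a plain loop.
    """
    if len(objects) > MAX_OBJECTS:
        raise ValueError(f"at most {MAX_OBJECTS} objects are supported")
    nearest = None
    for obj in objects:
        covers = obj.x <= x < obj.x + obj.w and obj.y <= y < obj.y + obj.h
        if covers and (nearest is None or obj.depth < nearest.depth):
            nearest = obj
    return nearest


dancer = Sprite(x=10, y=10, w=20, h=40, depth=5, color=2)
speaker = Sprite(x=20, y=30, w=30, h=30, depth=1, color=9)  # nearer, so drawn on top
```

Where the two boxes overlap, `resolve_pixel` returns `speaker` because its depth is smaller. A fixed object limit such as 64 keeps the number of parallel comparators bounded.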

[Connor] and [Noah] describe the system in detail, and you can also see a video demonstration below. There’s a lot of good stuff coming out of [Bruce]’s FPGA class at Cornell, including this ultra-fast FPGA audio visualization and this all-FPGA VGA finger-tracking game.

That actually is a very common technique; almost all graphics chipsets from the time before frame buffers were viable used it, and any design that uses, e.g., hardware sprites, tiles, or display lists still does.

I agree, the ambiguity is in the meaning of frame *buffer*.

You use a buffer when you are translating between two different dot clock standards, such as VGA to HDMI.

You also use a buffer in computers, but that is to save the CPU the effort of redrawing everything, every frame.

But outside these uses, it doesn’t make sense to use a buffer.

Still a cool example of nuts and bolts HDL though.

I also disagree with the notion that this is a novel idea. The Atari 2600 is a notable example of an early gaming system that generated each pixel as it was clocked out. Also look at some of the online talks given by Jeri Ellsworth, where she demonstrates this in an FPGA demo.

Also isn’t the idea of a sprite engine still based on a frame buffer concept? The pixels being mixed are from an unchanging RAM based buffer repeatedly accessed vsync to vsync. The sprites are not a full frame. But conceptually if you made each of the sprites the full resolution of the output, you would have multiple composited frame buffers!
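The commenter's point can be modeled directly: a sprite is a small bitmap in RAM that is re-read every frame at an offset from the beam position, and a sprite as large as the screen behaves like another frame buffer layer. This sketch assumes colour 0 means transparent and that layers are listed front to back; `sprite_pixel`, `composite`, and the example bitmaps are hypothetical.

```python
# Hypothetical sprite-layer compositing: each sprite is a small RAM bitmap re-read
# every frame at an offset; a sprite as large as the screen is just another frame
# buffer layer. Colour 0 is assumed to mean "transparent".
from typing import List, Sequence, Tuple

TRANSPARENT = 0

Sprite = Tuple[int, int, Sequence[Sequence[int]]]  # (left, top, rows of colour indices)


def sprite_pixel(x: int, y: int, sprite: Sprite) -> int:
    """Read the sprite's RAM at the beam's offset, or TRANSPARENT if outside it."""
    left, top, bitmap = sprite
    row, col = y - top, x - left
    if 0 <= row < len(bitmap) and 0 <= col < len(bitmap[0]):
        return bitmap[row][col]
    return TRANSPARENT


def composite(x: int, y: int, layers: List[Sprite], background: int = 0) -> int:
    """Front-to-back priority: the first layer with a non-transparent pixel wins."""
    for sprite in layers:
        color = sprite_pixel(x, y, sprite)
        if color != TRANSPARENT:
            return color
    return background


ball = (5, 5, [[0, 3, 0], [3, 3, 3], [0, 3, 0]])   # 3x3 sprite placed at (5, 5)
backdrop = (0, 0, [[1] * 16 for _ in range(12)])  # "full-screen" 16x12 layer
```

At (6, 5) the ball's opaque pixel wins; at (5, 5) the ball's transparent corner lets the backdrop show through, which is exactly the "multiple composited frame buffers" case when a layer covers the whole screen.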

lol, it even predates the digital era!

Very early Pong-like games were analog. The bats were generated by comparing a potentiometer voltage against the line/field sweep with a window comparator, and by using timing for the horizontal position, etc.
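Here is a rough digital model of that analog trick. It assumes the vertical position is represented by a voltage ramp that rises linearly over the field, that the comparator window is centred on the potentiometer voltage, and that the bat's column is a fixed slot within each line. The line count, voltages, and function names are illustrative assumptions, not values from any particular game.

```python
# Hypothetical model of an analog Pong bat: a window comparator on the vertical
# sweep plus a fixed horizontal timing window. Voltages and widths are illustrative.

LINES = 262            # assumed lines per field (NTSC-style, non-interlaced)
V_RAMP_MAX = 5.0       # assumed sweep ramp: 0 V at the top line, 5 V at the bottom
BAT_HALF_HEIGHT = 0.3  # half-width of the comparator window, in volts


def window_comparator(v_in, v_center, half_width):
    """True while v_in lies inside [v_center - half_width, v_center + half_width]."""
    return v_center - half_width <= v_in <= v_center + half_width


def bat_visible(line, t_in_line, v_pot, bat_start=0.10, bat_end=0.12):
    """Bat is lit when the vertical ramp is inside the pot-set window and the
    horizontal timing (as a fraction of the line) falls in the bat's slot."""
    v_ramp = V_RAMP_MAX * line / (LINES - 1)
    vertical_ok = window_comparator(v_ramp, v_pot, BAT_HALF_HEIGHT)
    horizontal_ok = bat_start <= t_in_line < bat_end
    return vertical_ok and horizontal_ok
```

Turning the potentiometer only shifts `v_pot`, which slides the comparator window, and therefore the bat, up or down the screen; no memory is involved at all.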

Yeah, we see this a lot here on Hackaday too: basically in every computer or microcontroller project that is too memory-starved to hold a full frame buffer.

Doing it at full-frame video rates in an FPGA is pretty fun, though.

Yeah, it’s known as ‘racing the beam’. It was common in the seventies, when memory was expensive and frame buffers were therefore small or nonexistent.

It’s such a well-known, if long-obsolete, technique that I can’t help thinking the adjective ‘novel’ in the article was just clickbait.

https://youtu.be/RYJPcRr-yeI?list=PLzdPghmYexriGUsJQ83yOziJBiYHRooXf&t=53

Watch that to actually see the project in action.

HEY, IT’S NEW TO THEM, GUYS

As long as they don’t think they hit some golden age of FPGA graphics which they can patent and get rich from, let them think whatever they want.