[adam] is a caver, meaning that he likes to explore caves and map their inner structure. This is still commonly done using traditional tools, such as notebooks (the paper ones), tape measures, compasses, and inclinometers. [adam] wanted to upgrade his equipment, but found that industrial LiDAR 3D scanners are quite expensive. His Hackaday Prize entry, the Open LIDAR, is an affordable alternative to the expensive industrial 3D scanning solutions out there.

![The 3D scan of a small cave near Louisville (source: [caver.adam's] Sketchfab repository)](https://hackaday.com/wp-content/uploads/2016/06/bildschirmfoto-2016-06-27-um-20-02-11.png?w=250)

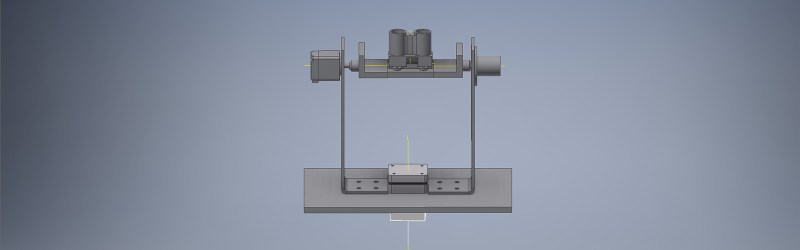

The gimbal he designed for this task uses stepper motors to aim an SF30-B laser rangefinder. An Arduino controls the movement, sweeping the sensor's beam across an object or an entire environment. By sampling the distance readings returned by the sensor together with the gimbal's pan and tilt angles, a point cloud is created, which can then be converted into a 3D model. [adam] plans to drive the stepper motors in microstepping mode to increase the resolution of his scanner. We’re looking forward to seeing the first renderings of 3D cave maps captured with the Open LIDAR.
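As a rough illustration of how a pan/tilt gimbal turns range readings into a point cloud, here is a minimal Python sketch. It assumes (our assumption, not taken from the Open LIDAR firmware) that pan rotates about the vertical axis, tilt is the elevation above horizontal, and the rangefinder sits exactly where both axes intersect; a real gimbal would also need corrections for the sensor's mounting offsets. The function names are hypothetical.

```python
import math


def gimbal_to_xyz(pan_deg, tilt_deg, distance_m):
    """Convert one (pan, tilt, range) reading into an (x, y, z) point.

    Assumed convention (hypothetical): pan rotates about the vertical Z axis,
    tilt is elevation above the horizontal plane, and the rangefinder sits at
    the origin where both gimbal axes intersect.
    """
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    horizontal = distance_m * math.cos(tilt)  # beam length projected onto the XY plane
    return (horizontal * math.cos(pan),
            horizontal * math.sin(pan),
            distance_m * math.sin(tilt))


def readings_to_point_cloud(readings):
    """Turn an iterable of (pan_deg, tilt_deg, distance_m) readings into a list of points."""
    return [gimbal_to_xyz(pan, tilt, dist) for pan, tilt, dist in readings]


# Three example readings: straight ahead, panned 90 degrees, and straight up
cloud = readings_to_point_cloud([(0, 0, 2.0), (90, 0, 2.0), (0, 90, 1.5)])
for x, y, z in cloud:
    print(f"{x:.3f} {y:.3f} {z:.3f}")
```

Printing each point as a whitespace-separated `x y z` line, as above, gives a plain-text format that many point-cloud viewers can import.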

For those looking for something with a shorter range but a much higher frame rate, the Kinect 2 is a ready-made LIDAR imaging unit. It might even be possible to increase its range by replacing the emitter with a more powerful one.

I did quite a bit of reverse engineering on the Kinect 2. It uses a custom laser driver for the emitters, which also use custom lenses. There is no datasheet available for the driver; worse, it is confidential. I tried to measure all signals going to and from the driver, but the Kinect stopped working as soon as a single wire was connected to the IC. Removing the wires made it functional again. The lasers also seem to be extremely powerful and matched to the laser driver, so I seriously doubt that a replacement exists. Apart from this, I guess the camera is tuned to a certain distance range, and signals that travel farther will surely be ignored. Finally, the Kinect 2 is not a LIDAR but a ToF sensor. I guess one could do this more easily with the Kinect 360.

LIDAR is normally a “ToF” (time of flight) measurement. But I know the “Kinect” as a structured-light measurement: the laser projects a dot or raster pattern, which is used to gather distance information. I was not aware that more than one “Kinect” exists, so it is possible that different methods are used.
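The two principles mentioned here reduce to simple formulas. The Python sketch below shows pulsed time of flight (distance is half the round-trip path at the speed of light) and a generic projector/camera triangulation of the kind structured-light sensors build on. The focal length, baseline, and disparity values are made-up illustrative numbers, not Kinect specifications, and real sensors add calibration on top of this.

```python
C = 299_792_458.0  # speed of light in m/s


def tof_distance(round_trip_s):
    """Pulsed time of flight: light travels out and back, so halve the path."""
    return C * round_trip_s / 2.0


def triangulated_depth(focal_px, baseline_m, disparity_px):
    """Generic projector/camera triangulation: depth = f * b / disparity.

    focal_px: camera focal length in pixels; baseline_m: projector-to-camera
    distance; disparity_px: how far a projected dot appears shifted in the image.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Illustrative numbers only (not Kinect specifications)
print(tof_distance(66.7e-9))             # roughly 10 m
print(triangulated_depth(500, 0.1, 25))  # 2.0 m
```

With these formulas, a 1 cm change in distance shifts the round trip by only about 67 ps, which hints at why ToF modules need very fast timing electronics. Triangulation, by contrast, loses precision with depth, because the disparity shrinks as 1/z.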

Hi Martin,

Sorry for being imprecise. Of course LIDAR is a ToF measurement. The Kinect 2 (a.k.a. the Kinect One; what a confusing name) uses a specialized high-resolution 2D ToF sensor with 512×424 pixel resolution, which is somewhat different from conventional LIDAR systems. For emission, three “high” power lasers with special lens systems are used.

In contrast, the old Kinect 360 uses a laser to project a quasi-random 160×120 pattern through an astigmatic lens. The camera finds the dot location using the shape, direction and size of the detected dots.

The Kinect 360 was easily moddable. With the Kinect One, we failed when it came to hardware mods.

Best

From my very limited knowledge, the Kinect ONE _does not_ have a full hardware ToF sensor; it is more like a phase-detection sensor, and the bulk of the depth calculation has to be performed on the console GPU after pumping raw sensor data over USB 3.0.
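A phase-detecting sensor is still a form of time-of-flight measurement: with continuous-wave modulation, the round-trip delay shows up as a phase shift of the modulated light. A minimal Python sketch of the standard conversion follows; the 20 MHz modulation frequency in the example is an arbitrary illustrative value, not a documented Kinect One parameter.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range(mod_freq_hz):
    """Largest distance before the measured phase wraps around (c / 2f)."""
    return C / (2.0 * mod_freq_hz)


def phase_to_distance(phase_rad, mod_freq_hz):
    """Continuous-wave ToF: distance = c * phase / (4 * pi * f).

    The sensor only observes the phase modulo 2*pi, so the result lies
    within one unambiguous range.
    """
    wrapped = phase_rad % (2.0 * math.pi)
    return C * wrapped / (4.0 * math.pi * mod_freq_hz)


f_mod = 20e6  # illustrative modulation frequency, not a Kinect One figure
print(unambiguous_range(f_mod))           # about 7.49 m
print(phase_to_distance(math.pi, f_mod))  # about 3.75 m, half the unambiguous range
```

Because the phase wraps every 2π, a single modulation frequency only gives an unambiguous result within `C / (2f)`, about 7.5 m at 20 MHz; combining several modulation frequencies is a common way to extend that range.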

Sorry man, but Kinect2 isn’t good enough or really the right tech.

Great at detecting motion from 15 feet away, probably terrible at high-res scanning of the environment.

The Kinect actually works pretty decently if you care about the picture aspect. The Kinect is rated to about 3.5 meters and has a lower resolution. Also, you only get color if your work environment has enough light for the color camera to work (otherwise you only get the IR camera and depth map). Instead of using the Kinect, I would recommend the Structure Sensor (iPhone) or the Intel RealSense sensor (Android?). They are much smaller and work on the same principle as the Kinect.

I built something similar to this a few years ago with a UNI-T laser rangefinder I purchased for about 60 bucks. It can measure up to 25 m with a precision of ±1.5 mm, but only about once per second. The readout was done through an Arduino Mega 2560. The main flaw of that design was that I measured the rotation and inclination of the unit with a cheap IMU that was only good to about ±2 degrees. It was a proof of concept, but the next revision is going to get some nice 18-bit rotary encoders :D
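A quick back-of-the-envelope check shows why the IMU was the weak link. The Python sketch below converts an angular error into a sideways position error at the rangefinder's 25 m maximum, and compares the ±2° IMU with the nominal one-count resolution of an 18-bit encoder. The helper names are hypothetical, and encoder resolution is not the same as encoder accuracy, so treat the encoder figure as a best case.

```python
import math


def lateral_error(distance_m, angle_error_deg):
    """Sideways position error at a given range caused by an angular error."""
    return distance_m * math.tan(math.radians(angle_error_deg))


def encoder_resolution_deg(bits):
    """Angle of one count for an encoder with 2**bits counts per revolution."""
    return 360.0 / (2 ** bits)


RANGE_M = 25.0  # maximum range quoted for the rangefinder above

imu_err = lateral_error(RANGE_M, 2.0)                         # +-2 degree IMU
enc_err = lateral_error(RANGE_M, encoder_resolution_deg(18))  # one 18-bit count

print(f"IMU:     {imu_err * 1000:.0f} mm")  # about 873 mm
print(f"Encoder: {enc_err * 1000:.2f} mm")  # about 0.60 mm
```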

I also programmed a little GUI for controlling the device and viewing its measurements.

If you posted your results online, then your project is one of the ones that inspired this one. I just needed to kick up the sample rate. If you can do it, so can the rest of us!

“[adam] plans to drive the stepper motors in microstepping mode to increase the resolution of his scanner.”

I have little experience driving steppers, and it looks like a great idea! Would differences in the stepper’s windings add some inaccuracy to the positioning system?
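To put numbers on the microstepping idea, the Python sketch below assumes a common 1.8° (200 steps per revolution) motor driven at 16 microsteps with no gearing; these values are assumptions for illustration, not the Open LIDAR's actual parts. It computes the nominal angle per microstep, how an open-loop step counter maps to an angle, and the resulting spacing between neighbouring points at a given range.

```python
import math


def microstep_angle_deg(full_step_deg=1.8, microsteps=16, gear_ratio=1.0):
    """Nominal rotation per microstep; gear_ratio > 1 means a reduction after the motor."""
    return full_step_deg / (microsteps * gear_ratio)


def steps_to_angle_deg(step_count, **motor):
    """Map an open-loop step counter to a nominal axis angle."""
    return step_count * microstep_angle_deg(**motor)


def point_spacing_m(distance_m, step_deg):
    """Chord length between two samples at the same range separated by step_deg."""
    return 2.0 * distance_m * math.sin(math.radians(step_deg) / 2.0)


step = microstep_angle_deg()  # 1.8 / 16 = 0.1125 degrees
print(step, steps_to_angle_deg(3200))  # 3200 microsteps make one full turn
print(f"{point_spacing_m(10.0, step) * 100:.1f} cm between points at 10 m")
```

On the windings question: microstep positions are usually only approximately evenly spaced, and load torque can pull the rotor away from the commanded position, so the real accuracy is typically coarser than the nominal resolution. That is one reason to close the loop with a rotation sensor.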

You might want to ‘close the loop’ with some rotation sensors, like [Hassi] plans to do.

Another suggestion; don’t go cheap on the lazy susan! The ones you have LOOK great but if you can hear grinding then you probably should get another one.

Once again, your project looks great and I someday want to do this! :D

I just saw the steppers you were talking about, and they look really helpful. It seems that every time I turn around, there is another way to do things. Part of the fun of doing project work. I also just found out about hollow-shaft stepper motors to simplify the gimbal design.

Can someone explain the thumbnail vs. the actual output? I wouldn’t have thought the LIDAR-Lite 2 could capture both distance and imagery. How is the tunnel mapped? Is someone carrying the LIDAR on their head, or is it a stitched mesh from multiple scans? Great project!

In the write-up I tried to clarify the pictures I included. All pictures were made with a Faro Focus 3D (time donated by a local company). The pictures are from a single scan. The scan captures the distance and intensity of the points. Those points were loaded into Scene (the Faro software) to produce these pictures (from the point cloud itself). I’ve switched over to Autodesk ReCap to do the same work because my trial ended and I have access to Autodesk software as a student. The software creates a picture (or a spherical panorama) from the point of view of the sensor.
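To show what a picture "from the point of view of the sensor" can mean, the Python sketch below bins scan samples into an equirectangular panorama: pan maps to the horizontal axis, tilt to the vertical axis, and intensity becomes pixel brightness. This is a generic projection, not how Scene or ReCap render their views, and the `(pan, tilt, intensity)` sample format and function names are hypothetical.

```python
def equirect_pixel(pan_deg, tilt_deg, width, height):
    """Map a viewing direction to (column, row) in an equirectangular image.

    Pan covers 0..360 degrees across the width; tilt runs from +90 (top row)
    to -90 (bottom row) down the height.
    """
    u = int((pan_deg % 360.0) * width / 360.0) % width
    v = int((90.0 - tilt_deg) * height / 180.0)
    return u, min(max(v, 0), height - 1)  # clamp straight-down (and out-of-range) tilts into the image


def render_panorama(samples, width=360, height=180):
    """Bin (pan_deg, tilt_deg, intensity) samples into a 2D grid of brightness values."""
    img = [[0.0] * width for _ in range(height)]
    for pan, tilt, intensity in samples:
        u, v = equirect_pixel(pan, tilt, width, height)
        img[v][u] = max(img[v][u], intensity)  # keep the brightest sample per pixel
    return img


pano = render_panorama([(10, 0, 0.4), (10, 0, 0.9), (200, -30, 0.2)])
print(pano[90][10], pano[120][200])
```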

In the images I posted you may see some spheres. Those spheres are used in the software to “register” multiple scans together on a desktop computer. That’s how we get the top and side views of the passage including multiple scans at the same time. Since that software isn’t readily available to the caving community, my roadmap calls for a different method for registering scans together that will work on the device itself…but I need to get the scanner working at all before I go down that rabbit hole.
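Registration with spheres boils down to finding the rigid transform that maps the sphere centres seen in one scan onto the same spheres seen in another. The Python sketch below assumes, for simplicity, that both scans are levelled (so only a rotation about the vertical axis plus a translation remains) and that the sphere centres have already been detected and matched. It is a generic least-squares approach, not what the Faro or Autodesk software does internally, and the function names are hypothetical.

```python
import math


def register_leveled_scans(src, dst):
    """Estimate yaw + translation mapping matched sphere centres src -> dst.

    src, dst: lists of (x, y, z) centres in matching order. Assumes both scans
    are levelled, so only rotation about Z and a 3D translation are solved for.
    The source points must not all share the same XY position.
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched targets")
    cs = [sum(p[i] for p in src) / n for i in range(3)]  # source centroid
    cd = [sum(p[i] for p in dst) / n for i in range(3)]  # destination centroid
    # Least-squares yaw from the centred XY coordinates
    s_dot = s_cross = 0.0
    for (sx, sy, _), (dx, dy, _) in zip(src, dst):
        ax, ay = sx - cs[0], sy - cs[1]
        bx, by = dx - cd[0], dy - cd[1]
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    yaw = math.atan2(s_cross, s_dot)
    c, s = math.cos(yaw), math.sin(yaw)
    # Translation moves the rotated source centroid onto the destination centroid
    t = (cd[0] - (c * cs[0] - s * cs[1]),
         cd[1] - (s * cs[0] + c * cs[1]),
         cd[2] - cs[2])
    return yaw, t


def apply_transform(yaw, t, p):
    """Rotate point p about Z by yaw, then translate by t."""
    c, s = math.cos(yaw), math.sin(yaw)
    x, y, z = p
    return (c * x - s * y + t[0], s * x + c * y + t[1], z + t[2])


# Synthetic check: move three targets by a known transform, then recover it
src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 1.0)]
dst = [apply_transform(0.5, (3.0, -1.0, 0.25), p) for p in src]
yaw, t = register_leveled_scans(src, dst)
print(round(yaw, 6), [round(v, 6) for v in t])
```

Two targets are enough in the noise-free case, but three or more well-spread spheres make the estimate far more robust to measurement noise; unlevelled scans would need a full 3D method such as the Kabsch (SVD) algorithm instead.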

Oh, and the LidarLite doesn’t appear to do intensity. But the SF-30b appears to capture intensity, so I expect it to work similarly to the Faro. The two will give different images based on these limitations. With the LidarLite it will probably be necessary to convert the point cloud to a mesh and then set up artificial lights to create shadows.

Thanks for your reply in both cases. Sorry I missed the write-up; TL;DR, I only skimmed the documentation. You’ve cleared up a few things and I’m excited about this project!

Why have all these people who build gimbals for lasers, cameras etc. never heard of mirrors?

Because these modules have ~25 mm of spacing between the laser and the photodiode. So when we rotate the mirror, we get a parallax error from the mirror in addition to the error from the laser distance module. When the plane of the mirror is oriented so the laser and diode are equidistant from the mirror, you get [near] zero error, and when the mirror is rotated 90 degrees so the laser is closest to the mirror and the photodiode is farthest from it, you get maximum error. Also, good mirrors can be expensive. And a mirror adds one more optical element that needs to be cleaned (this is in a cave).

In addition, a spinning mirror only removes the sensor cart and the slip ring from the list of required equipment. So…I can literally mod this gimbal to use a mirror later without losing more than $10 (low on the list of activities currently).

High-end LIDAR machines with spinning mirrors likely use interferometric laser distance measurement, which does not suffer from parallax error in the same way. But the goal of this project is to use quick, off-the-shelf parts to make a reasonably usable device.

So, now someone can strap that Lidar on a drone and explore the caves without actually placing him/herself in danger. Also, recreating that scene from Prometheus would be awesome.

Hi Adam, This is Nathan from Tumbling Rock. Would like your help with a project if interested. Look me up please. Thanks!