Ever wonder why analog TV in North America is so weird from a technical standpoint? [standupmaths] did, so he did a little poking into the history of the universally hated NTSC standard for color television and the result is not only an explanation for how American TV standards came to be, but also a lesson in how engineers sometimes have to make inelegant design compromises.

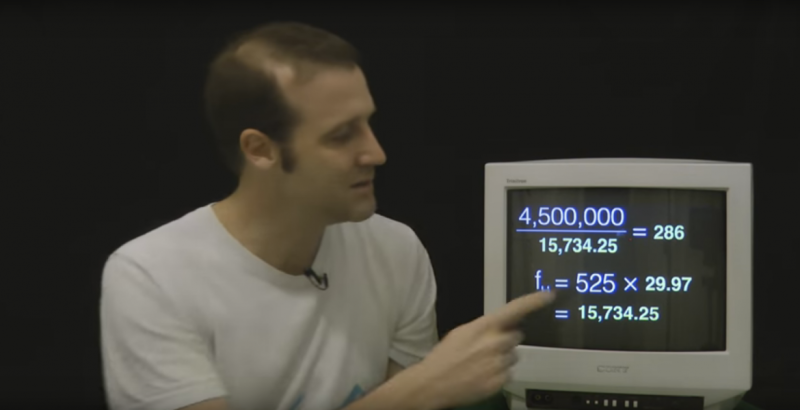

Before we get into a huge NTSC versus PAL fracas in the comments, as a resident of the US we’ll stipulate that our analog color television standards were lousy. But as [standupmaths] explores in some depth, there’s a method to the madness. His chief gripe centers around the National Television System Committee’s decision to use a frame rate of 29.97 fps rather than the more sensible (for the 60 Hz AC power grid) 30 fps. We’ll leave the details to the video below, but suffice it to say that like many design decisions, this one had to do with keeping multiple constituencies happy. Or at least equally miserable. In the end [standupmaths] makes it easy to see why the least worst decision was to derate the refresh speed slightly from 30 fps.
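For anyone who wants the arithmetic behind the 29.97 figure, here is a minimal sketch of the usual textbook derivation. It assumes the commonly cited NTSC colour rule: keep 525 lines, but make the line rate exactly 4.5 MHz (the sound-carrier offset) divided by 286, instead of the monochrome 525 × 30 = 15750 Hz. Variable names are illustrative only.

```python
from fractions import Fraction

# Monochrome NTSC: 525 lines at exactly 30 frames/s.
MONO_LINE_RATE = Fraction(525 * 30)            # 15750 Hz

# Colour NTSC (as commonly described): keep 525 lines and the 4.5 MHz sound
# offset, but make the line rate an exact submultiple of that offset.
SOUND_OFFSET_HZ = Fraction(4_500_000)
LINES_PER_FRAME = 525

line_rate = SOUND_OFFSET_HZ / 286              # ~15734.27 Hz
frame_rate = line_rate / LINES_PER_FRAME       # kept as an exact fraction
field_rate = 2 * frame_rate                    # two interlaced fields per frame

print(f"line rate:  {float(line_rate):.2f} Hz (was {float(MONO_LINE_RATE):.0f} Hz)")
print(f"frame rate: {frame_rate} = {float(frame_rate):.5f} fps")
print(f"field rate: {float(field_rate):.5f} Hz")
print(f"slowdown:   {float(frame_rate / 30):.6f} of the original 30 fps")
```

Using `Fraction` keeps the result exact, which makes it obvious that "29.97" is really 30000/1001, i.e. 30 fps slowed by a factor of 1000/1001.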

Given the constraints they were working with, the fact that NTSC works as well as it does is pretty impressive, and quite an epic hack. And apparently inspiring, too; we’ve seen quite a few analog TV posts here lately, like using an SDR to transmit PAL signals or NTSC from a microcontroller.

Title image by Denelson83 [CC-BY-SA-3.0], via Wikimedia Commons

I absolutely LOVE how I get a video suggested on YouTube, and the next day there is an article here about the video… It has happened with the last six or so of these, too. It is hilarious that even with all the gaming, trucker and tow-truck-driver videos I watch, I watch enough DIY videos to get the same suggestions as the HAD editors…

Some buddies in an IRC room noticed that if they shared a link in the room, it would be suggested on YouTube to folks who didn’t click the link. Google knows your friends…

I have some insight into how that happens, and it’s not what people imagine. Let’s say it’s a YouTube link, shared in a semi-public space like Discord.

The people in the group have no other connection, yet most of them end up seeing the same video in their feeds and think Google is spookily watching them.

In reality, many of them already had this video suggested and hadn’t noticed it yet. All of these users get the same suggestion because it’s based on their demographics plus some randomization, in an attempt to keep you engaged.

The same goes for when you are talking about a subject and nobody searches for anything related, yet later you see suggestions for this obscure thing and think “OMG, they are listening to me.”

No. In this case, the subject came up in conversation because the person had already been exposed to the idea and either didn’t remember where it came from or wilfully acted as if it were their original thought.

Basically, by the time you notice an ad, it’s been flashed in your view dozens of times through subtle placement, and by the time you have started talking about the thing, it’s been ingrained so deeply that you actually think it was your idea in the first place.

“John Carpenter’s They Live” is a very accurate example of how this is accomplished, but in real life there are no aliens or portal watches.

And before Europeans start beating up on NTSC, just remember that monochrome NTSC came over twenty years before PAL, and color NTSC came 10 years before.

Of course PAL can be superior — it is designed for color from the start, could use all of the lessons learned from NTSC, and was based on an electronics industry that was 20 years more mature. NTSC was invented even before the transistor!

We learn from others’ mistakes.

And it only took you a decade to do it.

Only is the key word here. The USA should figure it out sometime in the next 50 years.

And long before that John Logie Baird wanted to deploy 1000-line colour system :)

From Baird that would have been extreme wishful thinking because he was stuck on attempting to perfect an electro-mechanical system, despite freely given information from Philo Farnsworth who invented the all electronic TV method.

PAL was also black and white to start with; it just wasn’t called PAL then, but the same system was in use before color was added.

If I remember my history correctly, there was a time when European TV standards were a mess too. France had an 819-line TV standard (when I lived in the Netherlands, we had an old black and white TV with a button to switch between 819 and 625). Great Britain also had several different standards; BBC2 was 625 lines from the start, but BBC1 used a different number, I don’t remember how many.

And then of course there are many minor differences between analog TV standards internationally; for example, in Britain the audio carrier was 6 MHz away from the video carrier, whereas in continental Europe the offset was 5.5 MHz. And in Latin America they used a version of PAL called PAL-M that runs at 60 Hz.

Sorry, PAL-M was for Brazil and… I don’t remember where else. The countries with 50 Hz, like Uruguay and Argentina, had PAL-N. We got the bandwidth of NTSC with the color method of PAL; what could have been worse?…

There was 405-line before 625-line, the BBC broadcast both for a while on what became BBC 1.

Then France, Russia and parts of the Middle East had SECAM. The primary reason for France inventing it seems to have been NIH, and the other countries adopted it so that they could have a standard different from other countries (so residents couldn’t watch TV from other countries).

Even for the French, the primary reason was to prevent local reception of ‘outside’ broadcasts, although in their case it was to do with French law – which ‘outside’ broadcasters did not have to comply with – rather than censorship, as in the case of the Soviet Union and other Warsaw Pact countries.

Quite often, the place where a new technology first gets wide use gets stuck with the worst version of it for a long time, while other areas build on the original and improve it.

That’s why Britain has a struggling Victorian rail system.

…And really awful DAB digital radio.

and America has a struggling stone age rail system

America has a very nice rail system but it’s intended for freight. In Europe the very nice rail system is intended for passengers and most freight doesn’t travel by rail.

Exactly. Outside of the Northeast Corridor, it’s a freight system with some passenger trains. As more metro areas deploy assortments of technologies, it becomes even more fragmented from a passenger standpoint.

And awful HD Radio. Guess we’re not so different from our friends on the other side of the pond after all!

The BBC looked into adopting NTSC as the UK’s colour system, integrating it into the existing 405 line transmissions. One of the TV museums has an American round-screen colour TV converted to 405 line for their experiments.

It’s also worth reminding NTSC haters that the vast majority of NTSC sets didn’t implement the full standard in their decoders, for cost-cutting reasons. Thus the original NTSC sets like the RCA CT-100 give better colour performance than some that followed.

The old dot triad CRTs had a better looking picture than the aperture grille and slot mask types. The phosphors were printed onto the glass as discrete dots instead of continuous vertical stripes. Thus they did not have the vertical color bleeding from energized areas of the phosphor stripes glowing outside their pixel.

What the aperture grille (Trinitron and the short-lived copies that appeared after the patent ran out, not long before flat panels took over) and slot mask had going for them was a brighter picture, due to there being less metal between the electron gun and the face of the tube.

Distortion was also easier to control. Trinitron and copies stretched the grille tightly vertically but had to maintain the sideways curve. With a slot mask it was possible to stretch it both ways and make a perfectly flat faced CRT.

That could be done with a dot triad shadow mask but I doubt it ever was.

One method of distortion management was to manufacture the mask distorted in such a way that when it heated up to operating temperature it would warp into proper shape to align the beams properly with the phosphor dots.

In the 1980s Zenith offered a 14″ flat tension mask (FTM) tube that used the triad arrangement: the model 1490. The similarity between 1492 (the year Columbus reached the New World) and 1490 led some wags to claim Zenith’s FTM restored the world to its (proper?) flat Earth state.

Yeah…. I kinda sensed “You suck, we rule” vibes here.

The original work that led to backwards compatible colour systems was done during the 1930s and 40s. There were several prototype colour systems around before WW2 in the US and Europe, but the receiver technology was not ready for mass production. As a result B&W TV came first. By the time the receiver technology had advanced enough to make colour TV manufacturing cost effective, the installed base was too big. It wasn’t just the receivers that were a problem for changing something like the line frequency or channel spacing, it was all the transmitters, and the distribution networks. And this was all before transistors!

The theory behind both NTSC & PAL was around in the early 40s, and the US networks were shown demos of a 525/30 version of PAL sometime in 1945 or 46 (I can’t find my notes, so I am going from memory. In 1987 I met one of the engineers who was there!), but when they asked when it would be ready, the answer was maybe 5 years. The receiver circuitry to sync with the alternating sub-carrier phase was not easy to do cheaply at the time using valves (tubes). The circuits were just not stable enough without making them more complicated. In fact I don’t think PAL colour receivers became affordable until the late 60s when the decoders could use transistors. My Dad bought a colour TV in 1967, which had valves in all the RF and high voltage stages, but transistors in the PAL decoder and audio sections. In 1945/46 NTSC receivers were practical and the manufacturers said they could have them in the shops immediately, so that’s what the networks went with!

For the record, the standard acronym for NTSC is Never Twice the Same Color. PAL is Pictures At Last, and SECAM is System Entirely Contrary to the American Method. The differences are due to distortions to the sub-carrier in the distribution/transmission chain. With NTSC these show up as changes of HUE, so faces go green or purple. The line-by-line phase alternation in PAL cancels out the phase distortion, but this reduces the SATURATION, so colours will look faded. NTSC & PAL both use a form of AM (Amplitude Modulation, specifically quadrature AM with a suppressed carrier) for the colour sub-carrier, while SECAM uses FM (Frequency Modulation), which gets round the problem and makes the signal much more robust.
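The hue-versus-saturation trade-off described above can be sketched with complex numbers. This is an idealised model, not a receiver design: chroma is treated as a phasor U + jV, a transmission phase error rotates it, and PAL is modelled as inverting V on alternate lines, with the receiver un-inverting and averaging two consecutive lines (the job the delay line does). It assumes both lines suffer the same phase error; the colour and the 20 degree error are hypothetical values.

```python
import cmath
import math

def ntsc_received(u: float, v: float, phase_err: float) -> complex:
    """Chroma as a phasor U + jV; a phase error in the chain rotates it (hue shift)."""
    return complex(u, v) * cmath.exp(1j * phase_err)

def pal_received(u: float, v: float, phase_err: float) -> complex:
    """Idealised PAL: V is sent inverted on alternate lines, both lines see the
    same phase error, and the receiver un-inverts one line and averages the pair."""
    rot = cmath.exp(1j * phase_err)
    normal_line = complex(u, v) * rot
    inverted_line = complex(u, -v) * rot
    restored = inverted_line.conjugate()   # flip V back: now rotated by -phase_err
    return (normal_line + restored) / 2    # equals (U + jV) * cos(phase_err)

u, v = 0.3, 0.4                 # hypothetical colour with saturation 0.5
err = math.radians(20)          # hypothetical 20 degree phase error

n = ntsc_received(u, v, err)
p = pal_received(u, v, err)
true_hue = math.degrees(math.atan2(v, u))
print(f"NTSC: hue shift {math.degrees(cmath.phase(n)) - true_hue:+.1f} deg, saturation {abs(n):.3f}")
print(f"PAL:  hue shift {math.degrees(cmath.phase(p)) - true_hue:+.1f} deg, saturation {abs(p):.3f}")
```

In this model NTSC keeps full saturation but the hue is off by the whole phase error, while PAL keeps the hue and loses saturation by a factor of cos(error), which matches the "faces go green" versus "colours look faded" description.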

Of course digital TV gets round all of these problems, but analogue is going to be around for some time yet in parts of the world where the cost of converting is still too high.

Here in Australia we maintained compatibility with black and white TV with the PAL colour standard we used here.

If the engineers of the day had taken his suggestion and gone to 625 lines, existing black and white TVs would not have been able to display color channels, and that was a huge consideration because the cost of a TV set was far higher in terms of typical incomes than it is today. It would also have resulted in even crappier horizontal resolution than we had, because we would still have had only 4.5 MHz for all that pixel data, and the color smearing would have been worse as well.
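The horizontal-resolution point can be checked with a rough model: a channel with video bandwidth B carries about 2B independent samples per second (Nyquist), so at a fixed frame rate the horizontal detail per line is proportional to how long each line lasts. This sketch uses the 4.5 MHz figure from the comment and ignores blanking, so the absolute numbers are upper bounds; the 625/525 ratio does not depend on those assumptions.

```python
# Rough model: fixed bandwidth and frame rate, more lines means shorter lines.
VIDEO_BANDWIDTH_HZ = 4.5e6        # figure used in the comment above (assumption)
FRAME_RATE = 30000 / 1001         # NTSC colour frame rate

def samples_per_line(lines_per_frame: int) -> float:
    """Upper bound on horizontal samples per line (ignores blanking)."""
    line_period_s = 1 / (lines_per_frame * FRAME_RATE)
    return 2 * VIDEO_BANDWIDTH_HZ * line_period_s

for lines in (525, 625):
    print(f"{lines} lines: ~{samples_per_line(lines):.0f} samples per line")
print(f"625/525 horizontal detail ratio: {samples_per_line(625) / samples_per_line(525):.2f}")
```

The ratio comes out as 525/625 = 0.84: the extra lines would have been paid for with roughly 16% less horizontal detail.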

In the UK we started with 405-line and then added 625-line later on, keeping 405-line legacy transmissions for a while so people weren’t left behind too abruptly. All technology has obsolescence at some point, the question is at what point do you cut away the dead flesh and move forward.

Kind of inelegant, though.

How can creating a nice clean standard with a transition period be considered less elegant than the mess that invariably ends up when you use the alternative? We did so with digital TV too: run them side by side and then turn off the analogue stuff.

Speaking of digital, the US ended up with ancient MPEG-2.

By 1953 there had been enough TVs sold for about 1/5th of the US population. Color didn’t gain much of a foothold here until 1964~1965.

In 1964 most American TV shows were monochrome. NBC made the decision to go 100% color in 1965; any show that didn’t go to color wasn’t on NBC anymore.

ABC and CBS transitioned many of their shows to color in 1965 and most, if not all, by 1966.

Adopting a new color system not backwards compatible with NTSC monochrome would have been enormously expensive for broadcasters and the TV owning public. A dual broadcast system would have required TV stations to buy second transmitters and pay the FCC for additional channel licenses.

The bandwidth was there in VHF but in most areas they liked to keep one blank channel between active ones to prevent interference, though for some reason it seemed to be less of an issue between channel 6 and 7. Here we had CBS on 2, PBS on 4, ABC on 6 and NBC on 7, with no UHF in use. Then in 1980~81 an independent started on 12 then ~1990 another started on 9. By then FOX was on 12. A few years ago FOX moved to 9 and 12 went independent.

ATSC’s use of the 6 MHz channel bandwidth is much more precisely controlled, so it can be used for multiple subchannels. IIRC a single 1080p broadcast will use most of the bandwidth, so nobody in the USA broadcasts anything over 1080i, with most stations running a single 720p and multiple 480p subchannels.

The licensing scheme in Canada requires a separate license for each subchannel, so up there they have stations broadcasting a single 1080p channel, with few using any subchannels.

VHF channels 6 and 7 aren’t actually adjacent. They sit in two different bands separated by about 90 MHz.

FM radio and other services sit between 6 and 7.

Also a small gap between 4 and 5.
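The gaps mentioned in this sub-thread fall straight out of the US VHF TV channel plan. This is a small sketch of that plan as I understand it (6 MHz channels: 2 to 4 start at 54 MHz, 5 and 6 start at 76 MHz, 7 to 13 start at 174 MHz); the function name is just for illustration, and channel 1 is deliberately not included.

```python
def vhf_channel_mhz(ch: int) -> tuple[int, int]:
    """Lower and upper edge in MHz of a US VHF TV channel (each 6 MHz wide)."""
    if 2 <= ch <= 4:
        low = 54 + 6 * (ch - 2)
    elif 5 <= ch <= 6:
        low = 76 + 6 * (ch - 5)
    elif 7 <= ch <= 13:
        low = 174 + 6 * (ch - 7)
    else:
        raise ValueError("VHF TV channels run from 2 to 13")
    return low, low + 6

# Spectrum between the top of one channel and the bottom of the next.
for a, b in [(3, 4), (4, 5), (6, 7), (12, 13)]:
    gap = vhf_channel_mhz(b)[0] - vhf_channel_mhz(a)[1]
    print(f"channel {a} -> {b}: gap {gap} MHz")
```

Channels 3/4 and 12/13 are truly adjacent (no gap), 4 and 5 are separated by 4 MHz, and 6 and 7 by 86 MHz, which is why a 6/7 pair in the same market caused far less adjacent-channel trouble.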

I had an early RCA with channel 1 on it.

You could purchase small B&W TVs well into the 80s. My parents bought me one as a graduation present in 1980, but there were also small portable ones that ran off 12 volts.

I think the little 5″ ones made it into the 2000s, but 12″ B&W disappeared when color sets got cheaper.

Holy crap you can still buy them on amazon.

Why would you buy one when the last NTSC broadcast in the US was years ago?

CCTV, maybe, but all you need is a monitor, not a full TV…

Lots of weird stuff in the corners of Amazon, I think.

Crappy TVs have frequently been cheaper than monitors.

You can read the proper explanation on Wikipedia: https://en.wikipedia.org/wiki/NTSC#Color_encoding

bad youtube

Nothing said on Wikipedia contradicts what is said in the video, and no assertion in the video is wrong. This is a good YouTube video.

There are a number of errors in the video.

PAL wasn’t just European.

The time periods were wrong because the US had National Television System Committee (NTSC) black and white and then NTSC color.

In my country we had an un-named standard for black and white which was subsequently called PAL when color was added.

So to say the US had NTSC ‘X’ years before there was PAL is comparing the time of US black and white to other countries color.

Then there is the issue of perceived picture quality, which was explained as a difference in resolution, 525 lines vs 625 lines. That has nothing to do with why NTSC has poor color. Other systems have better color when transmitted because their modulation schemes were designed to cancel the phase distortions that occur with terrestrial transmission, and that is why Phase Alternating Line (PAL) is better when transmitted.

It’s still a very good video and an excellent guide to help understand NTSC.

It will actually help me with one project that uses NTSC, where no one has been able to tell me which version of NTSC is meant, because they don’t seem to understand that, as an outsider, I see two versions. Now I finally understand why Americans don’t know that there are two versions.

The two versions being the different frame rates.

About the phase distortions for color. PAL used a modulation scheme that requires using an analog delay line. These days those are a slab of crystal with piezo elements along the edges. Did those even exist when NTSC color was created?

Did PAL delay lines exist when NTSC color was created – No.

Did the supporting technology exist to create delay lines – Yes, and especially so in the US after WW2 when crystal technology was important.

It’s fair to say that the later version of PAL used the delay line. The earlier and completely compatible version didn’t, it relied on your eye to cancel out the errors between lines.

Great video! I wish I had understood this stuff 15 years ago; I would have been hacking TV channels, damn you digital!!! Also, it would have been better to include SECAM, which is used in France, parts of Africa and Russia.

Get a Hides.com.tw USB television modulator and you can have another go with digital TV without breaking the bank.

Thanks Bob, I think I will give that a go. While NTSC & PAL are interesting, I wouldn’t really want to put too much time into dying tech.

NTSC–the first example of a very-widely-adopted, lossy video compression system.

Actual NTSC isn’t compressed and works perfectly over a cable but as soon as you transmit it terrestrially, phase distortions and cancellations degrade the color information.

Discarding information (such as interlacing) is a form of lossy compression.

It most certainly is not. Compression implies digitization, which wasn’t involved in video when NTSC was conceived.

Yeah…well.. No:

https://en.m.wikipedia.org/wiki/Dynamic_range_compression

Compression exists in the analog world.

But this is not compression as the correct frame rate is specified so there isn’t missing information. It’s just transmitting the same amount of information in a different format.

@Localroger is right, the decision to bodge together an NTSC colour (yea, COLOUR!) standard was more sociological than technical. Modern NTSC colour was introduced in 1953; by that time there were about 21 million* TV sets in the U.S., so there was no way people would stand for their TVs being junked practically overnight (which I’m sure the NTSC assumed would happen), so they went with the hack instead of pissing off 21 million TV viewers. (Sure, the same transition happened in Britain from 405 to PAL 625, but considering the Brits just had a couple of channels at the time and 405 was kept on air, who cared?) So the fact is NTSC was a bodge, but it had to be, because the two things Americans love most are TV and guns, and if you take away their TV they’ll go get their guns, and nobody wants that!

*

http://www.tvhistory.tv/Annual_TV_Sales_39-59.JPG

Oh yea, and for all the NTSC Colour haters just be glad the NTSC didn’t adopt (or finalize the adoption) of the CBS Field Sequential Colour System, yikes!

https://en.wikipedia.org/wiki/Field-sequential_color_system

Yep, as a mate of mine and I used to suggest:

NTSC – Never The Same Colour

SECAM – System Even Cr@pper than the American Method

PAL – Perfect At Last

I was told by my broadcast systems lecturer that PAL could stand for Pale And Lurid but they seem rather conflicting. One disadvantage of PAL is that it averages out chroma values which means it actually lacks some of the colour accuracy and definition of NTSC, however the chroma phase is always more accurate.

That was a deliberate design choice as the human eye is far more sensitive to colour shifts than amplitude shifts.

The ‘Pale And Lurid’ label comes from the fact that the technology is an exact science but the human eye isn’t :) Some people are more sensitive to colour and/or brightness shifts than others.

Picture Always Lousy

FSCS looks so much simpler and is easier to understand, but would have performed terribly compared to NTSC color. Hacking color into NTSC is really one of the great epic hacks of 20th century engineering. That it worked at all was a miracle, and it really wasn’t until the occasional computer using the TV as a color display that we really realized just how bad it was. It was incredibly well designed for its intended purpose of adding color to live scene moving images without adding to the bandwidth needed for existing channel allotments.

The old computer CGA video standard had two palates and one of them deliberately used the problems with NTSC monitors to generate the extra palate. I never saw this as we didn’t have NTSC TVs here.

LGR (Lazy Game Reviews) on YouTube has a demonstration of this effect.

*Palette (Palate is the roof of your mouth)

That’s what you get with multiple-language spell checkers. It looks wrong when you read it, so you just think it must be correct in US English or British English.

The main reason for the later introduction of colour television in Europe is that Americans had more money in their pockets because they didn’t have to rebuild a country that had been bombed to bits. The UK was broadcasting TV before the war and had implemented the technology shift from mechanical to electronic scanning. When the European economies started generating significant amounts of disposable income all sorts of ‘modern’ products and infrastructure became affordable and better TV was part of that.

The US colour TV system was an engineering marvel: count the number of transistors in today’s cameras, mixers, distribution and transmission equipment, let alone the consumer receivers, and then count the number of valves (tubes) in early equipment.

The engineers involved in the NTSC system (it’s not just the receiver) brought out a masterpiece of technology, and it had a lot of compromises in it because it was designed by engineers not prima donnas. As for the differing standards – ’twas always thus, and still is to a great extent. Politicians think standards are there to make life difficult for foreigners.

This is something I don’t think was touched on in the video: there were more people in the USA with TVs than pretty much everywhere else combined. Prior to 1942, TV in America was more of an experiment by a couple of broadcasters in New York. When NTSC Black and White was established in the fall of 1941, many of the TV sets were incompatible and obsoleted by the standard. There was also a frequency reallocation that occurred at some point, which messed things up too. But as you can see, there were fewer than 10,000 TVs out there, so it was deemed acceptable.

However after the NTSC Black and White standard was established you see rapid growth from 1942 onward. When Color came along, the last thing anyone wanted to do was piss everyone off again by either obsoleting hardware or doing another frequency reallocation. They also didn’t want to establish the color channels on a new frequency range because the only available slots were much higher frequencies which would have made interference greater and required a greater number of terrestrial broadcast towers.

So NTSC Color was a huge hack that kept all of the B&W televisions working while enabling new Color TVs, with the added benefit that the channels remained exactly the same. Think about the frequency reallocation for the Digital Transition; it sucked but was surprisingly smooth.

Have a look at http://www.farnovision.com Philo T. Farnsworth was definitely a hacker. He knew exactly what he wanted to do to make all electronic television work, and worked deliberately towards that goal.

“We didn’t know it couldn’t be done, so we just went ahead and did it.”

Or maybe it had less to do with keeping Americans happy and more to do with making lots of money. Because let’s face it, how can you reach people inside their houses with advertising if their TV doesn’t work?

Except I think Elvis shot his TV due to the programming he received rather than not having any.

https://xkcd.com/927/

Um, when NTSC was being considered, Europe had sheep and goats roaming around. TV came decades later, and by then they were able to learn from the mistakes of their masters, so yeah, it is easier to imitate than to innovate; ask China. But sometimes you do get the benefit of the lessons learned by the innovators you are imitating.

We still have sheep and goats roaming around, we eat them.

The first regularly scheduled TV station was British, not American; all American stations up to that point did not have regular schedules and were considered experimental.

Europe was busy inventing TV back when the US had buffalo roaming around.

It’s not the sheep and goats that were the problem but the fact that they had just been through 6 years of war.

One forgets that it took 20 years for color to penetrate the market. There were black and white shows into the 70’s.

My grandmother even had a B/W TV in the early ’70s (when I was a small child), which was still a bonus, because we did not even have a B/W one.

The first television set I ever bought was a 13″ Quasar color TV in 1976. I think my parents still had a B/W set at the time. They bought it around 1966, I think. I still remember seeing NBC’s peacock logo in black-and-white, accompanied by the phrase, “The following program is brought to you in living color” at the start of programs.

Another part of this worth considering is the bandwidth that was achieved using the raised cosine for the teletext. That was a marvellous bit of design given the technology available at the time.

Part of the trouble with introducing a “more horizontal lines” standard in the US is that, while older sets could handle a slightly-wrong refresh rate, the time base circuits that determined the number of lines were originally based on components that multiplied the refresh rate by the odd numbers 3, 5, and 7. NTSC was (3*5*5*7) lines, while PAL is (5*5*5*5) lines. For American sets to accommodate a 625-line format, TV repairmen would have needed to retrofit additional timing electronics into every set, with a switch to select between standards (because not all stations would upgrade at the same time), so it was far simpler to tweak the broadcast sync signal at no cost to the consumer.
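The factorisations quoted in that comment are easy to verify. This sketch only checks the arithmetic (plain trial division); it says nothing about how any particular sync generator or receiver was actually built, which is the commenter's claim. The British 405-line count is included for comparison.

```python
def prime_factors(n: int) -> list[int]:
    """Prime factorisation by trial division (fine for small numbers)."""
    factors, d = [], 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)   # whatever is left is itself prime
    return factors

print(prime_factors(525))   # [3, 5, 5, 7]
print(prime_factors(625))   # [5, 5, 5, 5]
print(prime_factors(405))   # [3, 3, 3, 3, 5]
```

All three line counts are odd products of small primes, which is what lets a chain of small divide-by-N stages relate the line rate to the field rate.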

>TV repairmen would have needed to retrofit additional

Which is what happened in post-Soviet-bloc countries when transitioning from SECAM to PAL (Poland in 1995), and again for FM radio in 2000.

The Slow Mo Guys captured scanlines being drawn on a CRT TV in extreme slow motion; it’s interesting to watch them being drawn (118000 fps!):

https://www.youtube.com/watch?v=3BJU2drrtCM

Also worth mentioning is that PAL and NTSC are not TV systems. They are colour encoding standards. They don’t define the number of lines, the number of frames etc, which is part of the TV standard. They only define the method to piggyback the supplemental colour information onto the monochrome TV standard. In fact the term “composite video” means exactly that. A combination of the monochrome analog information with the modulated chroma supplemental information.

And the reason for the 30/25 fps choice was that the power transformers in the TV sets, running at 60/50 Hz, were affecting the CRT’s deflection coils, resulting in a rolling, horizontal, relatively faint line. With the frame rate set to an exact submultiple of the mains frequency, that interference line was not noticeable.
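A quick sketch of what happens to that interference once the field rate is no longer exactly the mains frequency. It assumes the disturbance is locked to 60 Hz mains and the picture to the 59.94 Hz (60000/1001) colour field rate; the bar then drifts at the beat frequency between the two.

```python
MAINS_HZ = 60.0
FIELD_RATE_HZ = 60000 / 1001       # NTSC colour field rate, ~59.94 Hz

beat_hz = MAINS_HZ - FIELD_RATE_HZ # = 60/1001 Hz
roll_period_s = 1 / beat_hz        # time for the hum bar to cross the whole picture

print(f"beat {beat_hz:.4f} Hz, hum bar crosses the screen every {roll_period_s:.1f} s")
```

With exactly 60 fields per second the bar would stand still; at 59.94 it creeps through the picture roughly every 17 seconds, which is slow enough that a faint bar remains hard to notice.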

Basically the whole colour information embedding is an amazing hack, worth checking. Long story short, the analog video signal, from a spectral content point of view, is not continuous. It looks like a large number of “carriers” with “gaps” in between them. The modulated colour information has the same fragmented look. So the ingenious hack was to adjust the colour subcarrier frequency so that the “carriers” of the colour signal would fall in the “gaps” of the black and white signal, avoiding interference while essentially occupying the same bandwidth. That’s the reason why both the NTSC and PAL subcarrier frequencies have to be so precisely set relative to the black and white signal.
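For NTSC, the "fall in the gaps" trick can be written down exactly: luma energy clusters at integer multiples of the line rate, and the colour subcarrier is placed at 455/2 times the line rate, i.e. exactly halfway between two of those harmonics. This sketch covers NTSC only (PAL uses a different offset) and assumes the colour line rate of 4.5 MHz / 286.

```python
from fractions import Fraction

line_rate = Fraction(4_500_000, 286)           # NTSC colour line rate, Hz
subcarrier = Fraction(455, 2) * line_rate      # chroma subcarrier, Hz

print(f"subcarrier: {float(subcarrier):.2f} Hz")   # the familiar ~3.58 MHz

# Position of the subcarrier measured in line-rate harmonics: a half-integer,
# so chroma sidebands interleave between the luma clusters.
harmonic = subcarrier / line_rate
print(f"subcarrier = {harmonic} x line rate")
```

The exact value is 315/88 MHz, about 3.579545 MHz, which is why NTSC hardware is full of 3.58 MHz crystals.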

Now, this is quite an amazing hack if you consider that a monochrome analog signal with a bandwidth of 4.5 MHz was combined with the modulated colour supplemental information, with a bandwidth of about 2 MHz, without increasing the TV channel’s bandwidth by even a single Hz!

I’d love to see a Hackaday article on the subject from someone who actually understands the whole story, because the video referenced in this article is both inadequate and in many cases inaccurate. Not to mention the blatantly false assumption that the horizontal lines could be upped to 625 without any consequences (apart from the compatibility issues with pre-existing TV sets, keeping the same bandwidth would result in a higher vertical resolution and a lower horizontal resolution).

Here are the TV Systems, not colour encoding schemes.

https://en.wikipedia.org/wiki/Broadcast_television_systems#ITU_standards

And this is why he got into trouble with frame rates – ‘Inception’ at the next level

https://www.youtube.com/watch?v=Yp12c3-IL-I

:o)

I remember taping NTSC broadcasts on VHS tape… Dear God… ;_;

There… was color in it… I guess…

I always enjoyed referring to this as “recording on masking tape”.

I’ve seen that on the first-gen VCRs, and on later ones in Super Extra Hyper Ultra Long Play mode… it looked like you’d given the kid cans of spray paint to do her coloring book with.

This is in no way an ‘inelegant’ solution. Even integers are fine for looking at designs and code but have no bearing on the real world outside our logical organizations. That they managed to balance societal forces, engineering needs, and governmental regulation to a completely successful design is amazing.

They found a technical solution that solved their interference problems and allowed the country that at the time had the most television sets to continue using those sets. A TV could continue being used for decades instead of becoming obsolete. Squeezing a whole new data set into a format that was never designed to carry it allowed for a wider set of products to be available. Let’s not forget that a TV was a major purchase for households before recent times.

Heck, in 1986, when my parents replaced our old vacuum tube TV with a new (remote control!) set, I remember standing there, turning it on and off, marveling at how the picture came on almost instantly.

This might seem silly but was there no way they could have encoded TV signals into mains electricity, that way there would be no need for transmitters?

Transformers and PFC capacitor banks at each and every power station severely limit the bandwidth. Even audio transformers for tube amplifiers have their windings split into sections just to limit inductance and parasitic capacitance, so the sound is not distorted. There is also a reason why they don’t use sheets of iron to make any RF transformer or inductor…

Yeah when you look at it like that it makes sense. Thanks for answering.

They should have abandoned interlacing once they transitioned to digital. What gets interesting is that some early multi GPU setups worked by using the two GPUs to render the two halves of an interlaced image and using some logic to deinterlace the image for display. Although that worked really well for load balancing, modern multi GPU (SLI) setups do not do that as good deinterlacing is apparently harder than splitting the image just once and using some logic to decide the split point for load balancing!

Likewise, the oddball nonintegral frame rate should be abandoned for nonrealtime transport mechanisms like physical media and on demand online streaming. It causes problems with GPUs that were designed with only integral frame rates in mind. (e.g. Ivy Bridge and earlier PCs that were popular as HTPCs!)

Not one of those things is true.

“The received line picture was evident this time.”

Not nearly as pithy as “What hath God wrought?” or “Watson! Come here! I want you!” or “Mary had a little lamb.”

If only Farnsworth had written in his journal what George Everson, the man who convinced the most penny pinching banker on the West coast to invest $25,000 in Farnsworth’s television idea, telegraphed to the bankers.

“The damned thing works!”

All analogue TV was bad!

There’s a key difference between NTSC and PAL that you don’t take into account: the comparative distortion of sound. 25 fps might be a nice metric number, but it’s also a decision to be so out of sync with the 24 fps of film that there’s an audible difference in pitch, which the difference between 29.97 and the divisible-by-three 30 fps doesn’t introduce.
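The size of the pitch difference is simple arithmetic. This sketch assumes the common practices of running 24 fps film at 25 fps for PAL (a straight speed-up) and slowing it to 24000/1001 fps for NTSC so that 3:2 pulldown fits 29.97 fps; it ignores any pitch correction a broadcaster might apply.

```python
import math

def semitones(ratio: float) -> float:
    """Pitch change in semitones when playback speed is scaled by `ratio`."""
    return 12 * math.log2(ratio)

pal_speedup = 25 / 24                   # 24 fps film run at 25 fps
ntsc_slowdown = (24000 / 1001) / 24     # 24 fps film slowed to ~23.976 fps

print(f"PAL:  speed {pal_speedup - 1:+.2%}, pitch {semitones(pal_speedup):+.3f} semitones")
print(f"NTSC: speed {ntsc_slowdown - 1:+.3%}, pitch {semitones(ntsc_slowdown):+.4f} semitones")
```

The PAL speed-up is about 4% (roughly 0.7 of a semitone, audible to many listeners), while the NTSC slowdown is only 0.1% (under two hundredths of a semitone).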

As a total Luddite, it took a while, some reviewing and then reading the comments to KIND of get the idea, but I am still left with one question (as uninformed as it might be): why the need to interlace the image? Why not just write all lines consecutively? Wouldn’t that eliminate the extra time lost in the writing process, and wouldn’t that change the math dilemma?

Because in those days, sending 50 or 60 full progressive frames per second used too much spectrum. You could send 25p or 30p, but that would still be a slower refresh rate and cause flicker. So instead of sending 50 or 60 full frames per second, they invented interlacing, which takes a 50p or 60p sequence and sends only half of each frame: the half of one frame containing its odd rows of pixels is sent, and then the half of the next frame containing its even rows. When you are watching interlaced video, you are watching parts of two different frames shown as different parts of the same frame on screen. This halves the spectrum needed for transmission: a 60i video can use the same amount of frequency spectrum as a 30p video.

You can also split a true single 25p or 30p frame from a progressive source into two half-frames and send them as an interlaced signal, for compatibility with interlace-only displays. This is called progressive segmented frame. Because the screen is refreshing so fast, first showing the odd field and then the even field, it looks like genuine full-frame progressive video even though it isn’t. Flicker is reduced even when showing interlaced content made from segmented progressive frames, because each full frame is still shown the same way, odd field first and then even field, at double the refresh rate of the progressive source.
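The field split described above is easy to picture in code. This toy sketch represents a frame as a list of pixel rows: each field is every other row, so a field has half the lines of a frame, and sending two fields per frame period costs the same line rate as one full frame. The function names are illustrative, and `weave` is the simplest possible re-interleave; real deinterlacers have to cope with the two fields coming from different moments in time.

```python
Frame = list[list[int]]

def split_fields(frame: Frame) -> tuple[Frame, Frame]:
    """Split a frame (a list of pixel rows) into its two interlaced fields."""
    odd_field = frame[0::2]    # 1st, 3rd, 5th... lines (rows 0, 2, 4 counting from 0)
    even_field = frame[1::2]   # 2nd, 4th, 6th... lines
    return odd_field, even_field

def weave(odd_field: Frame, even_field: Frame) -> Frame:
    """Re-interleave two fields into one frame (a simple 'weave' deinterlace)."""
    frame: Frame = []
    for i in range(max(len(odd_field), len(even_field))):
        if i < len(odd_field):
            frame.append(odd_field[i])
        if i < len(even_field):
            frame.append(even_field[i])
    return frame

# A tiny 6-line, 4-pixel "frame" whose pixel values encode row and column.
frame = [[row * 10 + col for col in range(4)] for row in range(6)]
odd_field, even_field = split_fields(frame)
print(len(frame), "lines per frame,", len(odd_field), "lines per field")
print(weave(odd_field, even_field) == frame)
```

Weaving two fields cut from the same progressive frame rebuilds it exactly, which is the progressive segmented frame case; with true interlaced video the two fields show different instants, so a plain weave produces combing on anything that moves.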

Correct me if I’m wrong but wasn’t NTSC better for colour than PAL for transmission medium which were more reliable than terrestrial antenna transmission, for example analog cable?

It was the effects of OTA transmission that caused NTSC color artifacting, but over analog cable (especially fiber systems of the 80s and 90s).

It was also good for videotape storage, presuming the signal over the cables in the place where the video was produced had perfect signal integrity from the cameras to the VTR, and there were no glitches on the tape. I’m English, and when digital TV came out in the UK with full component colour (MPEG-2 over DVB), I noticed that the colour of older American TV shows looked better than that of older British TV shows. Presumably they were all recorded to analog tape in PAL or NTSC format and later digitised for DTV, and the US shows with better colour were presumably recorded with NTSC-encoded colour.

Some of my comment got truncated when pasting from an editor. I had to paste it in from a text editor on Android because typing in this box kept glitching.

I meant that NTSC colour artifacts were caused by OTA transmission, meaning transmitted and received with antennas. But over analog cable the picture is much better, especially over the analog cable TV fiber systems used by some cable providers in the 80s and 90s.